Mathematics I Course Note

Made by Mike_Zhang

Notice

PERSONAL COURSE NOTE, FOR REFERENCE ONLY

Personal course note of AMA2111 Mathematics I, The Hong Kong Polytechnic University, Sem1, 2022.

Mainly focus on Complex Number, Linear Algebra, Ordinary Differential Equation, and Partial Differentiation.

1. Complex Number

1.1 Notations

$i$: imaginary unit, where $i^2=-1$

A Complex Number $z=x+iy$

where:

- $x,y$ are real numbers;

- $x$ is the real part of $z$, $x=Re(z)$;

- $y$ is the imaginary part of $z$, $y=Im(z)$.

A complex number is in general NOT a real number, but $i^2=-1$ is a real number;

Complex numbers cannot be ordered: there is no $>,<$ between complex numbers, only $=,\neq$;

A complex number can be treated as a point or a vector in the plane.

1.2 Propositions

For $z=a+ib,w=c+id$:

- $z+w=(a+c)+i(b+d)$

- $z-w=(a-c)+i(b-d)$

- $zw=(ac-bd)+i(ad+bc)$

- $z^0=1,z^n=zz^{n-1}$, for $z\neq 0,n=1,2,3,\dots$

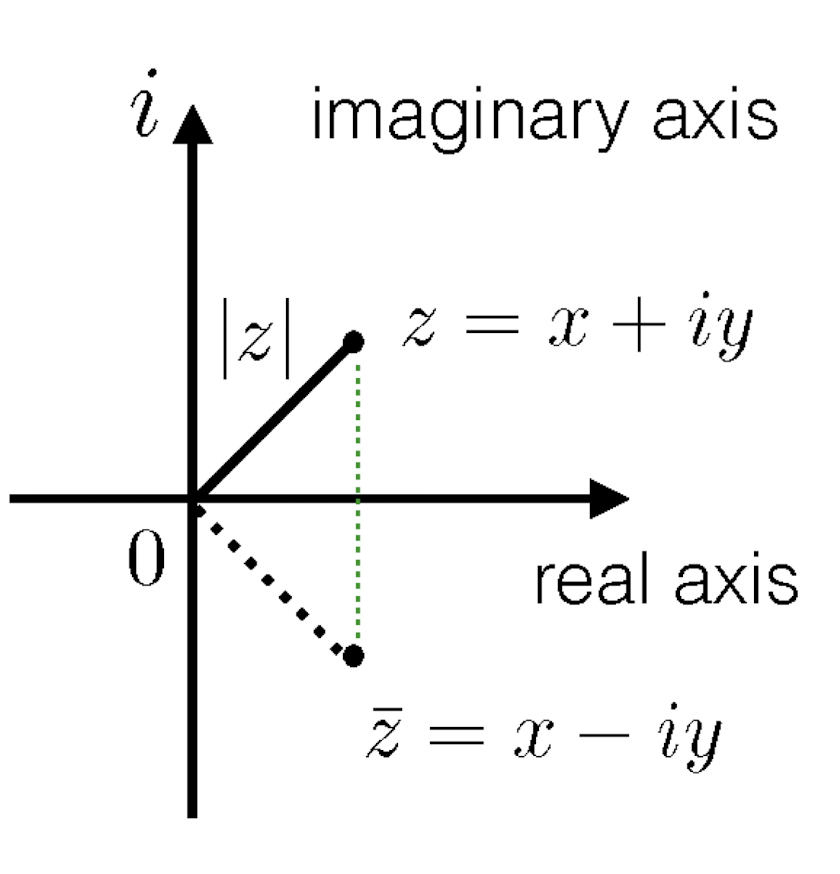

1.3 Conjugate

For $z=x+iy$, the conjugate of $z$ is $\bar{z}=x-iy$: the real parts are the same and the imaginary parts have opposite signs.

Propositions:

- $\overline{\bar{z}}=z$

- $\overline{z \pm w}=\bar{z}\pm \bar{w}$

- $\overline{zw}=\bar{z}\bar{w}$

- $\overline{\left(\frac{z}{w}\right)}=\frac{\bar{z}}{\bar{w}},w\neq 0$

1.4 Modulus

For $z=x+iy$, the modulus of $z$ is $|z|=\sqrt{z\bar{z}}=\sqrt{x^2+y^2}$

Propositions:

- $|z|\ge 0$, and $|z|=0\iff z=0$

- $|\bar{z}|=|z|$

- $z\bar{z}=|z|^2$

- $\frac{1}{z}=\frac{\bar{z}}{|z|^2}$, $z\neq 0$

- $|zw|=|z||w|$

- $|\frac{z}{w}|=\frac{|z|}{|w|}$, $w\neq 0$

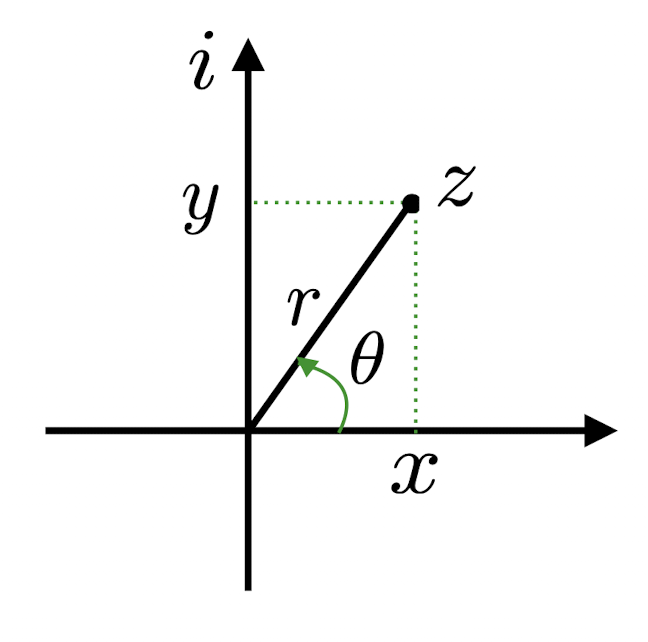
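The conjugate and modulus propositions can be checked numerically. The snippet below is a minimal sketch using Python's built-in `complex` type; the values of `z` and `w` are arbitrary choices for illustration, not taken from the course.

```python
# Arbitrary sample values (assumed for illustration only).
z = 3 + 4j
w = 1 - 2j

print(z.conjugate())                 # conjugate of z
print(abs(z))                        # modulus |z|
print(z * z.conjugate())             # equals |z|^2, stored as a complex number
print(abs(z * w), abs(z) * abs(w))   # |zw| and |z||w| agree up to rounding
```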

1.5 Polar Form

For $z=x+iy$:

Where:

- $r=\sqrt{x^2+y^2}=|z|$, the modulus of $z$

- $x=r\cos\theta$

- $y=r\sin\theta$

Thus the Polar Form of a nonzero complex number $z$ is:

$$z=r(\cos\theta+i\sin\theta)$$

and its Polar Coordinates are $(r,\theta)$,

where $\theta$ is the argument of $z$, $\theta=\arg(z)$.

1.6 Complex Exponential Function

For $z=x+iy$, we have

$$e^z=e^{x+iy}=e^x(\cos y+i\sin y)$$

which is the Complex Exponential Function of $z$.

In particular, taking $x=0$ and $y=\theta$ gives $\cos \theta+i\sin \theta=e^{i\theta}$, therefore the Polar Form can be written as

$$z=re^{i\theta}$$

1.7 De Moivre

Set

$z_1=r_1e^{i\theta_1}=r_1(\cos\theta_1+i\sin\theta_1)$,

$z_2=r_2e^{i\theta_2}=r_2(\cos\theta_2+i\sin\theta_2)\neq 0$,

then

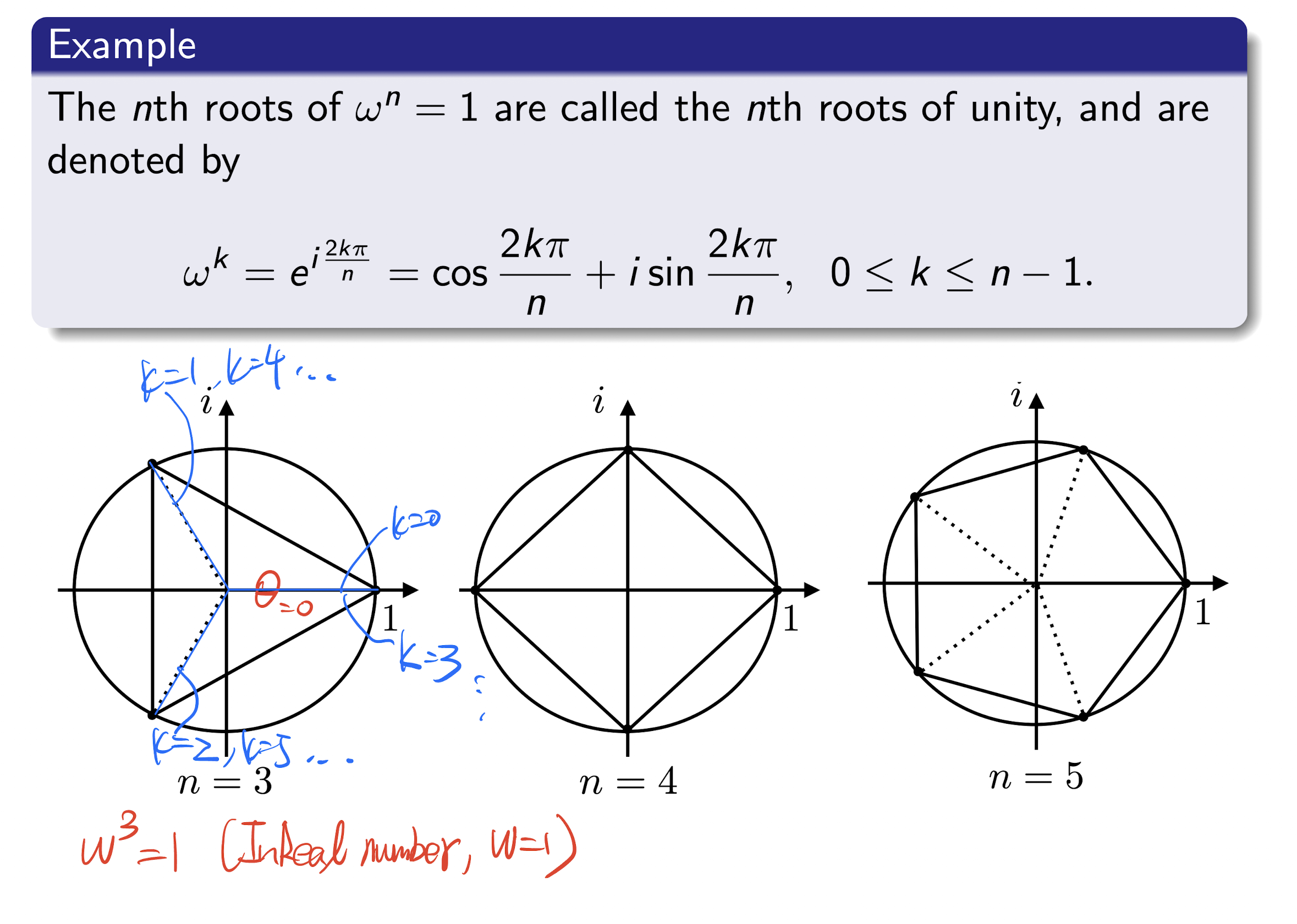

1.8 $n^{th}$ Root

For $n\ge 2$, let $w$ be a non-zero complex number.

A complex number $z$ is an $n^{th}$ root of $w$ if

$$z^n=w$$

or

$$z=w^{\frac{1}{n}}$$

For a complex number $w=re^{i\theta}$, the $n^{th}$ roots of $w$ are

$$z_k=\sqrt[n]{r}\,e^{i\left(\frac{\theta}{n}+\frac{2k\pi}{n}\right)}$$

where $k=0,1,…,n-1$ (a non-zero $w$ has $n$ distinct $n^{th}$ roots).

When $k=n-1$, we reach the last root. Moving on to $k=n$ gives $\frac{2k\pi}{n}=\frac{2n\pi}{n}=2\pi$, which lands on the first root again, so the roots start to repeat. So just stop at $k=n-1$;

Here $\frac{\theta}{n}$ is the initial angle, and $k$ counts how many times $\frac{2\pi}{n}$ is added, from 0 to $n-1$;
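The root formula can be tried out with Python's standard `cmath` module. This is a minimal sketch: the helper name `nth_roots` and the sample input $w=-8$, $n=3$ are assumptions made for illustration.

```python
import cmath
import math


def nth_roots(w: complex, n: int) -> list:
    """Return the n distinct n-th roots of a non-zero complex number w."""
    r, theta = cmath.polar(w)          # w = r * e^{i*theta}
    root_r = r ** (1.0 / n)            # every root has modulus r^(1/n)
    return [
        cmath.rect(root_r, theta / n + 2 * math.pi * k / n)
        for k in range(n)              # k = 0, 1, ..., n-1
    ]


roots = nth_roots(-8, 3)               # cube roots of -8
for z in roots:
    print(z, "->", z ** 3)             # each z**3 is approximately -8
```

Running the loop with `range(n + 1)` instead would reproduce the first root again, which matches the remark about stopping at $k=n-1$.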

nth roots of unity

2. Linear Algebra

2.1 Matrix

Definition:

A table: a rectangular array of numbers with a finite number of rows and columns.

An $m\times n$ matrix: m rows and n columns,

$A_{m \times n}$

- $2\times 3$ matrix:

2.1.1 Special Matrix

Row vector ($1\times n$ matrix):

Column vector ($n\times 1$ matrix):

- Zero Matrix: all entries equal to zero, written $0$

- Square Matrix: $n$ rows and $n$ columns, an $n\times n$ matrix (square matrix of order $n$)

- Upper Triangular Matrix: a square matrix in which all entries below the main diagonal are zeros

- Lower Triangular Matrix: a square matrix in which all entries above the main diagonal are zeros

- Diagonal Matrix: a square matrix with $a_{ij}=0$ whenever $i\neq j$; all entries except those on the diagonal are zeros

- Identity Matrix: a square matrix with $a_{ij}=0$ whenever $i\neq j$ and $a_{ii}=1$; all entries except those on the diagonal are zeros, and all entries on the diagonal are 1

2.1.2 Matrix Operation

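Comparison:

- Same size $m\times n$ Matrix $A, B$: $A=B \iff a_{ij}=b_{ij}$ for all $i,j$

Addition & Subtraction:

- Same size $m\times n$ Matrix $A, B$: $A\pm B=[a_{ij}\pm b_{ij}]$

Scalar Multiplication:

- $m\times n$ Matrix $A$, Scalar $t$: $tA=[ta_{ij}]$

Matrix Multiplication:

- $m\times n$ Matrix $A$, $n\times k$ Matrix $B$: $AB=C$ is an $m\times k$ matrix with $c_{ij}=\sum_{l=1}^{n}a_{il}b_{lj}$

- In general, Row $\times$ Column, and the number of columns of $A$ must equal the number of rows of $B$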
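The row-times-column rule can be written out directly. The following is a minimal sketch with matrices stored as lists of rows; the function name `matmul` and the sample matrices are assumptions for illustration.

```python
def matmul(A, B):
    """Multiply an m x n matrix A by an n x k matrix B (lists of rows)."""
    m, n, k = len(A), len(B), len(B[0])
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must equal rows of B")
    # Entry (i, j) is row i of A times column j of B.
    return [
        [sum(A[i][l] * B[l][j] for l in range(n)) for j in range(k)]
        for i in range(m)
    ]


A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
B = [[1, 0],
     [0, 1],
     [2, 2]]             # 3 x 2
C = matmul(A, B)         # 2 x 2
print(C)
```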

Rules of Matrix Algebra:

- ,

- where $I$ is the identity matrix

- ,

- for all positive integer $k$

- ,

2.1.3 Matrix Transpose

For $m\times n$ Matrix $A$, the $n\times m$ Matrix $A^T$ is the transpose of $A$,

where $(A^T)_{ij}=a_{ji}$, i.e. the rows of $A$ become the columns of $A^T$.

[Example]

Rules:

- A square matrix $A$ is symmetric if $A^T=A$

2.1.4 Matrix Inverse

A square matrix $A$ is nonsingular (invertible) if there exists a square matrix $A^{-1}$ of the same order such that

$$AA^{-1}=A^{-1}A=I$$

where $A^{-1}$ is the inverse of $A$.

Thus,

is nonsingular, and its Inverse is

Rules:

- $A^{-1}$ is unique: a nonsingular matrix has exactly one inverse;

- $(A^{-1})^{-1}=A$

- $(tA)^{-1}=\frac{1}{t}A^{-1}$, for a scalar $t\neq 0$

- $(AB)^{-1}=B^{-1}A^{-1}$, where $A,B$ are nonsingular and of the same order

- $(A^k)^{-1}=(A^{-1})^k$, where $A$ is nonsingular

2.2 Determinant

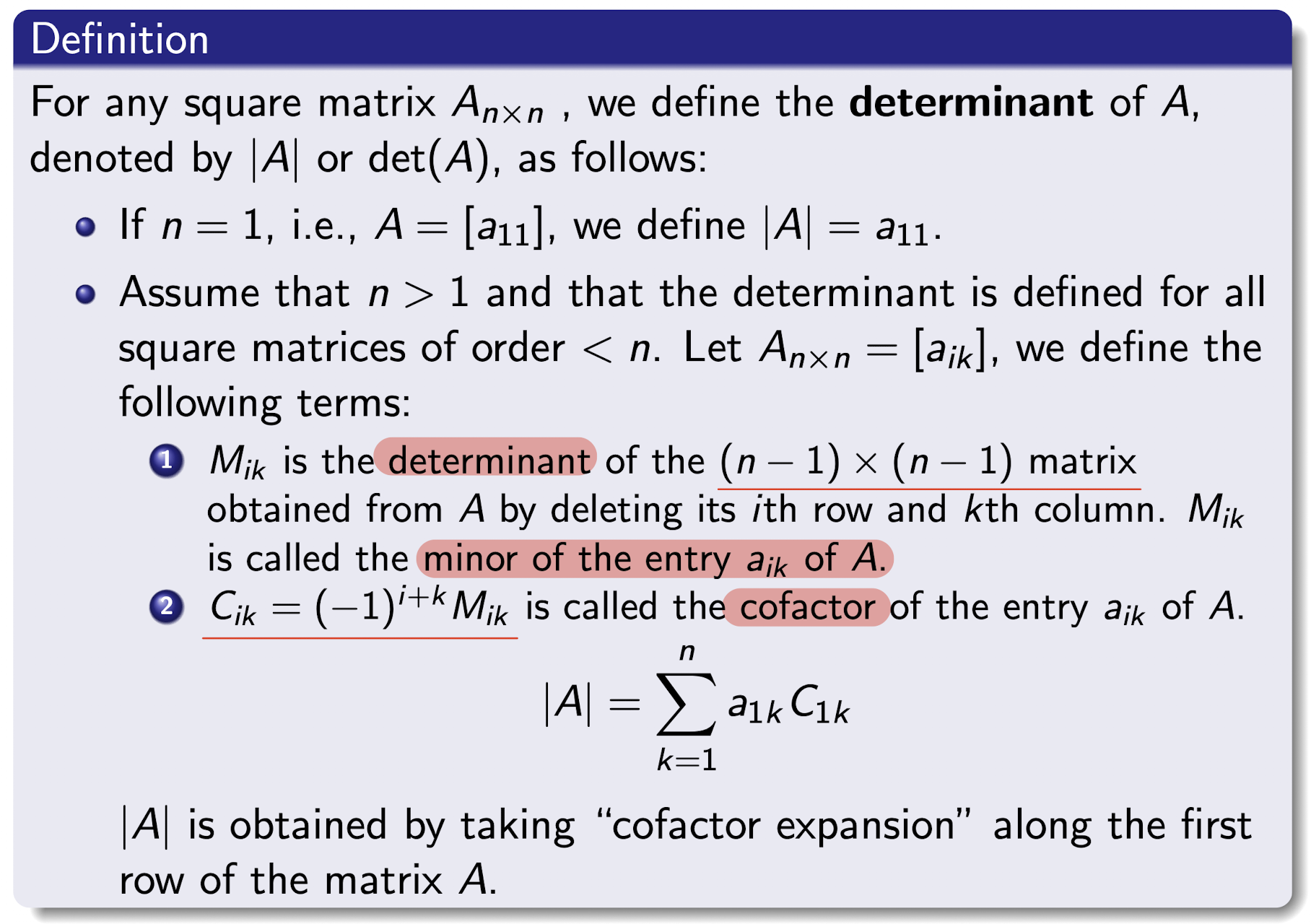

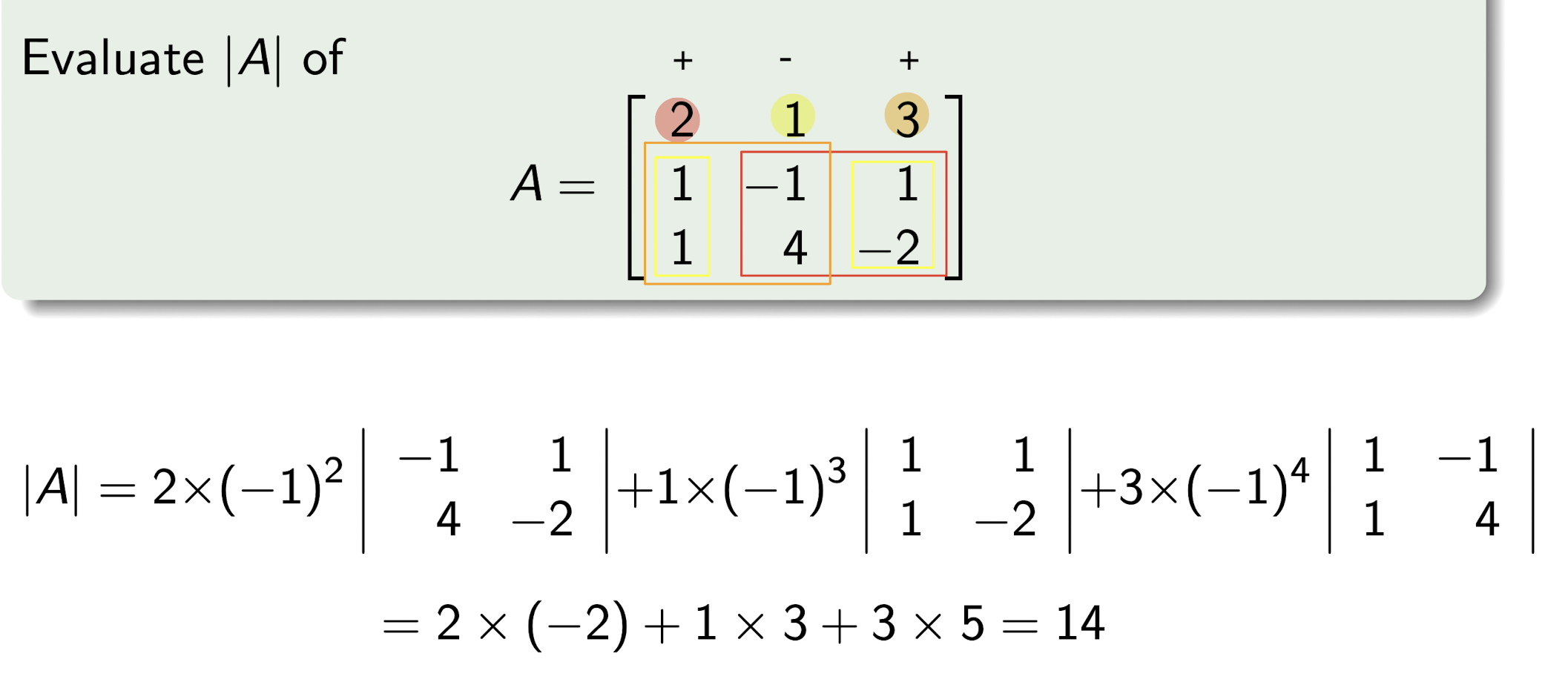

2.2.1 Cofactor

- Can take cofactor expansion along any row or any column

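- The sign pattern of the cofactor of entry $a_{ij}$ is $(-1)^{i+j}$, i.e. $+,-,+,\dots$ alternating from the top-left entry

$2\times 2$ Matrix $A=\begin{bmatrix}a & b\\ c & d\end{bmatrix}$: $|A|=ad-bc$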

[Example]

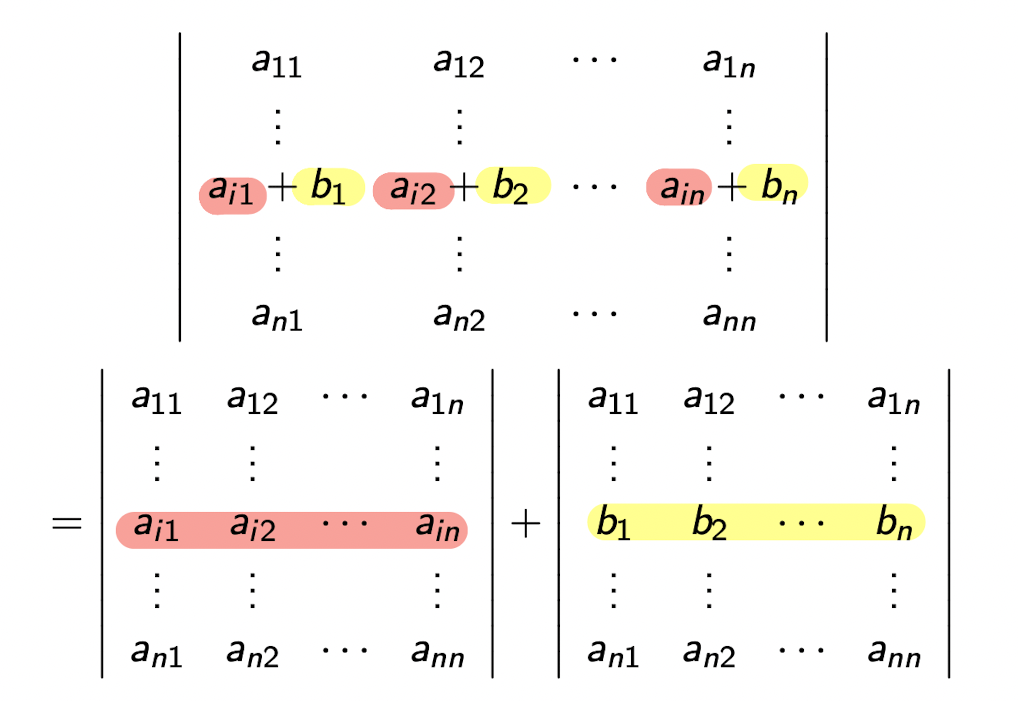

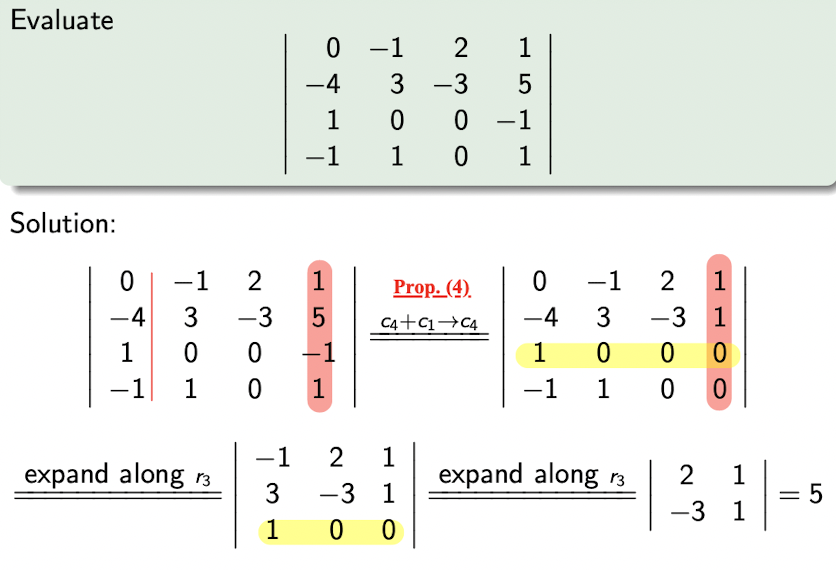
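Cofactor expansion translates naturally into a recursive function. The sketch below always expands along the first row; the function name `det` and the sample matrix are assumptions for illustration, and this approach is only practical for small matrices.

```python
def det(A):
    """Determinant of a square matrix by cofactor expansion along row 1."""
    n = len(A)
    if n == 1:
        return A[0][0]
    total = 0
    for j in range(n):
        # Minor: delete the first row and column j.
        minor = [row[:j] + row[j + 1:] for row in A[1:]]
        sign = (-1) ** j               # (-1)^(i+j) with 0-based i = 0
        total += sign * A[0][j] * det(minor)
    return total


A = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]
print(det(A))
```

The zero entry in the first row contributes nothing, which is why expanding along a row or column with many zeros saves work.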

2.2.2 Proposition

- Swap two rows or columns: $|A'|=-|A|$

- Multiply one row or column by a scalar $t$: $|A'|=t|A|$

[Example]

- Add a scalar multiple of one row (column) to another row (column): $|A'|=|A|$

- If $A$ contains two identical rows (columns), $|A|=0$

- For a Diagonal Matrix, Lower Triangular Matrix, or Upper Triangular Matrix $A$, $|A|=a_{11}a_{22}\cdots a_{nn}$, the product of all diagonal entries.

- For square matrices $A, B$ of the same order, $|AB|=|A||B|$

[Example]

- Use the propositions to create as many zeros as possible;

- Expand along the row or column with the largest number of zeros.

2.3 System of Linear Equations

Rewrite the system into matrix form as

$$A\bold{x}=\bold{b}$$

where

- $A$ is the coefficient matrix,

- $\bold{x}$ is the vector of variables (unknowns),

- $\bold{b}$ is the vector of constants.

2.3.1 Row Operation

Augmented matrix:

For $A\bold{x}=\bold{b}$ with an $m\times n$ matrix $A$, the augmented matrix of the system is the $m \times (n + 1)$ matrix $[A|\bold{b}]$ obtained by joining the column vector $\bold{b}$ to the right of $A$.

Operation on ROW ONLY

- Swap 2 Rows of an augmented matrix: $r_i \leftrightarrow r_j$

- Multiply a Row by a non-zero scalar: $t\times r_i \rightarrow r_i$

- Add a scalar Multiple of one Row to another Row: $tr_j+r_i\rightarrow r_i$

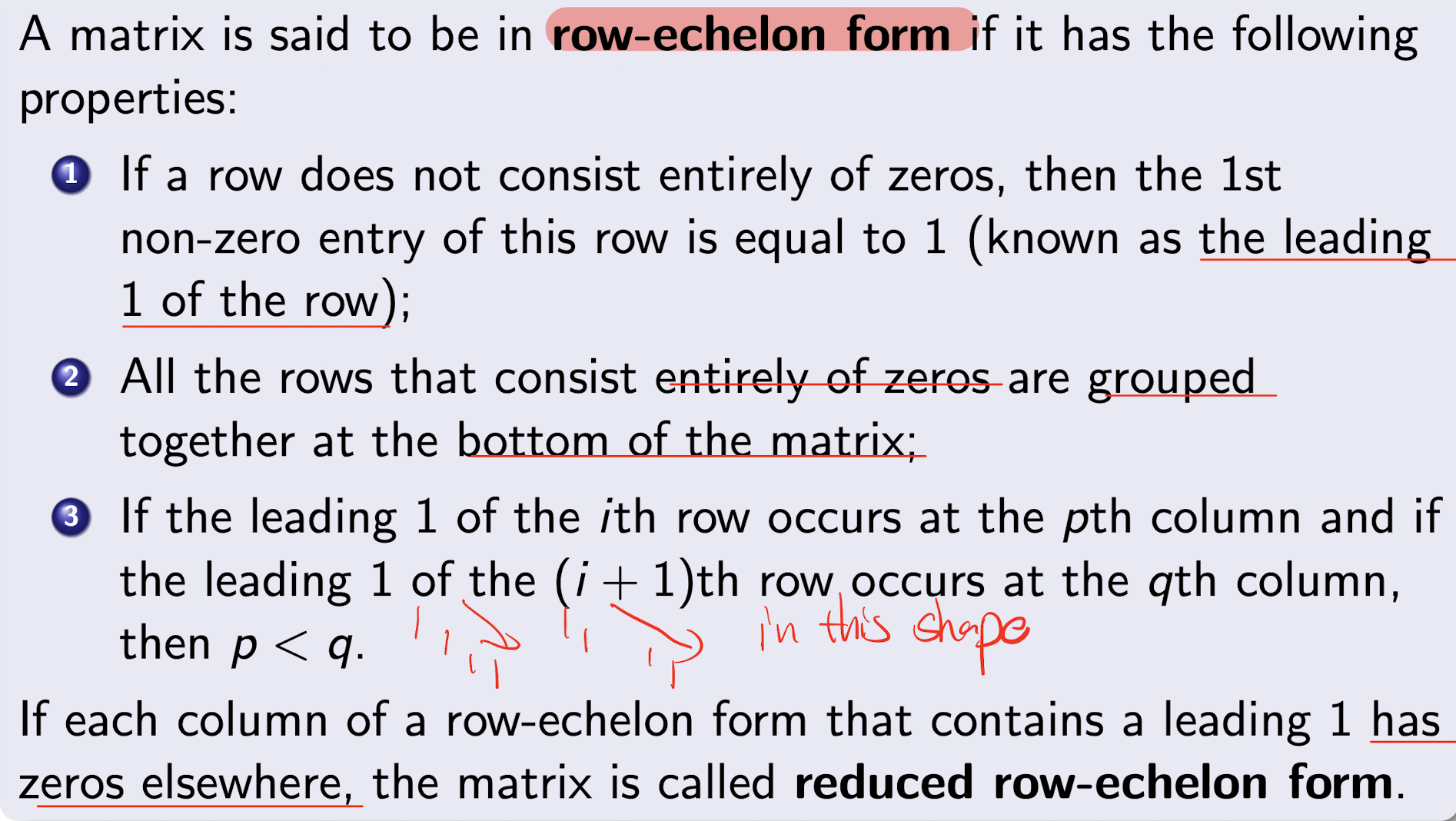

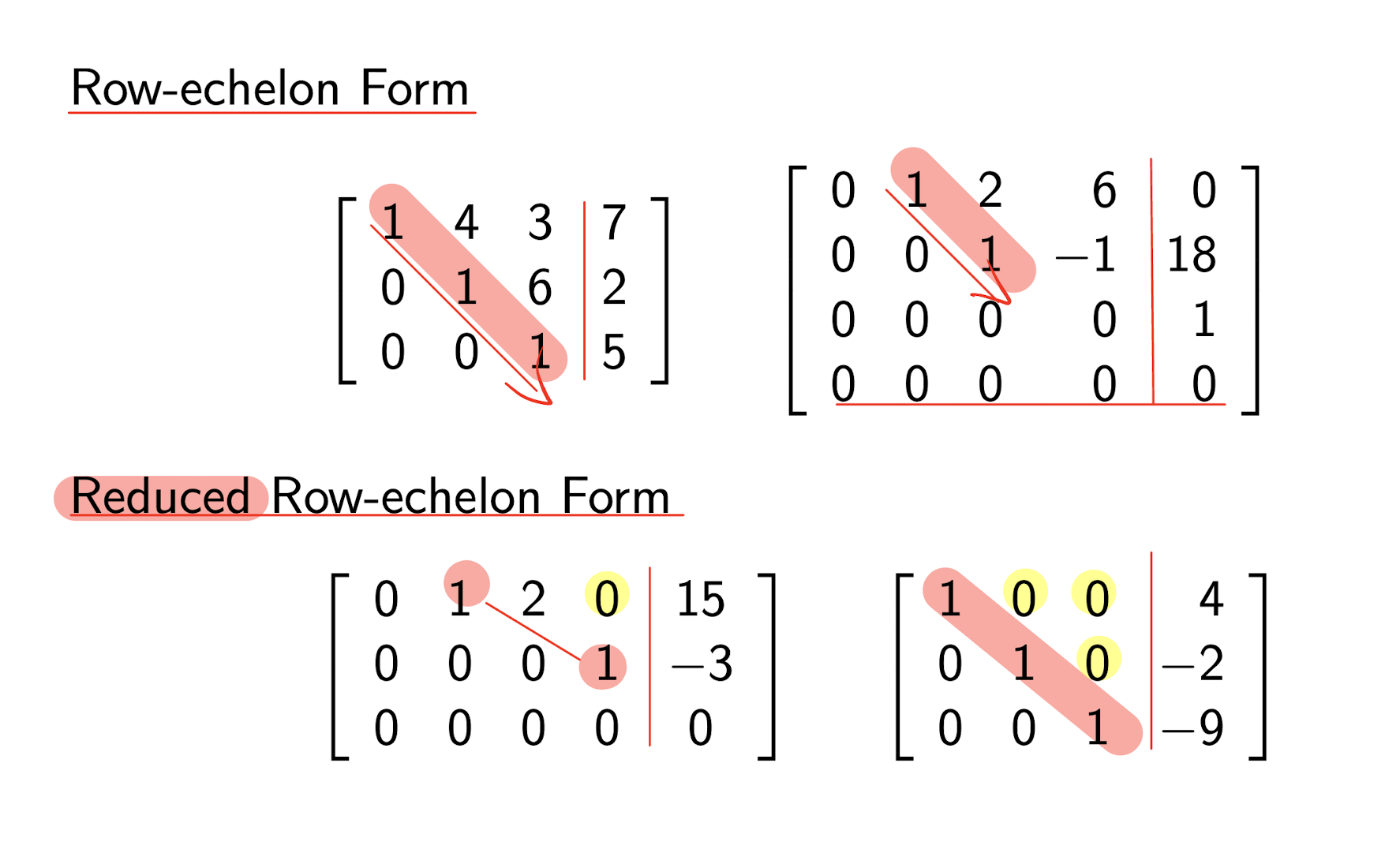

2.3.2 Row-echelon Form

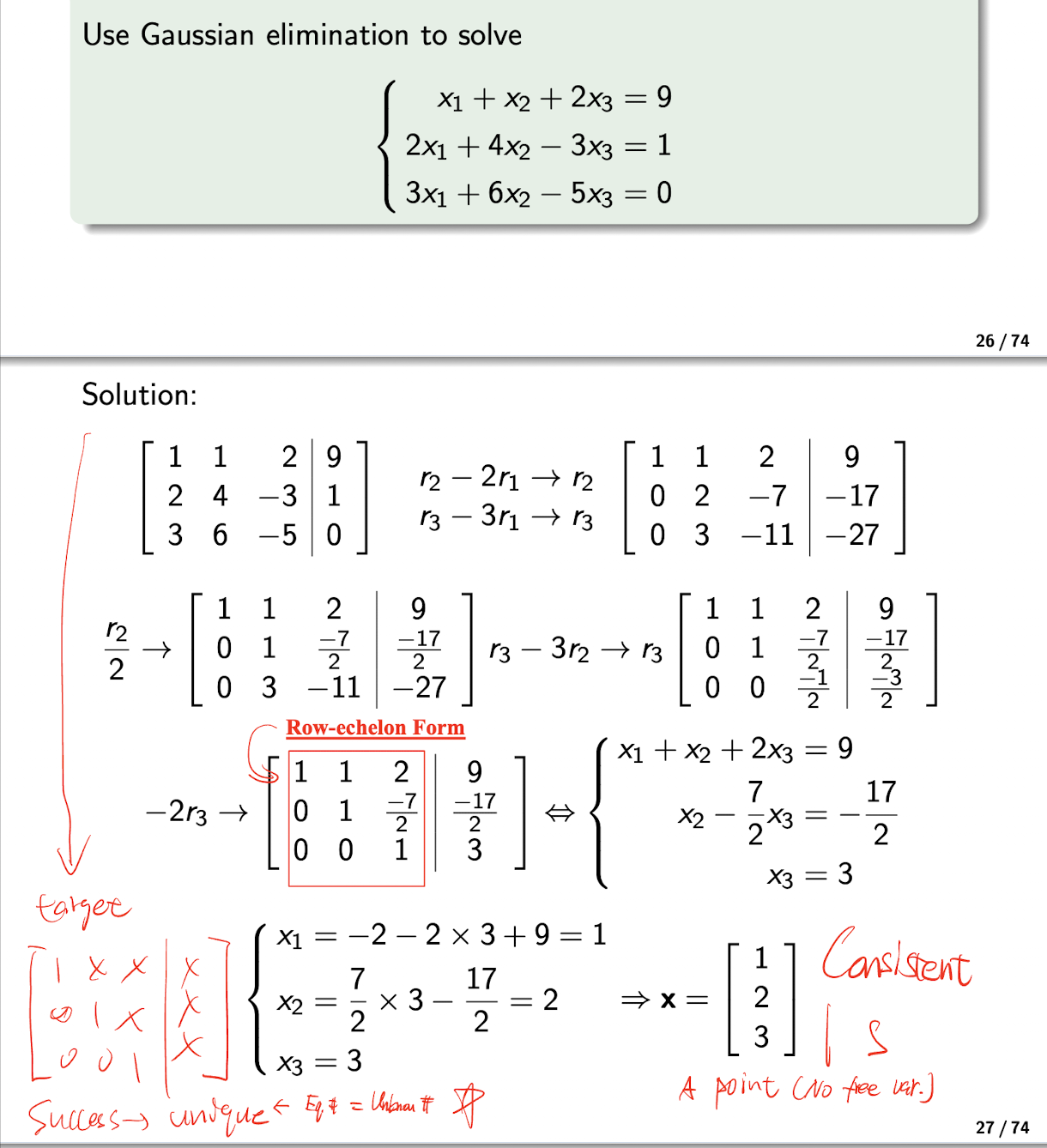

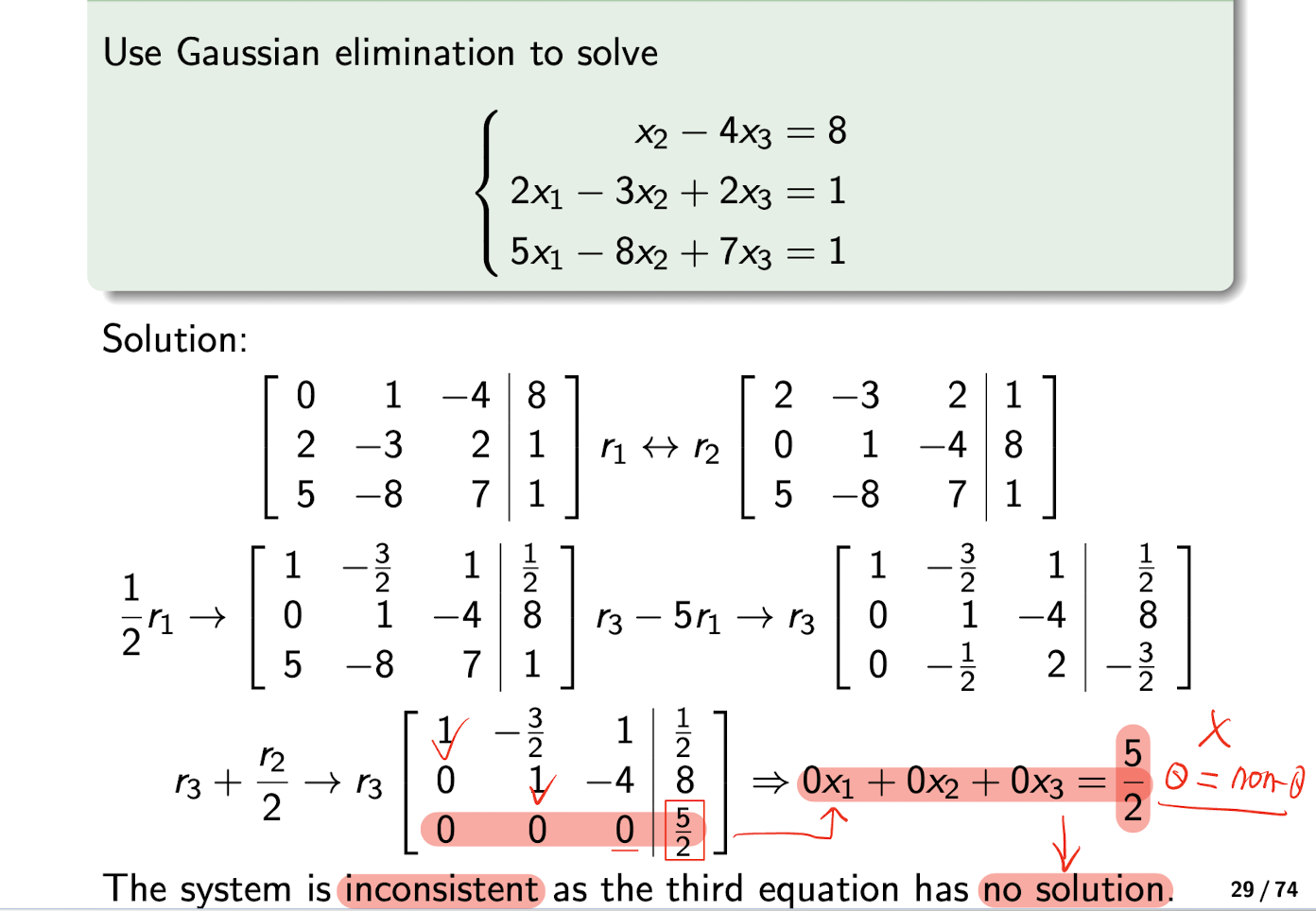

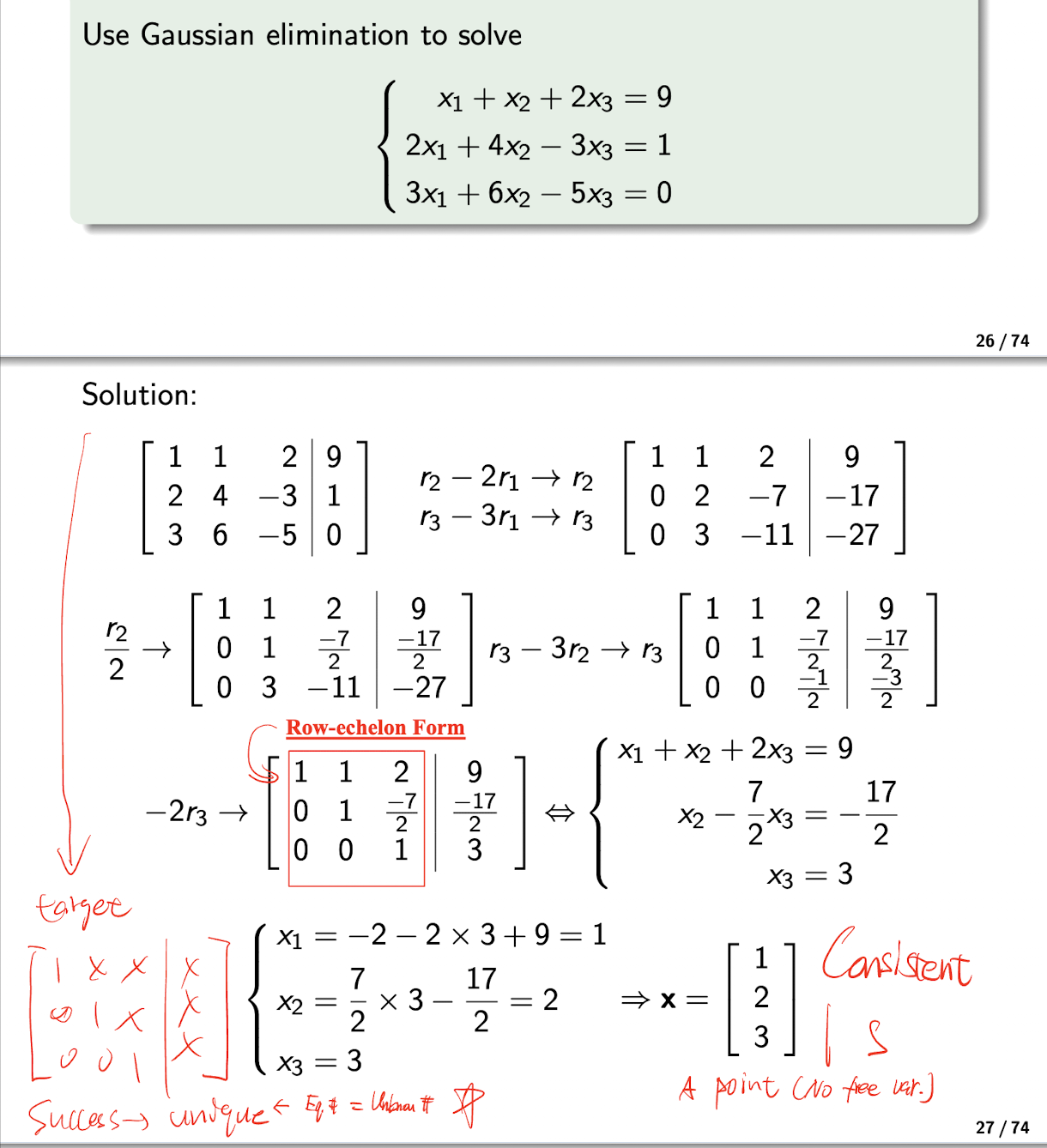

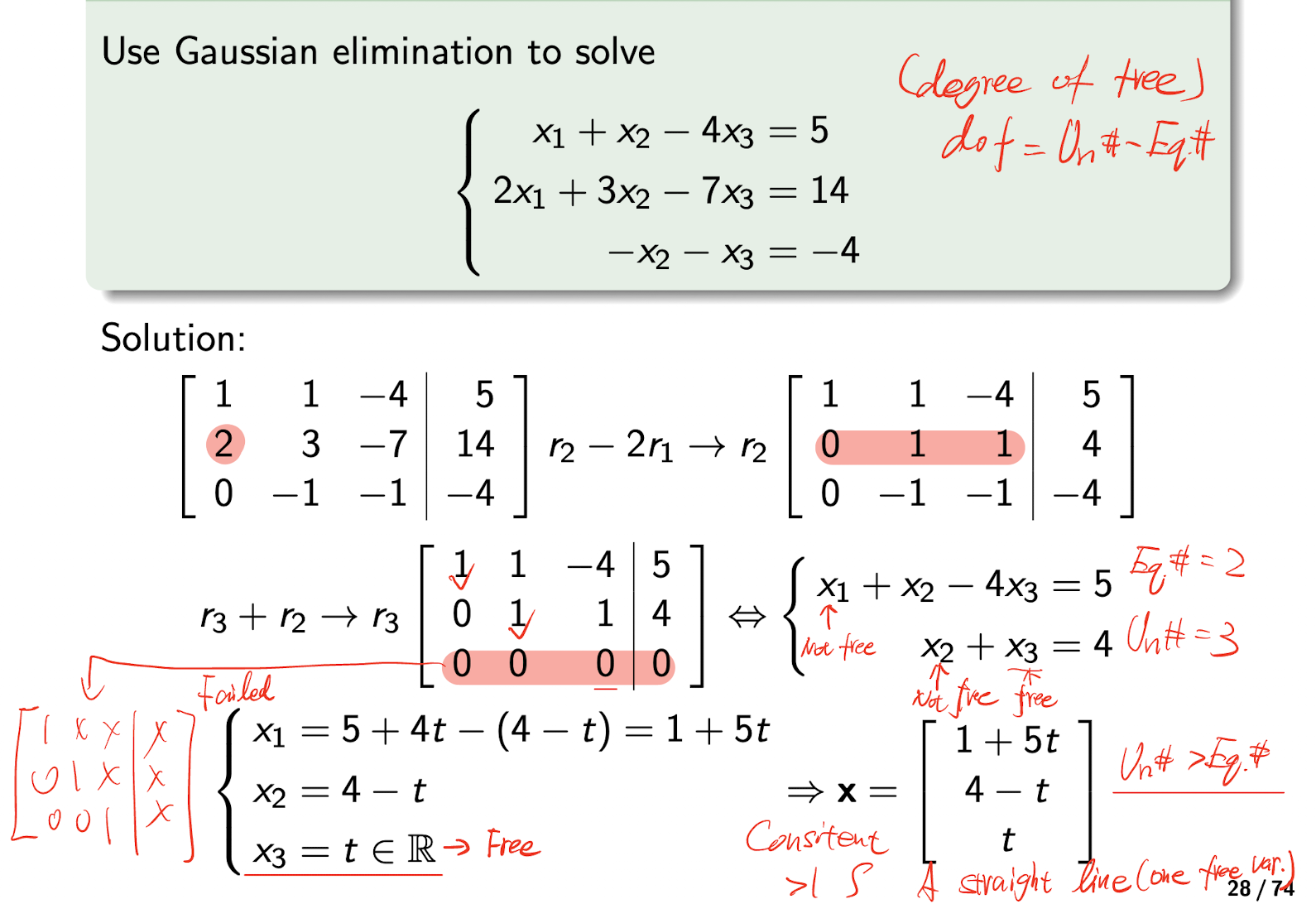

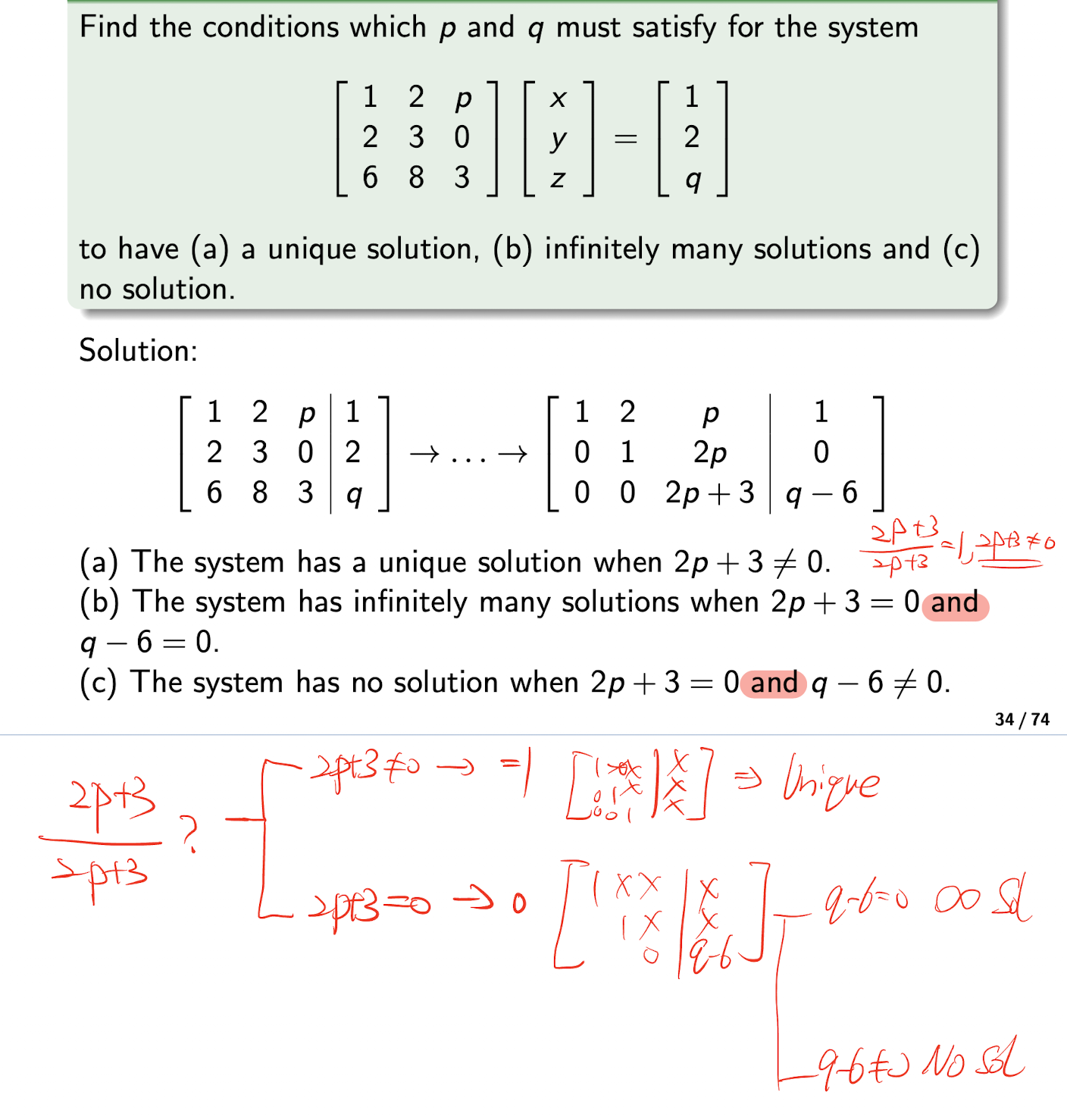

2.3.3 Gaussian Elimination

- Use row operations to reduce the augmented matrix $[A|\bold{b}]$ to the Row-echelon Form $[R|\bold{c}]$;

- The System of Linear Equations is then equivalent to $R\bold{x}=\bold{c}$, which can be solved by backward substitution;

[Example]

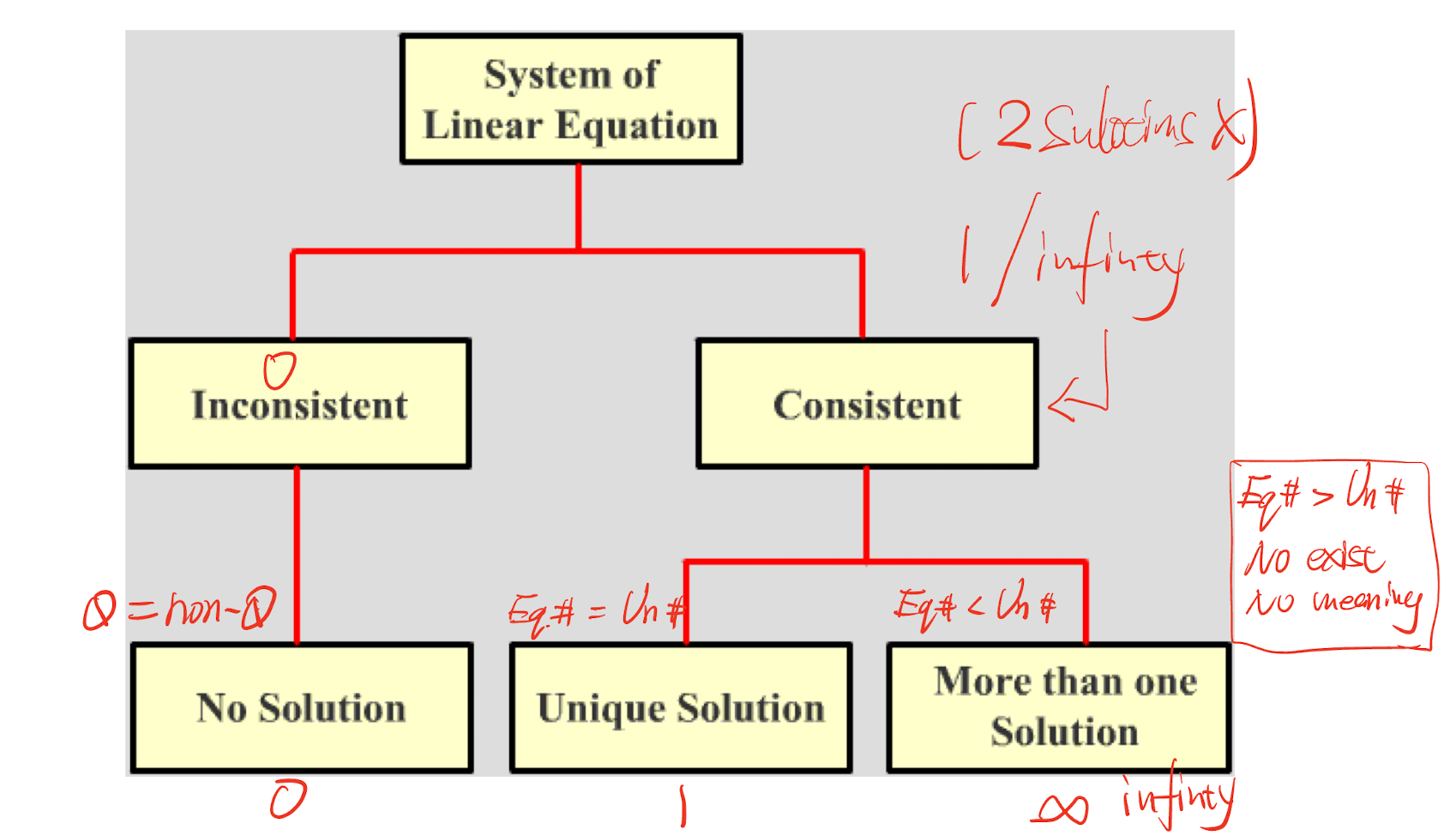
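The two steps above (reduce to row-echelon form, then substitute backwards) can be sketched in code. This is a minimal sketch under stated assumptions: it only handles square systems with a unique solution, uses exact `Fraction` arithmetic, and the function name `gaussian_solve` and the sample system are made up for illustration.

```python
from fractions import Fraction


def gaussian_solve(A, b):
    """Solve A x = b for a square nonsingular A; returns a list of Fractions."""
    n = len(A)
    # Build the augmented matrix [A | b] with exact arithmetic.
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]

    for col in range(n):
        # Find a row with a non-zero pivot and swap it up (r_i <-> r_j).
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("no unique solution")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate entries below the pivot (t*r_j + r_i -> r_i).
        for r in range(col + 1, n):
            t = -M[r][col] / M[col][col]
            M[r] = [t * p + q for p, q in zip(M[col], M[r])]

    # Backward substitution on the row-echelon form [R | c].
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        known = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - known) / M[i][i]
    return x


A = [[1, 1, 1],
     [2, -1, 1],
     [1, 2, -1]]
b = [6, 3, 2]
solution = gaussian_solve(A, b)
print(solution)
```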

2.3.4 Solution Set

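A system of linear equations is either

- Inconsistent: NO solution, or

- Consistent: 1 solution, or infinitely many solutions.

In terms of the row-echelon form of the augmented matrix:

- No Solution situation:

  - There is a row of the form $[0\ \cdots\ 0\ |\ c]$ with $c\neq 0$

  - [Example]

- 1 Unique Solution situation:

  - Consistent, and every variable is a leading variable, so there is no free variable

  - [Example]

- Infinitely many Solutions situation:

  - Consistent, with at least one free variable

  - [Example]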

[Example]

2.3.5 System of Homogeneous Equations

A System of Linear Equations in the form of:

$$A\bold{x}=\bold{0}$$

It is always consistent, and its solution set is either:

- ONLY the trivial solution: the $n \times 1$ zero vector $\bold{0}$; or

- Infinitely many solutions: the trivial solution together with non-trivial solutions

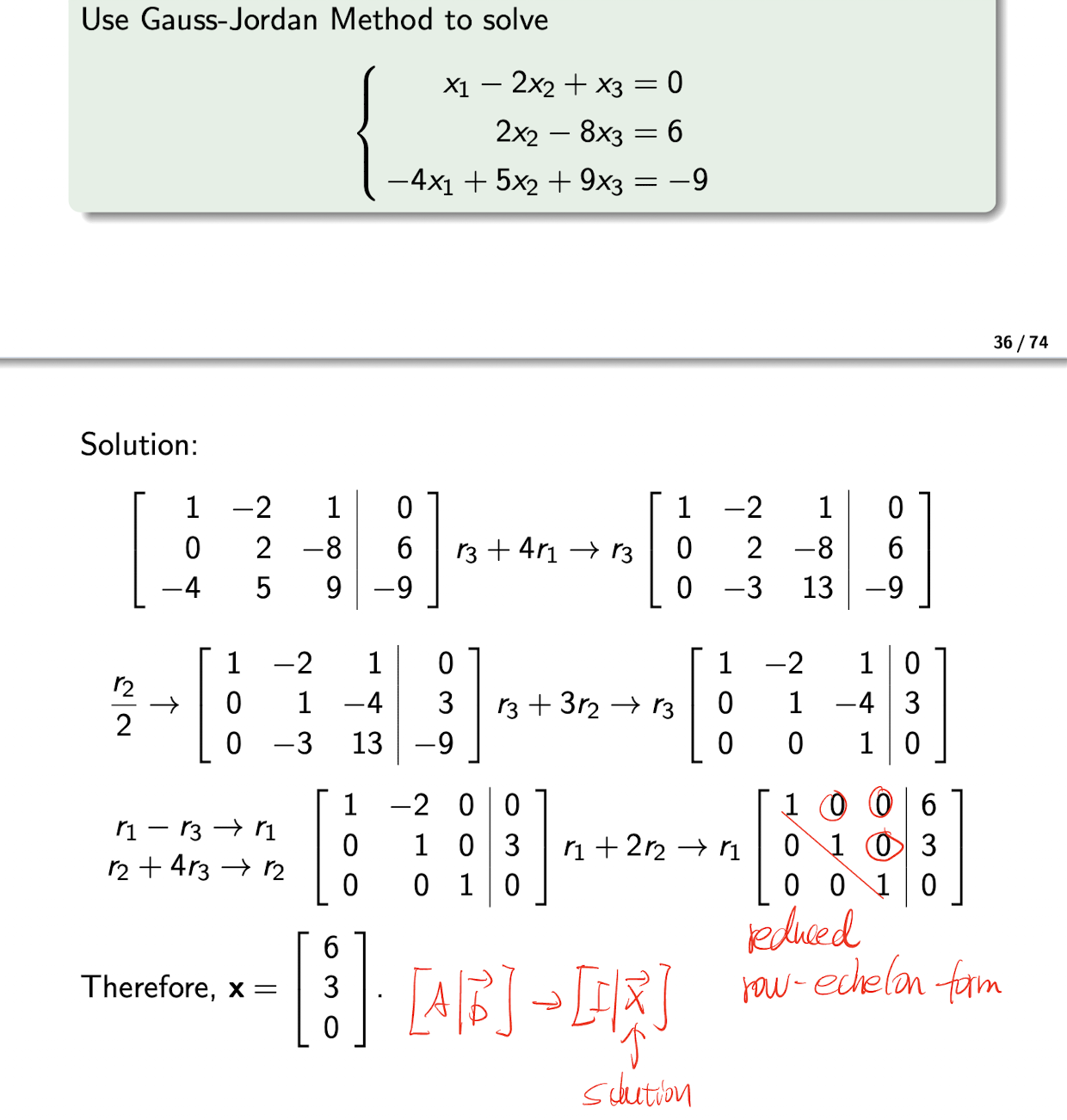

2.3.6 Gauss-Jordan Method

- Use row operations to reduce the augmented matrix $[A|\bold{b}]$ to the Reduced Row-echelon Form $[R|\bold{c}]$;

- The System of Linear Equations is then equivalent to $R\bold{x}=\bold{c}$, and the solution can be read off simply by inspection;

[Example]

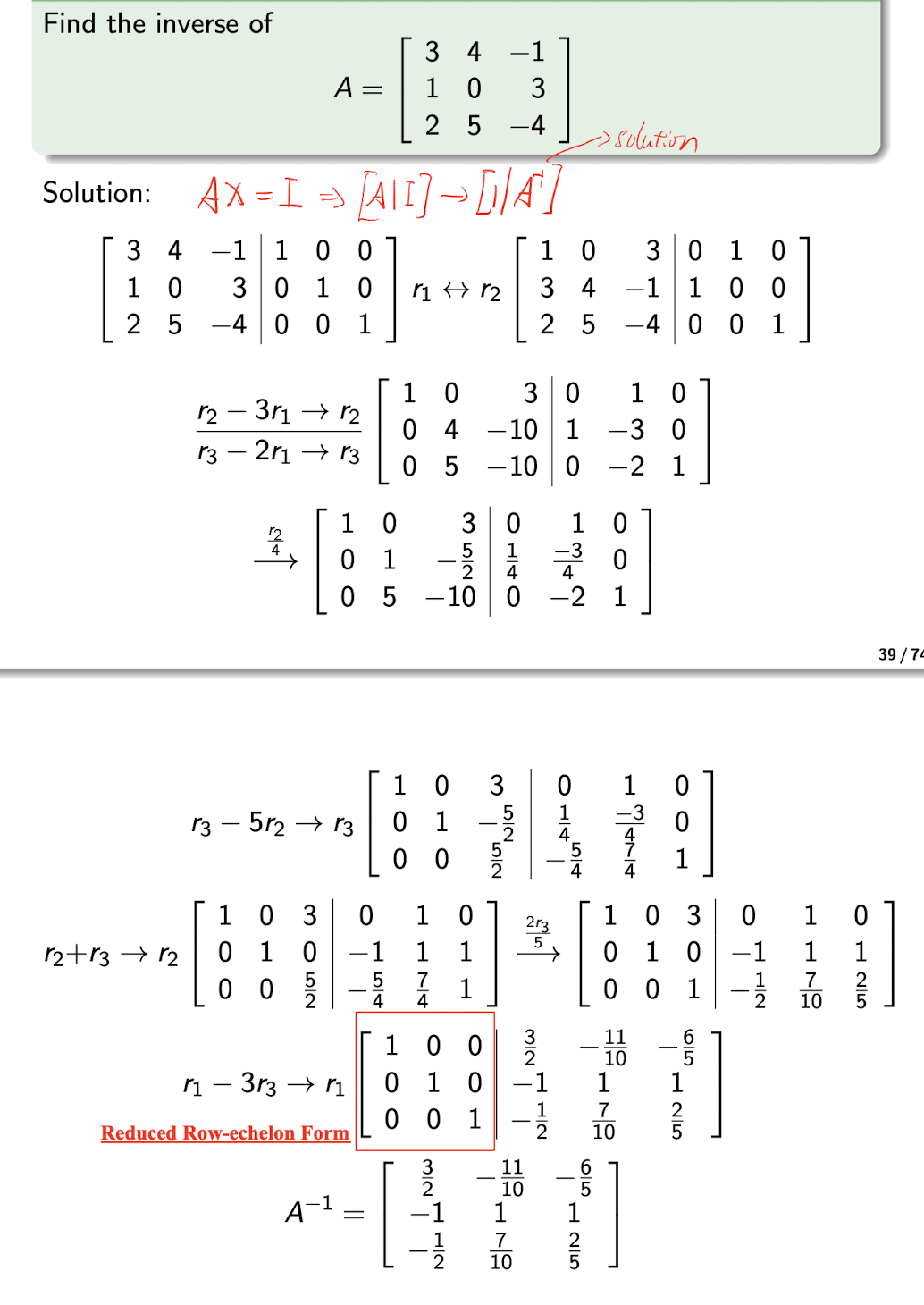

2.3.6.1 Gauss-Jordan Method for Finding Inverse

Solve $AX=I$ to get $X=A^{-1}$ as the inverse of $A$:

reduce the augmented matrix $[A|I]$ to the Reduced Row-echelon Form; if the left block becomes $I$, the result is $[I|A^{-1}]$.

[Example]
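The reduction of $[A|I]$ to $[I|A^{-1}]$ can be sketched as follows. This is an illustrative sketch, not the course's worked example: the function name `inverse` and the sample matrix are assumptions, and exact `Fraction` arithmetic is used to avoid rounding.

```python
from fractions import Fraction


def inverse(A):
    """Invert a square matrix by reducing [A | I] to [I | A^-1]."""
    n = len(A)
    # Augment each row of A with the matching row of the identity matrix.
    M = [
        [Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(A)
    ]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Scale the pivot row so the pivot becomes 1.
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        # Clear every other entry in this column.
        for r in range(n):
            if r != col and M[r][col] != 0:
                t = M[r][col]
                M[r] = [v - t * w for v, w in zip(M[r], M[col])]
    return [row[n:] for row in M]


A = [[2, 1],
     [5, 3]]
A_inv = inverse(A)
print(A_inv)
```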

2.4 Vector Space

For $\bold{v}_i \in R^n, t_i\in R$, for $i=1,2,…,k$

- A linear combination of $\bold{v}_1,\bold{v}_2,…,\bold{v}_k$ is $t_1\bold{v}_1+t_2\bold{v}_2+\dots+t_k\bold{v}_k$

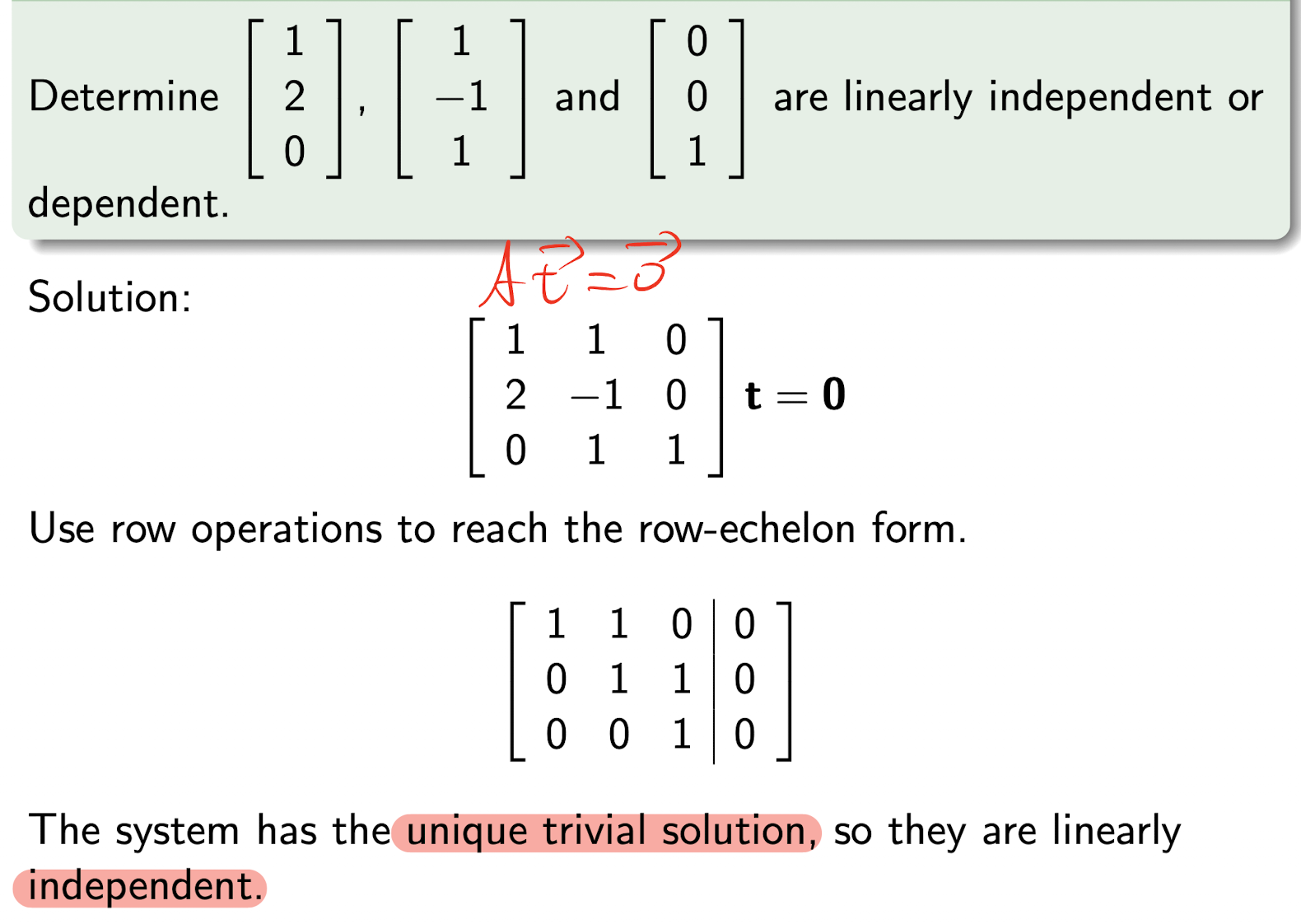

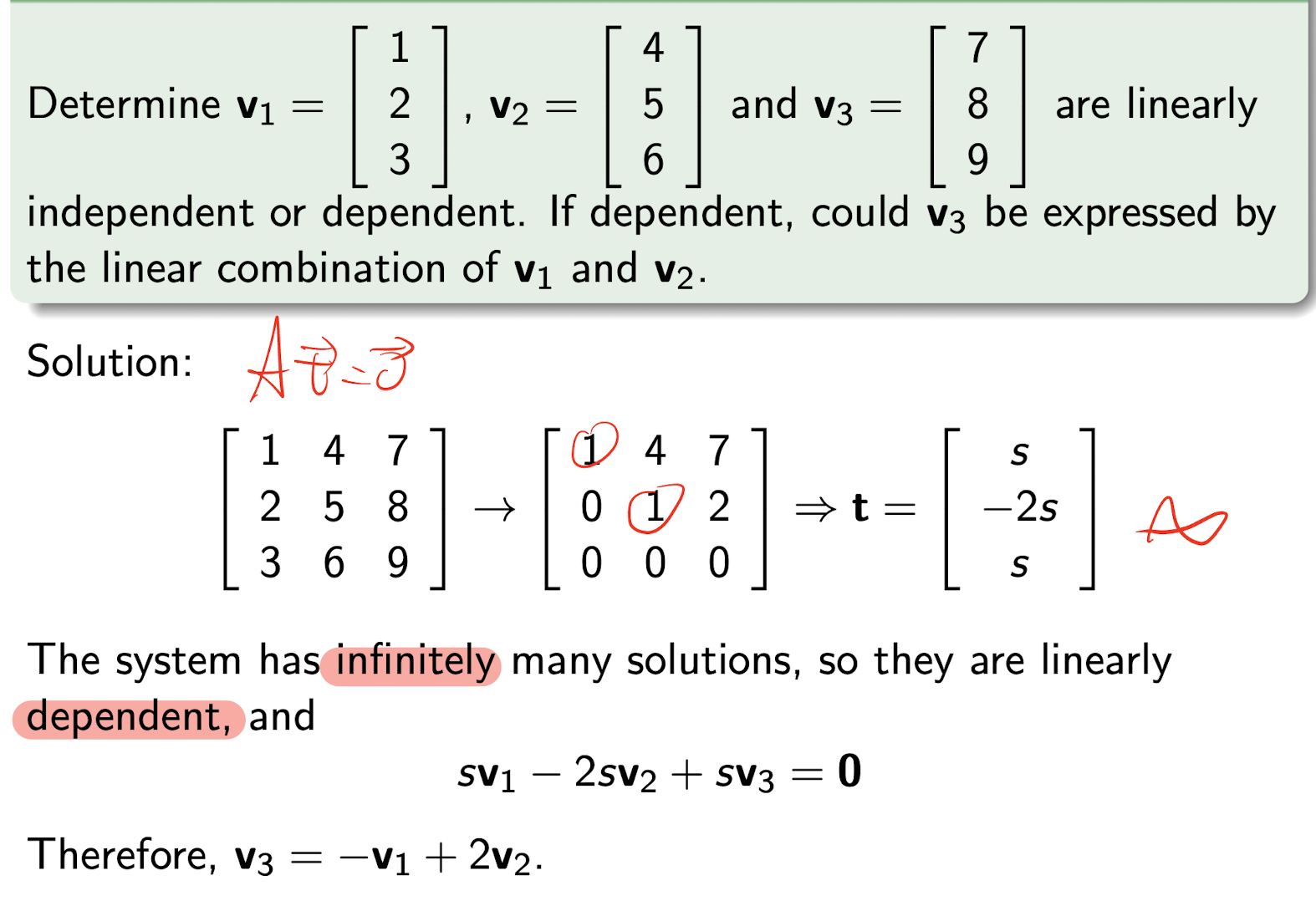

This is similar to a system of linear equations, where $V$ (the matrix whose columns are the vectors $\bold{v}_i$) is the coefficient matrix and $\bold{t}$ is the vector of variables.

- The vectors $\bold{v}_i$ are linearly dependent if $V\bold{t}=\bold{0}$ has infinitely many solutions;

- The vectors $\bold{v}_i$ are linearly independent if $V\bold{t}=\bold{0}$ has ONLY the trivial solution $\bold{t}=\bold{0}$;

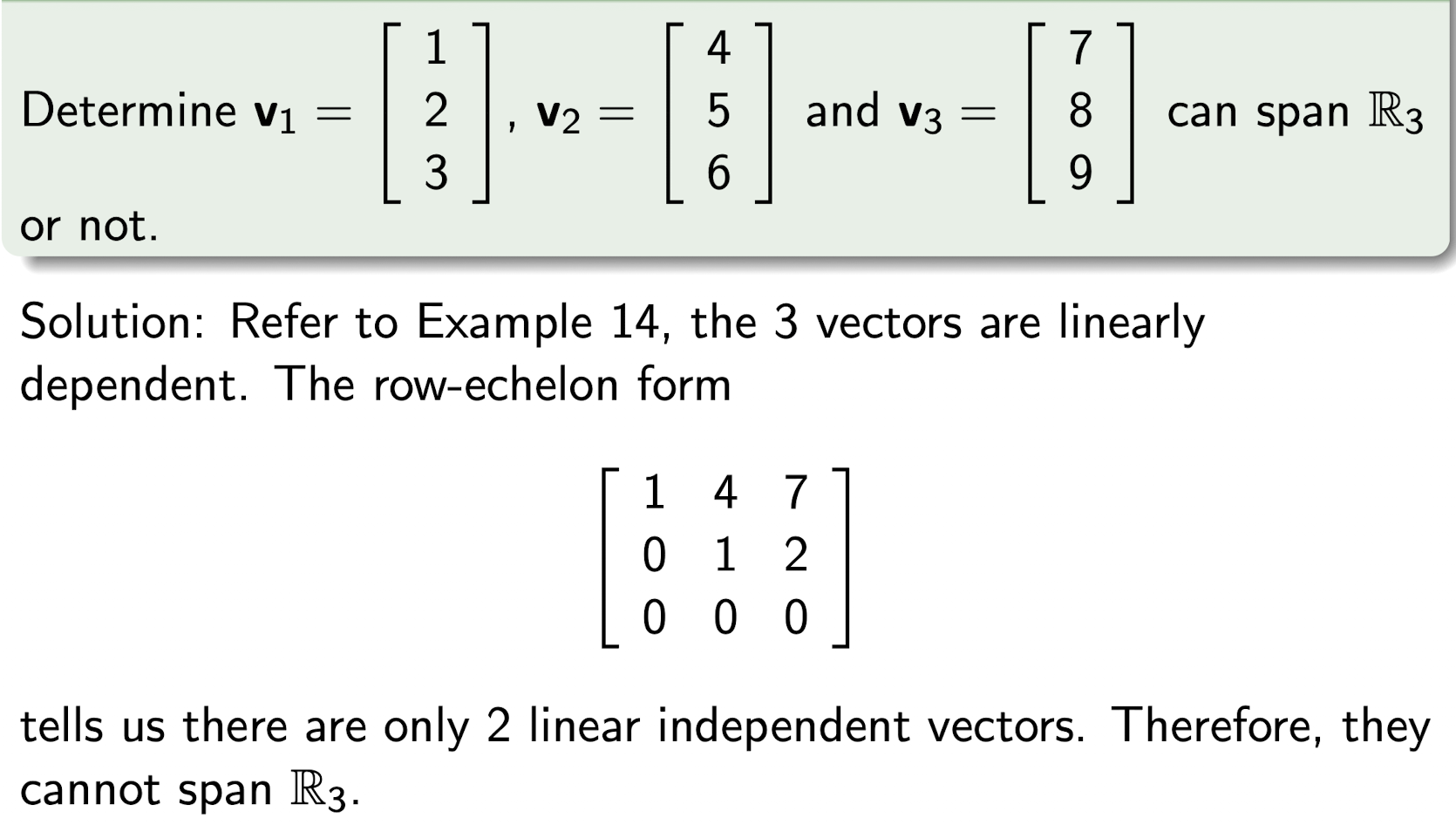

- For $\bold{v}_1,\bold{v}_2,…,\bold{v}_m\in R^n$: $\text{span}\{\bold{v}_1,\bold{v}_2,…,\bold{v}_m\}=R^n \iff$ there are $n$ linearly independent vectors among $\bold{v}_1,\bold{v}_2,…,\bold{v}_m$;

[Example]

[Example]

[Example]

2.4.1 Rank

The rank of a matrix $A$ is the dimension of the vector space spanned by its columns. This corresponds to the maximal number of linearly independent columns of $A$.

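- The number of linearly independent vectors = the number of non-zero rows (equations) in the row-echelon form;

- The rank is defined for every matrix; a system $A\bold{x}=\bold{b}$ has no solution exactly when $Rank(A)<Rank([A|\bold{b}])$.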

SUM.1 Summary of Above Matrix Points

For square Matrix $A_{n\times n}$, following are equivalent:

- $A$ is nonsingular;

- Homogeneous: $A\bold{x}=\bold{0}$ has ONLY the trivial solution ($\bold{x}=\bold{0}$);

- Non-homogeneous: $A\bold{x}=\bold{b}$ has a unique solution for every $\bold{b}$ (#Eq = #Unk);

- $A$ can be reduced to $I$, so $AX=I$ can be solved for $X=A^{-1}$;

- $|A|\neq 0$;

- The column vectors of $A$ are linearly independent;

- $Rank(A)=n$: #Eq=n

If $A$ is singular, then the negation of every statement above holds.

2.5 Eigenvalue and Eigenvector

For a square matrix $A_{n\times n}$ and a non-zero vector $\bold{v}$, if

$$A\bold{v}=\lambda\bold{v}$$

then $\bold{v}\in R^n$ is an eigenvector of $A$ with eigenvalue $\lambda \in R$.

If $\bold{v}$ is an eigenvector of A corresponding to the eigenvalue $\lambda$, then

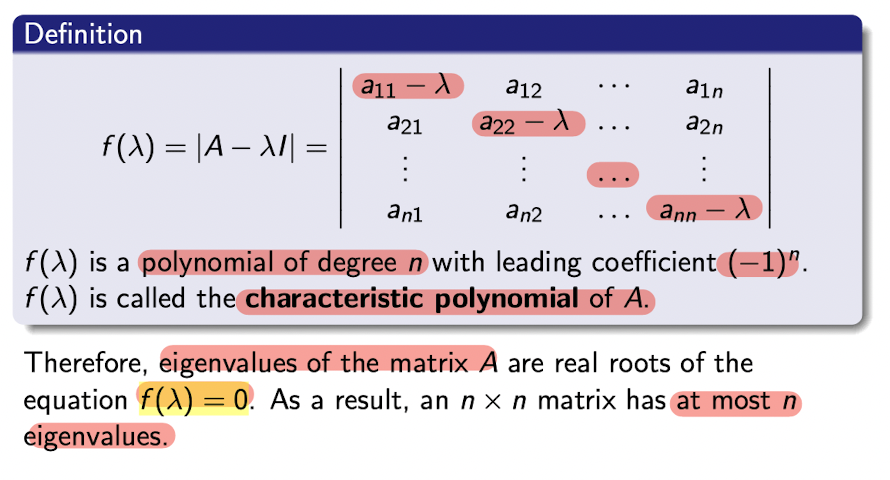

2.5.1 Eigenvalue

$A\bold{v}=\lambda\bold{v}\implies(A-\lambda I)\bold{v}=\bold{0}$

- Explanation1: this is a homogeneous system that must have a non-trivial solution (as $\bold{v}\neq\bold{0}$), so it has infinitely many solutions; thus $(A-\lambda I)$ is singular, and $|A-\lambda I|=0$

- Explanation2: $(A-\lambda I)$ is a transformation matrix that maps the non-zero vector $\bold{v}$ to $\bold{0}$, which means its determinant is zero.

Note:

Number of eigenvalues = n, the order of $A_{n\times n}$, counting repeated values;

Even if some values are the same, write them down separately, e.g. $\lambda_1=1, \lambda_2 = 1, \lambda_3 = 1$; do not write $\lambda=1$ only.

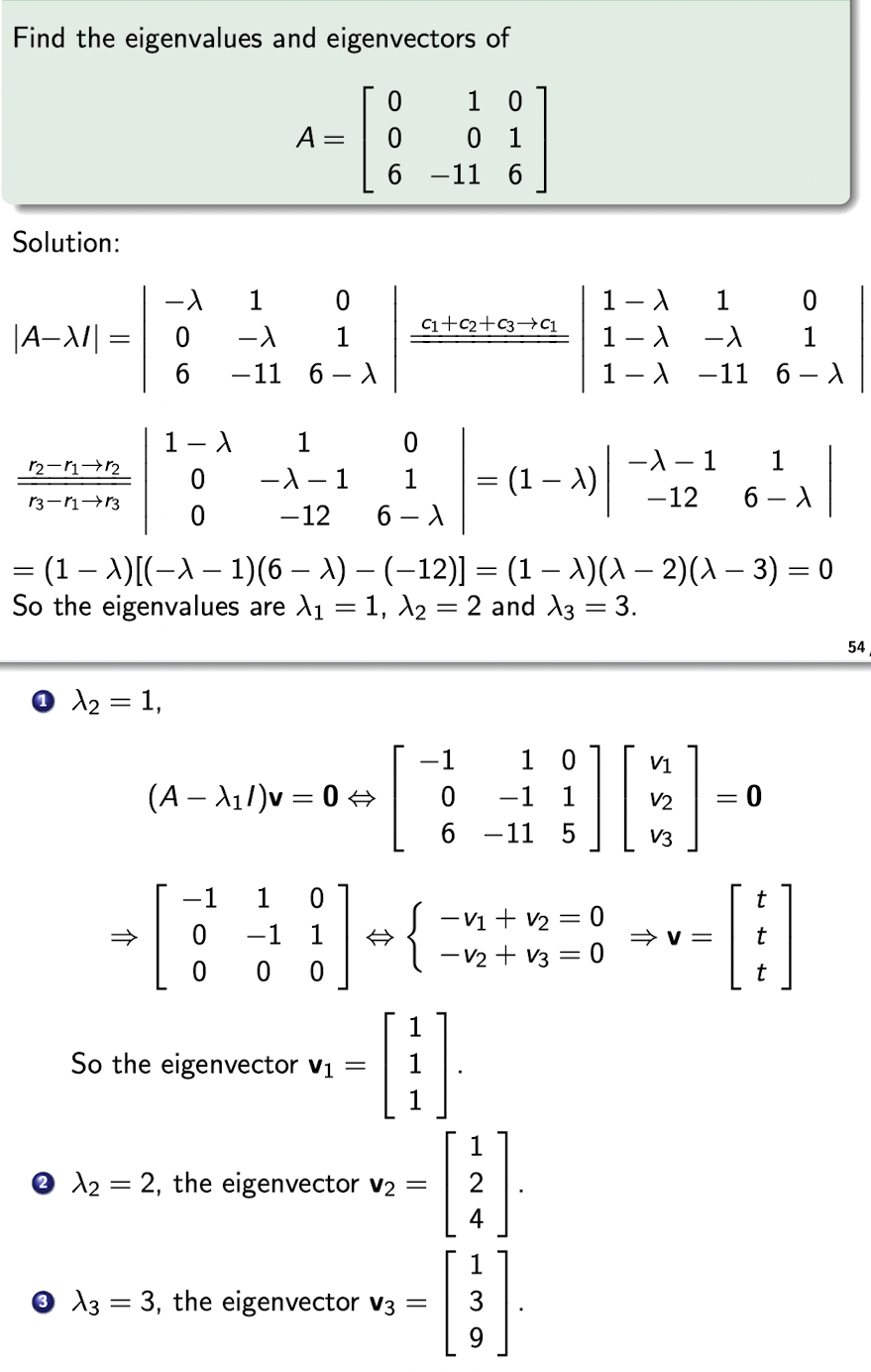

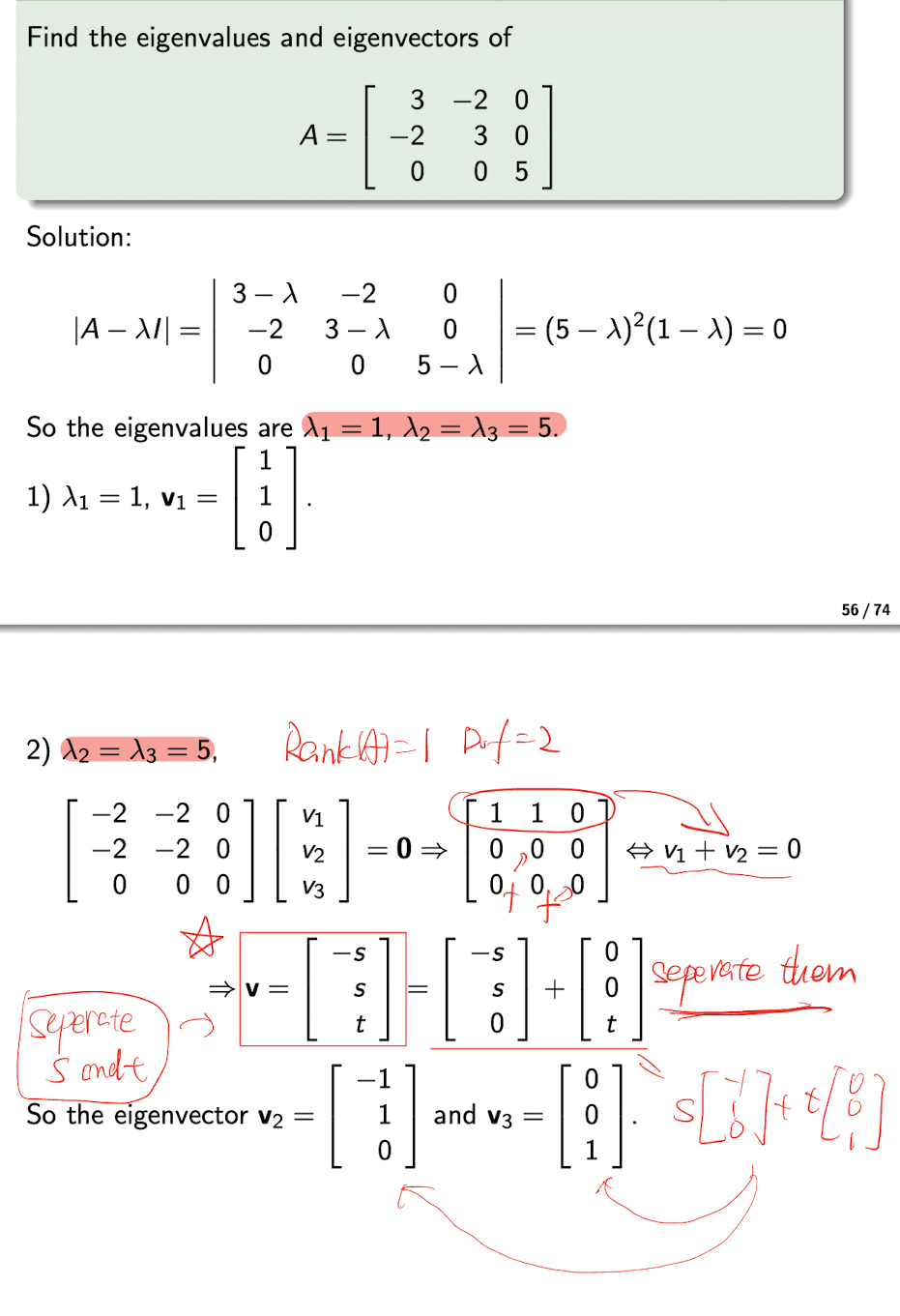

2.5.2 Eigenvector

- Solve $|A-\lambda I|=0$ to get $\lambda_1,\lambda_2,…,\lambda_n$;

- Substitute each $\lambda$ into the original $(A-\lambda I)\bold{v}=\bold{0}$, and solve it to get $\bold{v}_1,\bold{v}_2,…,\bold{v}_n$;

- Each such system must have infinitely many solutions for $\bold{v}$;

- like:

- Write down a particular solution $\bold{v}_p$

- like:

[Example]

[Example]

Note:

The number of linearly independent eigenvectors for an eigenvalue = the number of DoF (i.e. the number of free variables in $(A-\lambda I)\bold{v}=\bold{0}$)

2.5.3 Diagonalization

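A square matrix $A_{n\times n}$ is diagonalizable if there is a nonsingular matrix $P$ such that

$$P^{-1}AP=D,\quad\text{i.e. } A=PDP^{-1}$$

- Where $D$ is a diagonal matrix;

- May use the Gauss-Jordan to get $P^{-1}$;

- May find the $P^{-1}$ in the last step;

A square matrix $A_{n\times n}$ is diagonalizable if

- $A$ has n linearly independent eigenvectors $\bold{v}_1,\bold{v}_2,…,\bold{v}_n$;

- and n corresponding eigenvalues $\lambda_1,\lambda_2,…,\lambda_n$;

- The $D$ is the diagonal matrix of eigenvalues, $D=\text{diag}(\lambda_1,\lambda_2,…,\lambda_n)$

- The $P$ has the eigenvectors as its columns, in the same order: $P=[\bold{v}_1\ \bold{v}_2\ \cdots\ \bold{v}_n]$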

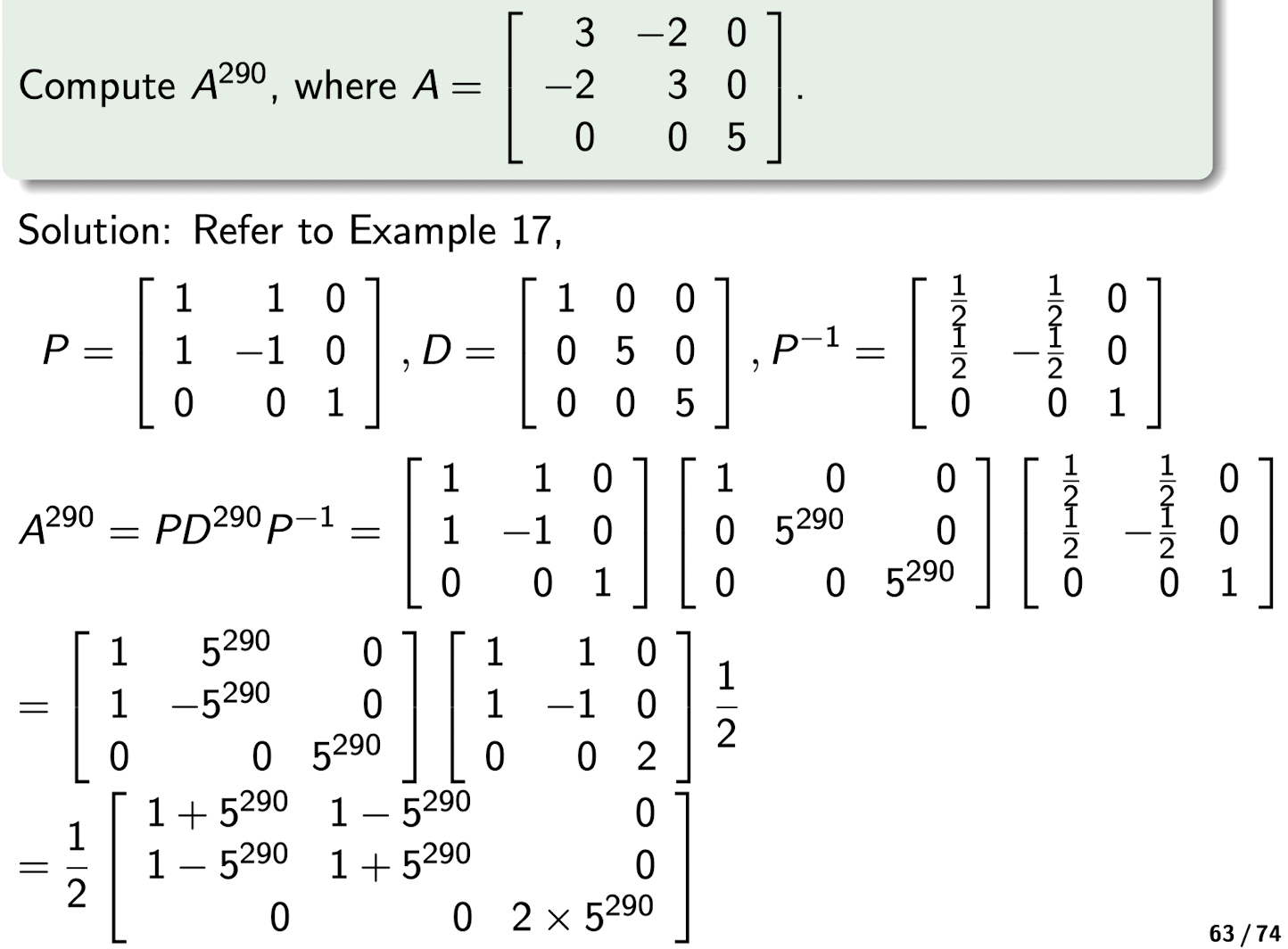

2.5.3.1 Application: Matrix Power

If $A$ is diagonalizable, $A=PDP^{-1}$, then $A^m=PD^mP^{-1}$

- where $D^m$ is $\text{diag}(\lambda_1^m,\lambda_2^m,…,\lambda_n^m)$, each diagonal entry raised to the power $m$

[Example]
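The identity $A^m=PD^mP^{-1}$ can be checked on a small case. In the sketch below the matrix $A$, its eigenvalues 5 and 2, and the eigenvectors $(1,1)$ and $(1,-2)$ are an assumed example worked by hand, not one from the course; `matmul` is a helper defined only for this illustration.

```python
from fractions import Fraction


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


# Assumed example: A has eigenvalues 5 and 2 with eigenvectors (1, 1), (1, -2).
A = [[4, 1],
     [2, 3]]
P = [[1, 1],
     [1, -2]]                                   # columns are the eigenvectors
P_inv = [[Fraction(2, 3), Fraction(1, 3)],
         [Fraction(1, 3), Fraction(-1, 3)]]     # inverse of P, computed by hand
eigenvalues = [5, 2]

m = 3
D_m = [[eigenvalues[0] ** m, 0],
       [0, eigenvalues[1] ** m]]                # D^m: power each diagonal entry
A_m = matmul(matmul(P, D_m), P_inv)             # A^m = P D^m P^{-1}

direct = matmul(matmul(A, A), A)                # A * A * A for comparison
print(A_m, direct)
```

For large $m$ the diagonal route needs only two matrix products, while repeated multiplication needs $m-1$.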

2.6 Inner Product

The inner product (dot product) of $\bold{v}$ and $\bold{w}$ in $R^n$ is

$$\bold{v}\cdot\bold{w}=v_1w_1+v_2w_2+\dots+v_nw_n$$

Proposition:

- $\bold{v}\cdot\bold{w}=\bold{w}\cdot\bold{v}$

- $\bold{v}\cdot(\bold{w}+\bold{u})=\bold{v}\cdot\bold{w}+\bold{v}\cdot\bold{u}$

- $t\bold{v}\cdot\bold{w}=t(\bold{v}\cdot\bold{w}) = (\bold{v}\cdot\bold{w})t$

- $ \bold{v}\cdot\bold{v}\geq 0$

- $ \bold{v}\cdot\bold{v}=0 \iff \bold{v}=\bold{0}$

- $\bold{0}\cdot\bold{v}=0$ (the result is the scalar $0$, not the zero vector)

2.6.1 Norm

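The Euclidean norm of $\bold{v}$ is

$$||\bold{v}||=\sqrt{\bold{v}\cdot\bold{v}}=\sqrt{v_1^2+v_2^2+\dots+v_n^2}$$

Unit vector:

For non-zero vector $\bold{v}$, a unit vector $\bold{u}$ is

$$\bold{u}=\frac{\bold{v}}{||\bold{v}||}$$

- It carries only the direction, and its length is 1;

- Different unit vectors have different directions;

The angle between non-zero vectors $\bold{v}$ and $\bold{w}$ is the unique $\theta$ between $0$ and $\pi$ with:

$$\cos\theta=\frac{\bold{v}\cdot\bold{w}}{||\bold{v}||\,||\bold{w}||}$$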

2.6.2 Orthogonality

Orthogonal:

- Two vectors $\bold{v}$ and $\bold{w}$ are orthogonal if $\bold{v}\cdot\bold{w}=0$

- For non-zero vectors this means $\cos \theta = 0$

- Non-zero orthogonal vectors must be linearly independent;

- $\bold{0}$ is orthogonal to every vector;

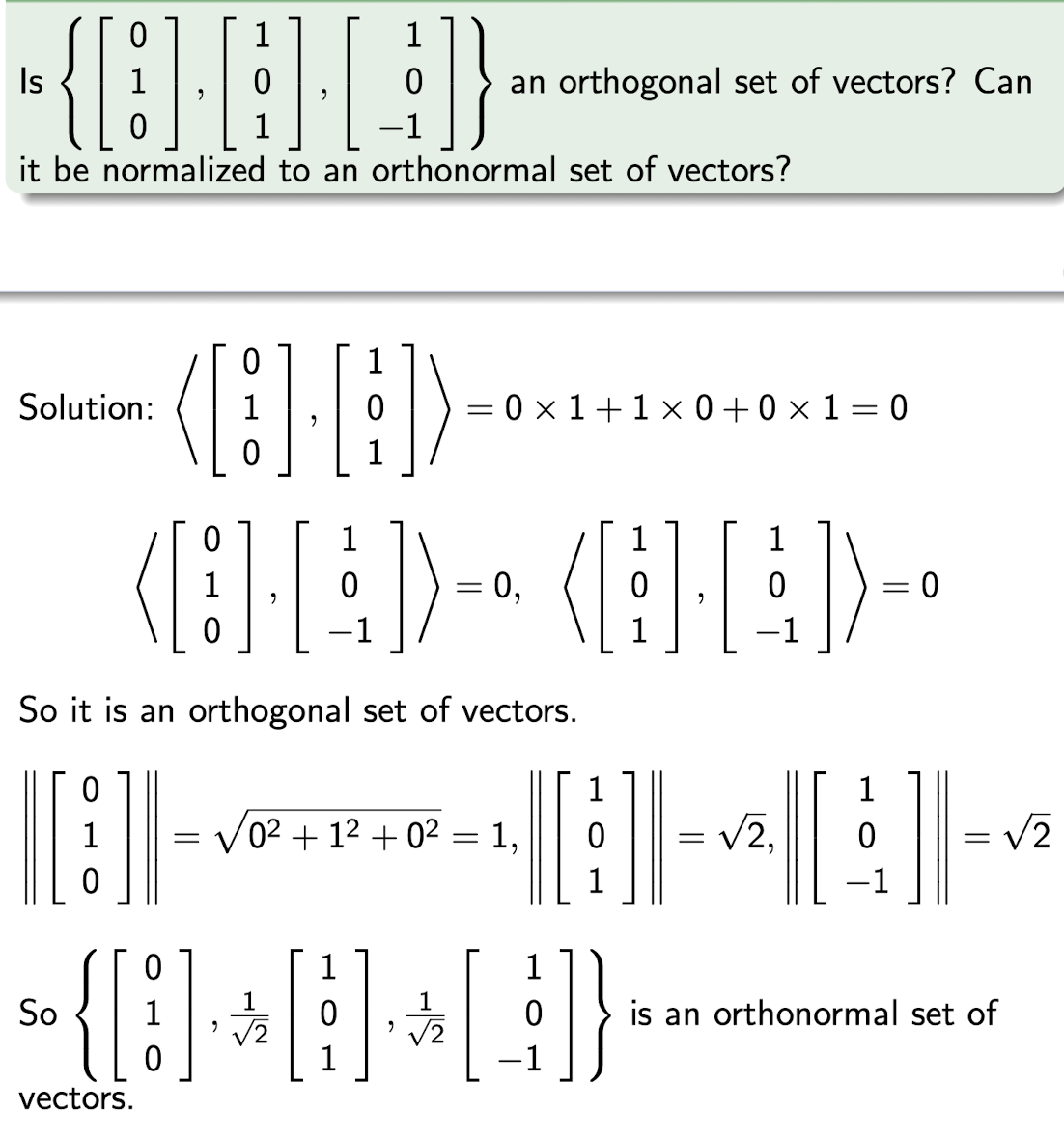

Orthogonal set:

- A set of vectors is an orthogonal set if the vectors are mutually orthogonal to each other.

Orthonormal:

- An orthogonal set of vectors is orthonormal if all of its vectors are unit vectors.

- They are mutually orthogonal to each other; $\perp$

- They are unit vectors; $1$

[Example]

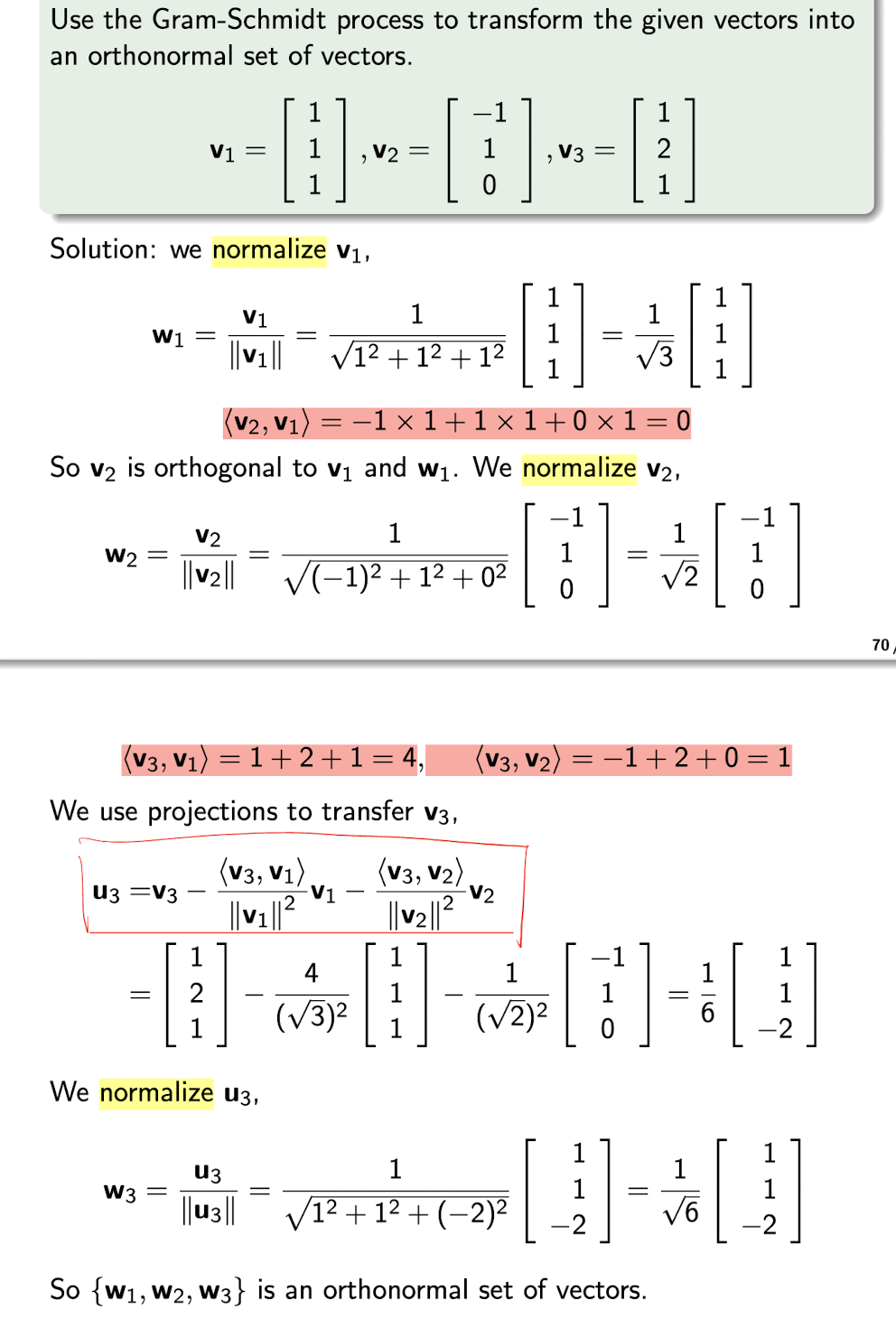

2.6.2.1 Gram-Schmidt Process

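- Projection of $v$ onto $w$ is:

$$\text{proj}_{w}v=\frac{v\cdot w}{||w||}\frac{w}{||w||}=\frac{v\cdot w}{w\cdot w}w$$

where

$\frac{v\cdot w}{||w||}$ is the (signed) length of the projection;

$\frac{w}{||w||}$ is the direction of the projection, same as $w$;

- To construct a unit vector $u$ orthogonal to $w$ from a vector $v$, subtract the projection and normalize:

$$u'=v-\text{proj}_{w}v,\quad u=\frac{u'}{||u'||}$$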

[Example]
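The projection-and-subtract idea extends to any list of linearly independent vectors. The following is a minimal sketch of the classical Gram-Schmidt process in floating point; the function names and the sample vectors are assumptions for illustration.

```python
import math


def dot(v, w):
    """Inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(v, w))


def gram_schmidt(vectors):
    """Turn linearly independent vectors into an orthonormal list."""
    basis = []
    for v in vectors:
        # Subtract the projection of v onto each unit vector found so far.
        u = list(v)
        for q in basis:
            coeff = dot(v, q)              # q is already a unit vector
            u = [ui - coeff * qi for ui, qi in zip(u, q)]
        norm = math.sqrt(dot(u, u))
        if norm < 1e-12:
            raise ValueError("vectors are linearly dependent")
        basis.append([ui / norm for ui in u])
    return basis


q1, q2 = gram_schmidt([[3, 4], [1, 0]])
print(q1, q2)
```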

2.6.2.2 Orthogonal Matrix

An Orthogonal Matrix has a set of orthonormal vectors as its columns.

- The column vectors are mutually orthogonal to each other;

- Each column vector is a unit vector;

For a symmetric matrix $A$, $A^T=A$:

- It is always diagonalizable;

- It has a matrix $P$ formed from its eigenvectors;

- Since the columns of $P$ are linearly independent, $P$ can be converted to an orthogonal matrix $Q$ using the G.S. method (applied to the eigenvectors of each eigenvalue), so the columns of $Q$ are still eigenvectors;

- Then $A=QDQ^{-1}=QDQ^T$, where $D$ is a diagonal matrix formed from its eigenvalues;

[Example]

3. Ordinary Differential Equation

(ODE)

Given an equation involving $y^\prime$ or $y^{\prime\prime}$, find the original function $y$.

3.1 1st Order ODE

Form:

- Only $y^\prime$, no $y^{\prime\prime}$,…

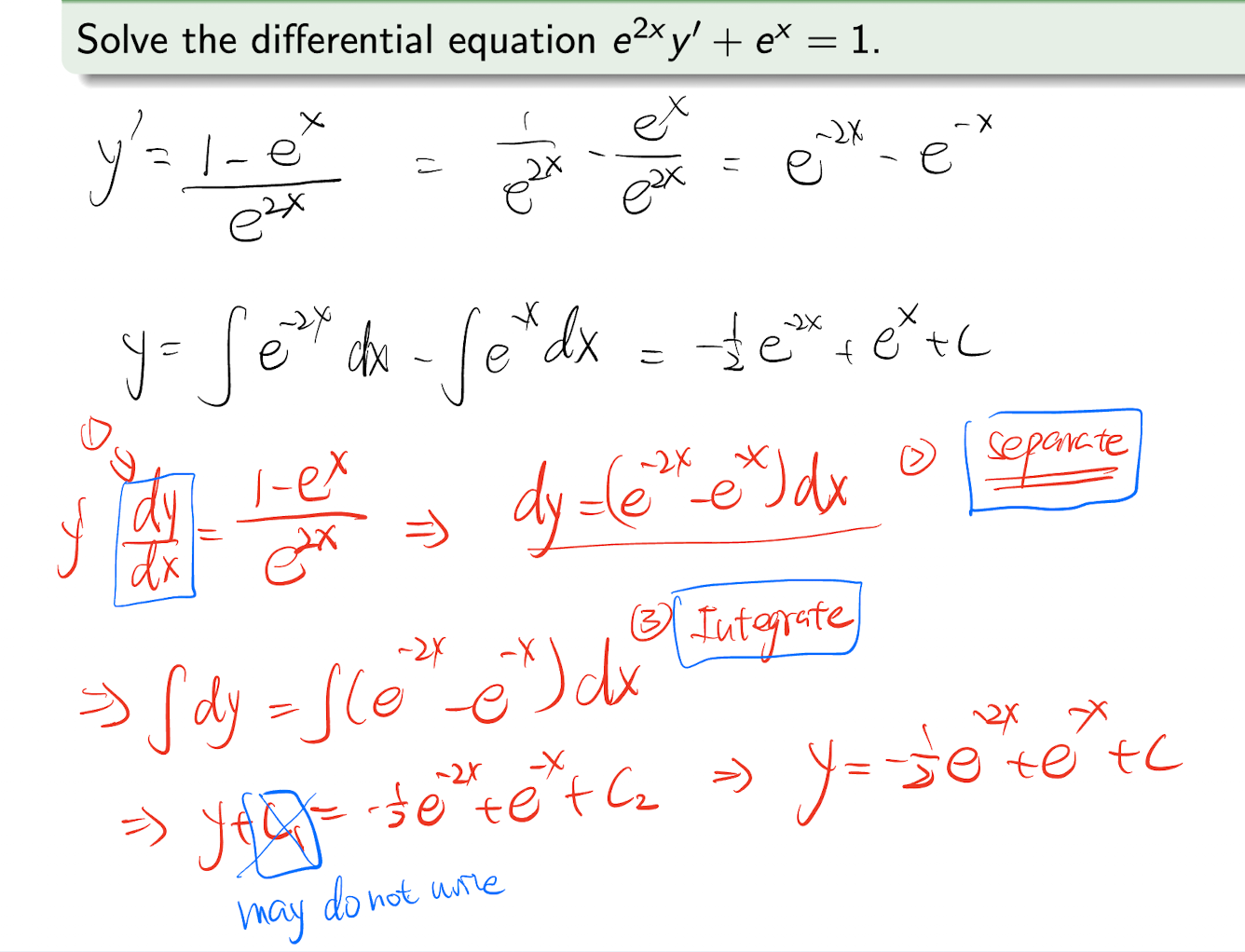

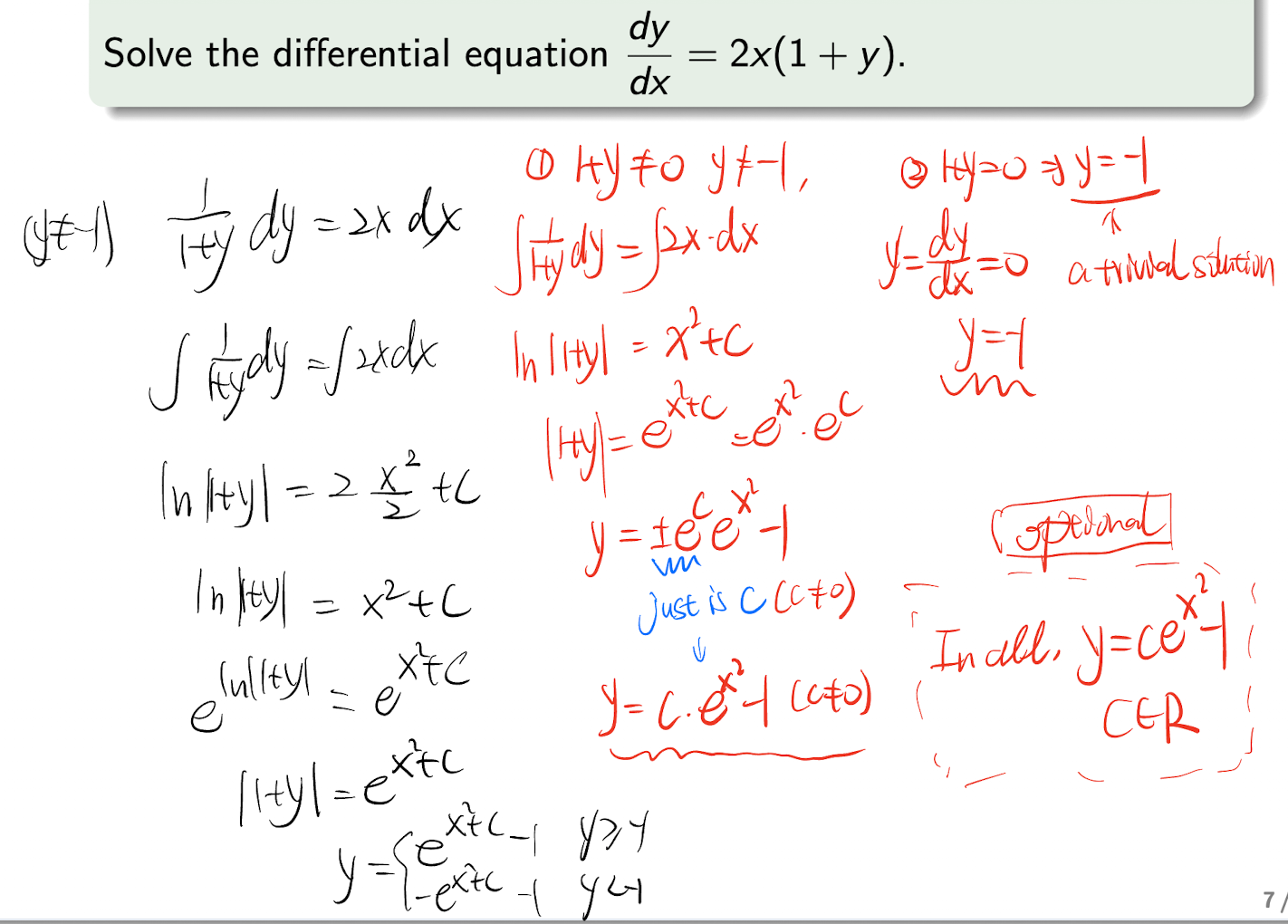

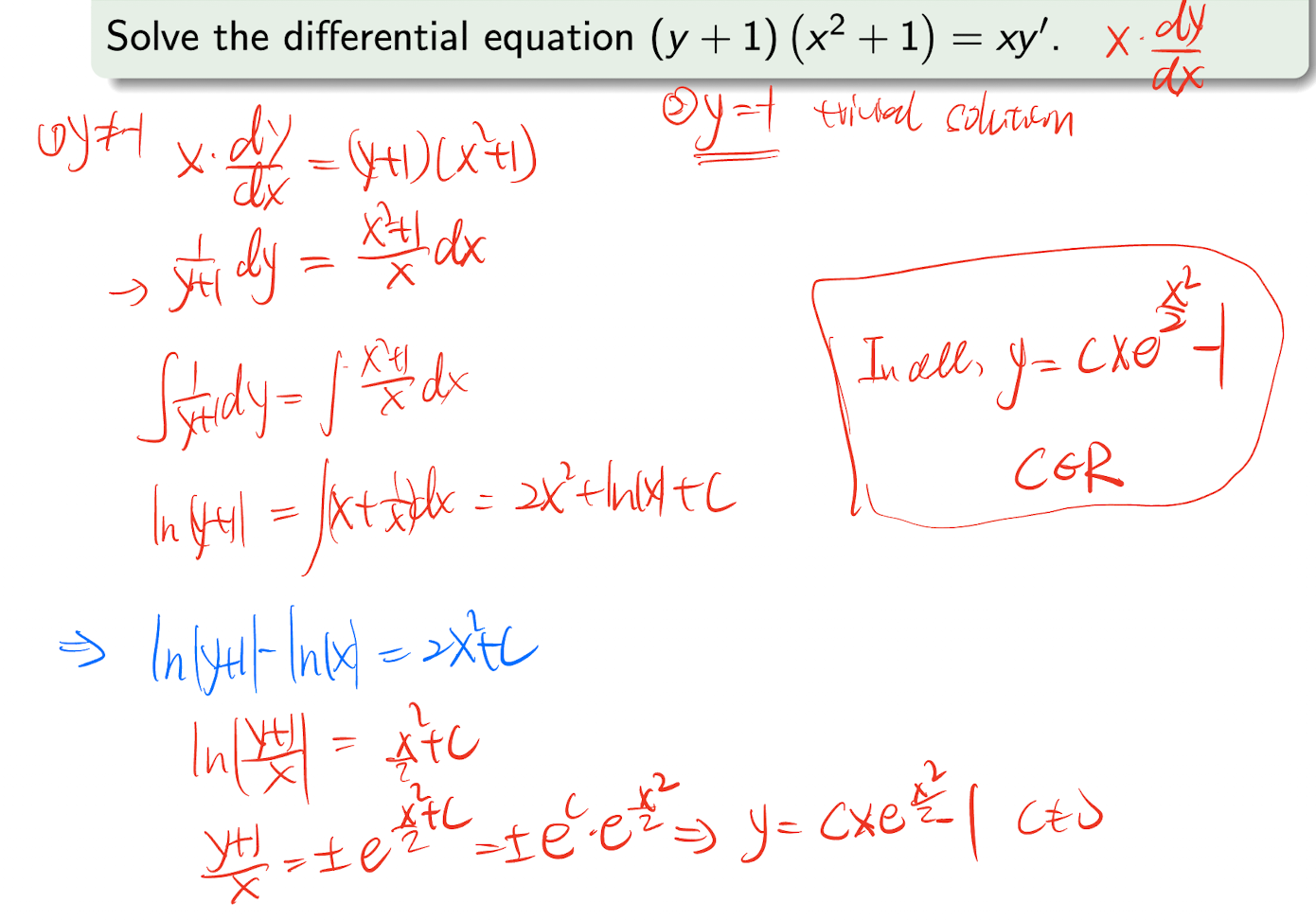

3.1.1 Variables Separable Type

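Type:

$$\frac{dy}{dx}=g(x)h(y)$$

$\implies$

$$\frac{1}{h(y)}\,dy=g(x)\,dx$$

Solution:

$$\int\frac{1}{h(y)}\,dy=\int g(x)\,dx+C$$

Steps:

- Write in $\frac{dy}{dx} = g(x)h(y)$ form, and separate the variables;

- Integrate both sides;

- Solve the equation, and combine the constants into one $C$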

[Example]

[Example]

[Example]

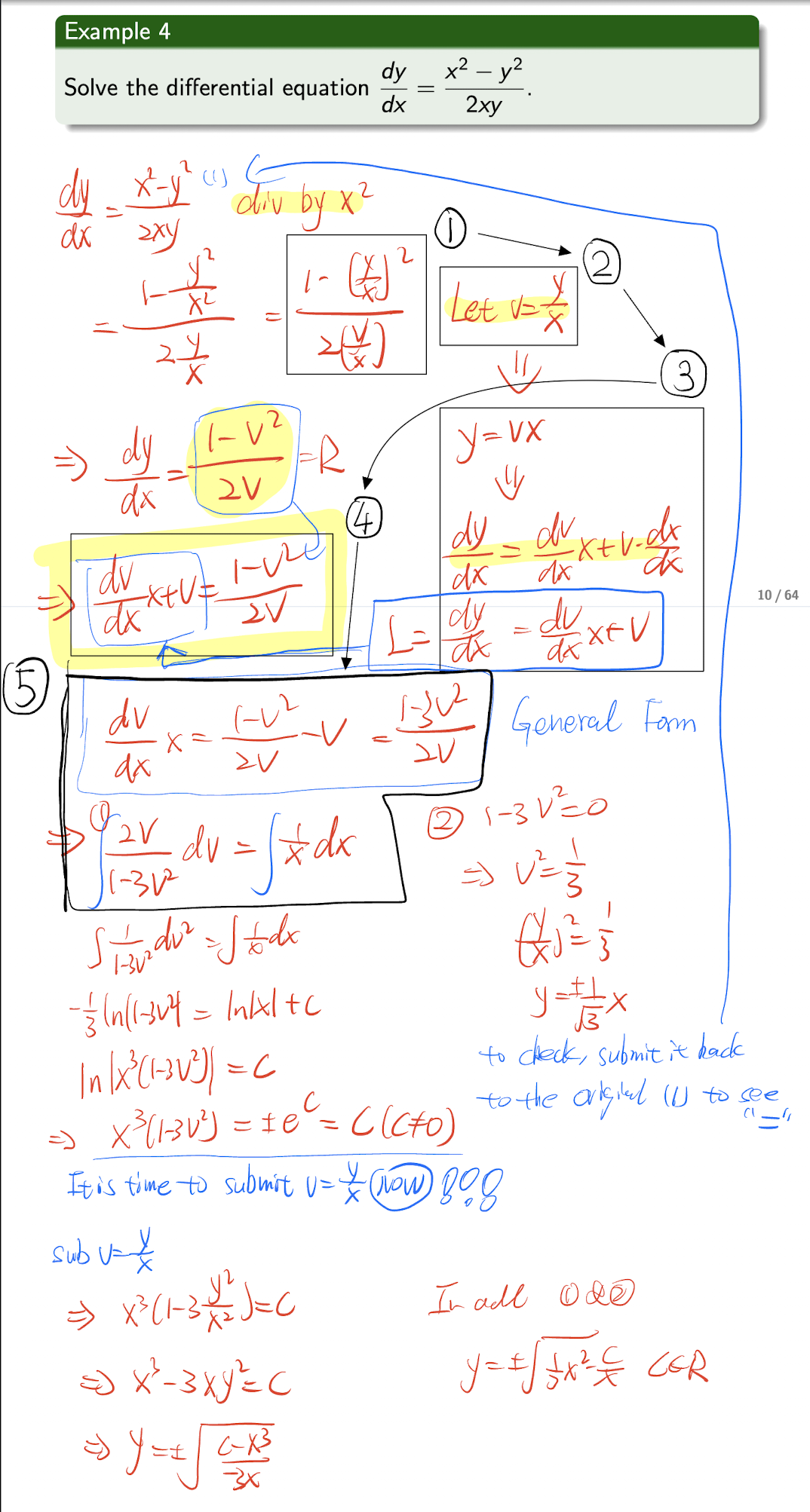

3.1.2 Reducible to Separable Type

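ODE in the form of

$$\frac{dy}{dx}=f\left(\frac{y}{x}\right)$$

Steps:

- Write the ODE in the above form with $\frac{y}{x}$ term;

- Let $v=\frac{y}{x}$, i.e. $y=vx$;

- Get the differential:

$$\frac{dy}{dx}=v+x\frac{dv}{dx}$$

- Substitute:

$$v+x\frac{dv}{dx}=f(v)$$

- Then we get the Separable Type:

$$\frac{dv}{f(v)-v}=\frac{dx}{x}$$

- Use the above Variables Separable Type method to solve it, then substitute $v=\frac{y}{x}$ back;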

[Example]

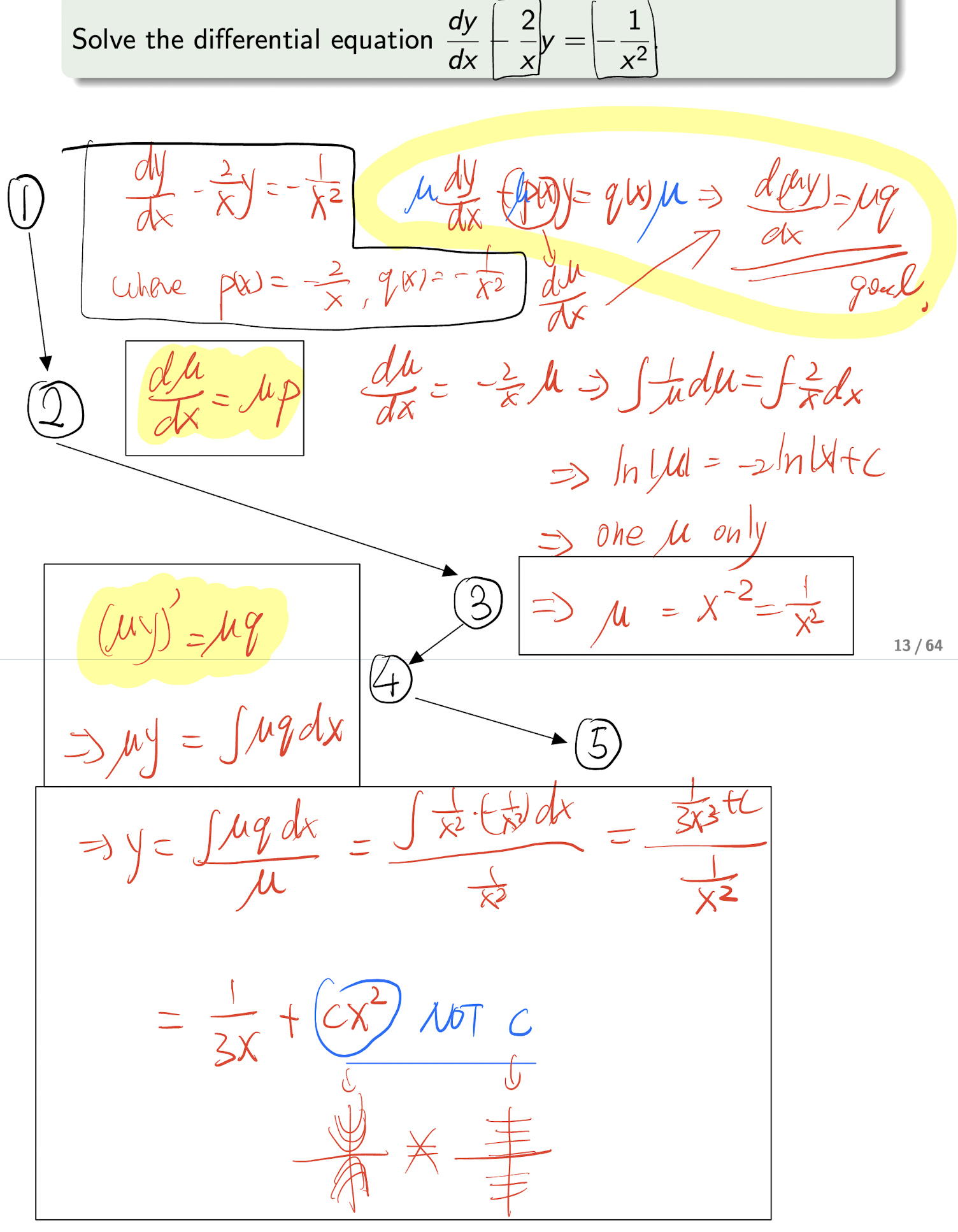

3.1.3 Integrating Factor Method

(1st Order Linear ODE)

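An ODE in the form of

$$\frac{dy}{dx}+p(x)y=q(x)$$

Analysis Step:

- Multiply both sides by $\mu(x)$:

$$\mu\frac{dy}{dx}+\mu py=\mu q$$

- Set the condition for the $\mu p$:

$$\mu p=\frac{d\mu}{dx}\quad (1)$$

- Then we get:

$$\mu\frac{dy}{dx}+\frac{d\mu}{dx}y=\mu q$$

- Which is:

$$\frac{d}{dx}(\mu y)=\mu q$$

- Take the integral of both sides:

$$\mu y=\int\mu q\,dx+C$$

- Then we get:

$$y=\frac{1}{\mu}\left(\int\mu q\,dx+C\right)\quad (2)$$

Steps:

- Write the ODE in the form of $\frac{dy}{dx} +p(x)y = q(x)$;

- Based on the above condition $(1)$, write:

$$\mu=e^{\int p(x)\,dx}$$

- Then calculate the $\mu$;

- Based on the above $(2)$, write:

$$\mu y=\int\mu q\,dx+C$$

- Then calculate the $y$:

$$y=\frac{1}{\mu}\left(\int\mu q\,dx+C\right)$$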

[Example]

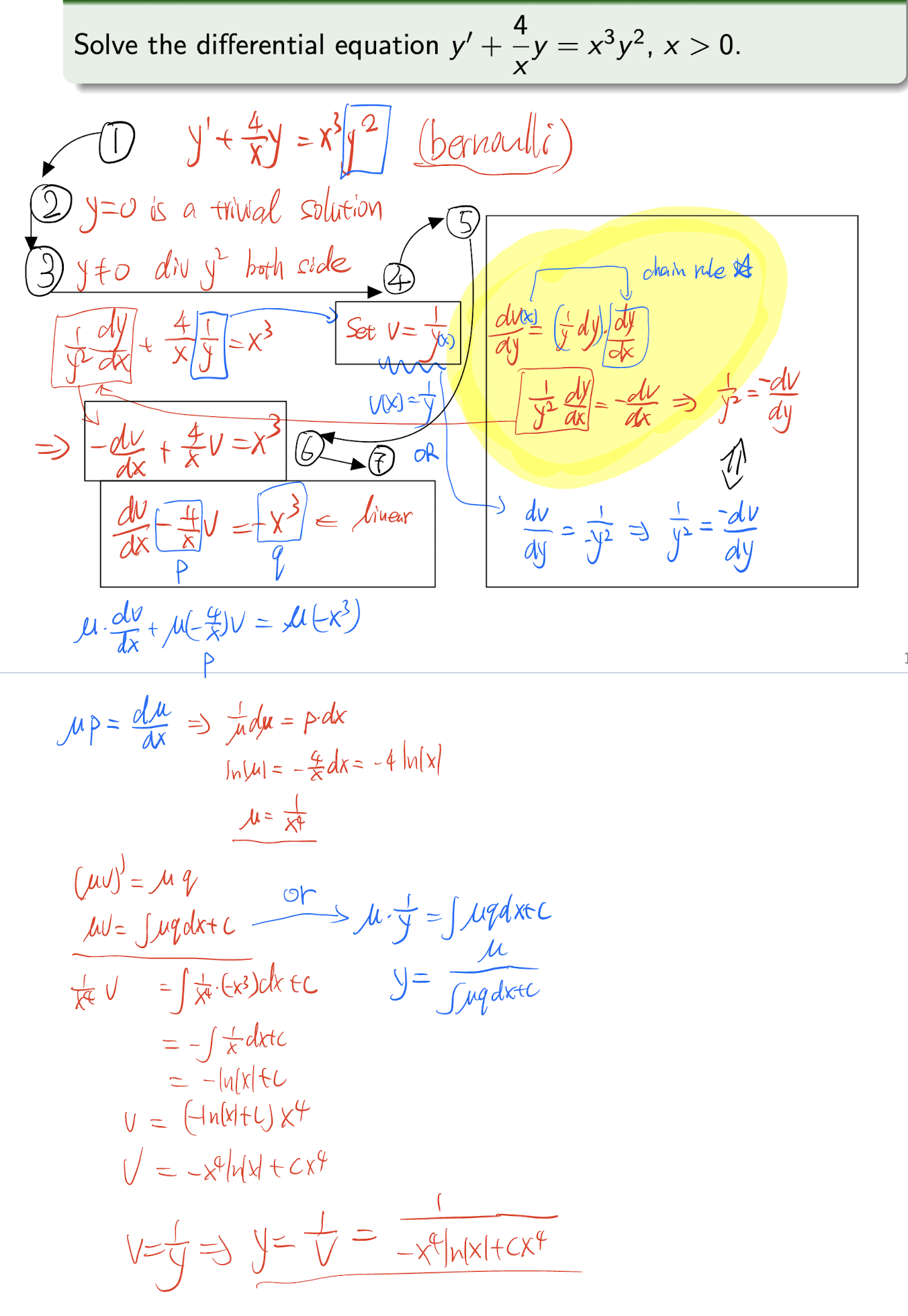

3.1.4 Bernoulli Equation

(homogeneous)

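An ODE in the form of

$$\frac{dy}{dx}+p(x)y=q(x)y^n,\quad n\neq 0,1$$

Steps:

- Write the ODE in the form of $\frac{dy}{dx} +p(x)y = q(x)y^n$;

- Discuss the trivial solution $y=0$;

- Discuss the non-trivial solution $y\neq 0$, and divide the equation by $y^n$:

$$y^{-n}\frac{dy}{dx}+p(x)y^{1-n}=q(x)$$

- Set:

$$v=y^{1-n}$$

- Calculate the differential of $v$:

$$\frac{dv}{dx}=(1-n)y^{-n}\frac{dy}{dx}$$

- Substitute the differential of $v$ back:

$$\frac{dv}{dx}+(1-n)p(x)v=(1-n)q(x)$$

- Now it is a Linear ODE in $v$; solve it by the Integrating Factor Method in 3.1.3, then recover $y$ from $v=y^{1-n}$;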

[Example]

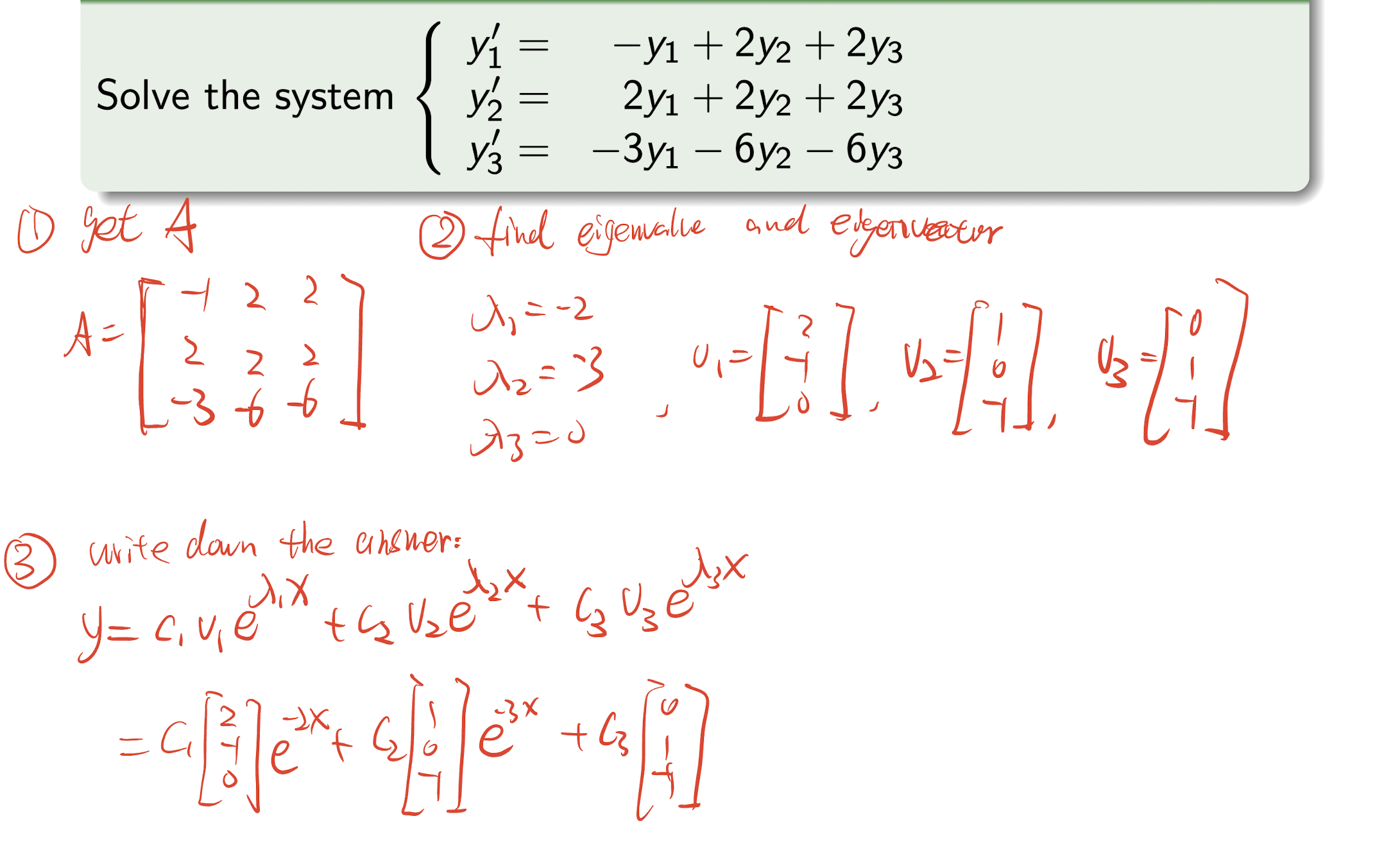

3.2 System of 1st Order Linear ODE

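A System of 1st Order Linear ODE has the form

$$\bold{y}^{\prime}=A\bold{y}$$

Solution:

$$\bold{y}=c_1\bold{v}_1e^{\lambda_1 x}+c_2\bold{v}_2e^{\lambda_2 x}+\dots+c_n\bold{v}_ne^{\lambda_n x}$$

where $\bold{v}_i$ are the eigenvectors of $A$, $\lambda_i$ are the corresponding eigenvalues, and $c_1,c_2,…,c_n$ are constants (this form assumes $A$ has $n$ linearly independent eigenvectors).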

Steps:

- Write the system of ODE in the form of $\bold{y}^{\prime}=A\bold{y}$, then get the $A$;

- Find the eigenvalues and eigenvectors of $A$;

- Use the above Solution to solve the system of ODE;

[Example]

3.3 2nd Order Linear ODE

In the form of:

$$y^{\prime\prime}+p(x)y^{\prime}+q(x)y=R(x)$$

We focus on constant-coefficient ODEs:

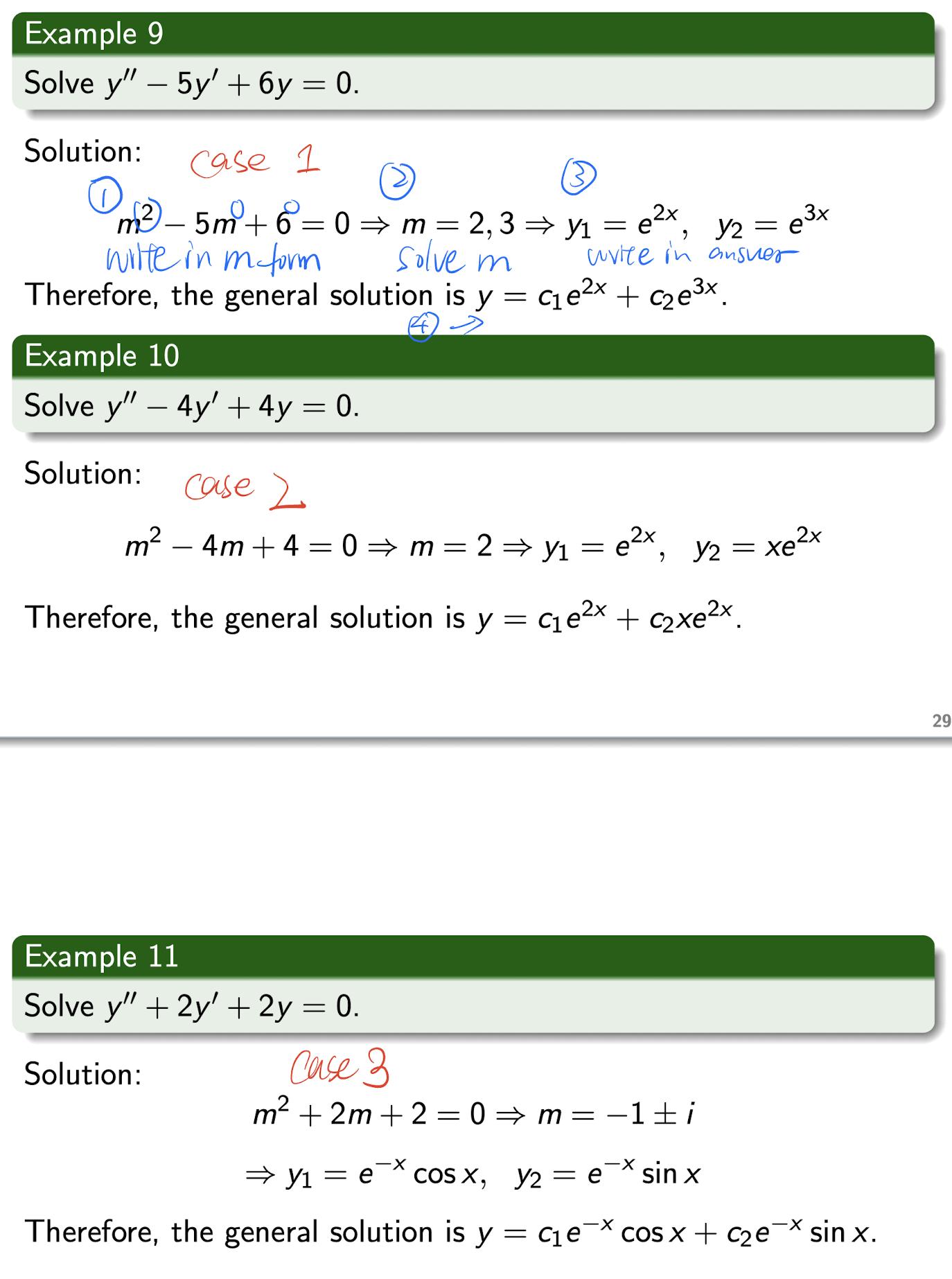

3.3.1 Homogeneous 2nd Order Linear ODE

In the form of:

$$y^{\prime\prime}+py^{\prime}+qy=0$$

Steps:

- Write the equation in the above form, and write the $m$ form (characteristic equation):

$$m^2+pm+q=0$$

- Solve for $m$ to get the roots $a,b$;

- Write down the solution based on the following table:

| Case | Root($a$,$b$) | Solution |
|---|---|---|
| Case 1 | $a\neq b$, both real | $y=c_1e^{a x}+c_2e^{b x}$ |
| Case 2 | $a=b$ | $y=c_1e^{a x}+c_2xe^{a x}$ |
| Case 3 | $a=\alpha+i\beta$, $b=\alpha-i\beta$, $\beta\neq 0$ | $y=c_1e^{\alpha x}\cos\beta x+c_2e^{\alpha x}\sin \beta x$ |

[Example]
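The three cases can be selected mechanically from the discriminant of the characteristic equation. The sketch below assumes real constant coefficients $p,q$; the function name `homogeneous_solution` and the sample coefficients are made up for illustration, and the exact comparison `disc == 0` is only reliable for inputs like these where the arithmetic is exact.

```python
import cmath


def homogeneous_solution(p: float, q: float) -> str:
    """Describe the general solution of y'' + p*y' + q*y = 0."""
    disc = p * p - 4 * q                       # discriminant of m^2 + p*m + q = 0
    if disc > 0:                               # Case 1: two distinct real roots
        a = (-p + disc ** 0.5) / 2
        b = (-p - disc ** 0.5) / 2
        return f"y = c1*exp({a:g}x) + c2*exp({b:g}x)"
    if disc == 0:                              # Case 2: repeated real root
        a = -p / 2
        return f"y = c1*exp({a:g}x) + c2*x*exp({a:g}x)"
    root = (-p + cmath.sqrt(disc)) / 2         # Case 3: alpha +/- i*beta
    alpha, beta = root.real, root.imag
    return f"y = exp({alpha:g}x)*(c1*cos({beta:g}x) + c2*sin({beta:g}x))"


print(homogeneous_solution(-3, 2))   # roots 2 and 1
print(homogeneous_solution(2, 1))    # repeated root -1
print(homogeneous_solution(2, 5))    # roots -1 +/- 2i
```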

3.3.2 Non-homogeneous 2nd Order Linear ODE

In the form of:

$$y^{\prime\prime}+py^{\prime}+qy=R(x)$$

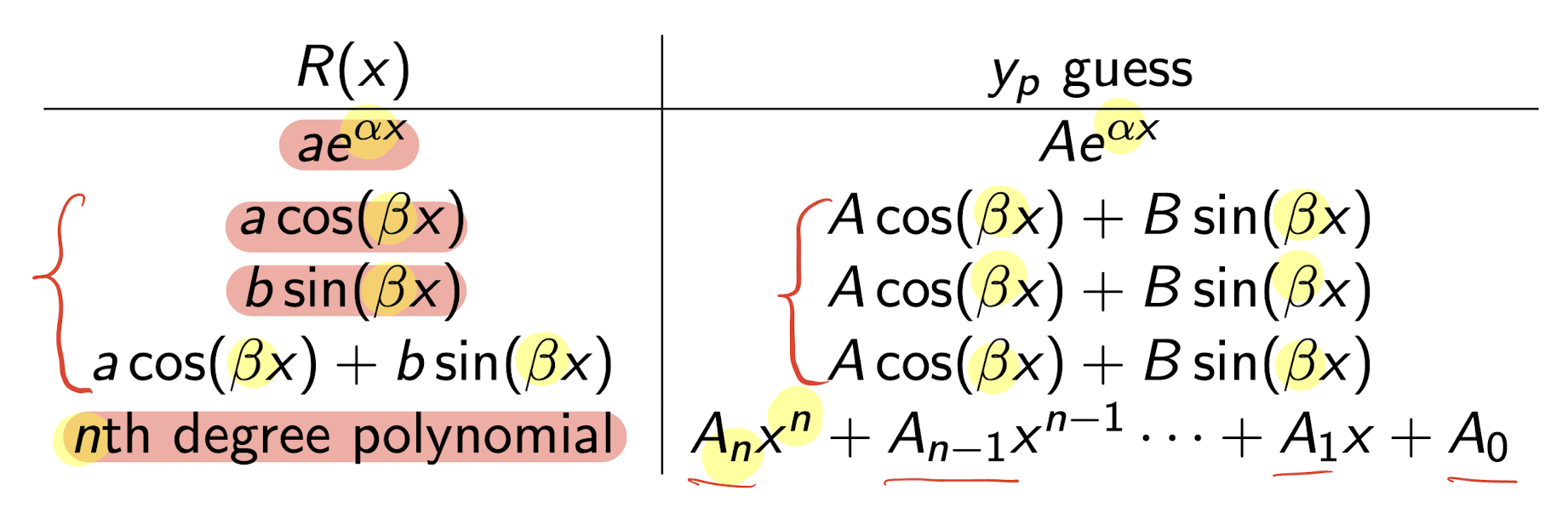

3.3.2.1 Undetermined Coefficients Method

$$y=y_h+y_p$$

where $y$ is the general solution, $y_h$ is the homogeneous solution, and $y_p$ is the particular solution.

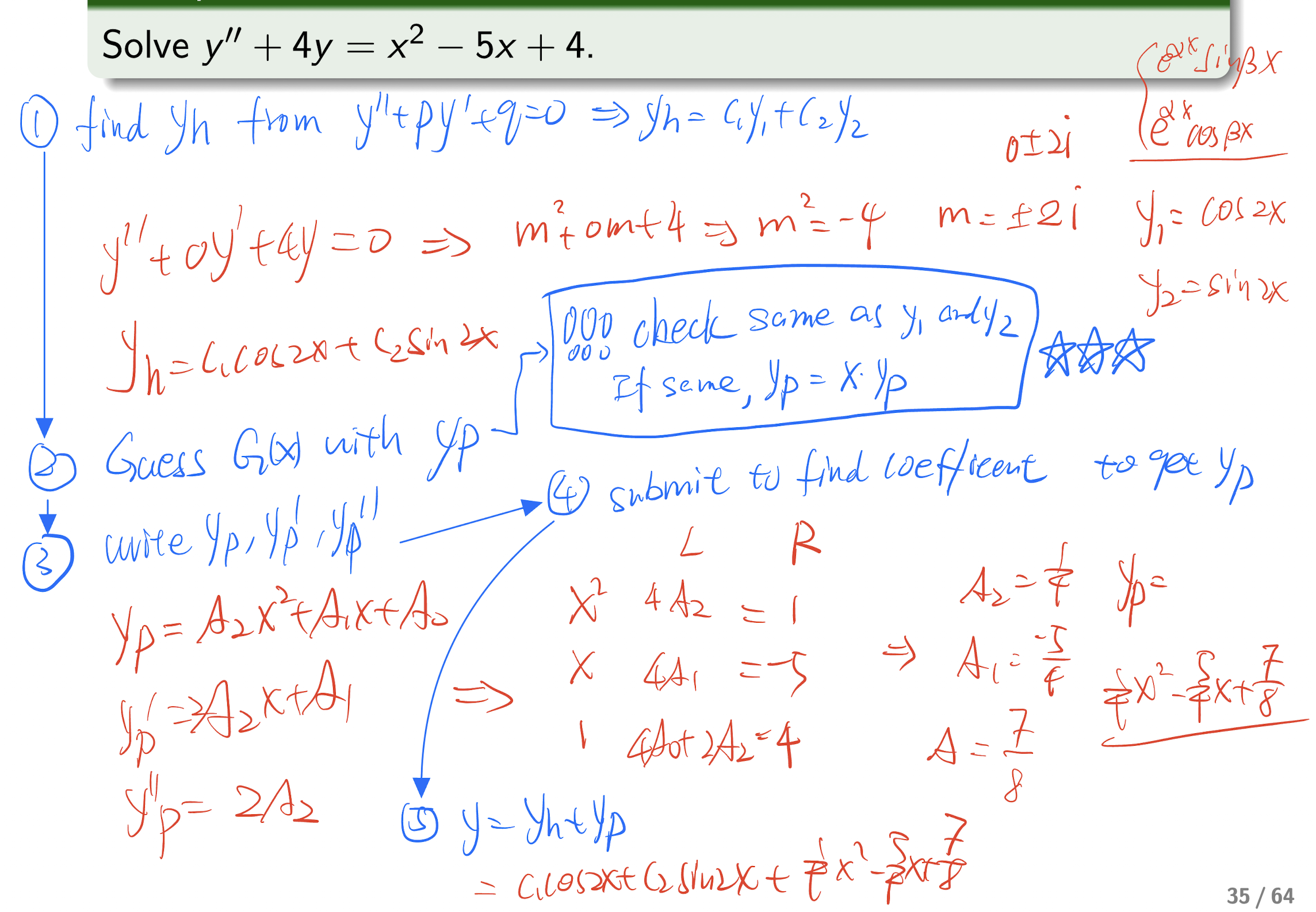

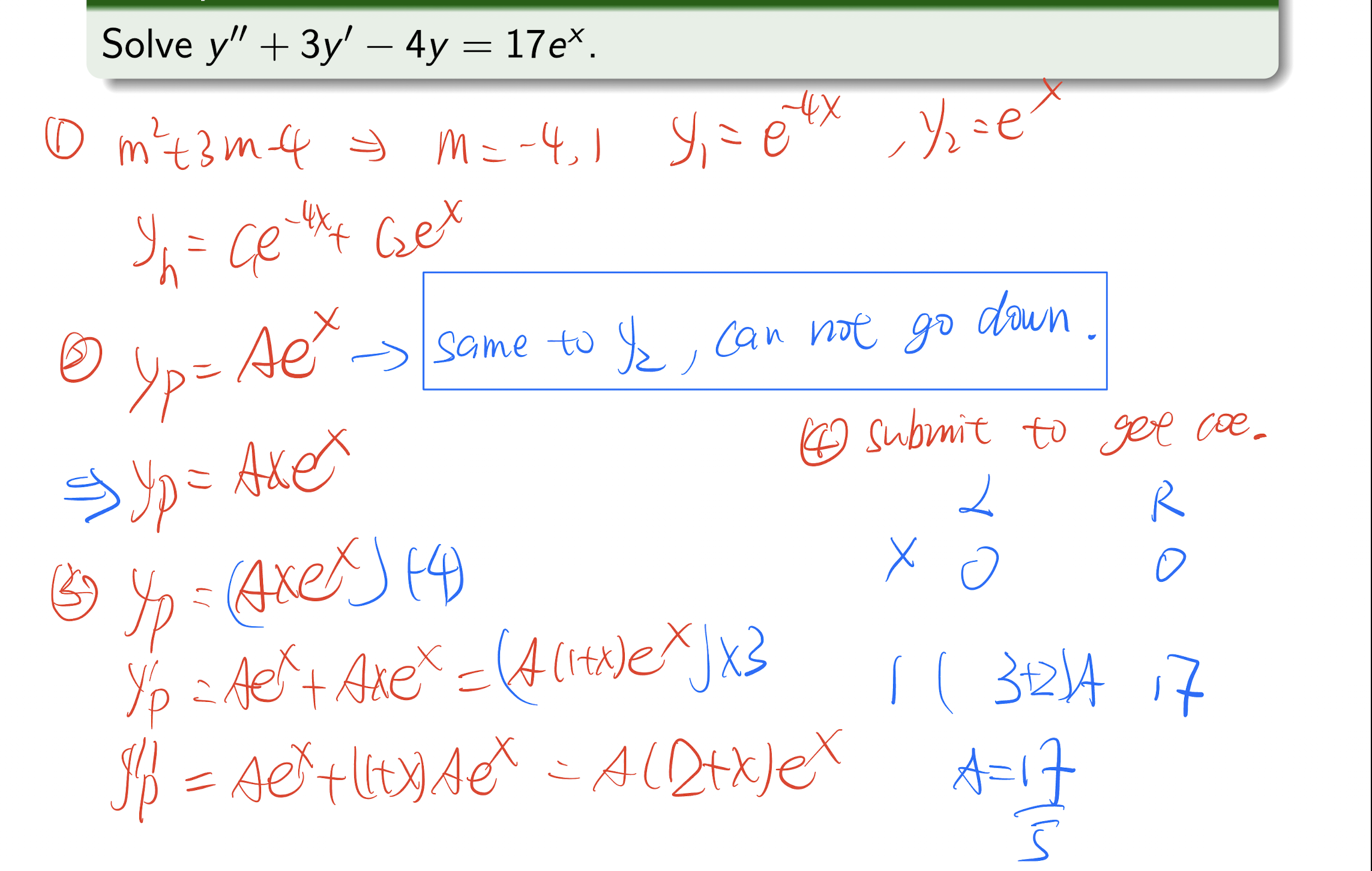

Steps:

- Find $y_h$ from $y^{\prime\prime}+py^{\prime}+qy=0$, $y_h=c_1y_1+c_2y_2$ based on 3.3.1;

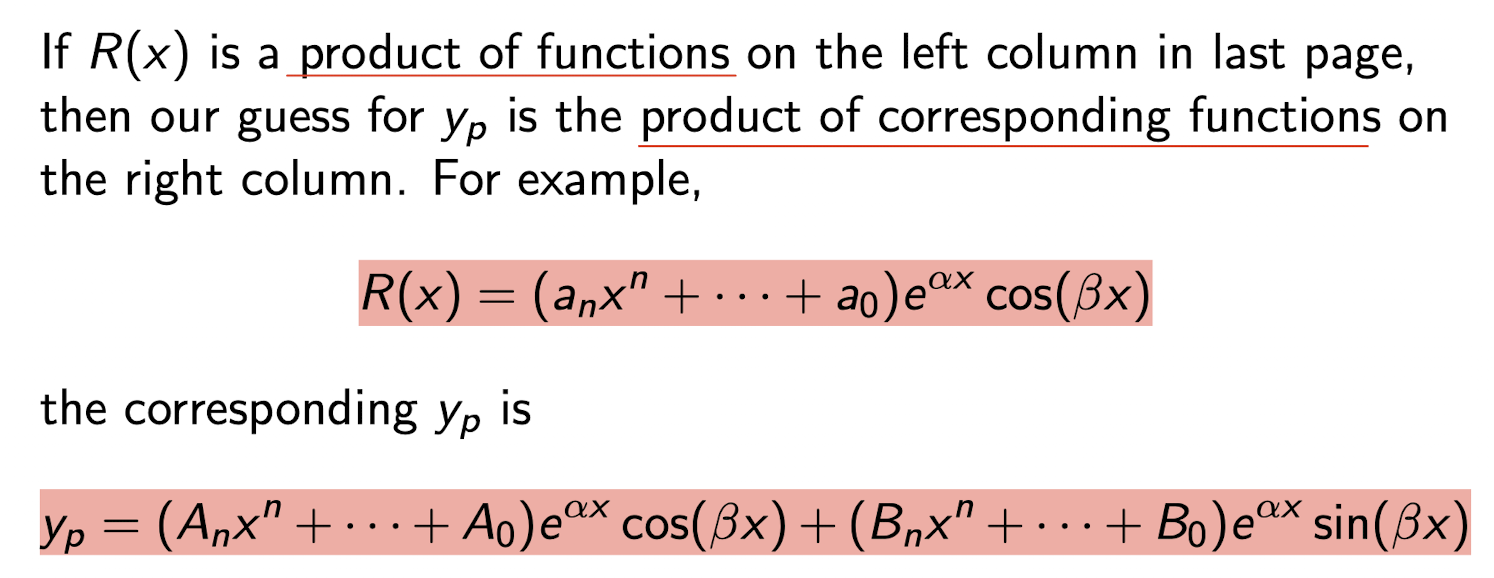

- Guess $y_p$ based on the form of $R(x)$:

!!! note Check the form of $R(x)$:

!!! note Check whether the guessed $y_p$ duplicates $y_1$ or $y_2$; if it does, multiply $y_p$ by $x$;

- Get $y_p,y_p^{\prime}, y_p^{\prime\prime}$;

- Substitute them back into the original equation to get the coefficients, then get $y_p$;

- $y = y_h+y_p$

[Example]

[Example]

3.3.2.2 Variation of Parameters Method

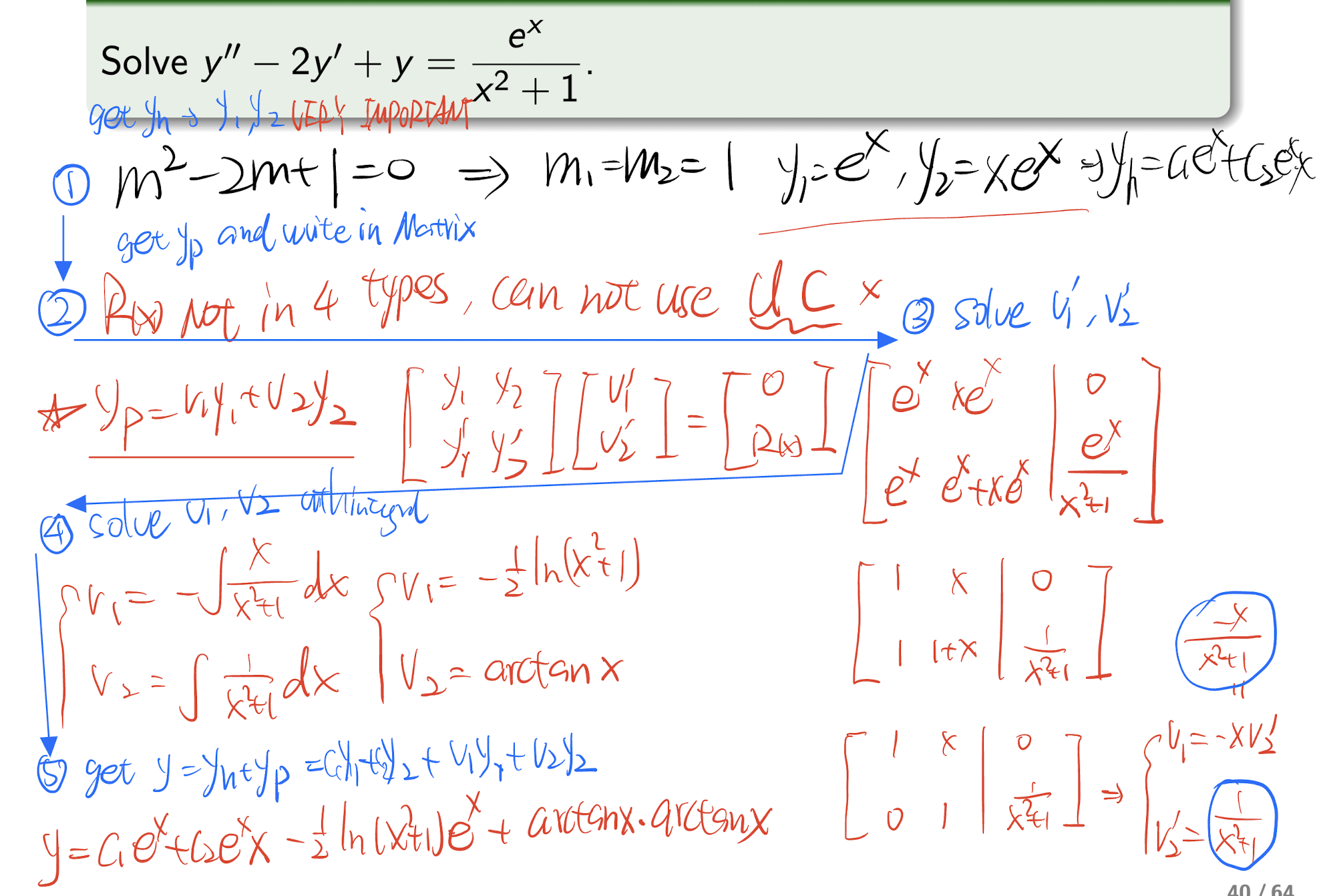

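If $R(x)$ is not one of the 4 types handled by the UC method, then use this method.

Steps:

- Write the ODE in the form of $y^{\prime\prime}+py^{\prime}+qy=R(x)$, then get $y_h=c_1y_1+c_2y_2$;

- Write $y_p$:

$$y_p=v_1(x)y_1+v_2(x)y_2$$

and a system of equations:

$$\begin{cases}v_1^{\prime}y_1+v_2^{\prime}y_2=0\\ v_1^{\prime}y_1^{\prime}+v_2^{\prime}y_2^{\prime}=R(x)\end{cases}$$

- Substitute $y_1,y_2$ and solve the system to get $v_1^{\prime},v_2^{\prime}$;

- Get $v_1$ and $v_2$ by integration;

- Get $y$:

$$y=y_h+y_p$$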

[Example]

3.4 Laplace Transform

For real function $f(t)$, $t\ge0$, the Laplace transform of $f(t)$ is defined as:

$$\mathcal{L}\{f(t)\}=F(s)=\int_0^\infty e^{-st}f(t)\,dt$$

Proposition:

- $\mathcal{L}\{af(t)+bg(t)\}= a\mathcal{L}\{f(t)\}+b\mathcal{L}\{g(t)\}$

!!! note There is NO such rule for products: in general $\mathcal{L}\{f(t)g(t)\}\neq\mathcal{L}\{f(t)\}\mathcal{L}\{g(t)\}$

- $f(t)=g(t)$ for $t\ge 0$ $\implies \mathcal{L}\{f(t)\} = \mathcal{L}\{g(t)\}$

- $\mathcal{L}\{f^{\prime}(t)\} = sF(s)-f(0)$

- $\mathcal{L}\{f^{\prime\prime}(t)\} = s^2F(s)-sf(0)-f^{\prime}(0)$

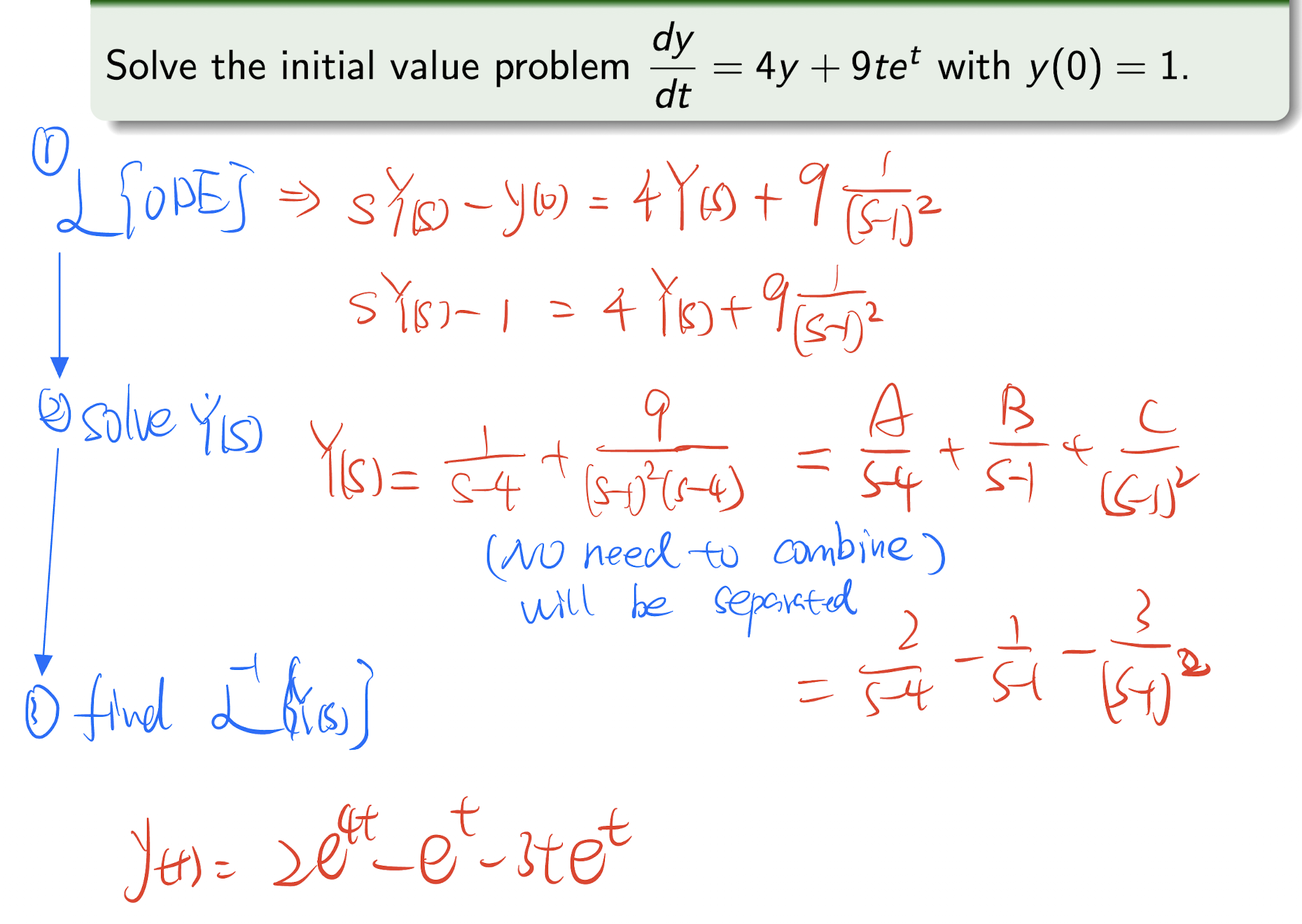

3.4.1 Application on ODE

Using Laplace transform to solve linear differential equations with constant coefficients.

Steps:

- Write the ODE in the form of $y^{\prime\prime}+py^{\prime}+qy=r(t)$ (with $r(t)=0$ in the homogeneous case), together with the initial values $y(0)$ and $y^{\prime}(0)$;

- Take the Laplace transform of both sides of the ODE;

- Solve the resulting algebraic equation to get $Y(s)$;

- Find $\mathcal{L}^{-1}\{Y(s)\}$ to get $y(t)$.

[Example]

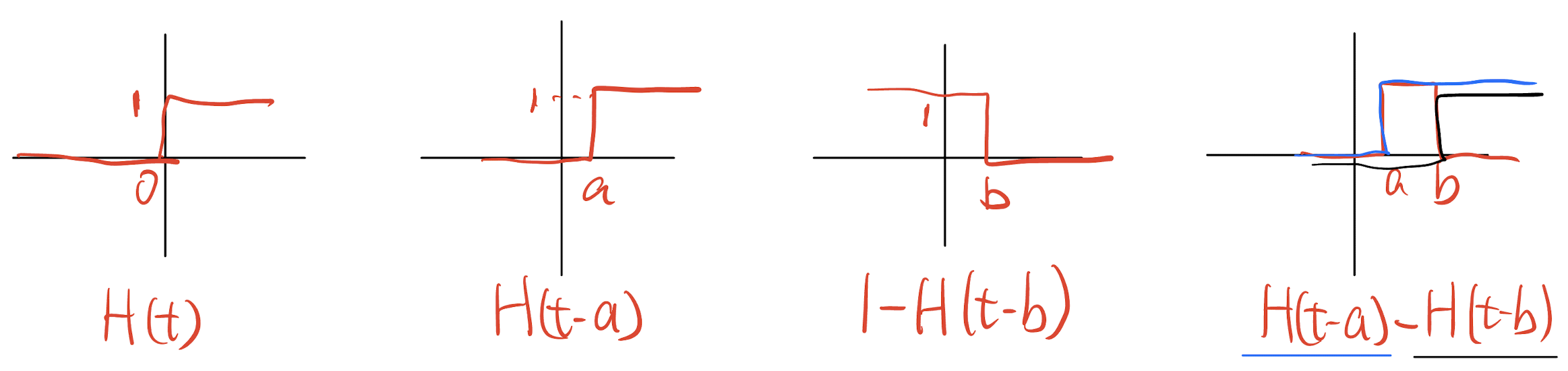

3.4.2 Transform of a Shift in t

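- Unit step function:

$$H(t-a)=\begin{cases}0, & t<a\\ 1, & t\ge a\end{cases}$$

- Theorem (for $a\ge 0$, with $F(s)=\mathcal{L}\{f(t)\}$):

$$\mathcal{L}\{f(t-a)H(t-a)\}=e^{-as}F(s)$$

or

$$\mathcal{L}^{-1}\{e^{-as}F(s)\}=f(t-a)H(t-a)$$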

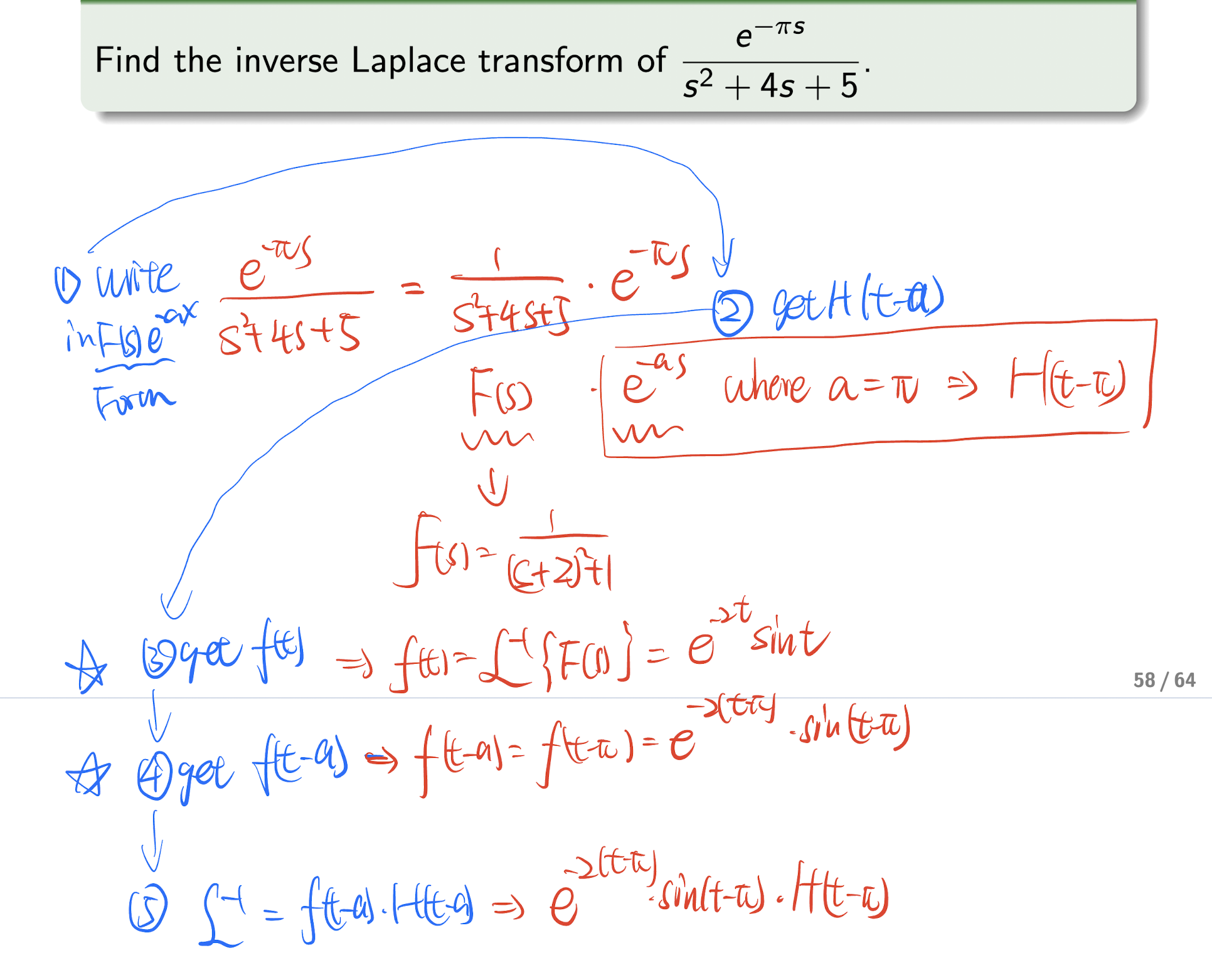

Steps for solving an inverse Laplace transform using this theorem:

- Write the function to be inverted in the form of $F(s)e^{-as}$;

- Identify the $F(s)$, $e^{-as}$, $a$, and get $H(t-a)$;

- Get $f(t)=\mathcal{L}^{-1}\{F(s)\}$.

- Get $f(t-a)$.

- Get the final answer $f(t-a)H(t-a)$.

[Example]

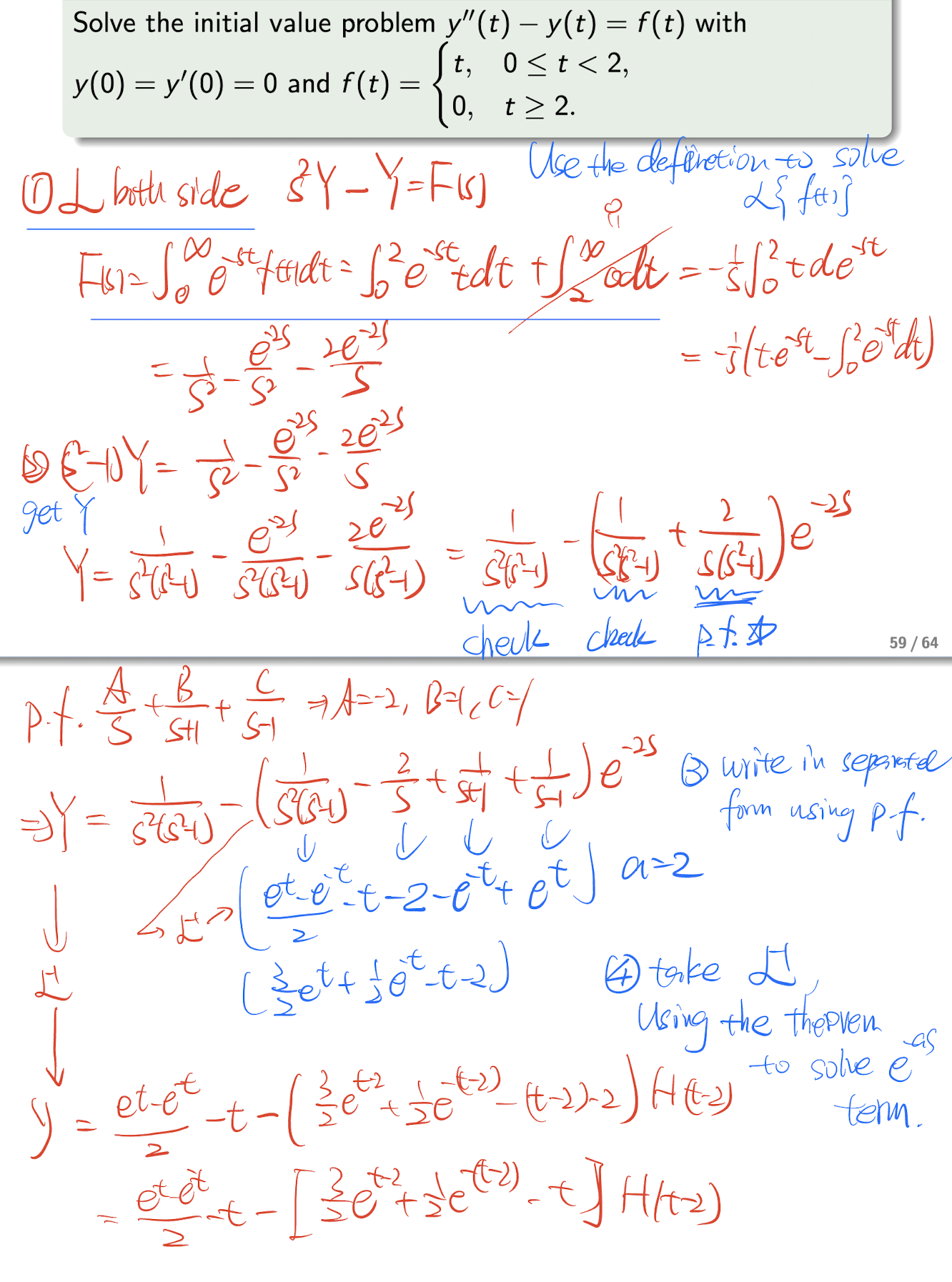

!!! note If a unit step function is encountered while solving $f(t)=\mathcal{L}^{-1}\{F(s)\}$, use the definition of the Laplace transform to solve it.

[Example]

4. Partial Differentiation

4.1 Partial Derivative

- Using the $\partial$ not $d$;

- Taking another variable as constant;

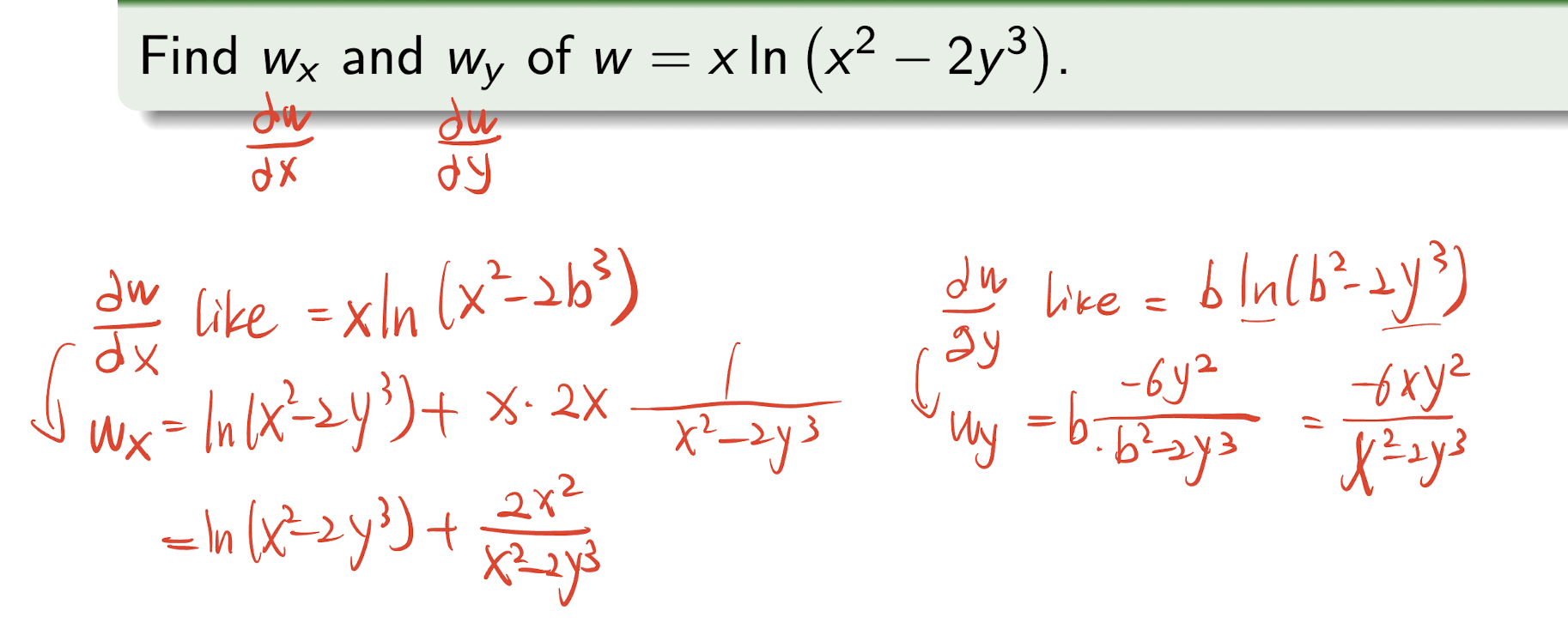

[Example]

4.1.1 Second Order Partial Derivative

If $w=f(x,y)$ is a $C^2$-function, then $w_{xy} = w_{yx}$

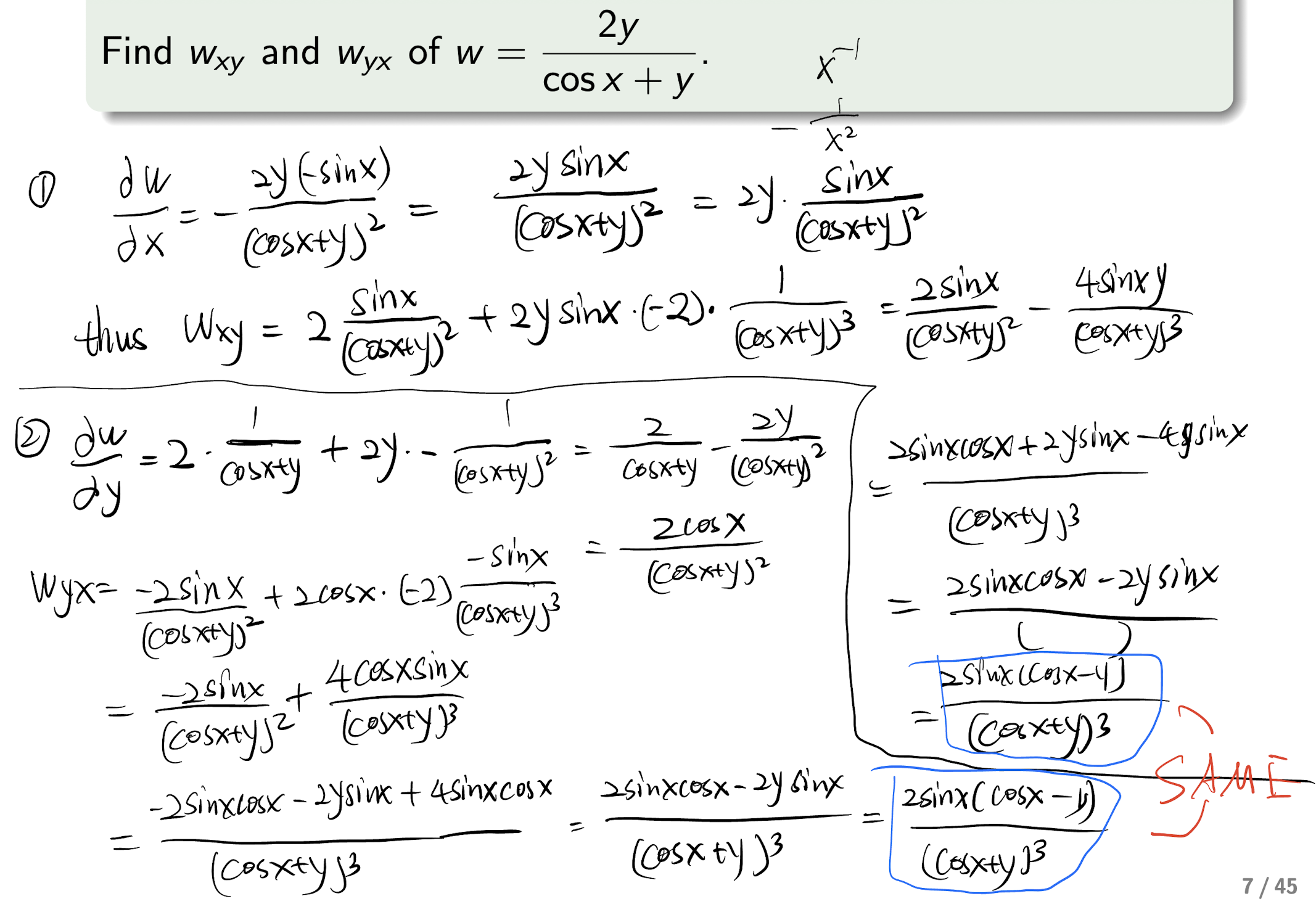

[Example]

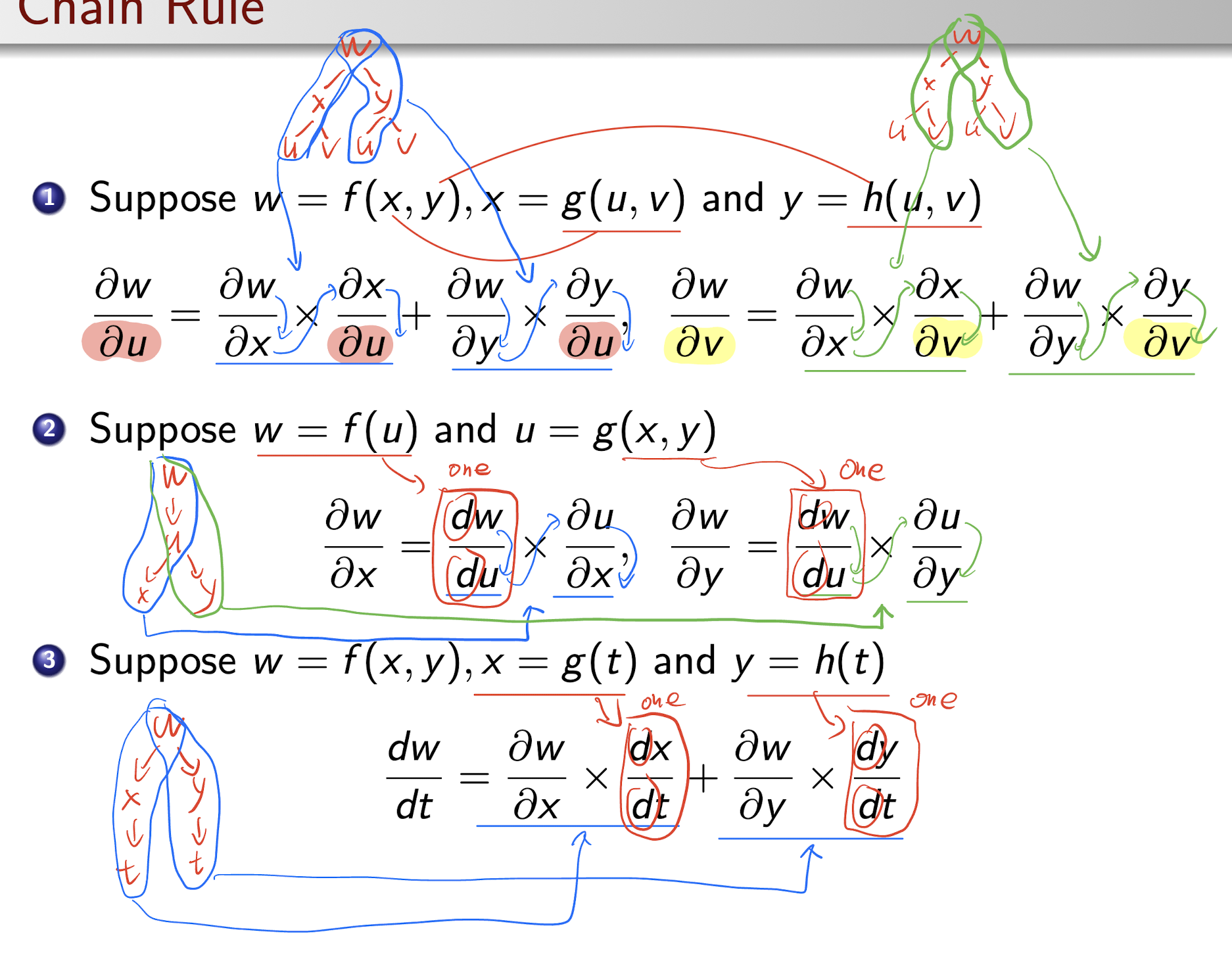

4.2 Chain Rule

- Draw the variable tree to analyze the chain rule;

- Change $\partial$ to $d$ when only ONE variable is involved.

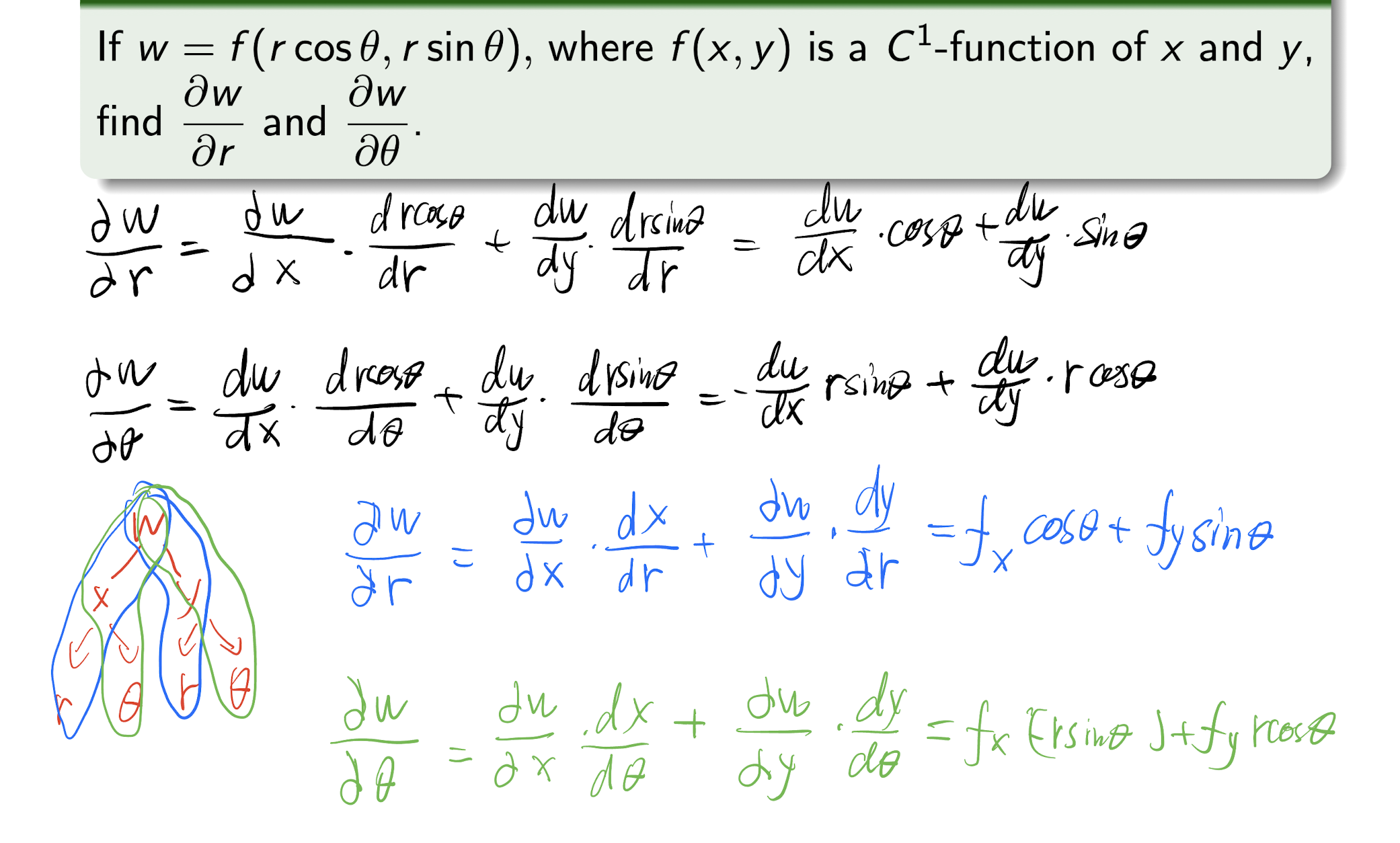

[Example]

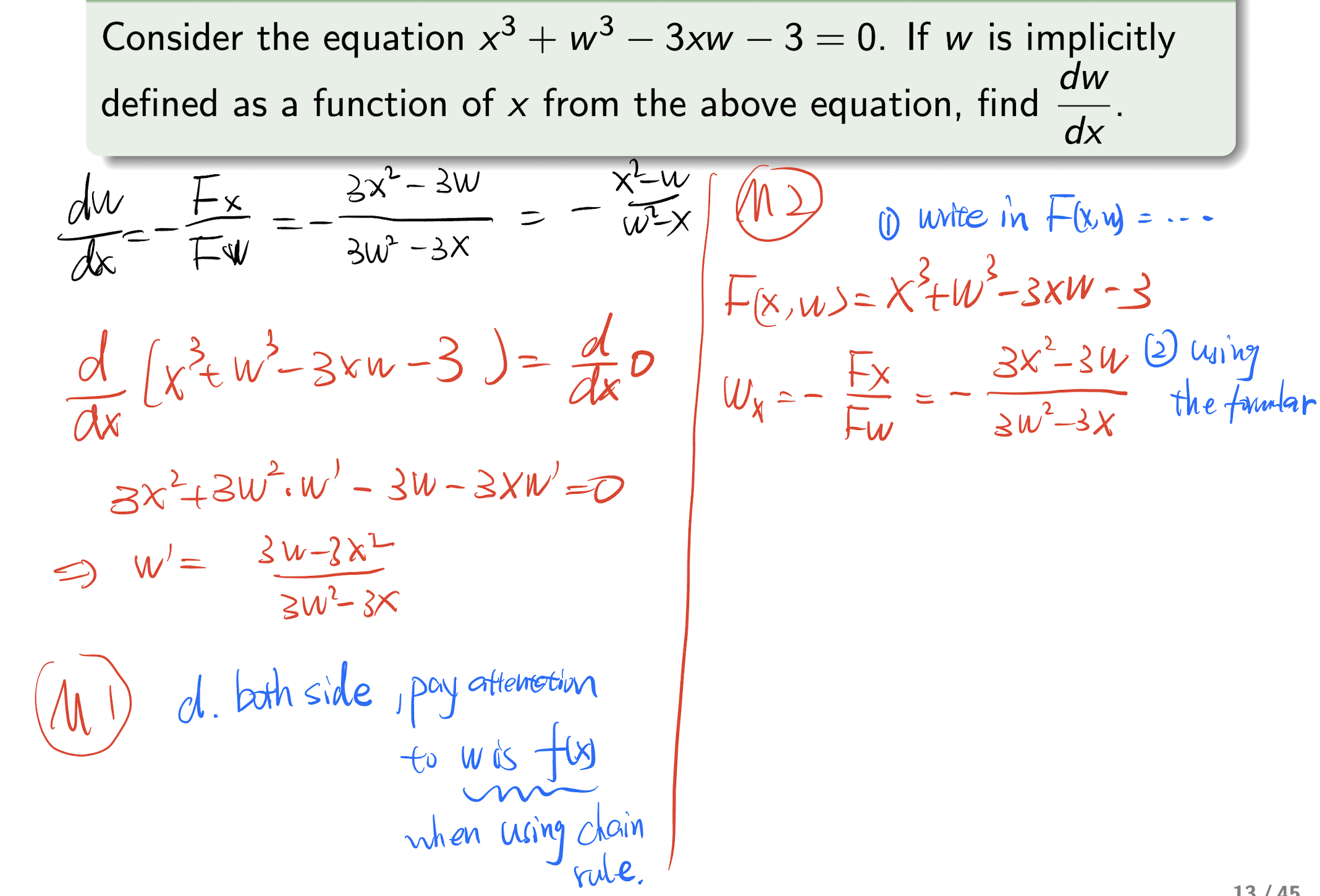

4.3 Implicit Differentiation

For $F(x,f(x))=0, f(x)=w$:

M1: differentiate both sides with respect to $x$:

$$F_x+F_w\frac{dw}{dx}=0$$

M2:

$$\frac{dw}{dx}=-\frac{F_x}{F_w},\quad F_w\neq 0$$

[Example]

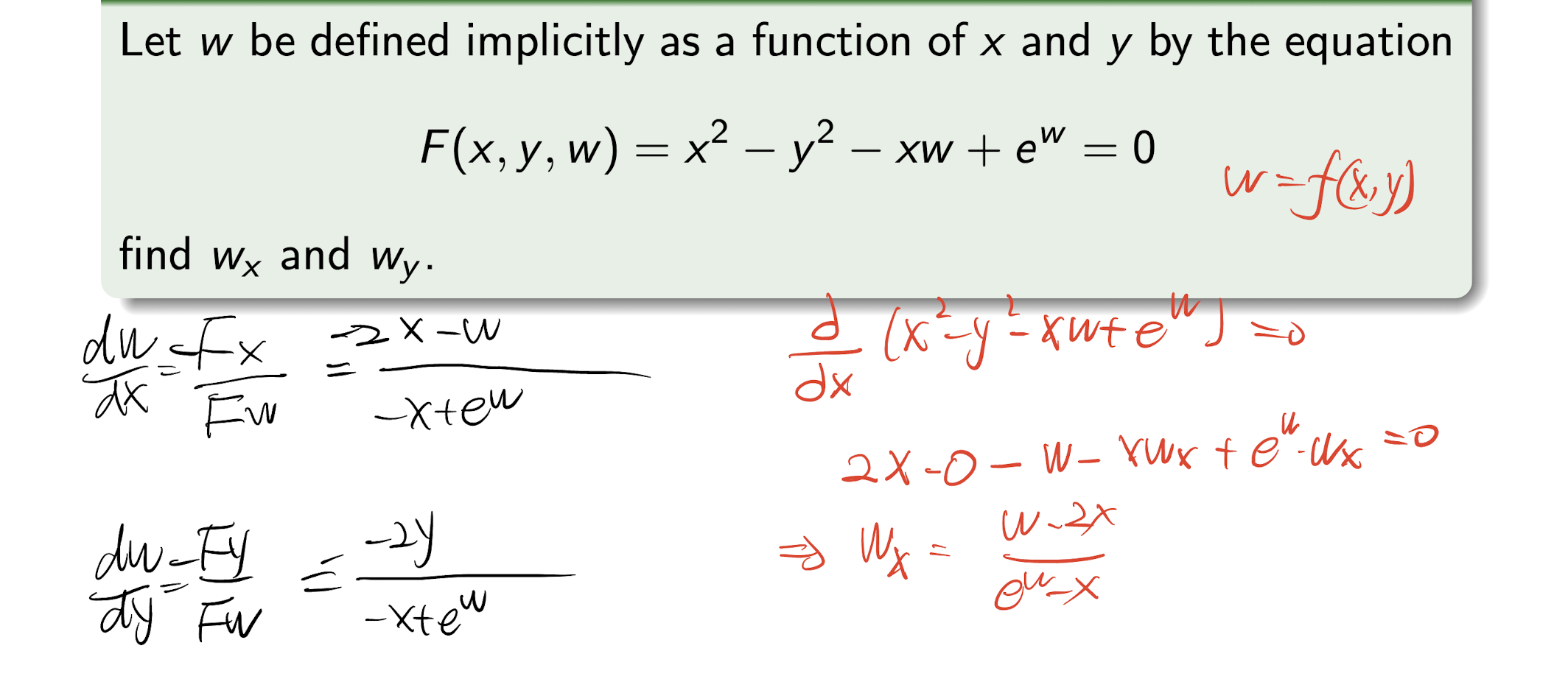

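For $F(x,y,f(x,y))=0, f(x,y)=w$:

M1: differentiate both sides with respect to $x$ (treating $y$ as a constant):

$$F_x+F_w\frac{\partial w}{\partial x}=0$$

M2:

$$\frac{\partial w}{\partial x}=-\frac{F_x}{F_w},\quad \frac{\partial w}{\partial y}=-\frac{F_y}{F_w},\quad F_w\neq 0$$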

[Example]

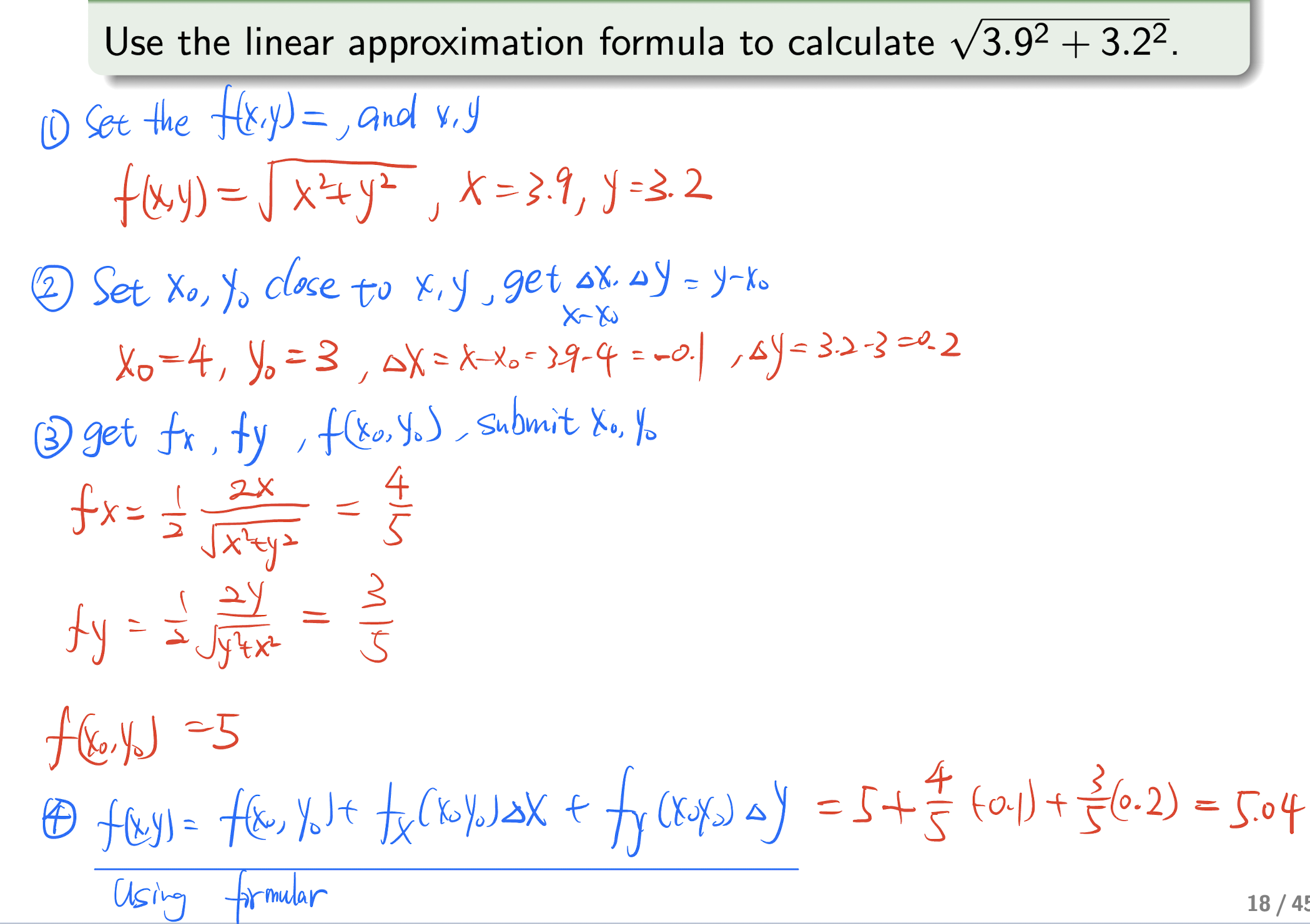

4.4 Linear Approximation

(Total Differential)

To get the value of $f(x,y)$:

$$f(x,y)\approx f(x_0,y_0)+f_x(x_0,y_0)\Delta x+f_y(x_0,y_0)\Delta y$$

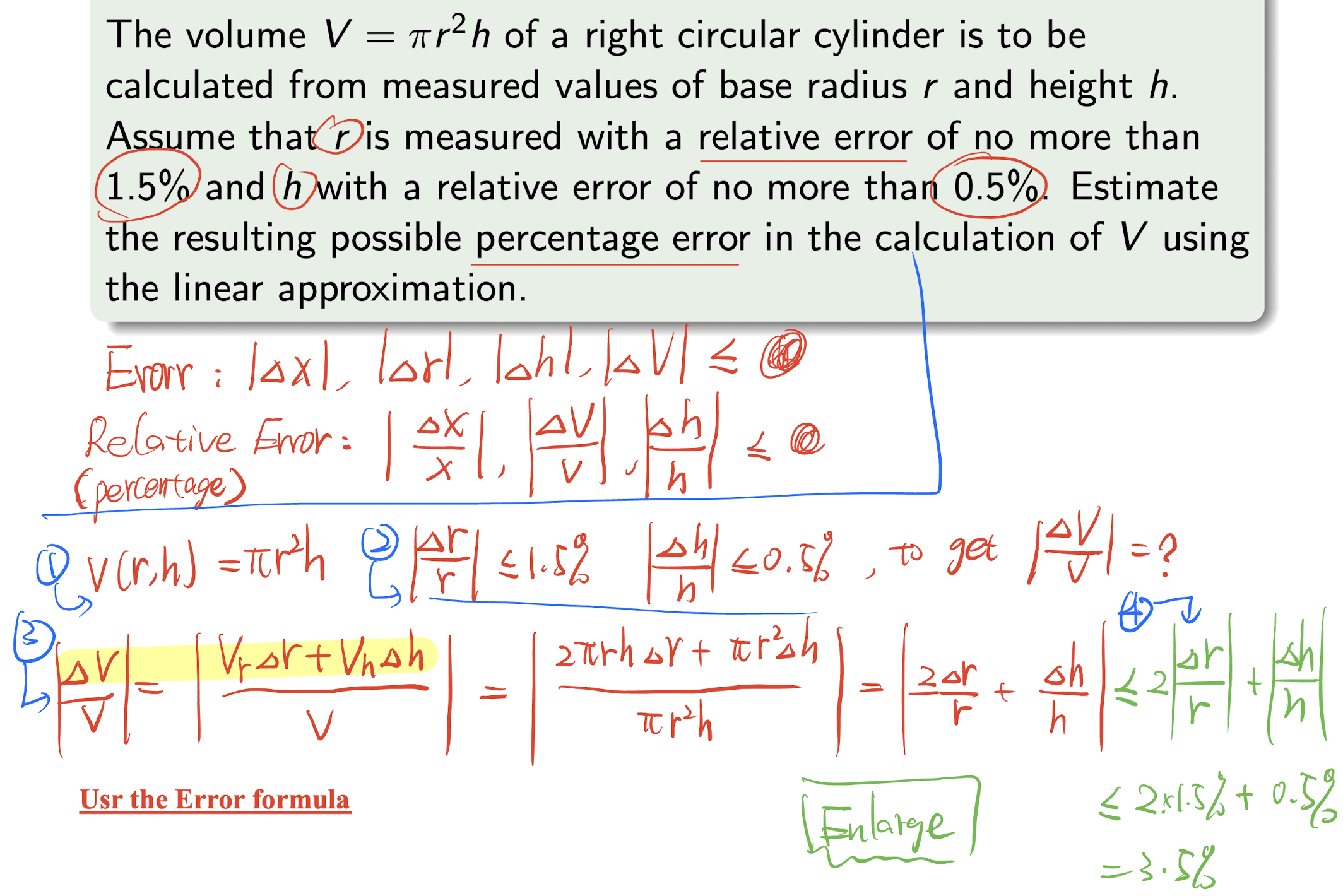

To get the error:

$$\Delta f\approx df=f_x\Delta x+f_y\Delta y$$

Steps:

- Set $f(x,y)$, get $x$, $y$ from the question;

- Set $x_0$, $y_0$ close to $x$, $y$, and get $\Delta x=x-x_0$, $\Delta y=y-y_0$;

- Compute $f$, $f_x$, $f_y$ at $(x_0,y_0)$;

- Substitute into the above formula to get $f(x,y)$.

[Example]
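The approximation steps can be tried on a concrete function. The sketch below is illustrative only: the function $f(x,y)=\sqrt{x^2+y^2}$, the base point $(3,4)$, and the helper name `linear_approx` are assumptions, and the partial derivatives are supplied by hand.

```python
import math


def linear_approx(f, fx, fy, x0, y0, x, y):
    """Approximate f(x, y) from values at a nearby point (x0, y0)."""
    dx, dy = x - x0, y - y0
    return f(x0, y0) + fx(x0, y0) * dx + fy(x0, y0) * dy


# Assumed example: f(x, y) = sqrt(x^2 + y^2) near (3, 4).
f = lambda x, y: math.sqrt(x * x + y * y)
fx = lambda x, y: x / math.sqrt(x * x + y * y)   # partial derivative in x
fy = lambda x, y: y / math.sqrt(x * x + y * y)   # partial derivative in y

approx = linear_approx(f, fx, fy, 3, 4, 3.02, 3.97)
exact = f(3.02, 3.97)
print(approx, exact)   # the two values agree to about three decimal places
```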

- Error: $|\Delta x|\le V$;

- Relative error: $|\frac{\Delta x}{x} |\le V$;

Steps:

- Get the function $f(x,y)$;

- Get the relative errors of the inputs and the target quantity;

- Apply the error formula;

- Enlarge: bound the result from above using the triangle inequality $|a+b|\le|a|+|b|$;

[Example]

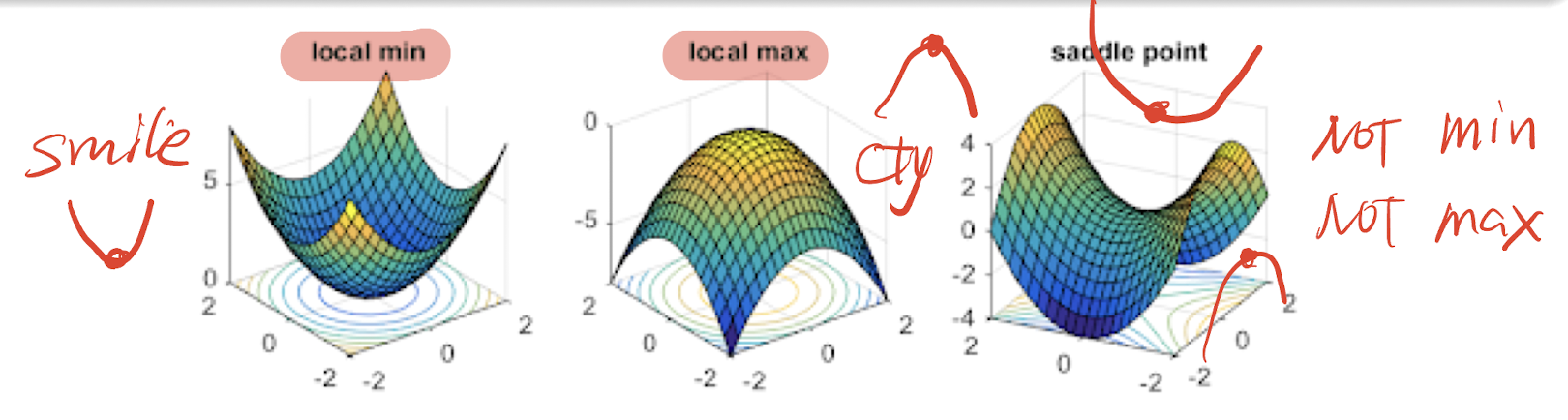

4.5 Relative Extremum

- relative minimum

- relative maximum

- saddle point

4.5.1 2nd Order Derivative Test

For $f(x,y)$ and a point $(x_0,y_0)$ such that

$$f_x(x_0,y_0)=0,\quad f_y(x_0,y_0)=0$$

this point is a stationary point of $f(x,y)$.

Then, with

$$H=f_{xx}f_{yy}-f_{xy}^2$$

evaluated at $(x_0,y_0)$:

- if $H>0$ and $f_{xx}>0$, $(x_0,y_0)$ is a relative minimum (all >: smile face $\cup$);

- if $H>0$ and $f_{xx}<0$, $(x_0,y_0)$ is a relative maximum (one <: cry face $\cap$);

- $H<0$: saddle point;

- $H=0$: inconclusive.

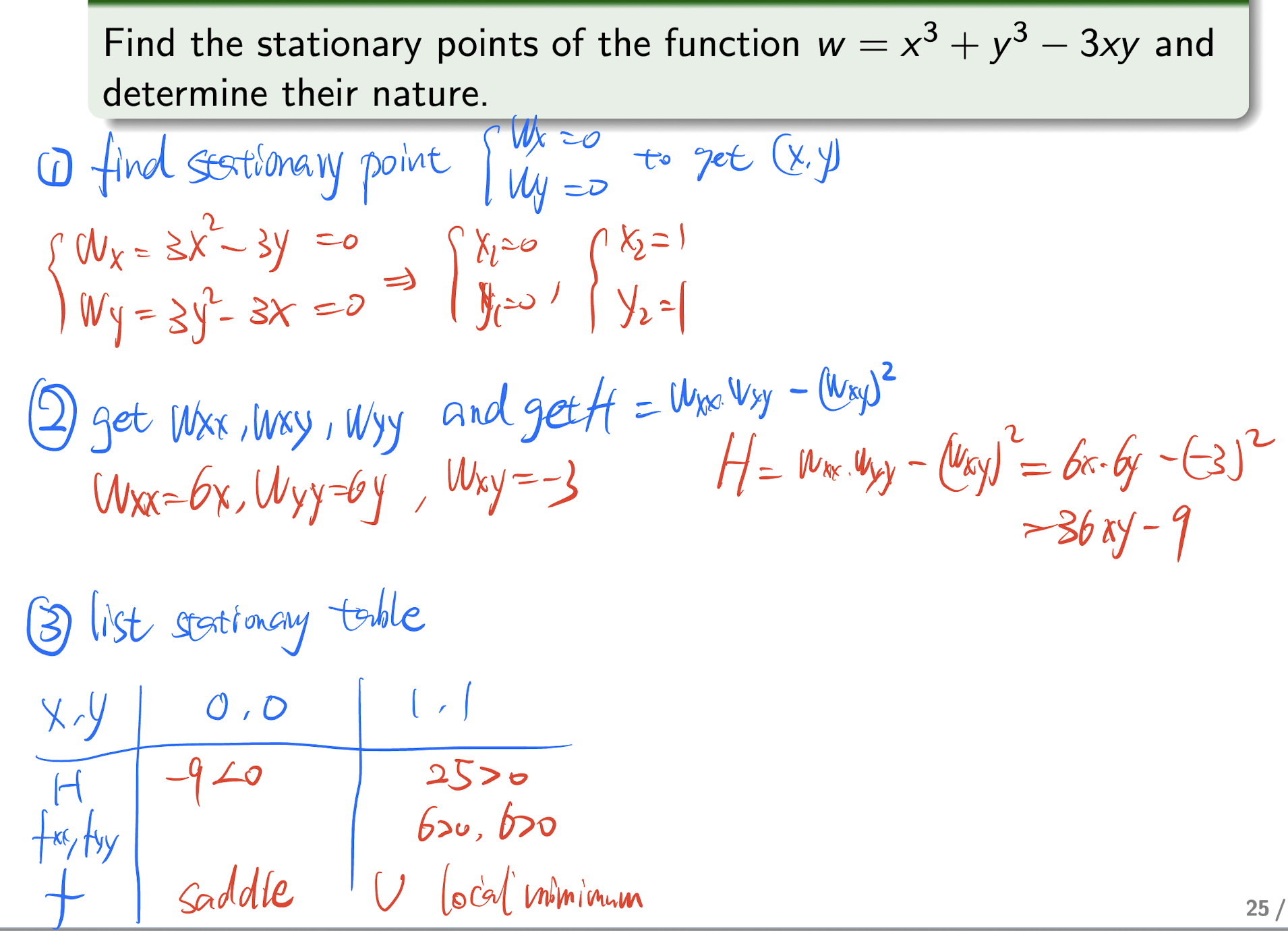

Steps:

- Find the stationary points: solve $f_x=0,f_y=0$ to get $(x_0,y_0)$;

- Get $f_{xx}$, $f_{yy}$, $f_{xy}$, and get $H=f_{xx}f_{yy}-f_{xy}^2$;

- List the stationary table:

| $x,y$ | $x_0, y_0$ | … |
|---|---|---|
| $H$ | >0 or <0 | … |
| $f_{xx}$ | >0 or <0 | … |
| $f$ | local minimum or maximum or saddle | … |

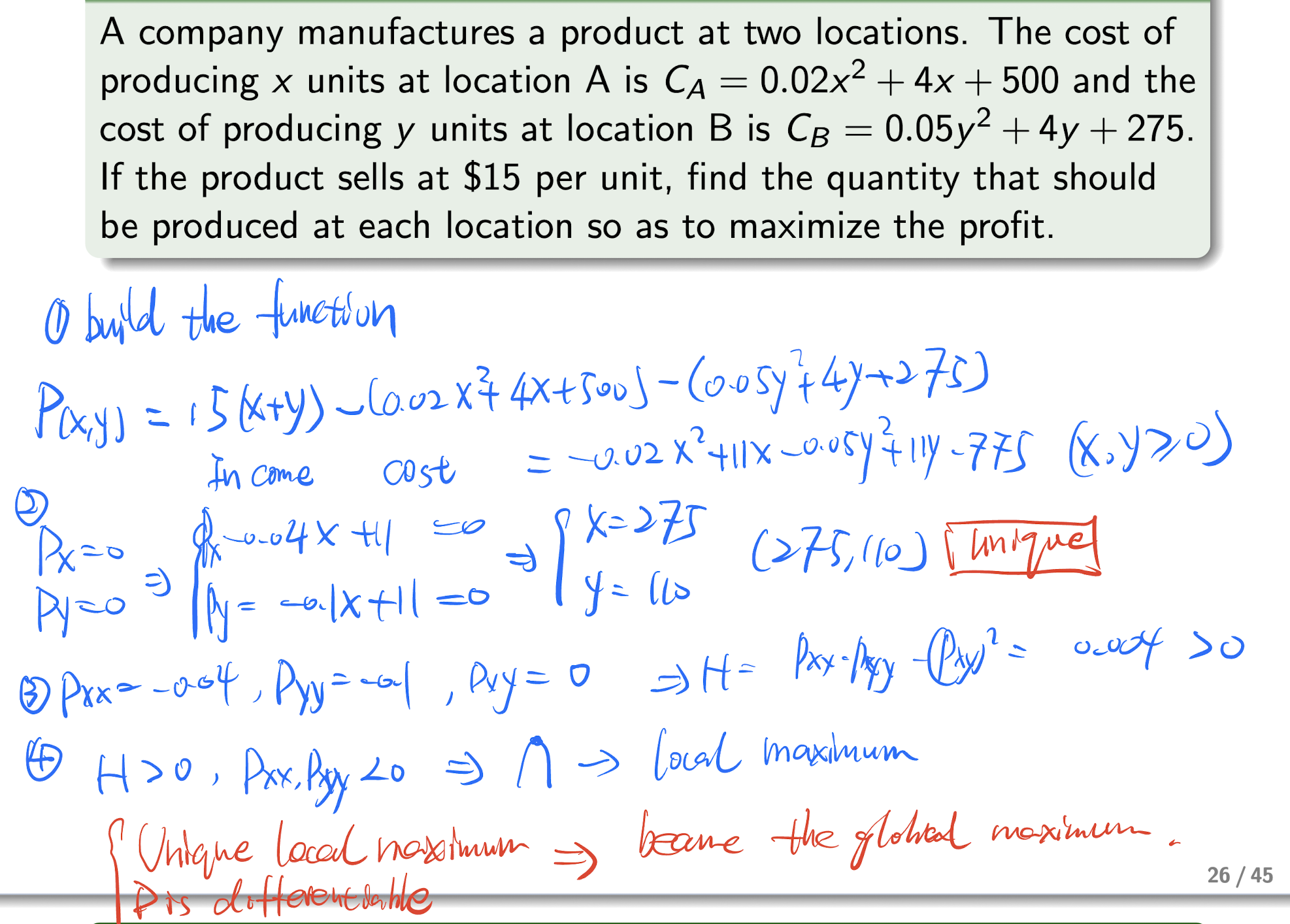

Note:

- Sometimes the function must be built from the problem statement first;

- If the stationary point is unique, the local extremum is taken as the global one.

[Example]

[Example]

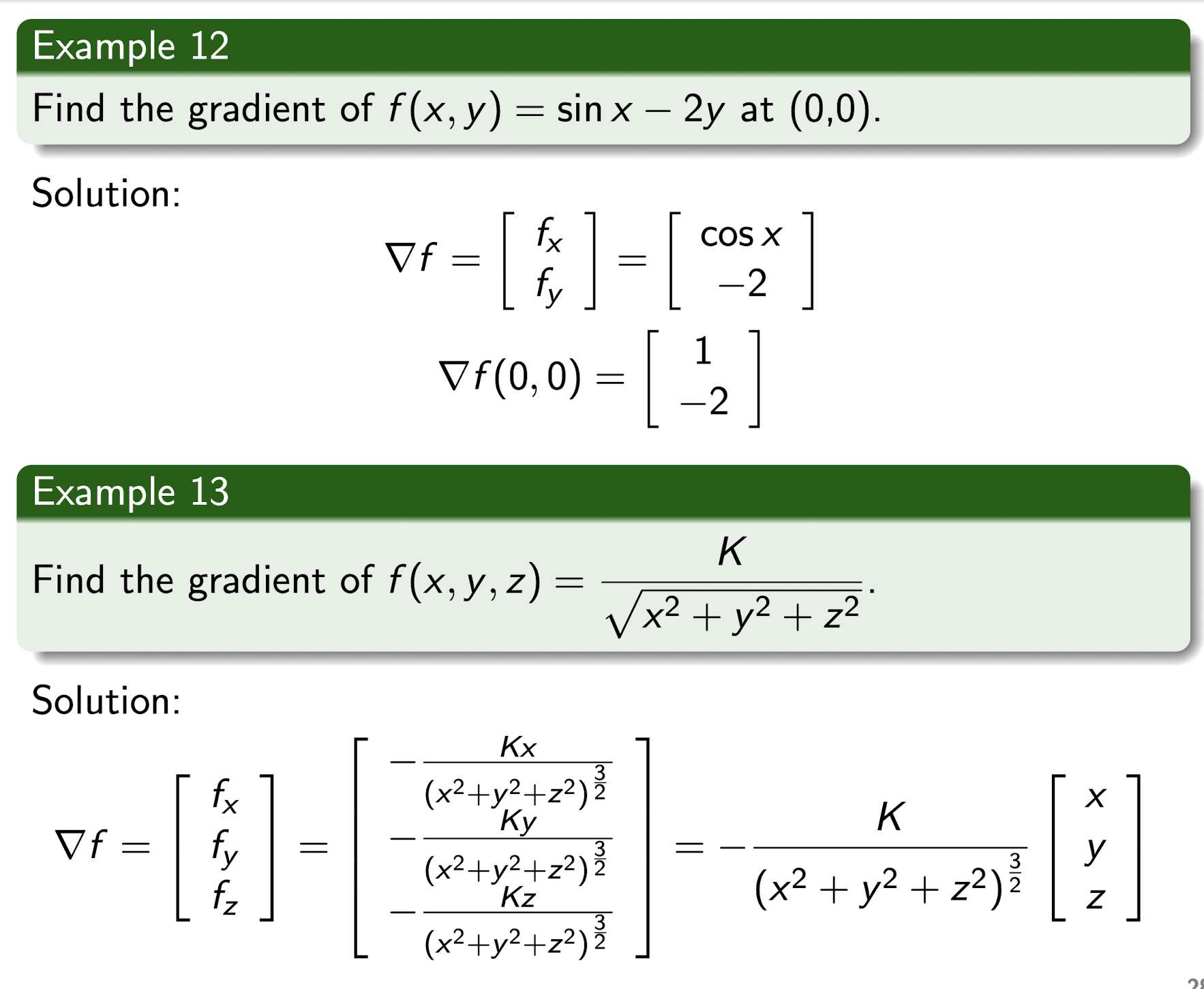
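The decision rule of the 2nd order derivative test is easy to encode. The sketch below takes the second partial derivatives at a stationary point as inputs; the function name `classify` and the sample function $f(x,y)=x^3-3x+y^2$ are assumptions for illustration.

```python
def classify(fxx: float, fyy: float, fxy: float) -> str:
    """Apply the 2nd order derivative test at a stationary point."""
    H = fxx * fyy - fxy ** 2
    if H > 0:
        return "relative minimum" if fxx > 0 else "relative maximum"
    if H < 0:
        return "saddle point"
    return "inconclusive"


# Assumed example: f(x, y) = x^3 - 3x + y^2 has stationary points
# (1, 0) and (-1, 0), with f_xx = 6x, f_yy = 2, f_xy = 0.
for x0, y0 in [(1, 0), (-1, 0)]:
    print((x0, y0), classify(6 * x0, 2, 0))
```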

4.6 Directional Derivative

4.6.1 Gradient

For $f(x,y,z)$, the gradient is:

$$\nabla f=\begin{bmatrix}f_x \\ f_y \\ f_z\end{bmatrix}$$

[Example]

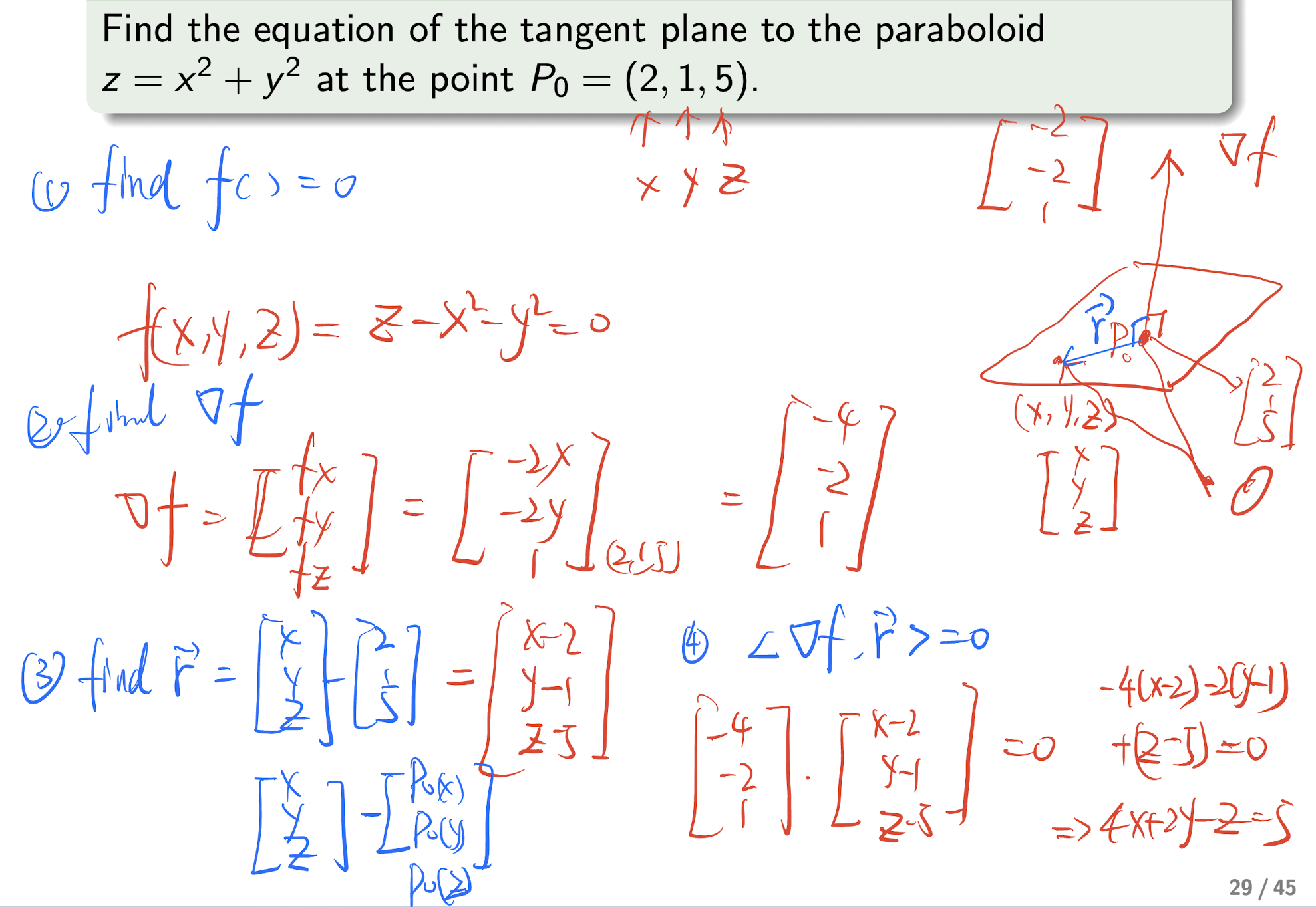

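Steps for finding the tangent plane:

- Write the surface in the form $f=0$; if $P_0=(a,b,c)$, then $f$ has 3 variables;

- Find the gradient $\nabla f$ at $P_0$;

- Find $\textbf{r}=P-P_0$, where $P=(x,y,z)$ is a general point on the plane;

- Let $\langle\nabla f, \textbf{r}\rangle=0$ to get the equation of the tangent plane;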

[Example]

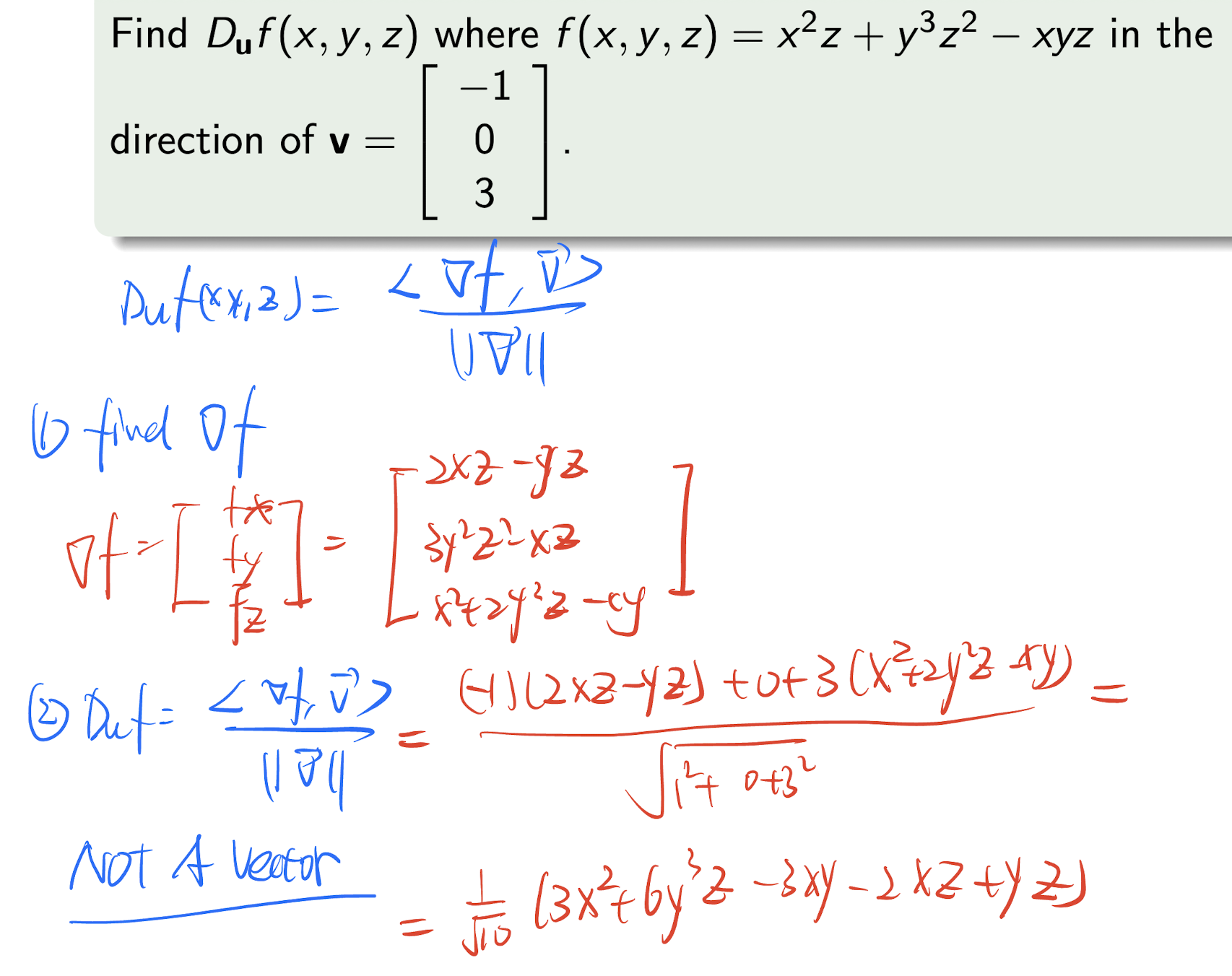

4.6.2 Directional Derivative

For $f(x,y,z)$, at a point $(x_0,y_0,z_0)$,

in the direction of a vector $\textbf{v}=\begin{bmatrix}a \\ b\\ c \end{bmatrix}$, the directional derivative is

$$D_{\textbf{u}}f(x_0,y_0,z_0)=\langle\nabla f(x_0,y_0,z_0),\textbf{u}\rangle$$

where $\textbf{u}=\frac{\textbf{v}}{||\textbf{v}||}$ is the unit vector in the direction of $\textbf{v}$.

Steps:

- Find $\nabla f$;

- Find the $D_{\textbf{u}}f(x_0,y_0,z_0)=\frac{\langle\nabla f(x_0,y_0, z_0), \textbf{v}\rangle}{||\textbf{v}||}$

[Example]

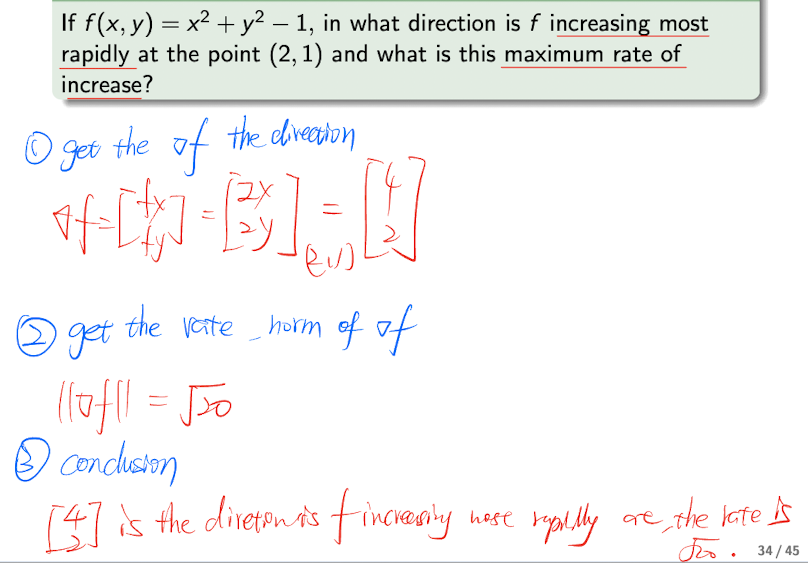
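The two steps can be sketched numerically. In the snippet below the function $f(x,y,z)=x^2+yz$, the point $(1,2,3)$, and the direction $(2,2,1)$ are assumed values for illustration, and the gradient is entered by hand.

```python
import math


def directional_derivative(grad, v):
    """Return <grad, v> / ||v|| for a gradient and a non-zero direction v."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    return sum(g * c for g, c in zip(grad, v)) / norm


# Assumed example: f(x, y, z) = x^2 + y*z at the point (1, 2, 3).
x0, y0, z0 = 1, 2, 3
grad = [2 * x0, z0, y0]            # (f_x, f_y, f_z) = (2x, z, y)
v = [2, 2, 1]                      # direction vector, ||v|| = 3
result = directional_derivative(grad, v)
print(result)
```

Dividing by $||\textbf{v}||$ inside the function means the caller does not need to normalize the direction first.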

4.6.3 Change Most Rapidly

- Increase most rapidly, max rate of change ($D_{\textbf{u}}f$): direction $\nabla f$, rate $||\nabla f||$

- Decrease most rapidly, min rate of change ($D_{\textbf{u}}f$): direction $-\nabla f$, rate $-||\nabla f||$

Steps:

- Get the $\nabla f$, the direction;

- Get the rate, $||\nabla f||$;

- Conclusion:

$\nabla f$ ($-\nabla f$) is the direction in which $f$ increases (decreases) most rapidly at the point $(x,y)$, and the rate of change is $||\nabla f||$ ($-||\nabla f||$).

[Example]

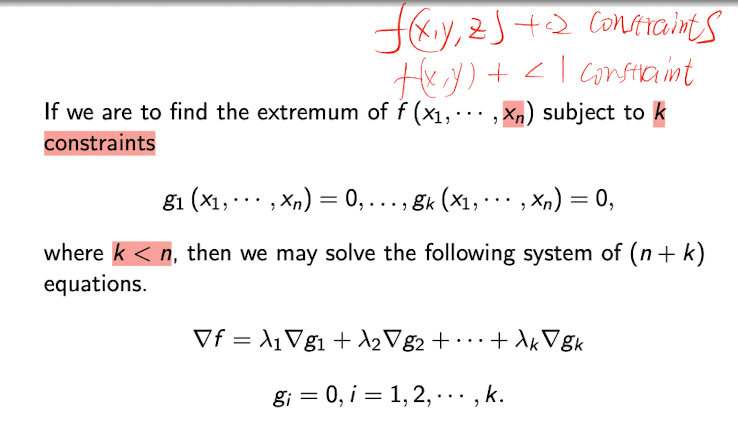

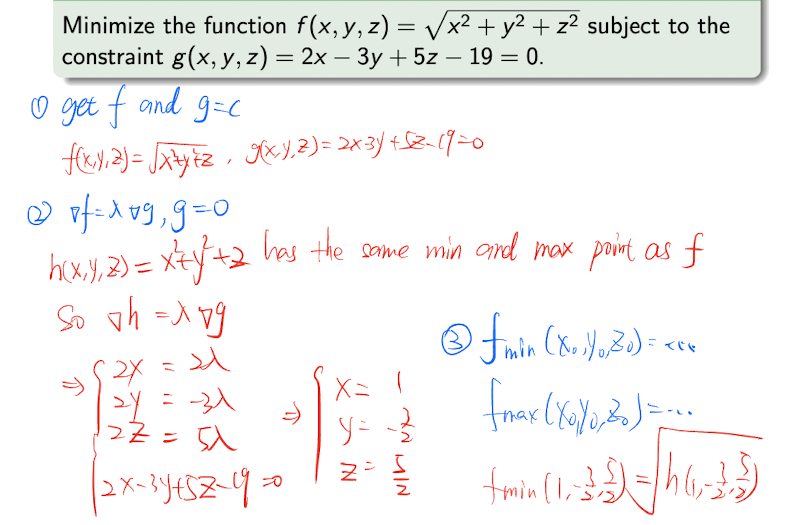

4.6.4 Lagrange Multipliers

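If the function $f(x,y)$, subject to the constraint $g(x,y)=0$, has a constrained stationary point $(x_0,y_0)$ with $\nabla g(x_0,y_0)\neq\bold{0}$, then it satisfies:

$$\nabla f(x_0,y_0)=\lambda\nabla g(x_0,y_0)$$

where $\lambda$ is called the Lagrange multiplier.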

4.6.4.1 General Lagrange Multipliers Method

Steps:

- Identify $f$ and the constraint, and write the constraint in the form $g=0$;

- List $\nabla f=\lambda \nabla g,\ g=0$;

- Solve the system, and get

or

[Example]

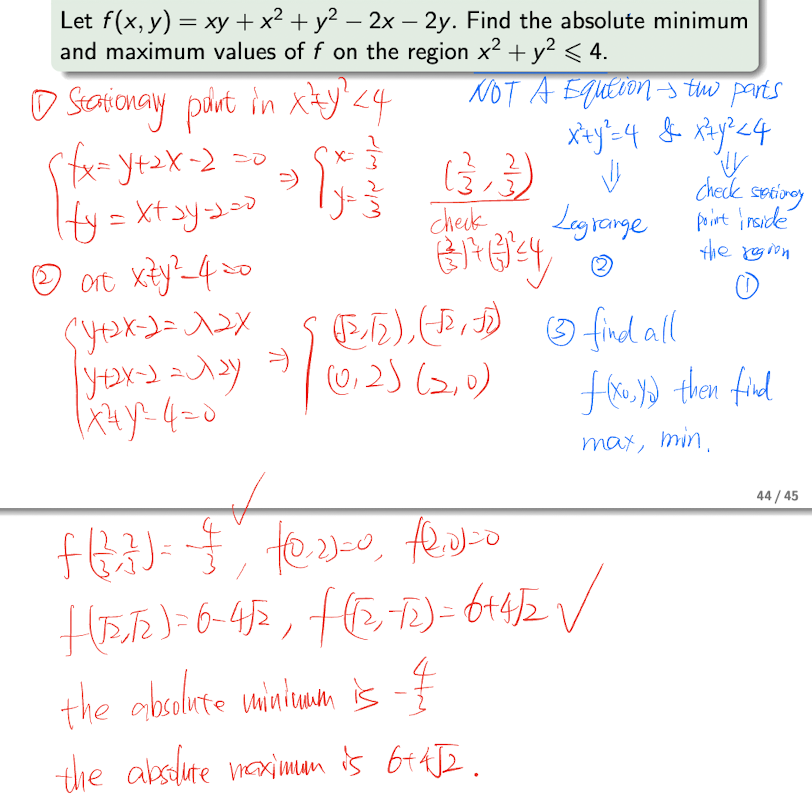

4.6.5 Absolute Extremum

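Separate the region into two parts, the boundary (equation) and the interior (inequality),

- then apply the Lagrange Multipliers Method to the equation part,

- and find the stationary points in the inequality part;

- finally compare the values of $f$ at all candidate points: the largest is the absolute maximum and the smallest is the absolute minimum.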

[Example]

References

Slides of AMA2111 Mathematics I, The Hong Kong Polytechnic University.

Please credit the source when reposting.
