Computer Systems Security Course Note

Made by Mike_Zhang

Notice | 提示

PERSONAL COURSE NOTE, FOR REFERENCE ONLY

Personal course note of COMP3334 Computer Systems Security, The Hong Kong Polytechnic University, Sem2, 2023/24.

Mainly focus on Cryptography, Password Security , Authentication Protocols, Web Security, and App Security.个人笔记,仅供参考

本文章为香港理工大学2023/24学年第二学期 计算机系统安全(COMP3334 Computer Systems Security) 个人的课程笔记。

Unfold Study Note Topics | 展开学习笔记主题 >

1. Intro

1.2 Shifting mentality

- In prior (and future) courses, you learned (will learn) how things work and how to make them work

- Security is not yet another component that you need to learn how to operate

- It is baked inside everything you do

- You will reconsider how you learned the most basic things with a new perspective

1.3 Security pushes normal/expected boundaries

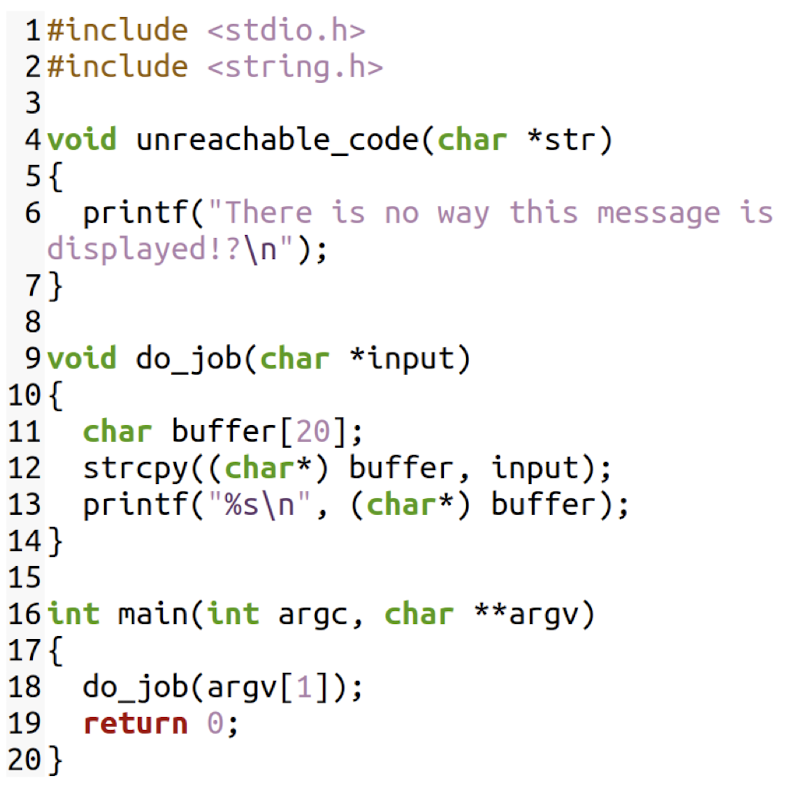

What is this C program supposed to do?

Can the function

unreachable_codebe reached?DEMO

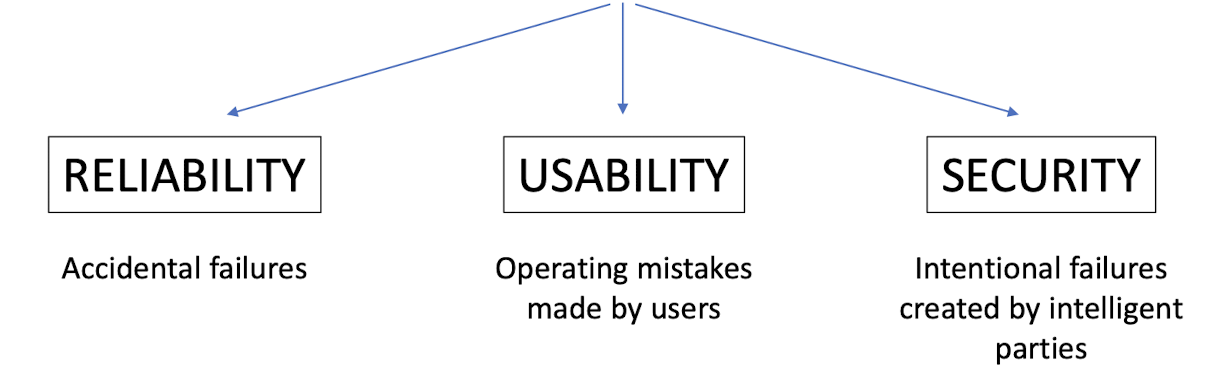

1.4 Failure

Systems may fail for many reasons

1.5 Security vs. correctness

- Security is difficult

System correctness: system satisfies specification

- For reasonable input, get reasonable output

System security: system properties preserved in face of attack

- For unreasonable input, output not completely disastrous

- Main difference: active interference from adversary

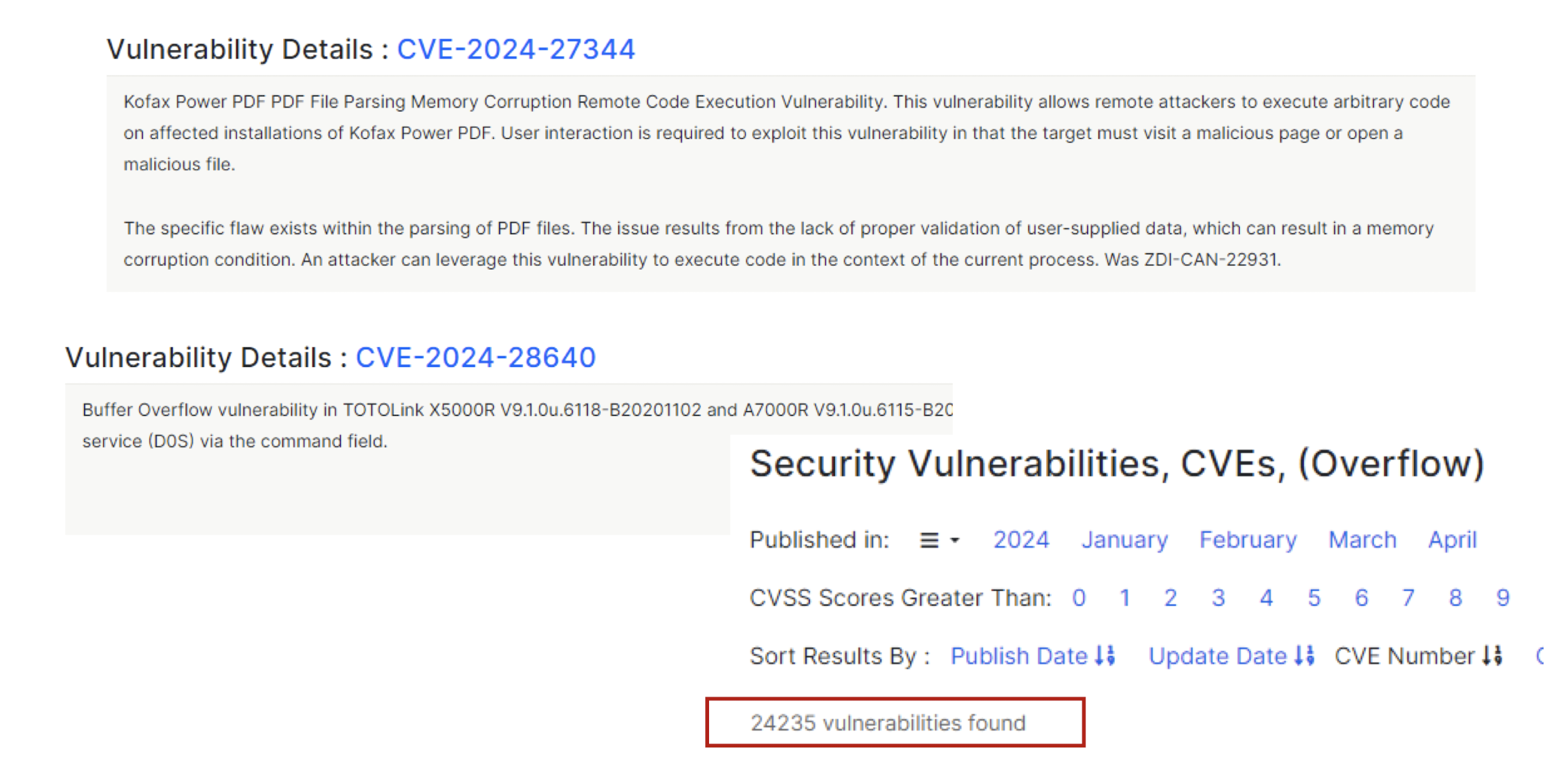

1.6 Bad news for security and privacy…

Security often not a primary consideration

- Performance and usability take precedence

Feature-rich systems may be poorly understood

- How many lines of code in Linux? Windows?

Implementations are buggy

Many attacks are non-technical in nature

- Phishing, social engineering, etc.

- Understand human aspects, psychology

1.7 What is cybersecurity?

The approach and actions associated with security risk management processes followed by organizations and states to protect confidentiality, integrity and availability (CIA) of data and assets used in cyber space. The concept includes guidelines, policies and collections of safeguards, technologies, tools and training to provide the best protection for the state of the cyber environment and its users.

Schatz, D., Bashroush, R., & Wall, J. (2017). Towards a more representative definition ofcyber security. Journal of Digital Forensics, Security and Law , 12 (2), 8.

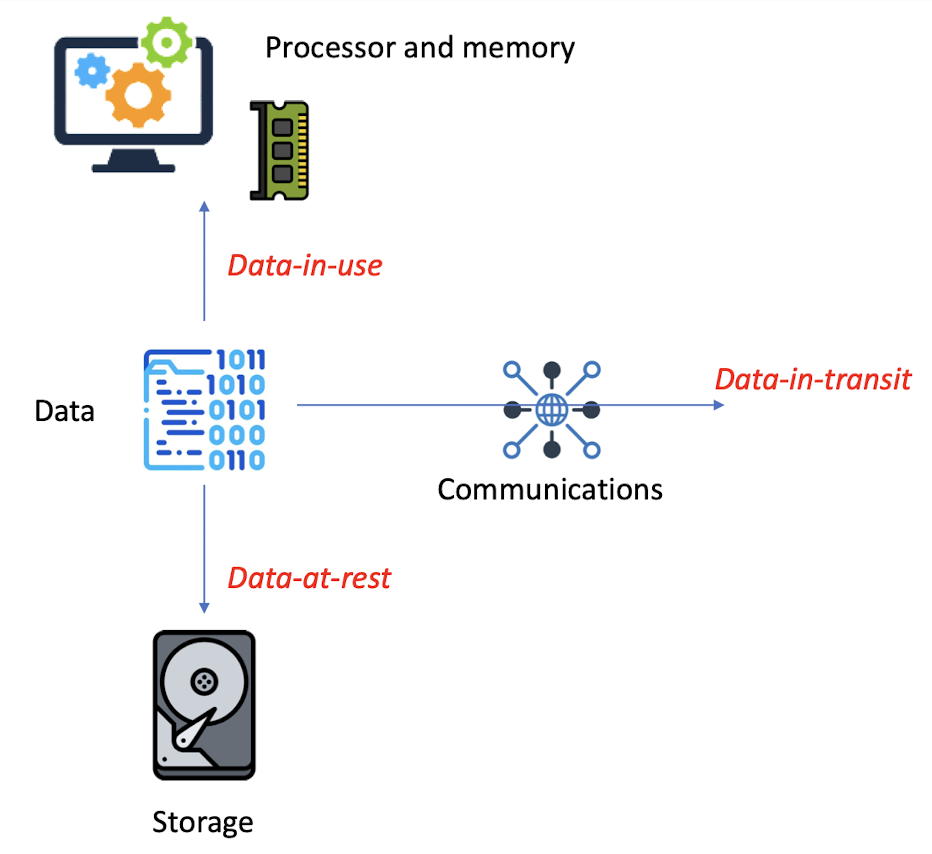

1.8 Different states of data

1.9 Confidentiality

Protecting data against unintentional, unlawful, or unauthorized access, disclosure, or theft

Mechanisms:

- Encryption

- Access control (Passwords, IP, …)

- Physical security

- Secure disposal

1.10 Integrity

Protecting data against improper maintenance, modification, or alteration (also includes data authenticity)

Mechanisms:

- Access control

- Logging/auditing

- Backupss

- Validation (hashing)

1.11 Availability

Protecting the accessibility and continuity of access to information

Mechanisms:

- Backups

- Redundant systems

- Monitoring

- Secure disposal (want to delete it)

1.12 Systems security

Information is manipulated by systems, they need to be secured to protect CIA goals

- Hardware

- Processors, secure elements, networking equipment

- Software

- Operating systems, applications, libraries

- Human operators

- Developers, end users

- Supply chain…

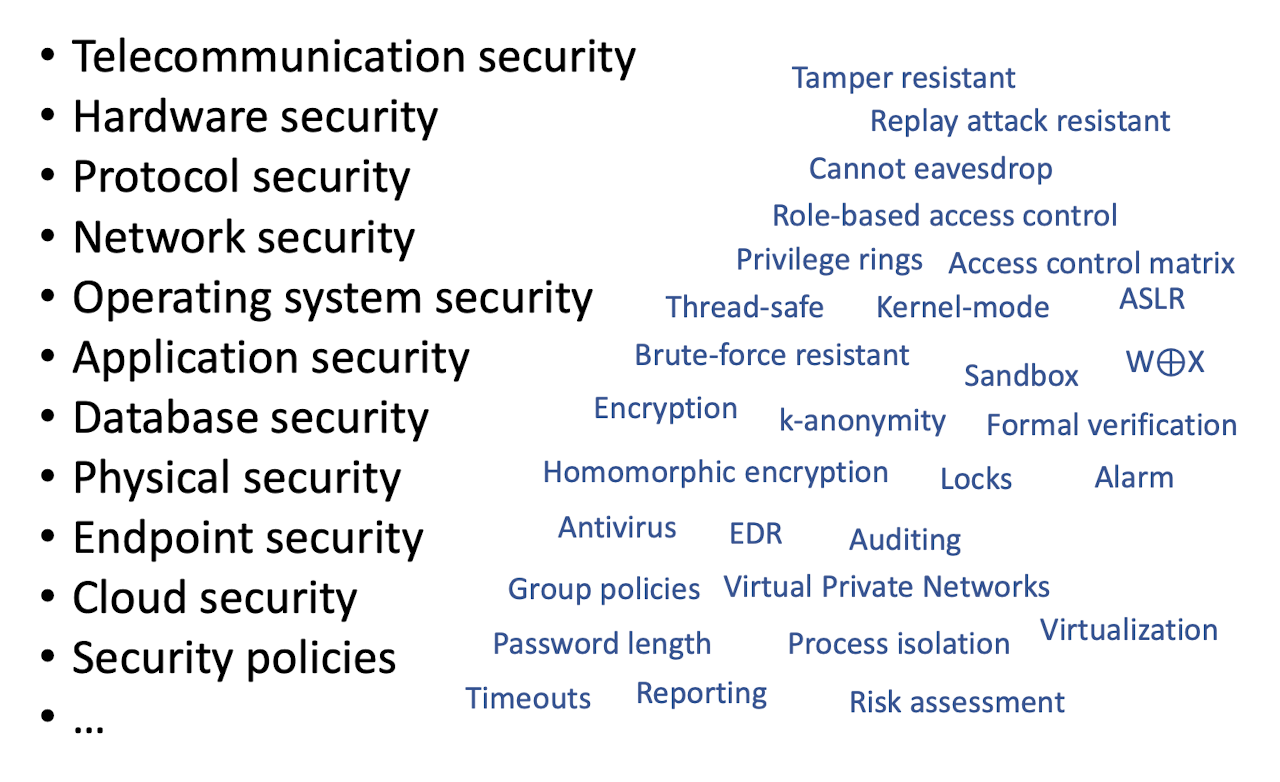

1.13 Everything security, and mechanisms

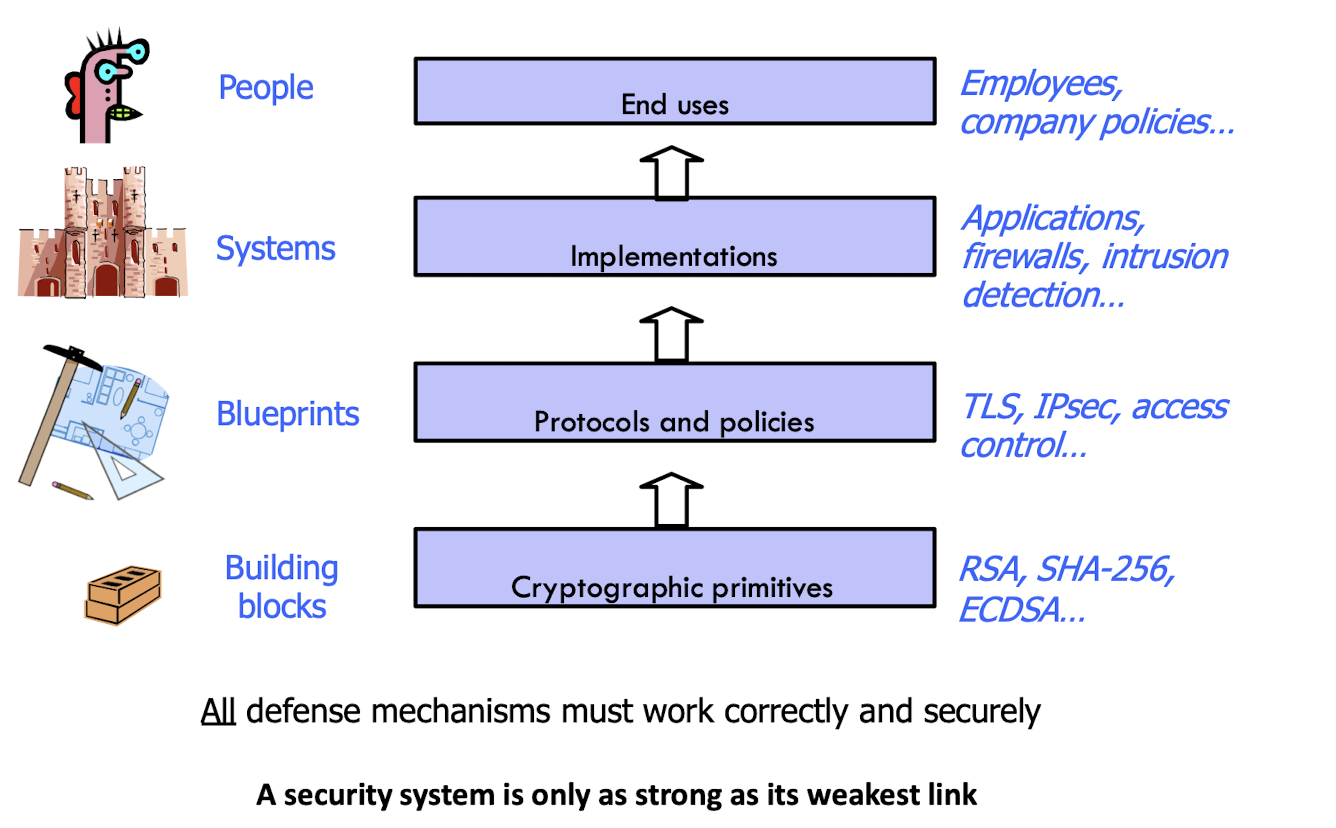

1.14 Security stack

A security system is only as strong as its weakest link

1.15 Security is based on trust

Is a system secure?

- For what?

- On which assumptions?

- That depends on what you trust

What can you trust?

What do you trust?

1.16 Trust what?

- Do you trust your operating system (OS)?

- Windows/Linux/macOS/Android/iOS

- Does your OS send your secrets to Microsoft/Apple?

- Does your OS send your password to someone?

- Does your OS have a special backdoor for “special users”?

- Where did you obtain your computer/OS?

- Did you compile the sources of your Linux kernel by yourself?

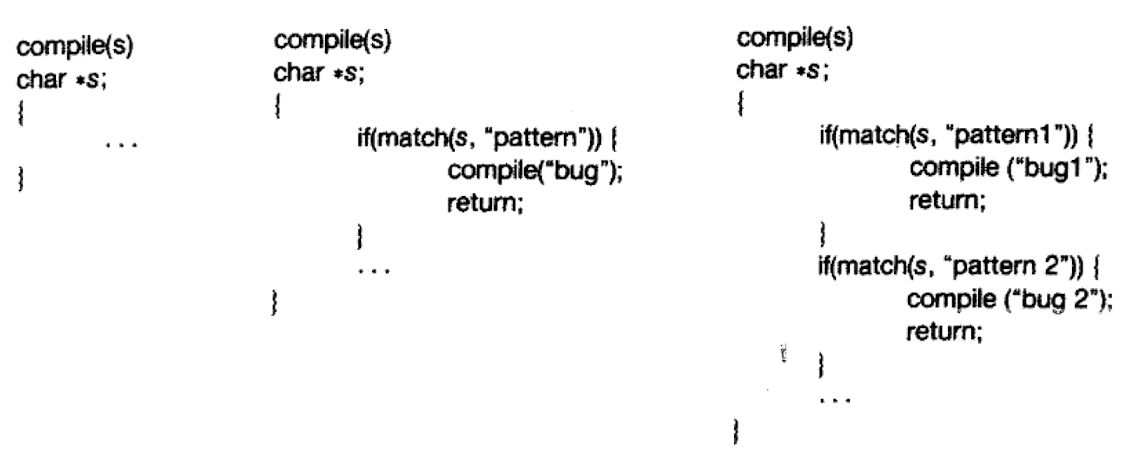

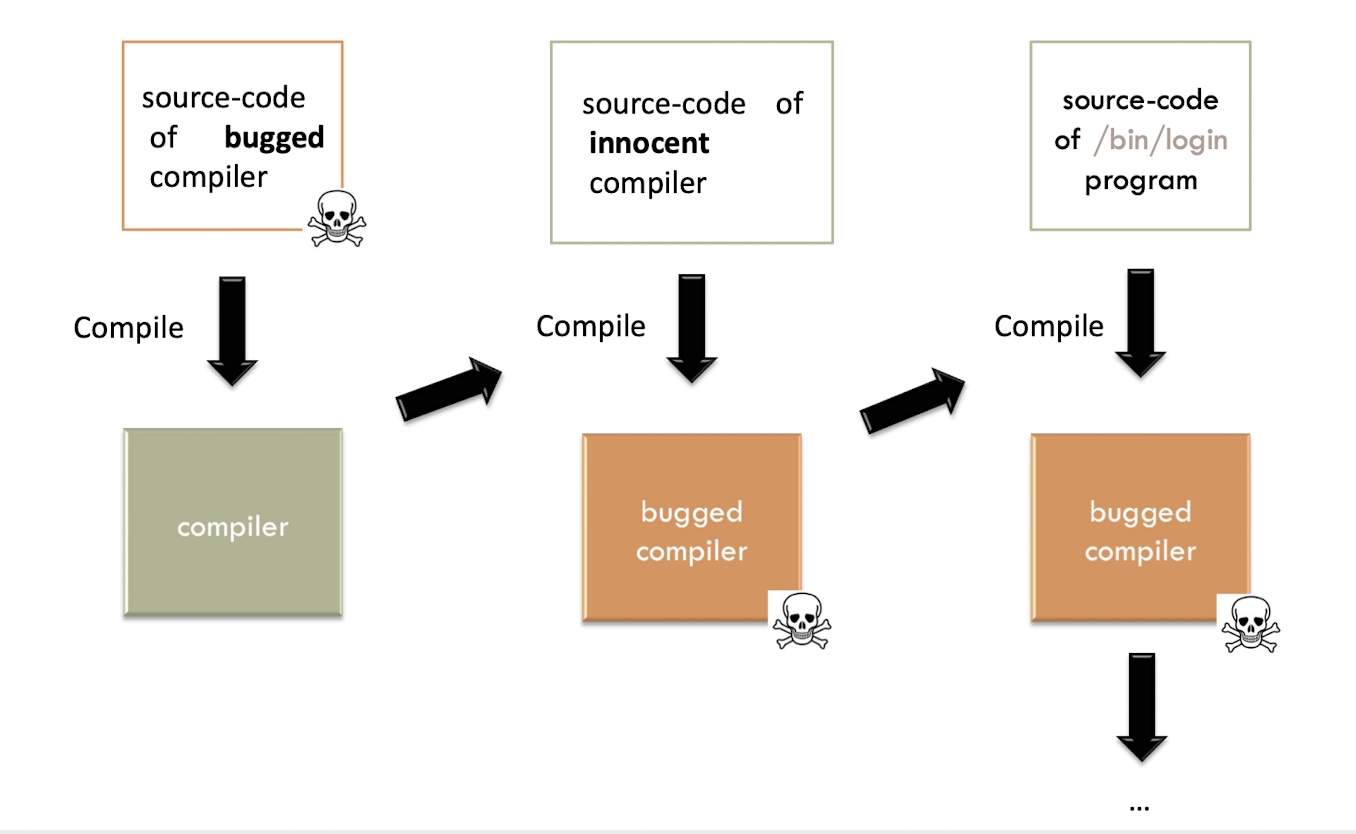

1.17 Trust the compiler?

- Who wrote the compiler?

Consider a “backdoored” compiler:

- Compiler looks for source code that is in charge of the login process, inserts backdoor into it

OK, inspect the source code of the compiler… Looks good? Recompile the compiler!

- Does this solve the problem?

Trust?

- By Ken Thompson

- Turing Award

- Invented the B programming language

- Creator of the UNIX operating system with Dennis Ritchie (1941-2011)

1.18 Reflections on Trusting Trust

Turing Award Lecture

- Communications of the ACM (August 1984)

- https://dl.acm.org/doi/abs/10.1145/358198.358210

The C compiler is written in C.

- A bugged compiler

1.19 What happens?

1.20 Moral of the story

“The moral is obvious.You can’t trust code that you did not totally create yourself. (Especially code from companies that employ people like me.) “

“No amount of source-level verification or scrutiny will protect you from using untrusted code. “

1.21 Is this a real problem?

Yes, it happened to a Delphi compiler in 2009!

Win32/Induc-A

What about nowadays? Who knows…

1.22 Other concerns

- Do you trust your processor?

- Cryptographic operations within your processor?

- AES-NI, RDRAND

- Firmware/BIOS?

- Hardware-level remote management

- Intel vPro, AMT

- Cryptographic software code?

1.23 Trustworthiness

Trustworthiness: A characteristic of an entity that reflects the degree to which that entity is deserving of trust

A system/tool/person/organization might be trusted for multiple reasons, but is it trustworthy?

- Transparency

- Benevolence/goodwill

- Integrity

- Track record

- Integrity

- Verification

1.24 Threat modeling

1.24.1 Threat modeling

- Understand what you are trying to protect, and against whom you wish to protect it

- The reason why you need the protection

Threat modeling can find security strengths and weaknesses, discover vulnerabilities, and provide feedback into the security life cycle of a product

Which threats?

- Only consider dangers that are reasonably likely to occur

- Subjective

- Look for likely attackers and patterns that allow the mitigation of a bunch of different attacks by defeating the techniques that they all have in common

- Only consider dangers that are reasonably likely to occur

1.24.2 Example of threat model

Threat: Ex-girlfriend/boyfriend breaking into your email account and publicly releasing your correspondence with the My Little Pony fan club

Solution: Strong passwords, two-factor authentication

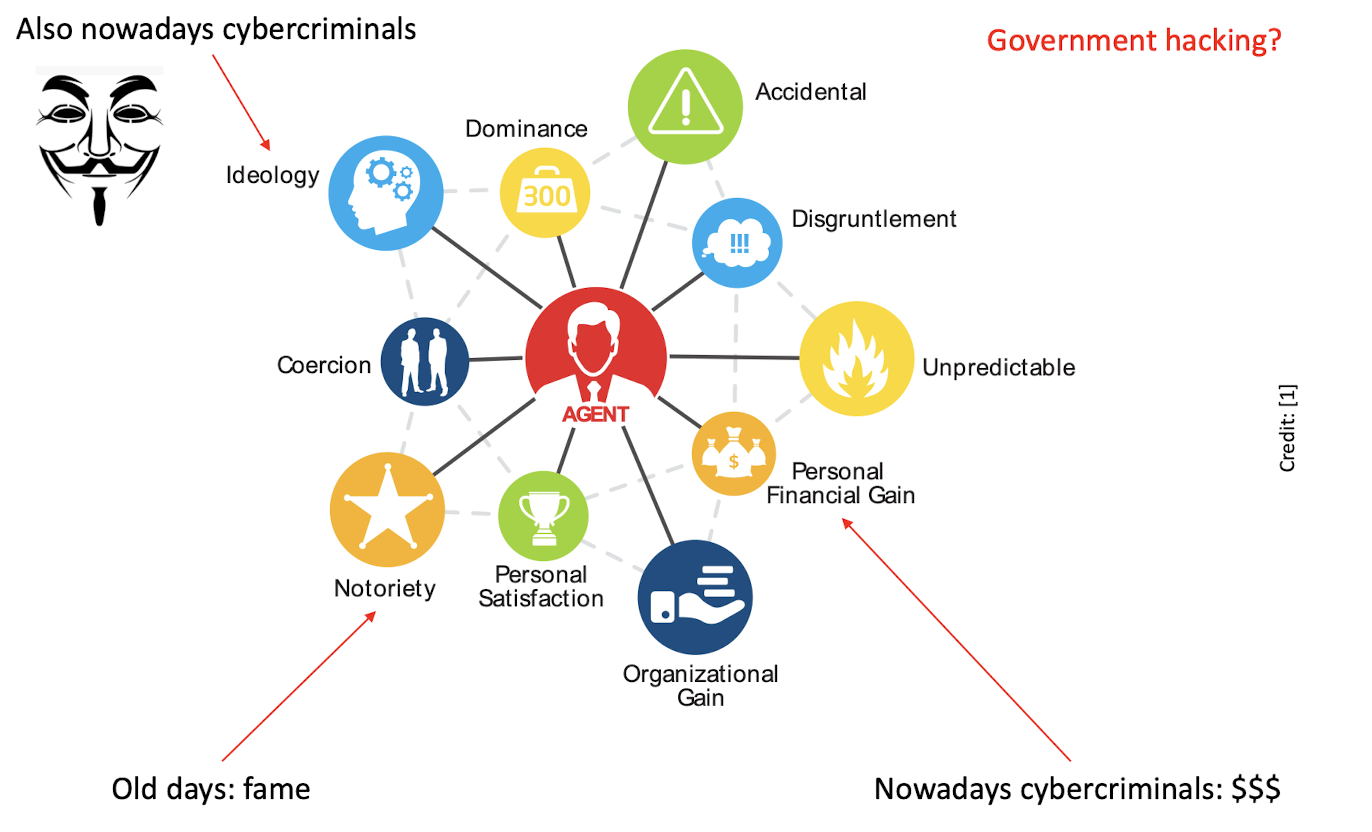

1.24.3 Attacker’s motivations

1.24.4 State-sponsored adversaries

Your threat model may consider powerful/un-bounded adversaries who can:

- Stealthily influence and weaken encryption standards

- E.g., NSA’s BULLRUN program, weakened Dual_EC_DRBG

- Collaborate with hardware manufacturers to insert backdoors

- Discover and weaponize unknown vulnerabilities

- Called zero-day vulnerabilities

- E.g., NSA/CIA/Mossad’s Stuxnet malware

- Access mind-boggling computing power and storage

- E.g., NSA’s 1.5B $USD Utah Data Center

- E.g., NSA’s XKeyscore tool could record all Internet traffic for 3-5 days

1.24.5 NSA Tailored Access Operations (TAO)

- Here, intercept a network equipment package

- Installation of “beacon implants” directly into targets’ electronic devices

- Equipment then re-packaged and placed back into transit to the original destination

1.25 Backdoors

1.25.1 TSA keys

- Post-9/11: TSA inspects your suitcase without breaking locks

- TSA locks can be opened by special “TSA keys” no matter whether someone has the correct code

- Keys got leaked at some point

- https://theintercept.com/2015/09/17/tsa-doesnt-really-care- luggage-locks-hacked/

1.26 End notes

1.26.1 The Security Mindset

https://www.schneier.com/blog/archives/2008/03/the_security_mi_1.html

Uncle Milton Industries has been selling ant farms to children since 1956. Some years ago, I remember opening one up with a friend. There were no actual ants included in the box. Instead, there was a card that you filled in with your address, and the company would mail you some ants. My friend expressed surprise that you could get ants sent to you in the mail.

I replied: “What’s really interesting is that these people will send a tube of live ants to anyone you tell them to.”

- This kind of thinking is not natural for most people. It’s not natural for engineers. Good engineering involves thinking about how things can be made to work; the security mindset involves thinking about how things can be made to fail. It involves thinking like an attacker, an adversary or a criminal .You don’t have to exploit the vulnerabilities you find, but if you don’t see the world that way, you’ll never notice most security problems.

1.26.2 The immutable laws of security (Microsoft)

- If a bad actor can persuade you to run their program on your computer, it’s not solely your computer anymore.

- If a bad actor can alter the operating system on your computer, it’s not your computer anymore.

- If a bad actor has unrestricted physical access to your computer, it’s not your computer anymore.

- If you allow a bad actor to run active content in your website, it’s not your website anymore.

- Weak passwords trump strong security.

- A computer is only as secure as the administrator is trustworthy.

- Encrypted data is only as secure as its decryption key.

- An out-of-date antimalware scanner is only marginally better than no scanner at all.

- Absolute anonymity isn’t practically achievable, online or offline.

- Technology isn’t a panacea.

https://learn.microsoft.com/en-us/security/zero-trust/ten-laws-of-security

1.26.3 Expectations on students

- Be disciplined and study hard

- Submit your assignment/project on time

- Must never use any attack tools for “unethical” purposes

- Never use them for personal gain

- Also consider legal aspects

- Make the world a better place!

2. Cryptography I

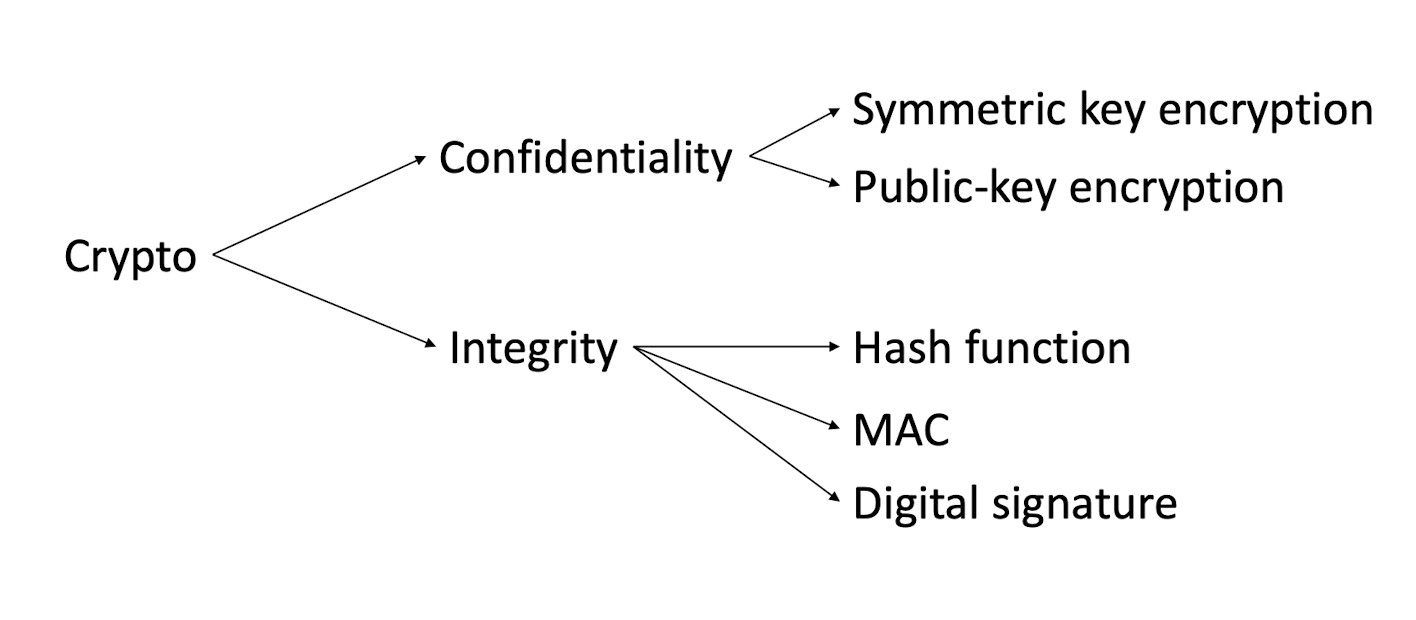

2.1 Cryptography – Definition

“Cryptography is the study of mathematical techniques related to aspects of information security such as confidentiality, data integrity, entity authentication, and data origin authentication.

Cryptography is not the only means of providing information security, but rather one set of techniques.” (HAC, Definition 1.1, pg. 4).

2.2 Goals of cryptography

Confidentiality – how do you prevent others from reading a message?

- E.g.: This transaction should not be eavesdropped

Integrity – how do you know someone didn’t replace or modify a message?

- E.g.: This transaction should not be modified

Data origin authentication – how do you know a message originated from a certain source?

- E.g.: Was this transaction from Alice or Bob?

Entity authentication – how do you know that a person is who they claim to be?

- E.g.: Was the Alice in this transaction, actually Alice?

Non‐repudiation – how to prevent someone from denying past commitments or actions?

- E.g.: Alice claimed she didn’t do this transaction, but here is the proof she did

2.3 Keep in mind

Cryptography and security are NOT the same

Always think from three perspectives (at least)

- Defenders’ view point

- Attackers’ view point

- They are as capable as you are (or more!)

- Your opponents are humans, not machines

- How much it will cost – to protect & to attack

What is the threat model?

- Assumptions about the system & operating environment

Security is an arms race

- You just need to stay one step ahead

2.4 Where is cryptography used?

- OCTOPUS card

- Credit card

- Web browsing

- Login to Facebook, X, WeChat, …

- Cellphone call

- Passport

- Software update

- Starting up your computer/smartphone

- War

2.5 Cryptology

- Greek: “krypto” = hide

- Cryptology: science of hiding

Cryptography + cryptanalysis = cryptology

- Cryptanalysis

- Study of code breaking (breaking of crypto systems)

- Good cryptographers know cryptanalysis

- Must know how systems are broken if you’re going to create secure systems

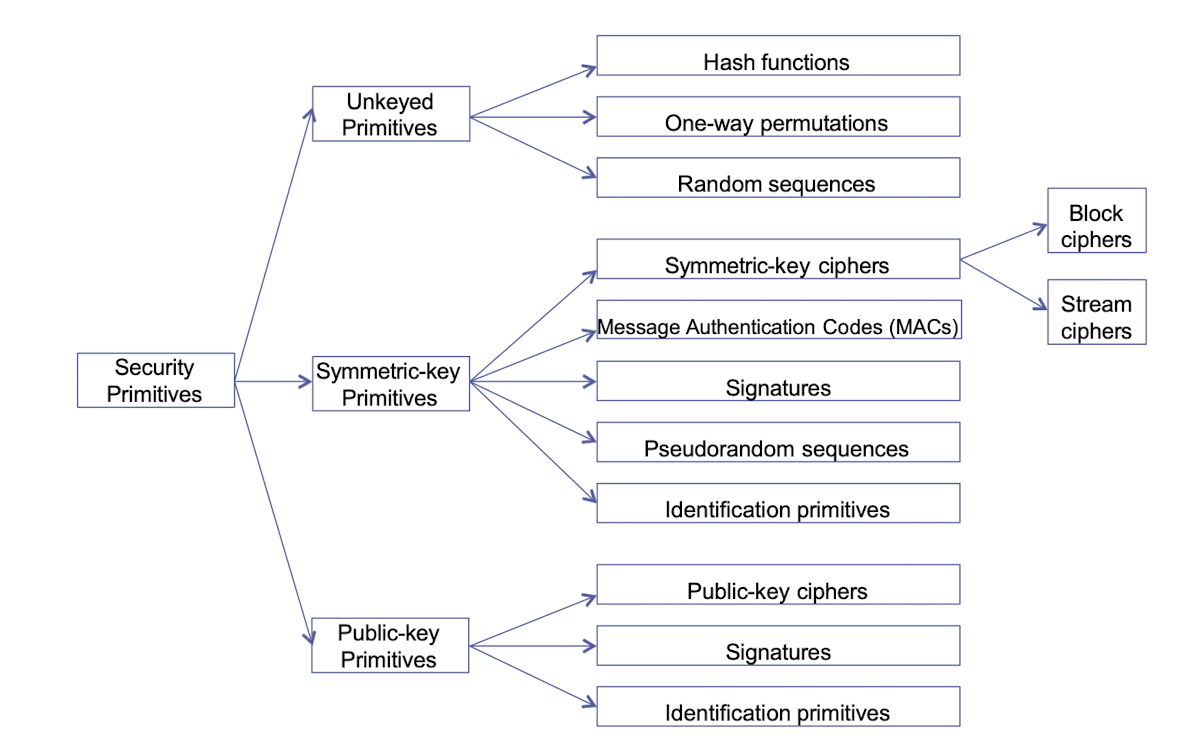

2.6 Crypto tools

2.7 Cryptography: simplified view

2.8 Encryption

Communications in the clear can be intercepted

- Radio, postal service…

Encryption enables confidentiality of communications

- Message is scrambled in a way that only Alice and Bob can understand

- Decryption: reverse the scrambling

2.8.1 Goals of encryption

- Data confidentiality

- Protect a large amount of data with “short” secrets

- Similar to physical lock with keys?

- Make it “difficult” for those without the secret key

- But efficient retrieval with the correct key

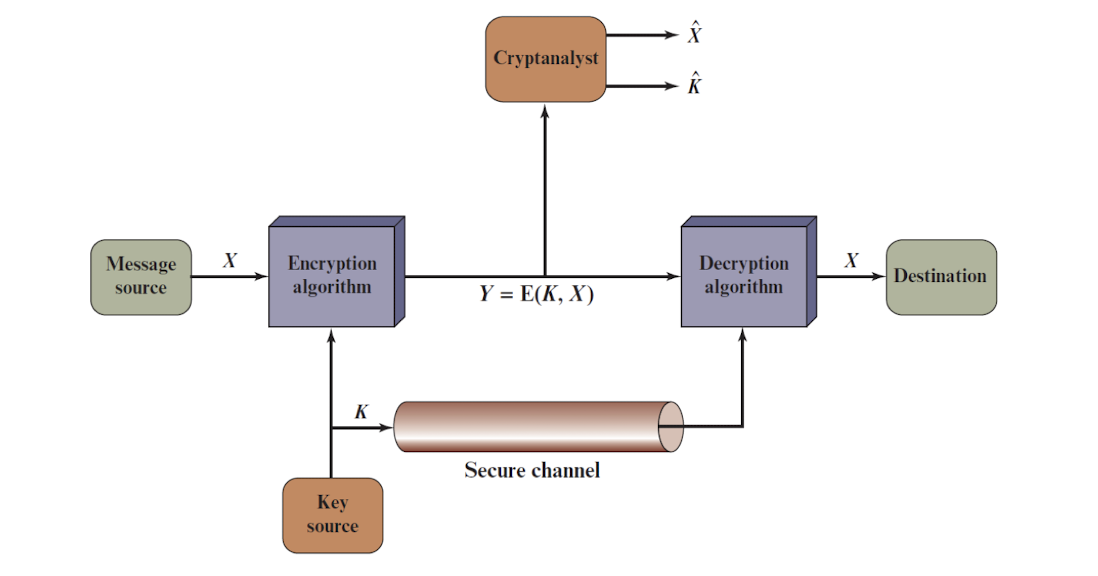

2.9 Attacker model

- Passive vs. active attacker?

- What computational resources the attacker has?

- What does the attacker know about a system?

- cryptosystems used, protocols

- What are the assumptions?

- encryption keys are shared via a secure channel

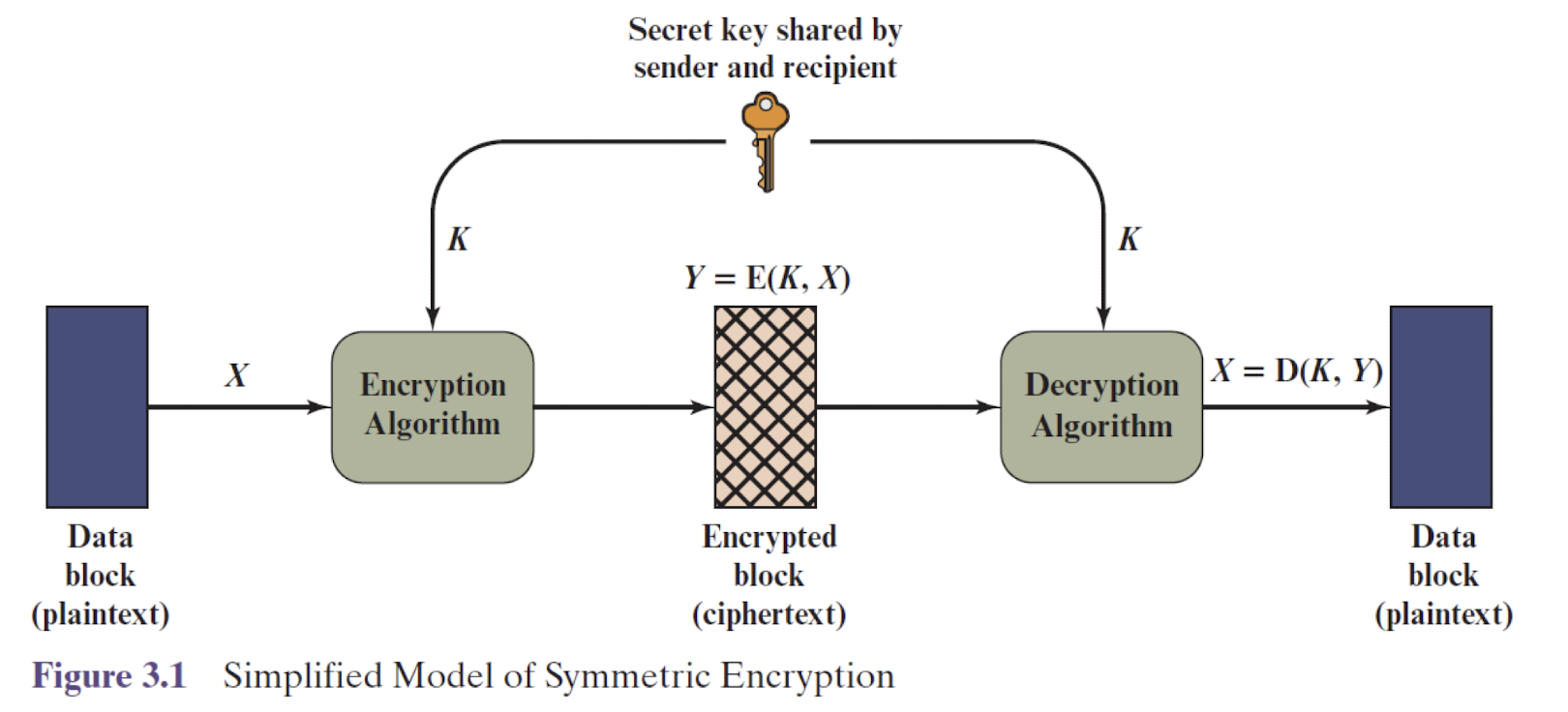

2.10 Symmetric encryption

- Encryption is a mathematical operation that depends on a key

- Symmetric encryption: same secret key to encrypt/decrypt

- It is computationally infeasible to recover $M$ without $k$

- What to hide: key + plaintext

2.10.1 Terminologies

- Plaintext: the original message

- Ciphertext: the encoded message

- Cipher: algorithms for transforming plaintext to ciphertext and vice-versa

- Key: info used in cipher known only to sender/receiver

- Encipher (encrypt): converting plaintext to ciphertext

- Decipher (decrypt): recovering ciphertext from plaintext

2.10.2 Key sharing

- Key shared over a “secure channel”

- Considered safe, cannot eavesdrop

- Exchange in person in private.

- Why not talk over a secure channel all the time then?

- Considered safe, cannot eavesdrop

2.10.3 Symmetric ciphers

There are two famous classical symmetric ciphers

- Caesar cipher, NO

- One-time pad, NO

Modern symmetric ciphers (covered later) include:

- DES (deprecated), Triple-DES, NO

- AES, YES

- Chacha20, YES

- Some less known/used ones:

- SM4, SM9 (Chinese), Magma/GOST (Russian)

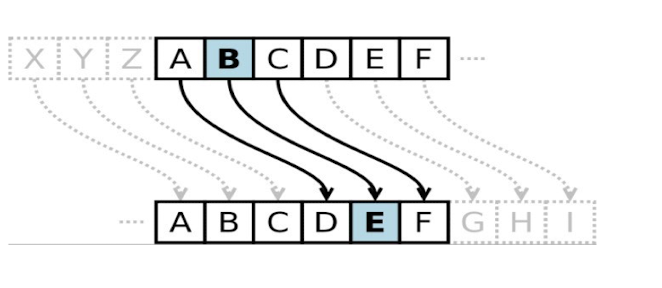

2.11 Caesar Cipher

2.11.1 Shift/Caesar cipher

- Simple substitution cipher

- substitution – each element is mapped into another

- Each letter is replaced by another

- Shift the alphabet

- Caesar cipher: shift by 3

- How to break this?

- How many keys are there?

- Plaintext language

2.11.2 Caesar cipher algorithm

- Can define transformation as:

1 | |

- Mathematically give each letter a number

1 | |

- Algorithm can be expressed as:

- A shift may be of any amount, so that the general Caesar algorithm is:

- Where $k$ takes on a value in the range 1 to 25; the decryption algorithm is simply:

2.11.3 Meaning of mod ( 1 /3)

Can be used as a binary operator or congruence relation

As a binary operator:

- $n$ is called the modulus

- Remainder of the Euclidean division of $a$ by $n$

- E.g. 7 mod 3 = 7 − 2 × 3 = 1

If $r = a \; mod \; n$,

- then $a = k \times n + r$

- with $k, r$ integers

2.11.4 Modulo in programming

- In C

1 | |

- In Python

1 | |

2.11.5 Meaning of mod (2/3)

As a congruence relation:

- Mod expresses that two arguments have the same remainder with respect to a given modulus

- $a \equiv b \; mod \; n$

- $n$ divides $a-b$

- implies $a \; mod \; n = b \; mod \; n$

$7 \equiv 4 (\; mod \; 3)$ expresses that both 7 and 4 have a remainder of 1 when divided by 3

$− 11 \equiv 17 (\; mod \; 7)$

17 = 2 7 = 14 + 3

-11 = (-2) 7 = -14 + 3

-11 mod 7 = -11 - floor(-11/7) 7 = -11 - (-2) 7 = -11 + 14 = 3

More properties:

- If $a \equiv b \; (mod \; n)$ then $b \equiv a \; (mod \; n)$

- $a \equiv a \; (mod \; n)$

- if $a \equiv b \; (mod \; n)$ and $b \equiv c \; (mod \; n)$ then $a \equiv c \; (mod \; n)$

Exercise at home

- Decrypt the following message:

- URYYB QRNE FGHQRAGF

- (HELLO DEAR STUDENTS)

- With the following key: 13

- Then, instead of decrypting the message, encrypt it again

- Same key: 13

- What happens?

- ROT 13: rotation 13, it is a loop

2.11.6 Kerckhoffs’ Principle (1883)

The security of a cryptosystem should not depend on keeping the cryptographic algorithm secret

Security should depend only on the key

- Don’t assume enemy won’t know the algorithm Can capture machines, disassemble programs, etc. Too expensive to invent new algorithm if it is compromised

In fact, most ciphers are publicly defined in open specifications: DES, Triple-DES, AES…

- Security by obscurity doesn’t work

- Look at history of examples; A5/1, Enigma …

- Better to have open scrutiny by experts

2.11.7 How to attack encryption

- Cryptanalysis

- Search for weaknesses in the algorithms

- (partial) knowledge about plaintext, ciphertext

- Brute-force (aka exhaustive key search)

- Try all possible keys (N) – on average N/2 trials are required

- Many others – malware, key/screenlogger, physical and side-channel attacks, implementation bugs, key backdoor, legal means

2.11.8 Brute force key search

- Given: ciphertext $c$ , and the cipher used

- $K$ is the key space (all possible keys), size of $K$ is $|K|$

For each key $k$ in $K$

- Calculate $m^\prime = D(k, c) = D_k(c)$

- Does $m^\prime$ look like a real message?

- Yes —> done!

- No —> continue

Expect to try $| K |/2$ keys before correct match

2.11.9 Language redundancy & cryptanalysis

- Monoalphabetic ciphers retain their relative letter frequencies!

- Count relative letter frequencies

- Make guesses based on plaintext language frequencies

- Frequency analysis

2.12 Vernam cipher / One-Time Pad

2.12.1 Reminder: AND operator

- AND is a binary operator that takes two equal-length binary numbers

- If the two bits at the same position in both numbers are equal to 1 , the resulting bit will have a value 1 at that position

- Otherwise, the resulting bit will have a value 0 at that position

10100110 & 1001011

1 | |

2.12.2 Reminder: OR operator

- OR is a binary operator that takes two equal-length binary numbers

- If the two bits at the same position in both numbers are equal to 0 , the resulting bit will have a value 0 at that position

- Otherwise, the resulting bit will have a value 1 at that position

10100110 | 1001011

1 | |

2.12.3 Reminder: XOR operator

- XOR is a binary operator that takes two equal-length binary numbers

- If the two bits at the same position in both numbers are equal, the resulting bit will have a value 0 at that position

- Otherwise, the resulting bit will have a value of 1 at that position

10100110 ^ 1001011

10100110 ⊕ 1001011

1 | |

2.12.4 Vernam cipher

The Vernam Cipher is a stream cipher defined on the alphabet $A = {0,1}$.

A binary message $m_1, m_2, …, m_t$ is operated on by a binary key string $k_1, k_2, …, k_t$ of the same length to produce a ciphertext string $c_1, c_2, …, c_t$ where:

- $c_i = m_i \oplus k_i$

- $1 \le i \le t$

- Decryption?

- Apply XOR again on the ciphertext to get the plaintext

2.12.5 One-time Pad (OTP)

Vernam cipher “can” use any bit source as a keystream:

- E.g., music, picture, or video files.

But for real security it should be:

- Random (what is randomness?)

- Never used again

Vernam cipher with such a keystream is called a one‐time pad

- The one-time pad is provably secure!

2.12.5.1 OTP

One-time pad

- Two spies decided on a key of 10110

- Spy A sends message 11011 to spy B

- Spy B decrypts the ciphertext with the key

2.12.5.2 One‐time pad: keystream

Very important that keystream is:

- Not re‐used – security is gone as soon as second encryption performed with same key.

- Random – if attacker can guess the key or limit the possibilities, then they could discover the plaintext.

Challenge: how do you keep getting new and random keys that are as long as your message?

- If easy, we can communicate securely!

- Could pre-send them (e.g., a SD card containing only random bits)

- practical?

Exercise

Definition can be generalized to any alphabet size

- It becomes a Ceasar cipher with a shift index picked at random for each letter

Encrypt the message: HELLO WORLD with the one-time pad: POLYUROCKS

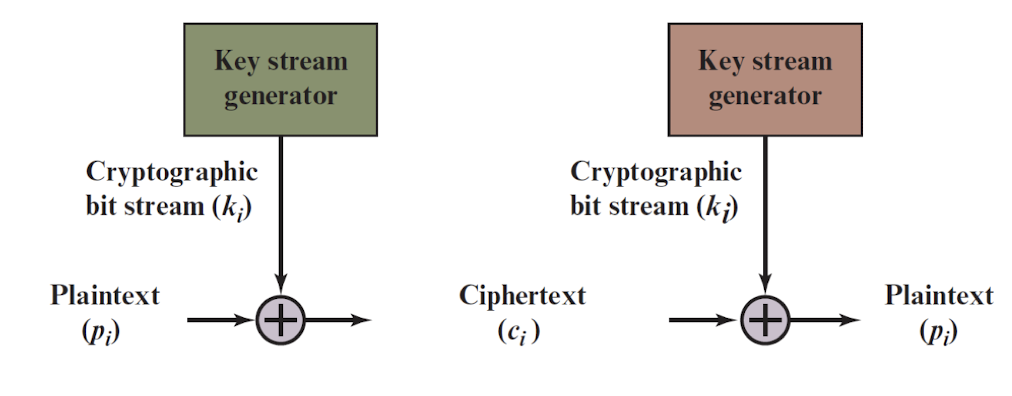

2.13 Stream ciphers

2.13.1 Block and stream ciphers

Block cipher

- plaintext message is broken into fixed-length blocks before encryption

- one block is processed at a time

- most modern ciphers are block ciphers

Stream cipher

- block length is one (bit or character)

- requires only limited buffering of data

- Ceasar, Vigenere, Vernam, OTP are stream ciphers: letter by letter

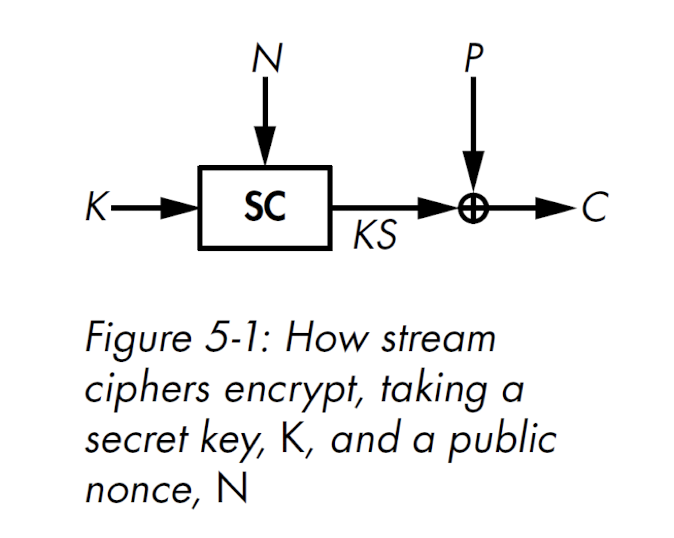

2.13.2 Stream cipher

A stream cipher computes $KS = SC ( K , N )$, encrypts as $C = P \oplus KS$, and decrypts as $P = C \oplus KS$.

- $KS$ is called key stream

- $SC$ is the stream cipher algorithm

- $N$ is a “nonce”: “number used only once“

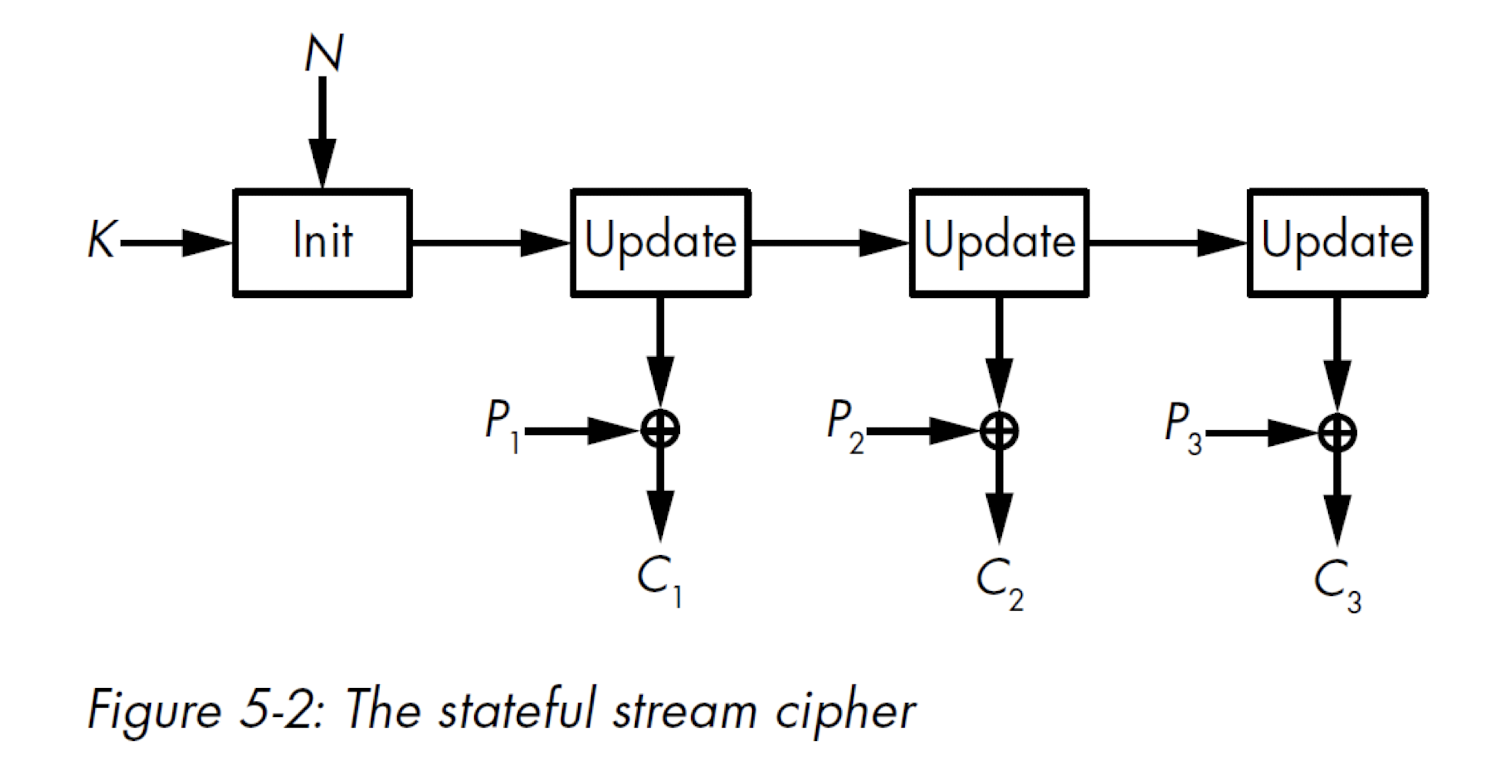

2.13.3 Stateful stream cipher

- the KS must be generated step by step (stateful), can not just get one KS.

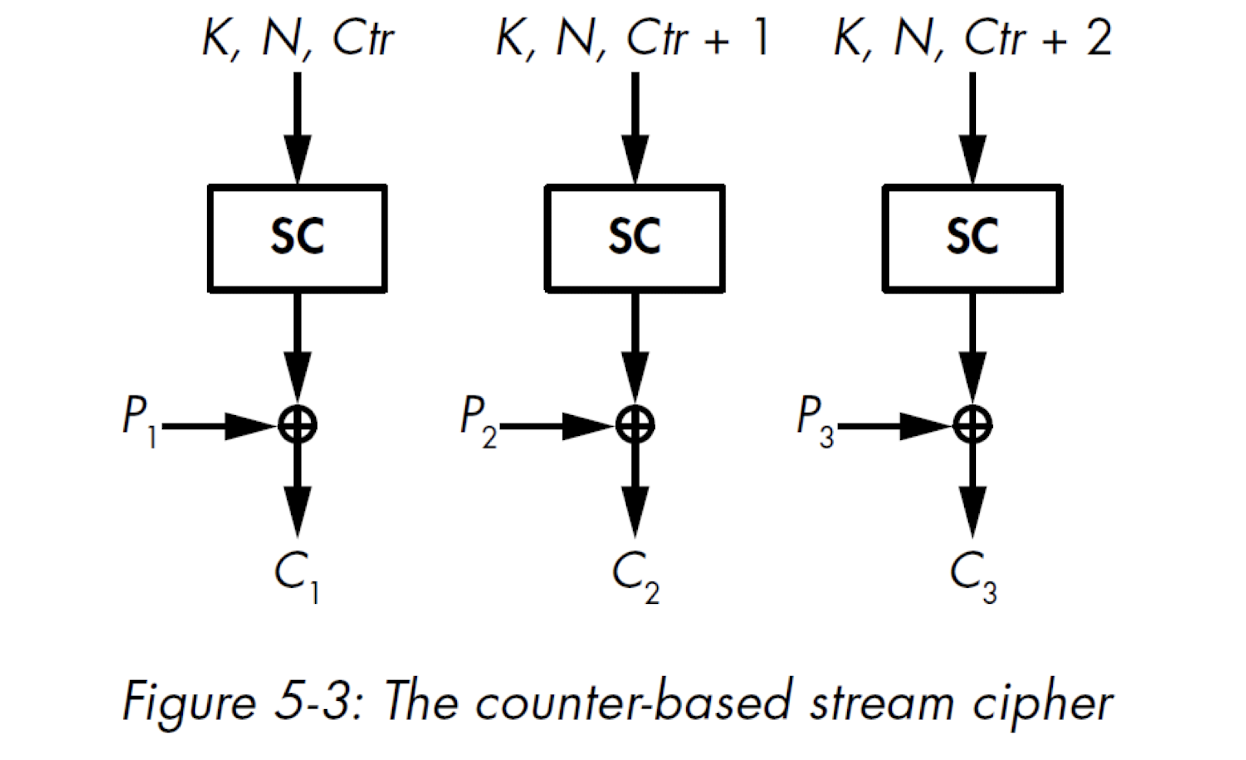

2.13.4 Counter-based stream ciphers

- get the KS by index, no need to start from the start.

2.13.5 Stream ciphers – synchronization

Synchronous stream ciphers

- keystream is generated independently of plaintext and of ciphertext

- error in the ciphertext propagates to the end of the decrypted plaintext.

Self-synchronizing stream ciphers

- Key‐stream is generated as a function of the key and a fixed number of previous ciphertext digits

- depends on the previous ciphertext digits

- will not loss all ciphertext if there is an error in the ciphertext

2.13.6 Examples of stream ciphers

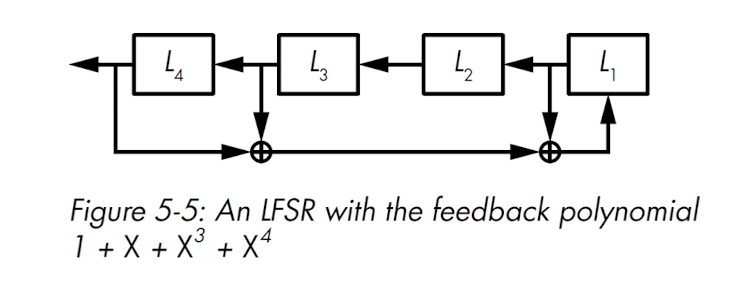

- Linear Feedback Shift Registers

RC4

- Designed in 1987 by Ron Rivest of RSA Security, then reverse engineered and leaked in 1994, RC4 has long been the most widely used stream cipher.

- Very simple algorithm, byte-oriented, fast in software implementation

- Used with SSL/TLS, WPA, WEP, … but now broken

Salsa20/ChaCha20

- 2005

2.13.7 Key scheduling algorithm of RC4 – Init

1 | |

2.13.8 Key scheduling algorithm of RC4 – Keystream

1 | |

Exercise

- Implement the RC4 cipher (see previous slides for the keystream)

- Encrypt the string “We love crypto at PolyU!” using the key “superlongkey”

- The output will not be printable in plain letters, encode it in hexadecimal

- The output should start with 0xB3

2.14 Randomness

Randomness is everywhere in cryptography

Remember $E_k(m)$? What is $k$?

- Typically key space is $2^{128}$

- $k$ is often a random key

- Big enough so that 2 persons don’t generate the same key

- Ensures an attacker cannot easily re-generate the key

- Generation of secret keys, and other import values

- Is the bit-string 011010101100101001001 random?

- Depends on what generated it

2.14.1 Randomness: about predictability

True

- Coin flipping

- Dice rolling

- Roulette

- Weather

- Quantum mechanics

- Radioactive decay

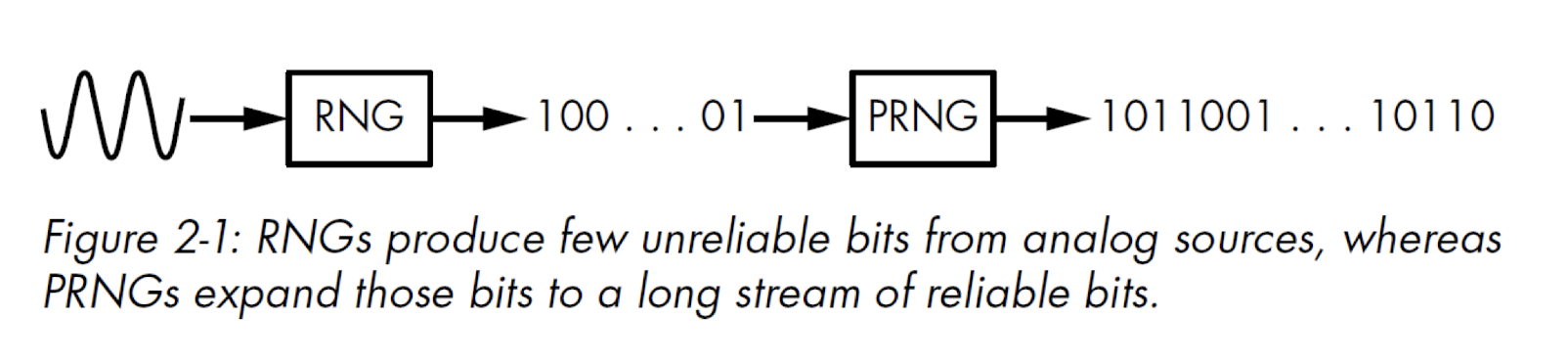

2.14.2 ==Pseudo randomness==

- Since computers are deterministic entities

- An algorithm hardly can “invent” randomness

True randomness from analog events is difficult to collect, and too slow

Mouse movements, PID of process, exact time (μs)?

- Poor randomness

- Solution: combine deterministic algorithm with true randomness

- PRNG(

seed) = random-looking string (Pseudo Random Number Generator) - If

seedis unpredictable, the output too seed= electrical noise in the computer, mouse movement…

- PRNG(

2.14.3 RNG + PRNG

- Noise from the CPU

2.14.4 CSPRNG

Normal PRNG is not so strict, easy to predict

(Cryptographically Secure Pseudo Random Number Generator)

A PRNG is said to be “Cryptographically Secure” if:

- It is computationally infeasible to predict the next bit given a complete history of past bits (next‐bit test)

- The number of 1’s and 0’s should be equal (balanced)

- Many other tests (non-linearity, …)

- No way to reconstruct the key

Computational security:

- level of computation required to defeat it (using best attack known) far outweighs the computational resources of the adversary

2.14.5 CSPRNG use cases

Most stream ciphers substitute a keyed “cryptographically-secure pseudo-random” keystream for a random keystream

- E.g., RC4 and others

Keys themselves are preferably random, or derived from specific information (e.g., passwords)

- See Lecture 5

2.14.6 Sources of entropy

CPU support

Online services

- random.org (supports HTTPS)

- Should not be trusted for any security applications!

- Cloudflare

2.14.7 Bad entropy sources

==DO NOT USE THEM==

- NOT good for cryptographic operations

C/C++

srand(time(NULL));rand();

Java

Random randomGenerator = new Random();int randomInt = randomGenerator.nextInt(100);

Python

random.randint(1, 10)

2.14.8 Good entropy sources

- Cryptographically-secure PRNG

- C/C++

- Use APIs, like

CryptGenRandom()on Windows

- Use APIs, like

- Java

SecureRandom random = new SecureRandom();random.nextBytes(bytes);

- Python

os.urandom(n)

3. Cryptography II

3.1 Use cases

- One of the most used crypto tool

- Used for:

- Data confidentiality (encrypt data)

- MACs (protect integrity, see next lecture)

- PRNGs (pseudorandom number generators)

- Authentication

- Very efficient software and hardware implementations

- Can be used in low-resource devices

3.2 History

- During the Cold War , the US and Soviets developed their own ciphers

- The US government created the Data Encryption Standard(DES)

- Adopted as a federal standard (1979-2005),

- The KGB developed GOST 28147-89 (Magma)

- An algorithm kept secret until 1990 and still used today

- In 2000, the US-based National Institute of Standards and Technology (NIST) selected the successor to DES, called the Advanced Encryption Standard (AES)

- An algorithm developed in Belgium and now found in most electronic devices

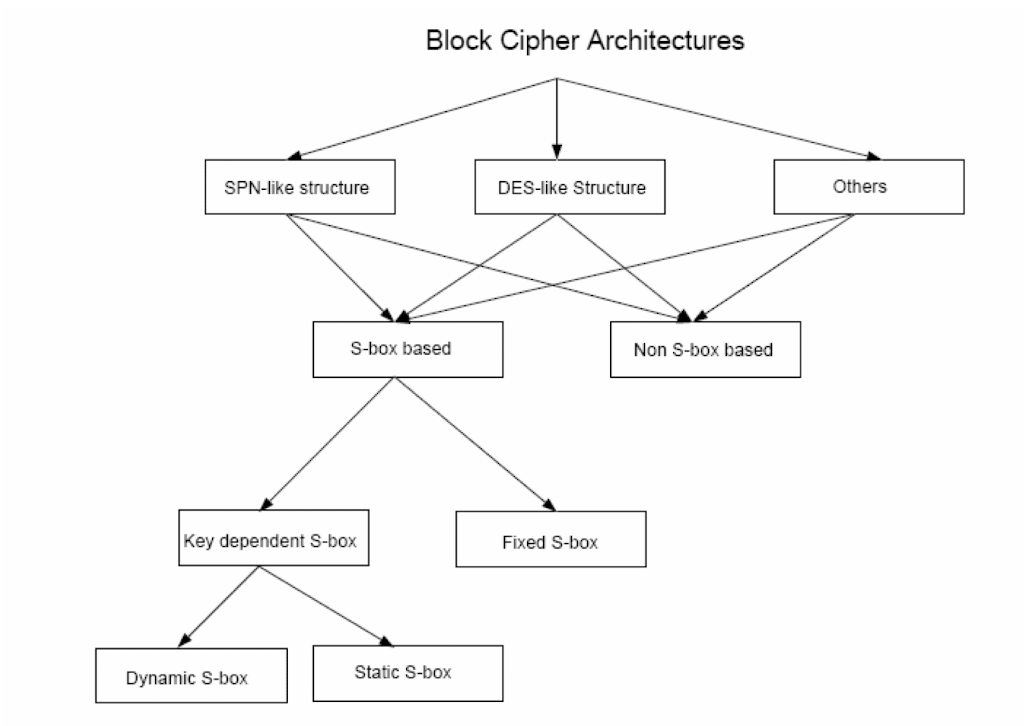

- AES, DES, and GOST 28147-89 are all block ciphers, a type of cipher that combines a core algorithm working on blocks of data with a mode of operation, or a technique to process sequences of data blocks

3.3 Definition

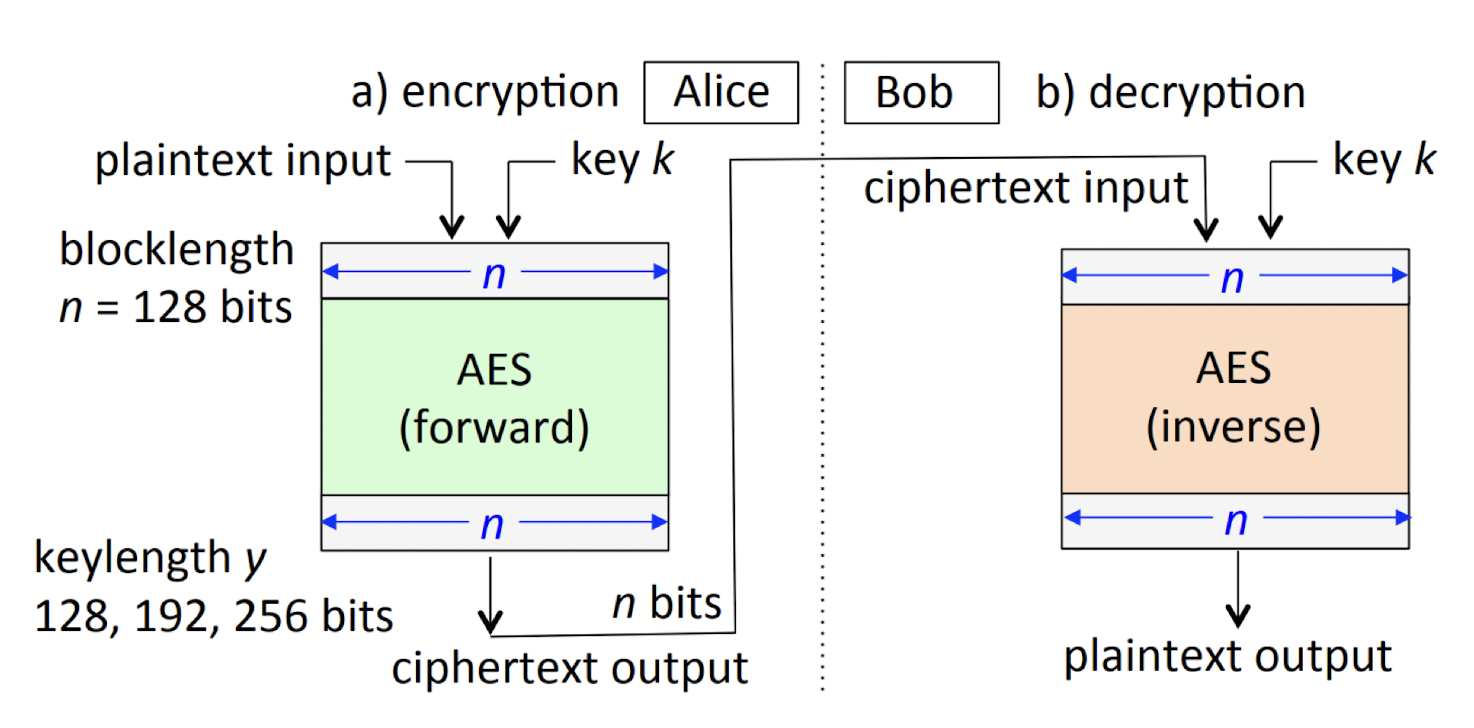

- A function that maps $n$‐bit plaintext blocks to $n$‐bit ciphertext blocks

- $n$ is called the blocklength (or block size)

- DES: 64 bits, AES: 128 bits

Parameterized by $k$‐bit key $K$ (chosen at random)

- $k$ is called the keylength

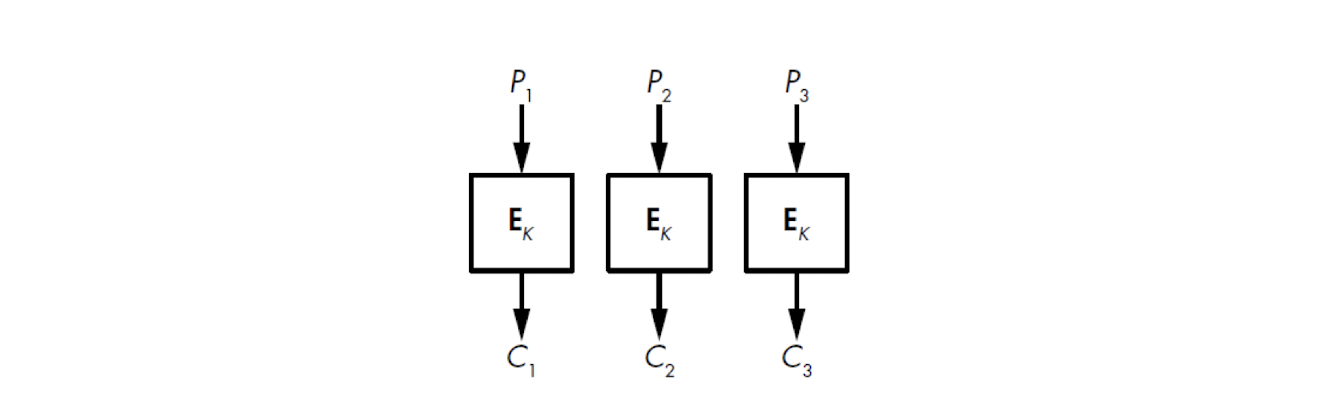

Encryption: $C = E(K, M)=E_k(M)$

- Decryption: $M = D(K, C)=D_k(C)$

If $K$ is $k$ bits long, the number of keys is $2^k$

- How many bits are required to represent a 3-digit PIN?

The encryption function must be one-to-one, why?

- The encryption function is a bijection , defining a permutation on $n$‐bit vectors

Each key potentially defines a different bijection

If each $k$‐bit key is equi-probable , each defines a different bijection, the entropy of key space is $k$

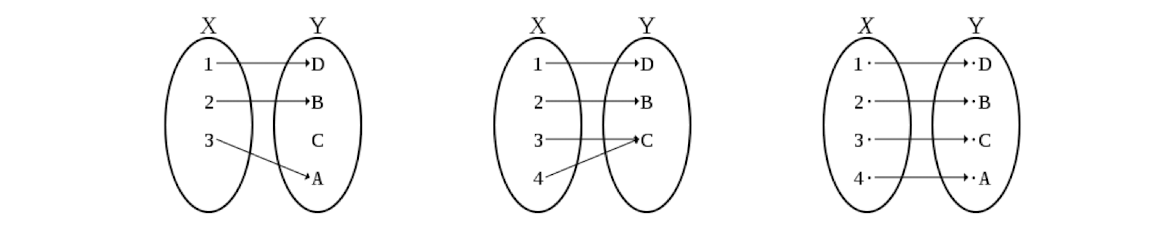

3.4 Bijection

$E_k$ must be a bijection as the process must be reversed (decryption)

- Bijection : one-to-one and onto

- One-to-one: each element in X maps to at most one element in Y (X=plaintext, Y=ciphertext), also called injectio

- Onto: each element in Y maps to at least one element in X, also called surjection

3.5 Security requirements

- For a block cipher to be secure, it must be a pseudorandom permutation (PRP)

As long as the key is secret, an attacker shouldn’t be able to compute an output of the block cipher from any input

As long as $K$ is secret and random from an attacker’s perspective, the attacker should have no clue about what $E_k(P)$ looks like, for any given $P$ (no clear link between them)

More generally, attackers should be unable to discover any pattern in the input/output values of a block cipher

3.6 Test

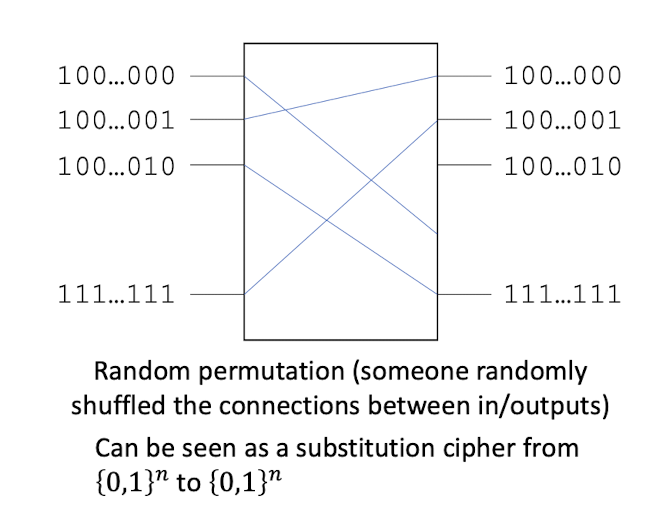

It should be impossible to tell a block cipher from a truly random permutation, given black-box access to the encryption and decryption functions for some fixed and unknown key

Standard assumptions (in addition to Kerckhoffs’):

- Attacker has access to all transmitted ciphertext

Random permutation (someone randomly shuffled the connections between in/outputs)

Can be seen as a substitution cipher from ${0,1}^n$ to ${0,1}^n$

3.7 Consequences of the requirements

Attackers should be unable to recover a secure block cipher’s secret key; otherwise, they would be able to use that key to tell the block cipher from a random permutation

Of course that also implies that attackers can’t predict the plaintext that corresponds to a given ciphertext produced by the block cipher

3.8 Block cipher encryption/decryption, e.g., AES

3.9 What’s inside the cipher?

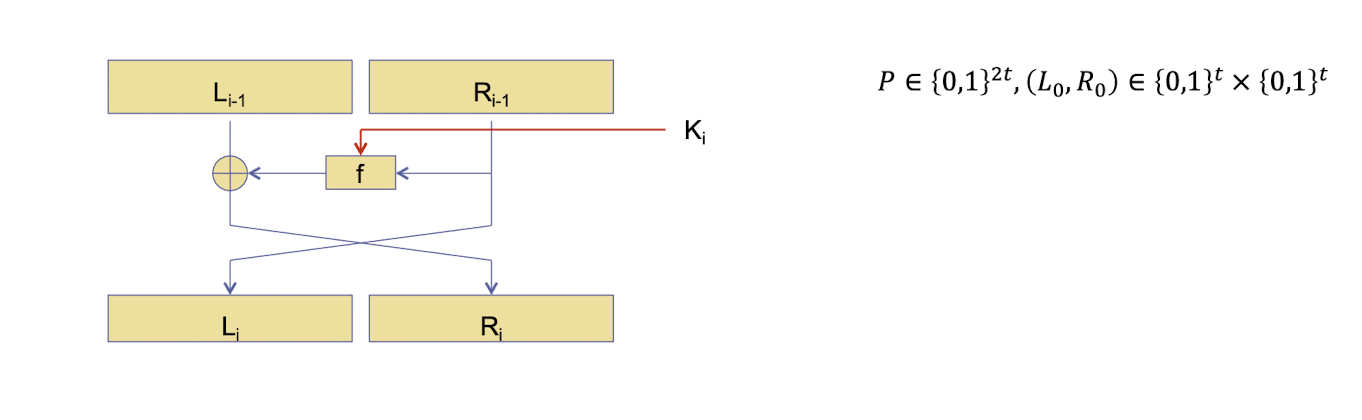

3.10 Feistel cipher

- Cipher that maps $P=(L_0, R_0)$ to $(L_n, R_n)$ using $r$ rounds

- Each round defines the transition $(L(i-1), R(i-1)) \rightarrow (L_i, R_i)$ as

- With $K_i$ being subkeys derived from the $K$

3.11 DES

- DES is based on a Feistel network

- 16 rounds

- DES has a 56-bit key

- In 1998, the EFF build a $250K DES Cracker that breaks a DES encryption in 56 hours through brute-force

- Specialized chips in parallel

- Ideas to fix DES:

- Triple-DES: key is now 3x bigger

3.12 Too hard to compute?

Imagine a key space of 32 bits (4 bytes)

- $2^32$ possibilities = 4,294,967,

- A lot? No, seconds to compute

- $2^32$ possibilities = 4,294,967,

What about 64 bits? (8 bytes)

- $2^64$ possibilities = 18,446,744,073,709,551,

- A lot? Yes, too large

- $2^64$ possibilities = 18,446,744,073,709,551,

3.13 ==Standard about what’s too hard==

- NIST

- National Institute of Standards and Technology

- Standards body in the United States

- ==Currently defines infeasibility as $2^{112}$ or greater==, for all computers on earth for a very long time (millions of years?)

3.14 Advanced Encryption Standard

- DES was getting old

- On January 1997 , the NIST announced the initiation of the AES development effort to replace DES

- On September 1997 , NIST made a formal call for AES candidates submission

- In 1999, out of 15, the selection was narrowed to 5 candidates:

- AES finalist: MARS (IBM), Twofish (Counterpane), RC6 (RSA) Rijndael (University, Belgium) and Serpent (University, Israel+England)

- On October 2000 , NIST announced the selection of Rijndael as the AES (invented by Joan Daemen and Vincent Rijmen)

- Rijndael supports key and block lengths of 128 to 256 bits in steps of 32 bits

- Rijndael versions with a block length of 128 bits, and key lengths of 128,192 and 256 bits have been adopted as the (AES)

- February 2001 , FIPS 197 (AES) was published for public review and comments

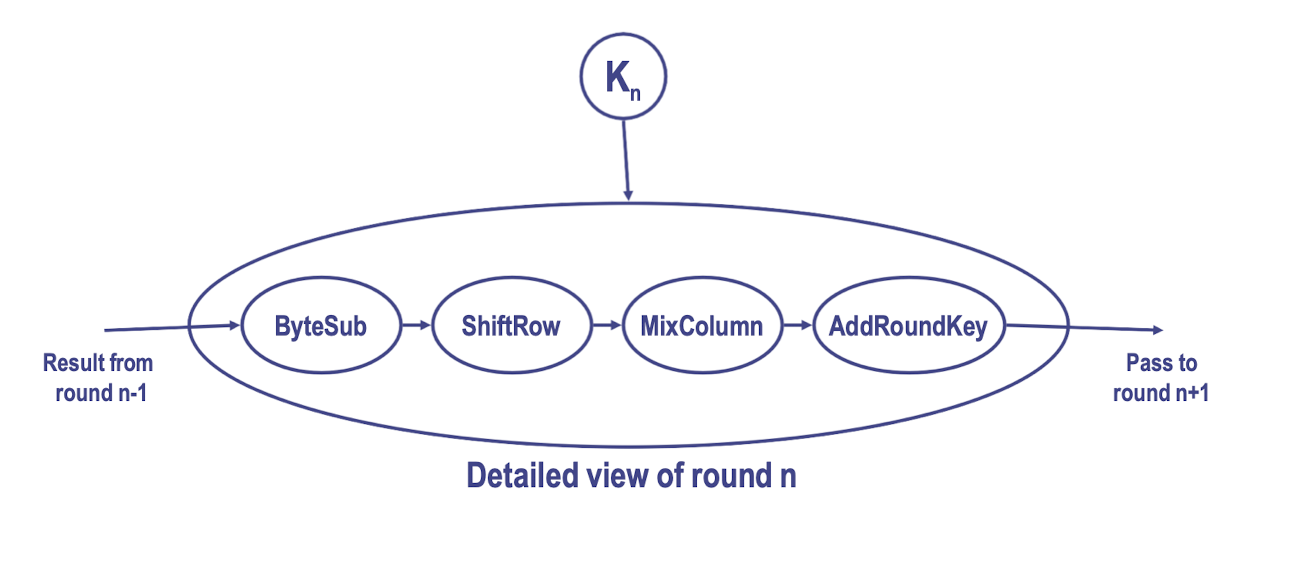

3.15 High-level view of AES

3.16 Important properties of block ciphers

- Confusion: means that the input (plaintext and encryption key) undergoes complex transformations

- Depth

Diffusion: means that these transformations depend equally on all bits of the input

- Breadth

In the design of a block cipher, confusion and diffusion take the form of substitution and permutation operations, which are combined within substitution–permutation networks (SPNs)

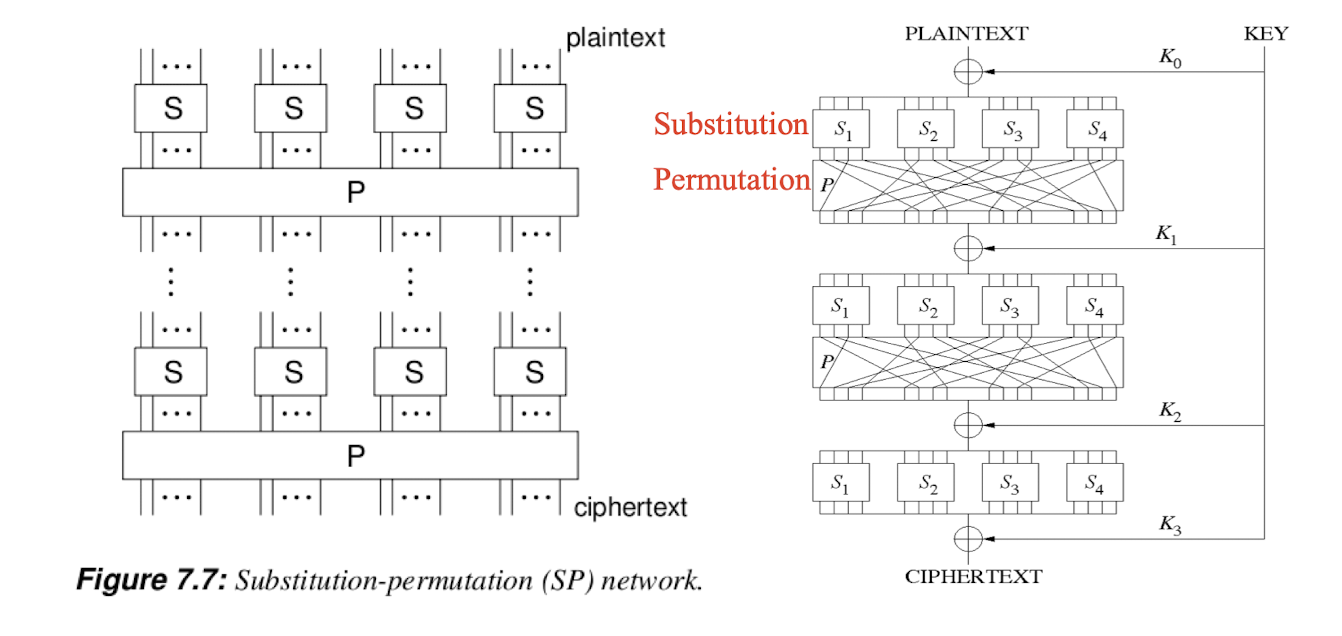

3.17 Substitution-permutation network (SPN)

A permutation is essentially a simple transposition

In multi-processor CPUs: SP is faster than Feistel

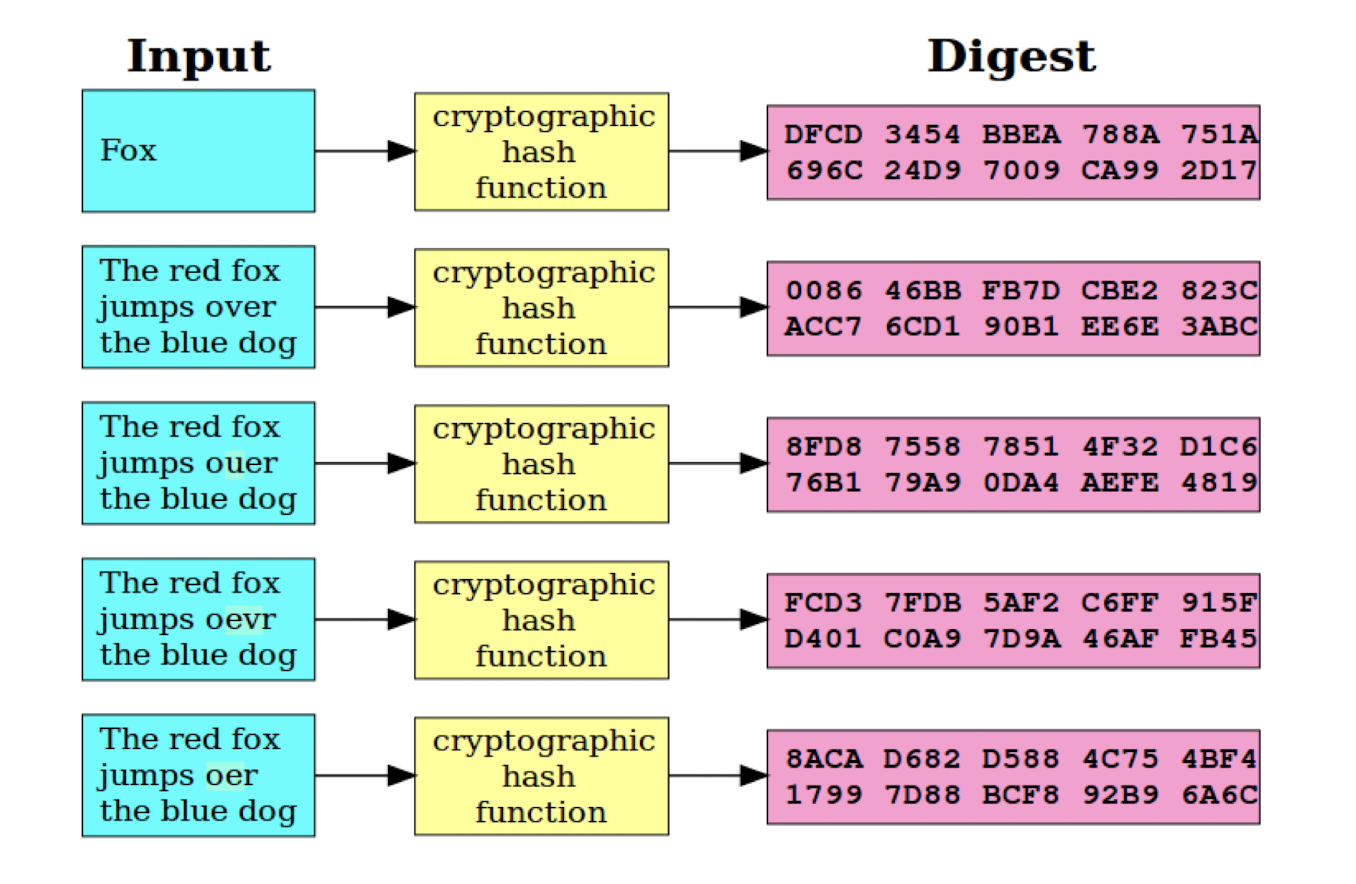

3.18 ==Avalanche effect==

==Slight change in input (e.g., flipping a single bit) significantly changes the output (e.g., half the output bits are flipped)==

Strongly desired property of:

- Block ciphers

- Cryptographic hash functions (next lecture)

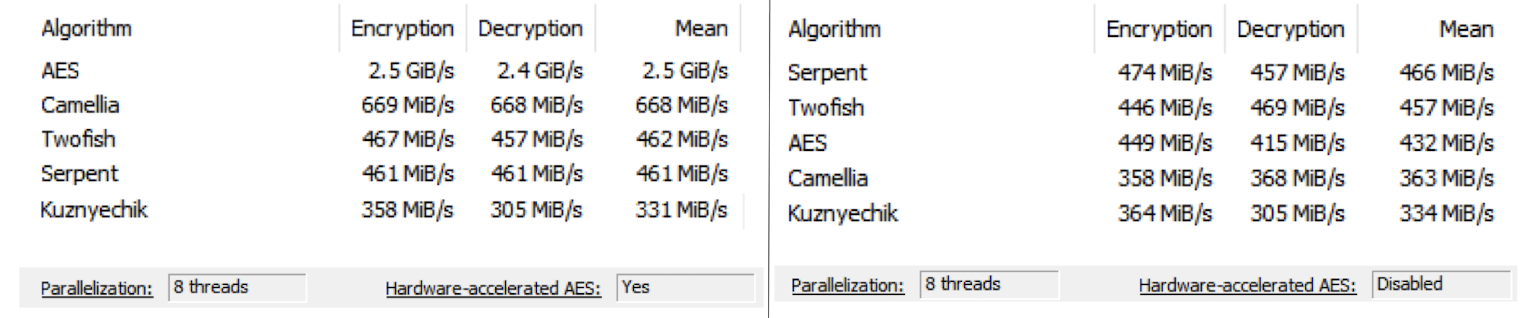

3.19 Performance

- Hardware acceleration for AES in Intel and AMD CPUs

- Advanced Encryption Standard (AES) New Instructions (AES-NI)

In memory tests, Camellia also benefits from AES-NI acceleration, tests conducted with VeraCrypt

3.20 AES in Python (several caveats, do not use)

1 | |

3.21 Arbitrary input

- What happens if you try to encrypt a message of 15 bytes?

Block ciphers operate on full blocks (with exceptions)

- Meaning $|input| = 0 \;mod \; \text{block length}$

What if it is not the case? $\to$ Padding

- See lab 1

3.22 Encryption today

- Symmetric encryption ciphers

- Stream ciphers

- RC4, NO

- ChaCha20, YES

- Block ciphers

- DES, NO

- 3DES, NO

- AES-128, AES-192, AES-256, YES

- Stream ciphers

- Key length

- NIST: To ensure security past 2030: 128 bits

3.23 Modes of operation

3.23.1 Modes of operation

- Block ciphers are rarely used as it is

- Because length of data > block size

- Block ciphers are sequenced according to a mode of operation

- Basic mode: Electronic Codebook (ECB)

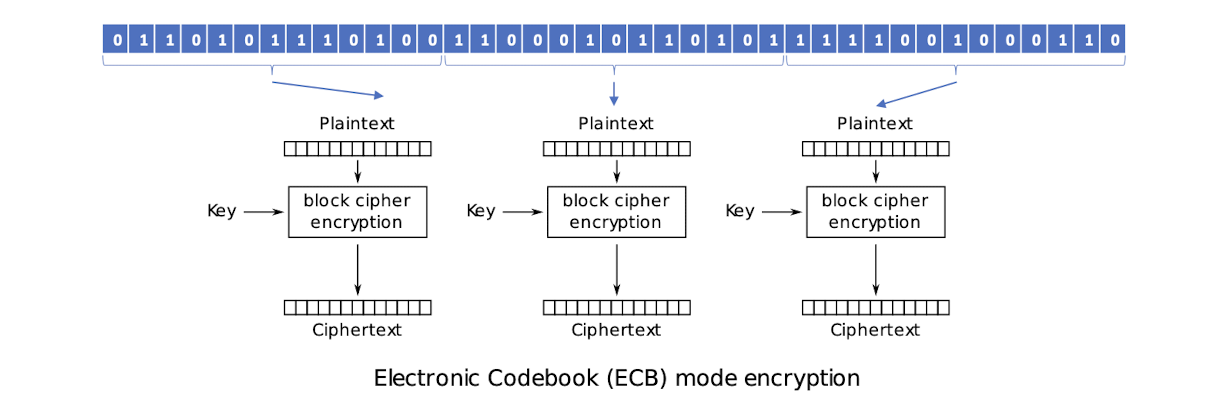

3.23.2 Electronic Code Book (ECB)

- Identical plaintext (under the same key) result in identical ciphertext

- ==Blocks are enciphered independently of other blocks==

- Bit errors in a single ciphertext affect decipherment of that block only

3.23.3 Modes of operation

- How to employ block ciphers for large messages

- Dividing messages

- Padding the last block

- Basic modes: ECB, CBC, OFB, CFB

- Standardized for DES operations (1981)

- More modes defined for AES

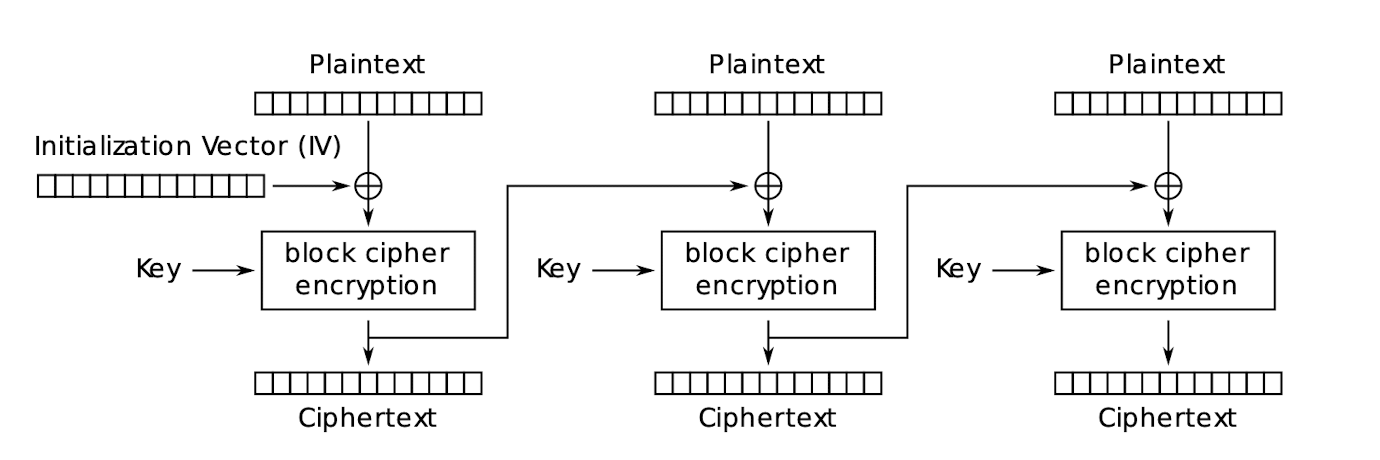

- Initialization Vector (IV):

- A block of data used in addition to the input message

- Randomize the encryption process

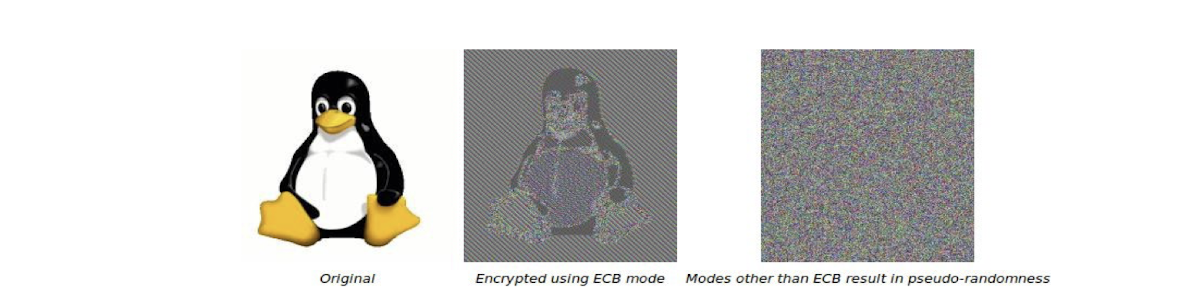

3.23.4 Weaknesses of ECB

- Does not hide ==data patterns==

- Malicious substitution of ciphertext is possible

- When can we use this mode?

- When not to use it?

- Multi-block messages

- Keys are reused for more than a single block

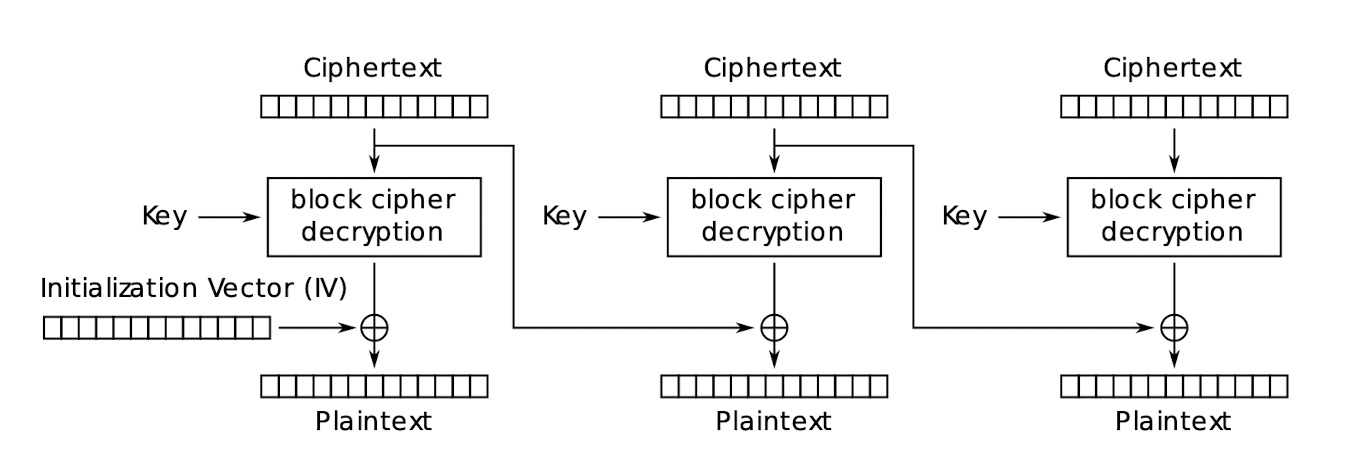

3.23.5 Cipher Block Chaining (CBC)

CBC mode encryption:

CBC mode decryption:

3.23.6 CBC – Properties

- Chaining causes ciphertext $C_i$ to depend on all preceding plaintext

- Only requires ==one step== to ==decrypt== any block, can parallelize

- But requires ==all previous ciphertext blocks (multiple steps)== to ==encrypt== any block, can not parallelize

- Random access to encrypted data is not possible, X

- IV must be integrity-protected

- Otherwise, attackers may make predictable bit changes to the 1st block

- The IV is not the secret, it is public; but the key is the secret.

- The same key, IV, and plaintext results in identical ciphertext

- A single bit error in $Ci$ affects decryption of blocks $C_i$ and $C{i+1}$

- Self‐synchronizing

- error (including loss of blocks) in $Ci$ but not in $C{i+1}, C_{i+2}$

- $C_{i+2}$ is correctly decrypted

- ==Decryption of each block in CBC mode only depends on the current block and the previous ciphertext block. Therefore, the corruption does not propagate beyond $C_{i+1}$==.

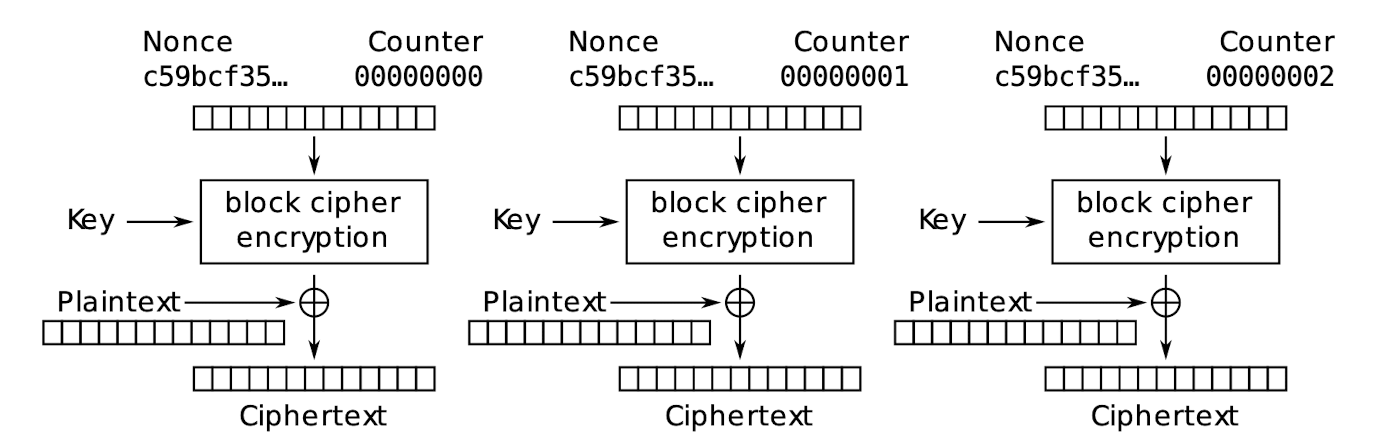

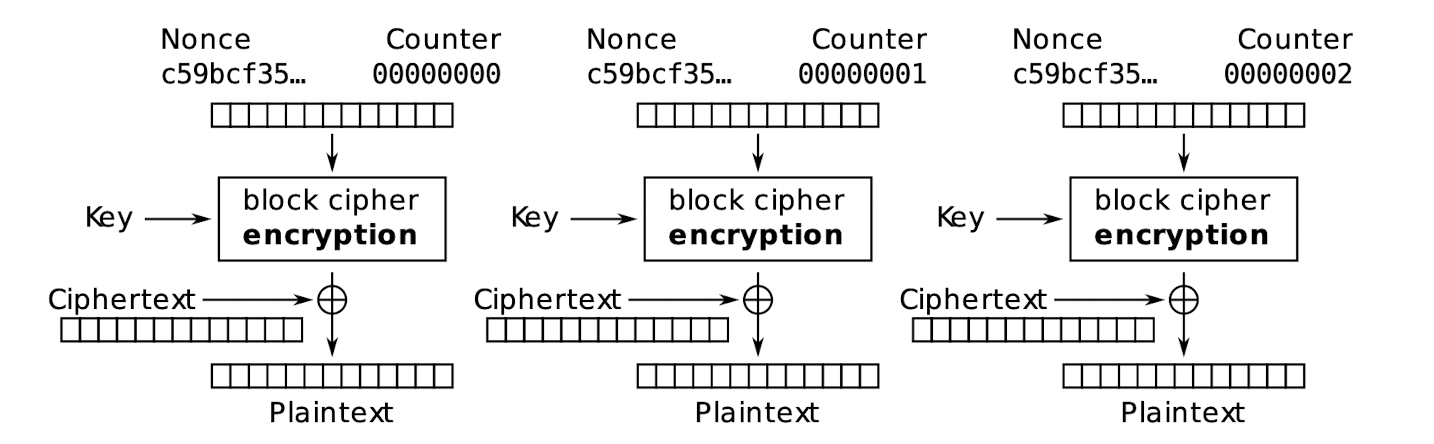

3.23.7 ==Counter (CTR) mode==

Counter (CTR) mode encryption:

Counter (CTR) mode decryption

- When decryption is the same as encryption to get the key steam, ==no decryption function is needed==.

- The Nonce is not the secret, it is public; but the key is the secret.

3.23.8 CTR mode – Properties

- Included with AES in 2001

- Proposed: Diffie & Hellman in 1979

- Counter must be different for each block

- An IV/nonce value is also used with the counter for uniqueness

- ==Concatenated or XORed with the counter==

- Software and hardware efficiency:

- Different blocks can be encrypted in parallel (multi‐processor CPUs)

- Preprocessing: the encryption part (to get the key steam, no need the plain text) can be done offline and when the message is known, just do the XOR

- Random access: decryption of a block can be done in random order, very useful for hard-disk encryption

3.23.9 Other modes

XTS mode: efficient processing of consecutive blocks within a data unit, random access, no IV

- Used for disk encryption

None of the modes presented so far ensure data integrity or authenticity: attacker can try to manipulate cipher-text

3.23.10 Recall modes of operation

ECB

$C_i=E_k(P_i)$

$P_i=D_k(C_i)$

CBC

CTR

$C_i = E_k(Nonce, Counter_i)\oplus P_i$

$P_i = E_k(Nonce, Counter_i)\oplus C_i$

3.23.11 ==Exercise==

A sequence of plaintext blocks $P_1, …, P_8$ is encrypted using AES into a sequence of ciphertext blocks. Where an IV is used, it is numbered $C_0$. A transmission error occurs and one bit in ciphertext block $C_3$ changes its value. As a consequence, the receiver obtains after decryption a corrupted plaintext block sequence $P_1^{\prime}, …, P_8^{\prime}$. For the discussed modes of operation (ECB, CBC, CTR), how many bits do you expect to be wrong in each block $P_i^{\prime}$?

ECB: all bits in $P_3^{\prime}$

==CBC==: all bits in $P_3^{\prime}$ and one bit in $P_4^{\prime}$

$P_3^{\prime} = D_k(C_3^{\prime}) \oplus C_2$

$P_4^{\prime} = D_k(C_4) \oplus C_3^{\prime}$

CTR: one bit in $P_3^{\prime}$

4. Cryptography III

4.1 Hash functions

4.1.1 Hash function: Intuition

Not the hash function used in data structures

- Hash tables, remember?

- $index = hash \; \% \; array\; size$

- Error checking (CRC)

Cryptographic hash function

- More complicated…

- Compression or sponge construction

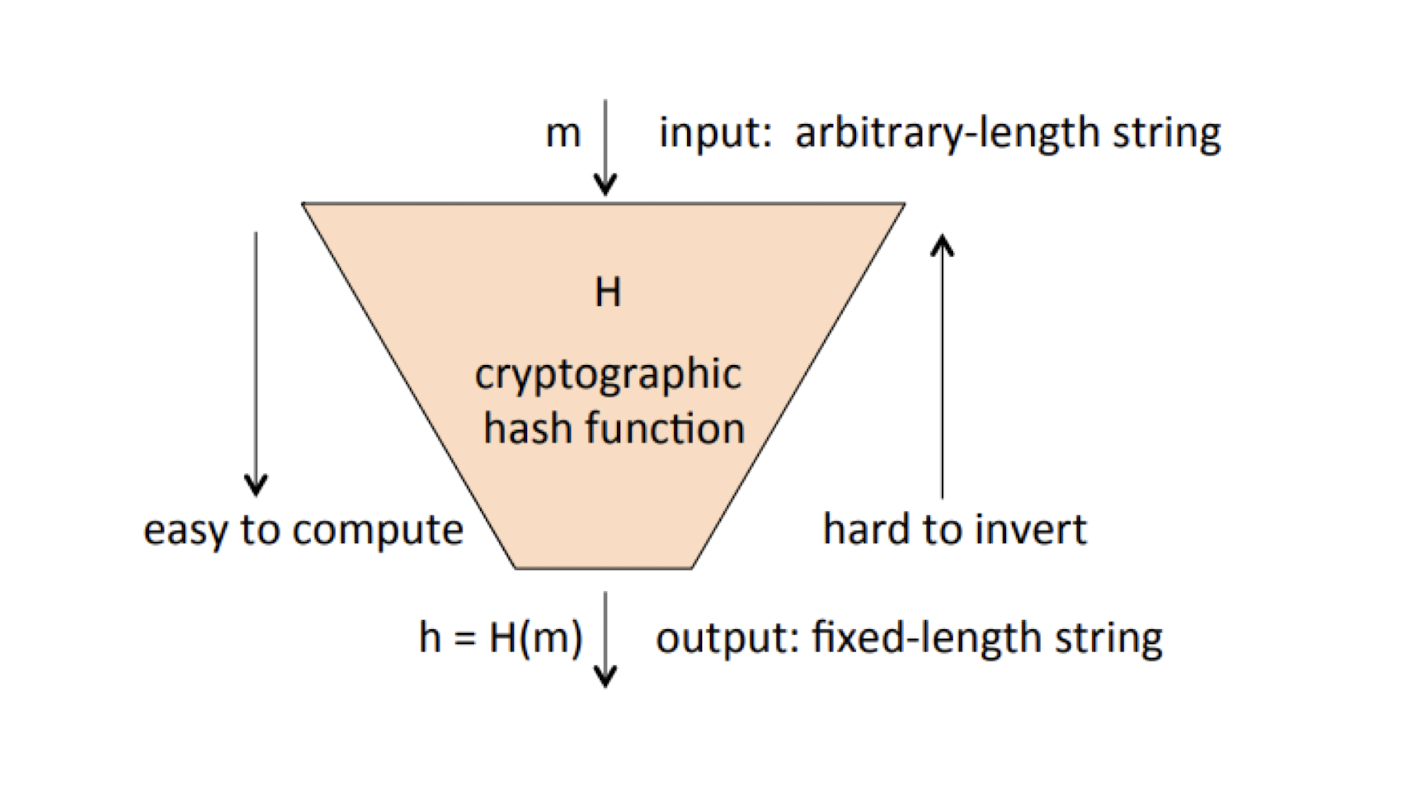

4.1.2 Hash function

$y$: Output, ==image==, “hash”, digest, fingerprint

$H$: Hash function, “hash”

$x$: Input, message, ==pre-image==

$H: {0,1}^* \to {0,1}^n$

- ==Unlimited input size==

- Could be very large, e.g., entire hard drive

- ==Fixed output size==

- $n$: small

4.1.3 Hash function illustrated

4.1.4 Hash functions

Publicly-known algorithm

- Nothing “secret” here

- ==Fully determined==

==Deterministic==

- Same input $\to$ same output

- Several algorithms

- MD4 X

- MD5 X

- SHA1 X

- SHA2

- SHA244, SHA256 (256-bit output (32 bytes)), SHA384, SHA512

- SHA3

- BLAKE/BLAKE2

4.1.5 Examples

1 | |

4.1.6 Use cases

- Fingerprint data

- Hash the same data twice (with the same hash function), get same result, compare

- Compare files without sending them

4.1.7 Desired properties

Security properties of cryptographic hash functions

- 3 main properties

- ==Pre-image resistance==

- ==Collision resistance (2 types)==

Other desired properties or consequences

- Output looks “random”

- Flipping one bit in the input changes half of the output

- ==Avalanche effect, similar to block ciphers==

- We want the hash function to be fast to compute

- Not for all applications! See next lecture

4.1.8 Fun fact: Bitcoin network computing power

The Bitcoin network is composed of 15-20k nodes

Each node has either lots of GPUS or hardware specialized in computing hash functions (SHA256)

Total computing power of the Bitcoin network as of Feb 2024:

$2^{78.2}$hashes per 10 minutes

~$2^{69}$hashes per seconds

4.1.9 ==Pre-image resistance==

==For a given hash $y$, it is computationally infeasible to find any value $x$ s.t. $h(x)=y$==

==Output is given==

- Computationally infeasible

- Input space is too big to try all possible inputs

- Note: If input space is small, property doesn’t hold!

- Example: h(“Yes”), h(“No”)

- Called one-way property: cannot “reverse” hash

- Practical world: you cannot create a new document from scratch and have its hash match whatever you like

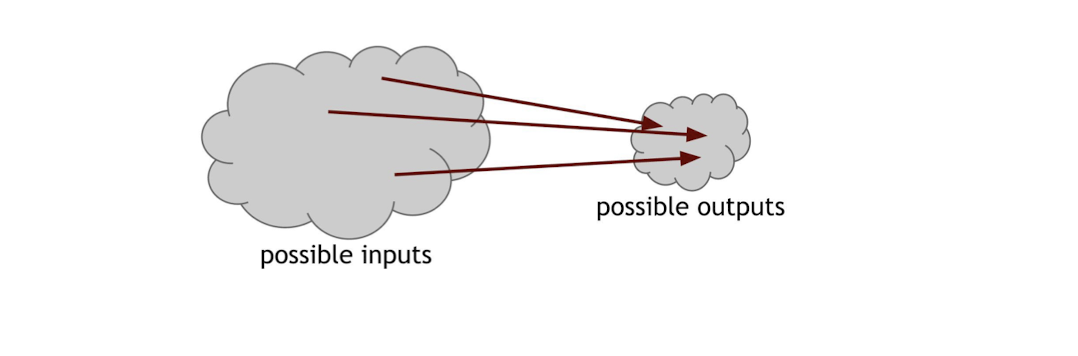

4.1.10 Collision resistance: Intuition

- Input space is much bigger than output set

- Some input values will be “mapped” to the same output value, e.g., $x\neq x^{\prime}$

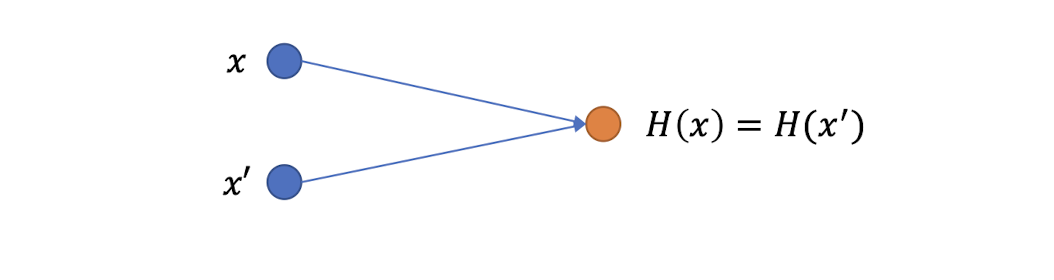

4.1.11 Collision resistance: types

- Collisions are exploited when attacking the hash function

- Two types of collision resistance:

- Either $x$ (and therefore $y$) is already chosen, find $x^{\prime}$

- HARD to do!

- Or both $x$ and $x^{\prime}$ (and $y$) can be chosen

- Simpler to break, but still difficult

4.1.12 Collision resistance: Weak

Case 1: $x$ (therefore $y$) is already chosen, find $x^{\prime}$

- HARD!

- Called “weak collision resistance“:

- weak because easy property in the design, but stronger to break

- Also called “second pre-image resistance”

==For a given input $x$ (and output $y$), it is computationally infeasible to find any value $x^{\prime} \neq x$ s.t. $h(x)=h(x^{\prime})$==

==One input is given, to find another one==

Practical world: you ==cannot alter== a document and have its ==hash stay the same==

4.1.13 Collision resistance: Strong

Case 2: Both $x$ and $x^{\prime}$ can be chosen

- Simpler to break, but still difficult

- Called “(strong) collision resistance”: Strong b/c difficult to achieve, therefore easier to break

==It is computationally infeasible to find any two distinct values $x$ and $x^{\prime}$ s.t. $h(x)=h(x^{\prime})$==

==Two are given==

- Practical world: you ==cannot create two== documents which have the ==same hash==

4.1.14 Collision resistance: “Strong”

- The strong collision resistance of MD5 has been broken in 2007, practically in 2009

- MD5 X

- Collision on SHA1 was estimated to “cost [in 2015] between 75K$ and 120K$USD”

- Achieved in 2017 by Google (see https://shattered.it/)

- SHA1 X

4.1.15 General-purpose cryptographic hash functions

You can use those hash functions for hashing content with guarantees of collision resistance and pre-image resistance:

- SHA-2 family

- SHA-3 family (Keccak, standardized in 2015), preferred

- BLAKE2 family

SHA-2 comes in several versions:

- SHA-224, 224-bit output

- SHA-256, 256-bit output

- SHA-384, 384-bit output (slightly preferred among SHA2)

- SHA-512, 512-bit output

SHA-3 also comes in different versions: SHA3-224, SHA3-256, SHA3-384, and SHA3-512

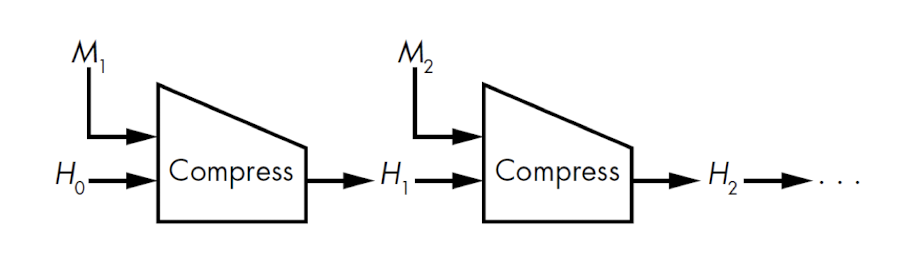

4.1.16 Internal designs

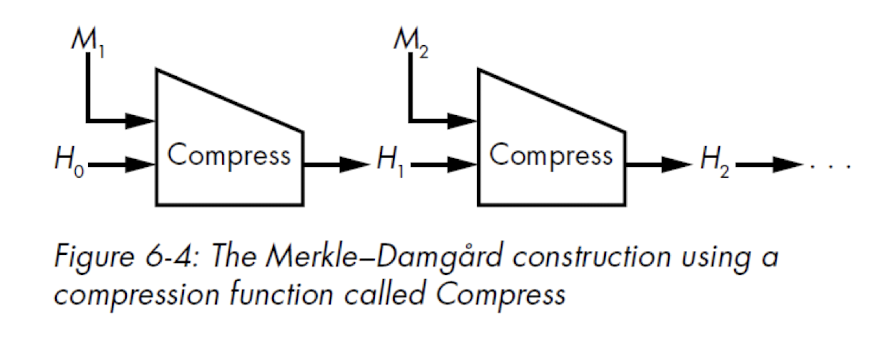

- MD5, SHA-1 and SHA-2 are based on a Merkle–Damgård construction

- Based on a collision-resistant one-way compression function, chain it up to hash the entire input

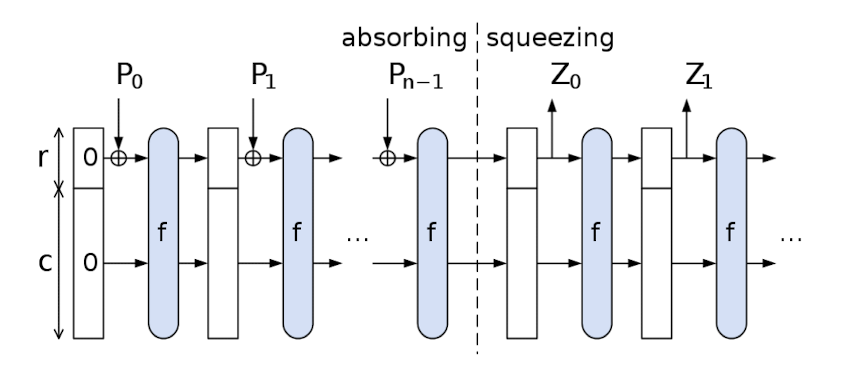

- SHA-3 uses a sponge construction

- data is “absorbed” into the sponge, result is “squeezed” out

4.1.17 Attacking collision resistance

- Weak collision resistance

- Remember: $x$ is fixed, find $x^{\prime}$

- Best attack if hash function is secure, is exhaustive search

- If input space is bigger than output space, exhaustive search of output space is the best way

- For SHA256, output space size is $2^{256}$

- After computing $2^{256}$+1 hashes, we have 100% chance to have a collision

- Unfeasible!

- After computing $2^{256}$+1 hashes, we have 100% chance to have a collision

- Collision resistance

- Remember: choose $i$ and $i_x$

- Much easier due to the birthday paradox

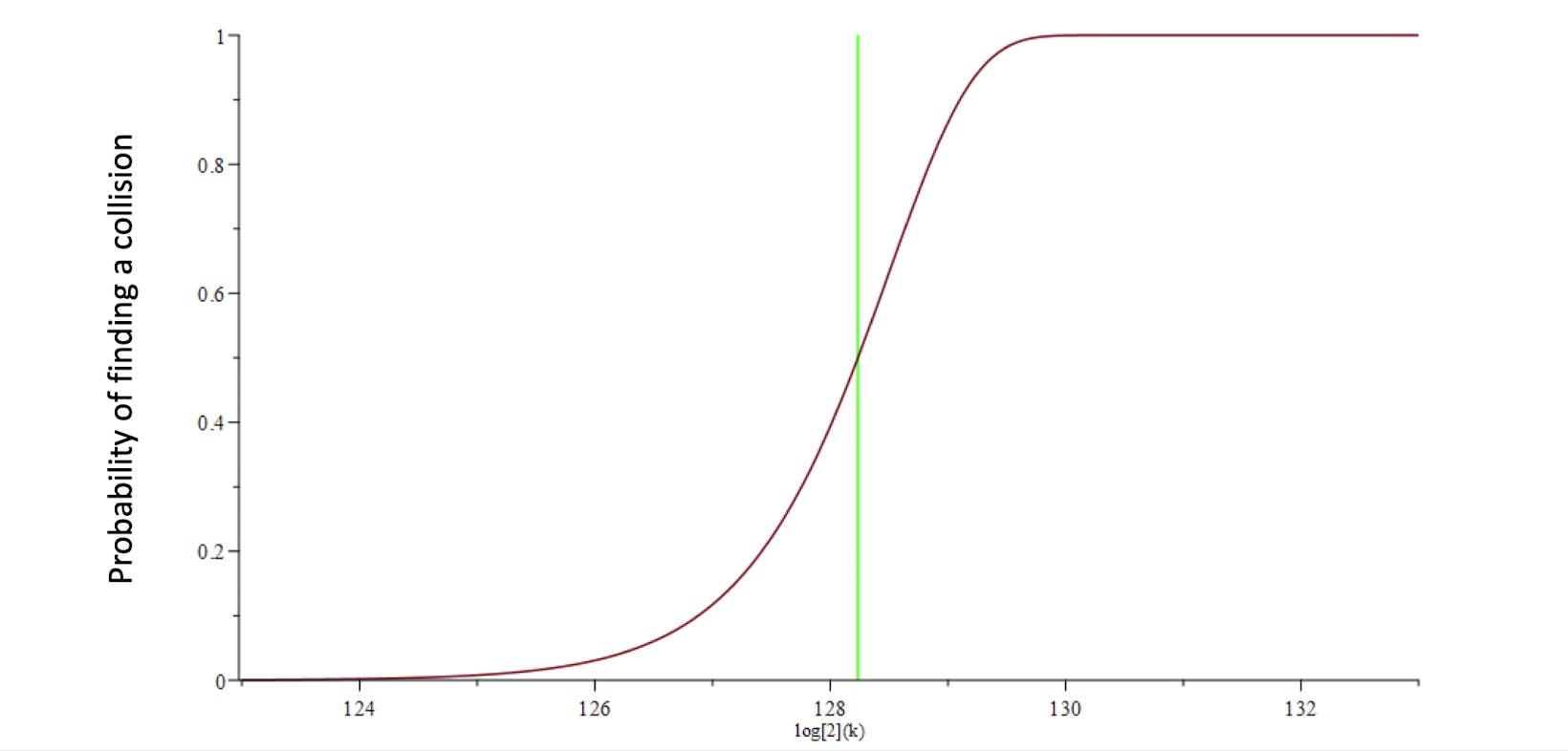

- ==For SHA256, complexity is “only” $\sqrt{2^{256}} = 2^{128}$==

- Still OK because $2^{128} \gt 2^{112}$

4.1.18 Probability of collision

- For 256-bit output (assumed equi-probable), the probability distribution of finding a collision after $k$ hashes is as follows:

4.1.19 Exercise at home

Find a “near collision” on SHA-256, with only the first $n$ bits of the output that are the same (instead of the full 256 bits)

- Hint: Store prefixes into buckets

- Try $n$ = 34

4.2 Message Authentication Code (MAC)

4.2.1 Encryption doesn’t give integrity guarantees

Common misconception:

- If Bob decrypts a meaningful message with shared key $k$, it must have originated from Alice

Consider:

- Blocks or packets of message can be re-ordered

- In some cases, plaintext does not have “meaning” or redundancy (think of a key)

4.2.2 Message authentication

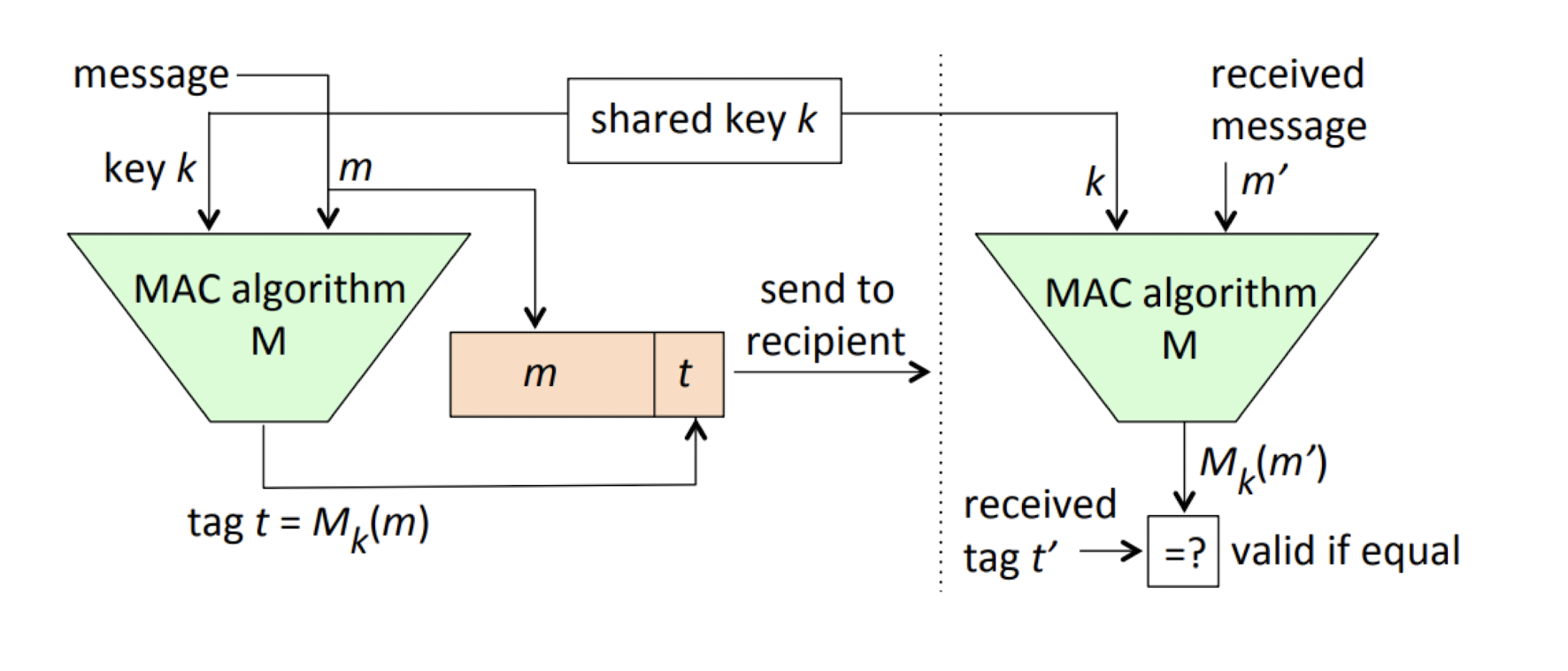

- Message authentication is the service of assuring the ==integrity of data== (i.e., that it has not been altered) and the identity of the party that ==originated the data==, i.e., data origin authentication

- This is done by sending a special data value, or tag, called a message authentication code (MAC), along with a message

- The algorithm computing the tag, i.e., the MAC function, is a special type of hash function whose output depends not only on the input message but also on a secret number (secret key)

4.2.3 MAC

- Who knows the key and the mesage is not being altered

4.2.4 Use case

- Alice sends a message to Bob, Bob wants to make sure the message has not been modified by an adversary, and truly comes from Alice

- Alice and Bob pre-share a secret $k$

4.2.5 Security properties

- An adversary who ==does not know $k$== will be:

- unable to forge a ==correct tag $t$== for a given message $m$

- unable to generate ==any new pair $(m, t)$== of a matching message and tag (for an unknown $k$ in use)

- ==MACs do not provide non-repudiation==

- non-repudiation: I can not deny that I sent the message

- Do not know who exactly sent the message

4.2.6 MAC

A MAC is often a keyed hash function

- Can be based on a block cipher too

Different possible constructions:

- MAC = $h(k||m)$, $||$: concatenation

- but hash extension attacks on most hash functions

- Given a hash, we could compute the hash of a longer message

- e.g. Given $h(k||m)$, we can calulate $h(k||m||m_2)$

- but hash extension attacks on most hash functions

- MAC = $h(m||k)$

- but has other security issues

- In practice: ==Hash based MAC==:

- HMAC = $h((k^{\prime} \oplus \text{opad})|| h((k^{\prime} \oplus \text{ipad})||m))$

- ipad: inner padding, opad: outer padding

- SHA1, SHA2

- $K^{\prime}=h(k)$ if $k$ is larger than block size, $k$ otherwise

- Prevents hash extension attacks: no way to extend the outer part

- Two calls to h: inefficient?

- HMAC = $h((k^{\prime} \oplus \text{opad})|| h((k^{\prime} \oplus \text{ipad})||m))$

- MAC = $h(k||m)$, $||$: concatenation

4.2.7 HMAC in practice

- Used for ==authenticated encryption==

- Encryption alone does not provide authentication

Used for ==key derivation== (from password)

- PBKDF2 (WiFi, WPA/WPA2)

No need to use the HMAC construction with SHA3 (not vulnerable to hash extension)

- MAC = $SHA3(k||m)$

- Could but not necessary

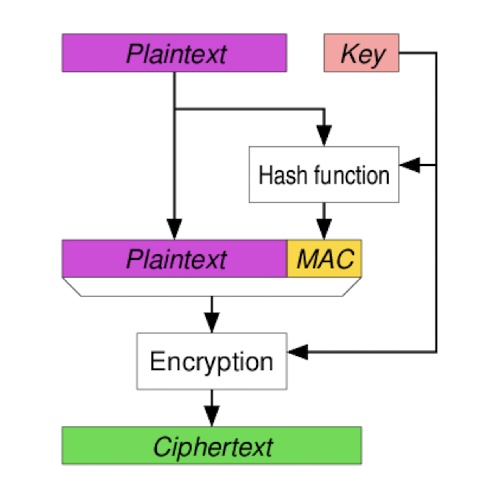

4.2.8 Authenticated encryption constructions

How to use MACs along with encryption?

MAC-then-Encrypt (MtE)

- HMAC the plaintext

- Encrypt the plaintext with the HMAC E

Send the result

used in SSL/TLS

- $h_{k1}(m)$

- $E{k2}(m||h{k1(m)})$

- Two different keys for encryption and MAC

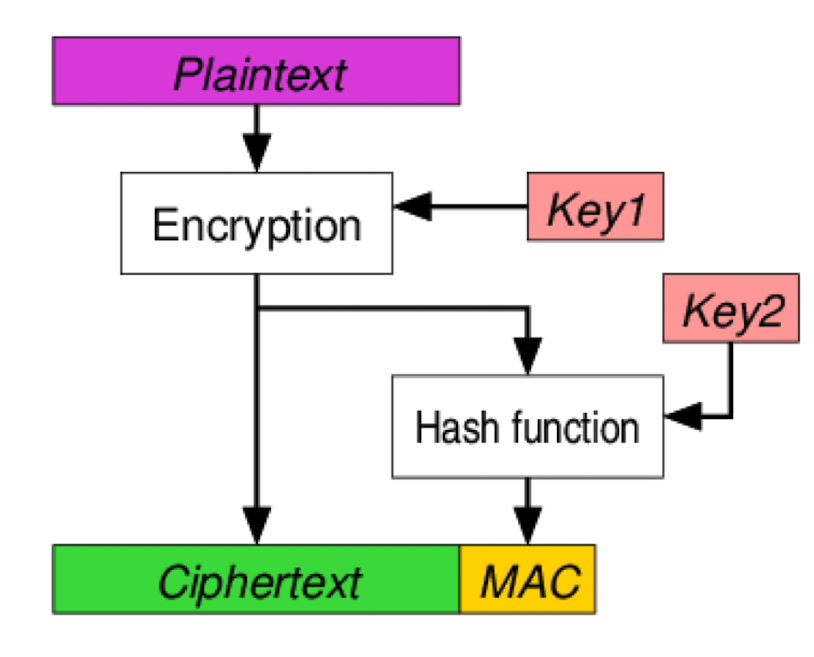

- Encrypt-then-MAC (EtM)

- Encrypt the plaintext E

- HMAC the ciphertext ℎ

- Send both E

- used in IPsec, TLS 1.2+

- $E_{k2}(m)$

- $h{k1}(E{k2}(m))$

- $E{k2}(m)||h{k1}(E_{k2}(m))$

- Usually preferred

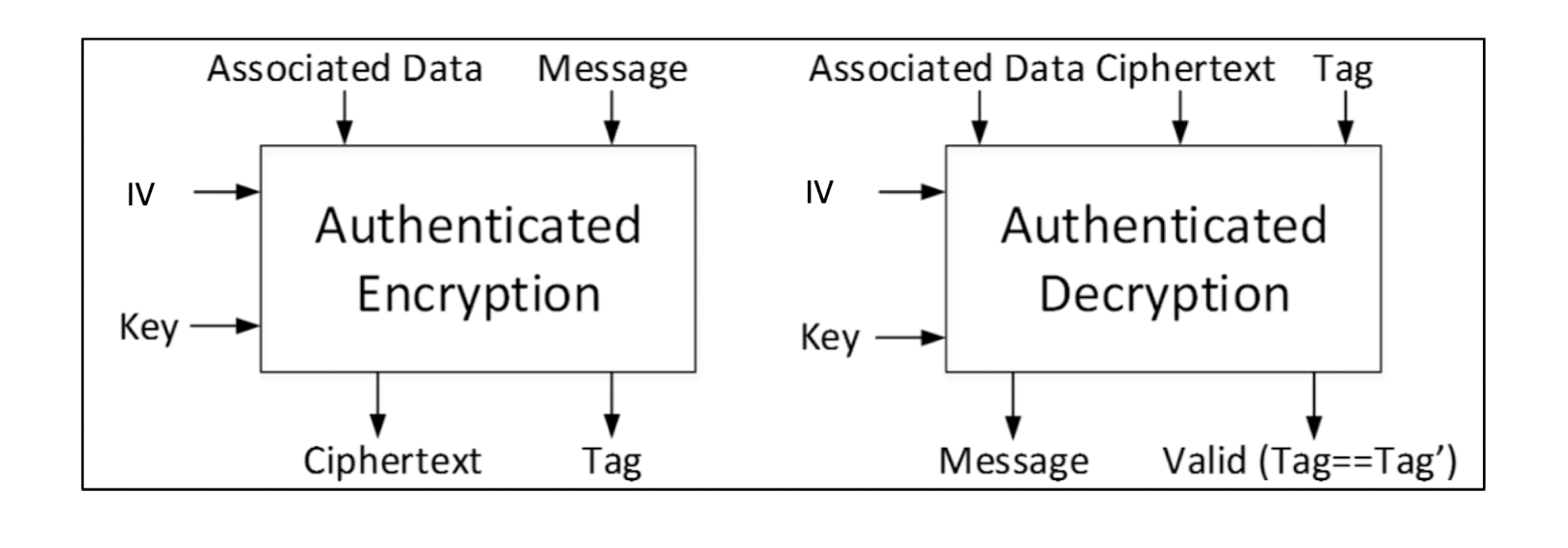

4.2.9 Authenticated encryption modes

- Encryption alone ensures confidentiality of a message, but who sent it? Was it modified?

- You saw what can happen in CBC: how to prevent it?

- AE produces a ciphertext + authentication tag = encryption + MAC, all-in-one

4.2.10 AEAD

- To authenticate data along with the authenticated encryption, some ciphers support associated data

- Authenticated Encryption with Associated Data

- Associated Data

- Not for encryption, but for authentication, like a public data

- Like a context

- Examples

- GCM mode of operation

- Sensitive to IV reuse

- CCM mode of operation

- GCM mode of operation

5. Password Security

5.1 Passwords

Users cannot remember very long secrets such as keys used in the protocols seen previously

- Passwords are often used for user authentication (e.g., ilovesuperbowl, Lmb7f5*0aDt/|V@)

Lot of research to replace passwords but no successful alternative yet

- Other factors (biometrics, hardware tokens) have failed to replace passwords completely

- They are mostly used as second factors

5.2 Advantages of passwords

- Familiar to people

- You can have many different ones

- Difficult to coerce

- In court: please reveal your password to your encrypted disk?

- Nothing to carry

- Easy to revoke / replace

- Accessibility

- Easy to deploy

- Low cost

- No proprietary aspects / patents

- Doesn’t require a trusted third party

- Not linked to an individual

5.3 Authentication

- Something you know

- Password or PIN

- Something you have

- Smart card

- Private key (of a public-private key pair)

- Phone (running particular software)

- Something you are

- Biometrics (e.g., iris or fingerprint)

- Somewhere you are

- Location-limited channels

- Someone you know (social authentication)

- Someone vouches for you

- You can identify people you should know

- Some system vouches for you

- Single sign-on

- PKI Certificate Authorities

5.4 Disadvantages of passwords

- Predictability

- 123456

- iloveyou

- 5201314

- Interference between multiple passwords

- Limits of human memory

- Requiring a large portfolio of passwords

- Easy to deploy incorrectly / naively

- System administrators

- Users

5.5 Deploying passwords

Logging into an online or local account

/etc/shadowon Linux%windir%\system32\config\{SAM,SYSTEM}on Windows- Hashed passwords in database

Encrypting a local file using a password

- Password-Based Key Derivation Functions (PBKDF)

- Key used to encrypt data often stored in file

5.6 How fast is SHA-256?

Inefficient single-threaded SHA-256 computations

- 368,824 H/sec

1x NVIDIA Geforce RTX 3090

- 9,502,700,000 H/sec

210x Bitcoin Miner S19 XP Hyd. (255 TH/s)

- 53,550,000,000,000,000 H/sec

5.7 Dictionary attack

Can we find $pass^\prime$ s.t. $h(pass^\prime)=h(pass)$?

- Cannot “invert” the hash function

Can only “brute-force”

8 lowercase characters => $26^8$ ≈ 208 billion hashes to compute or store (super large!)

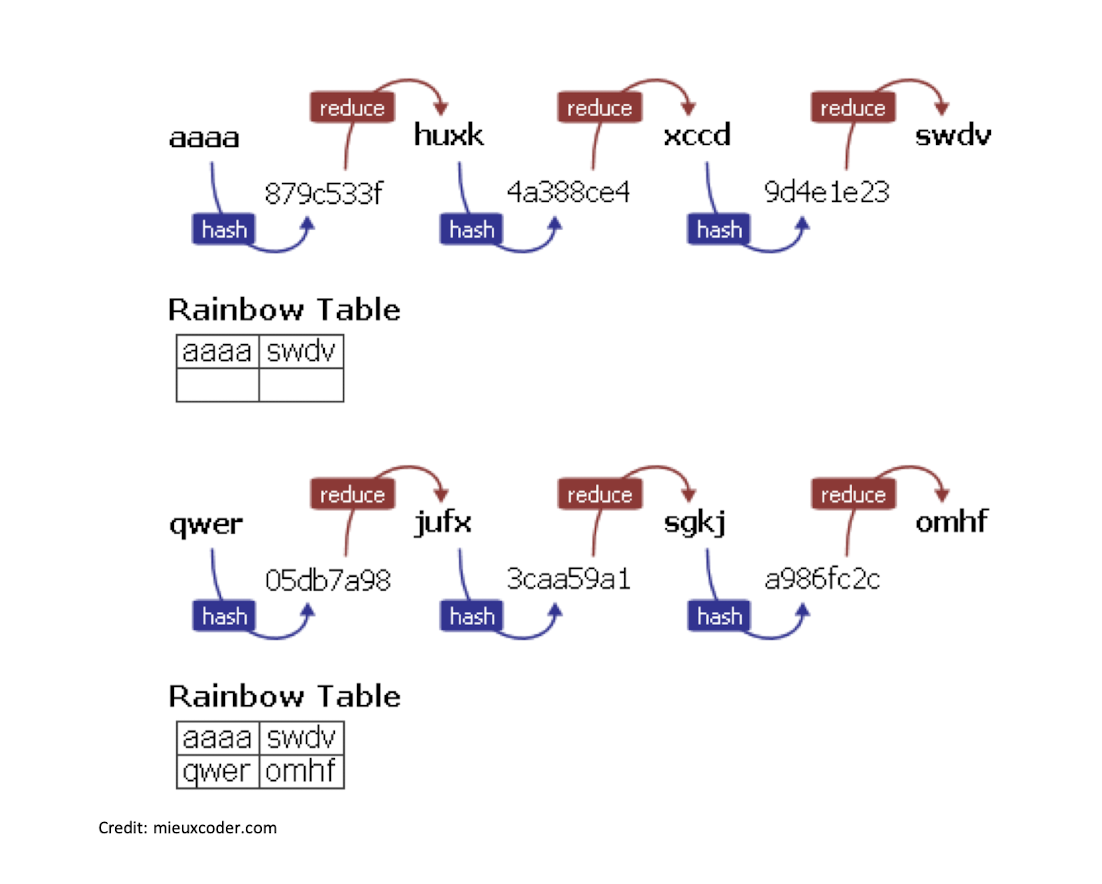

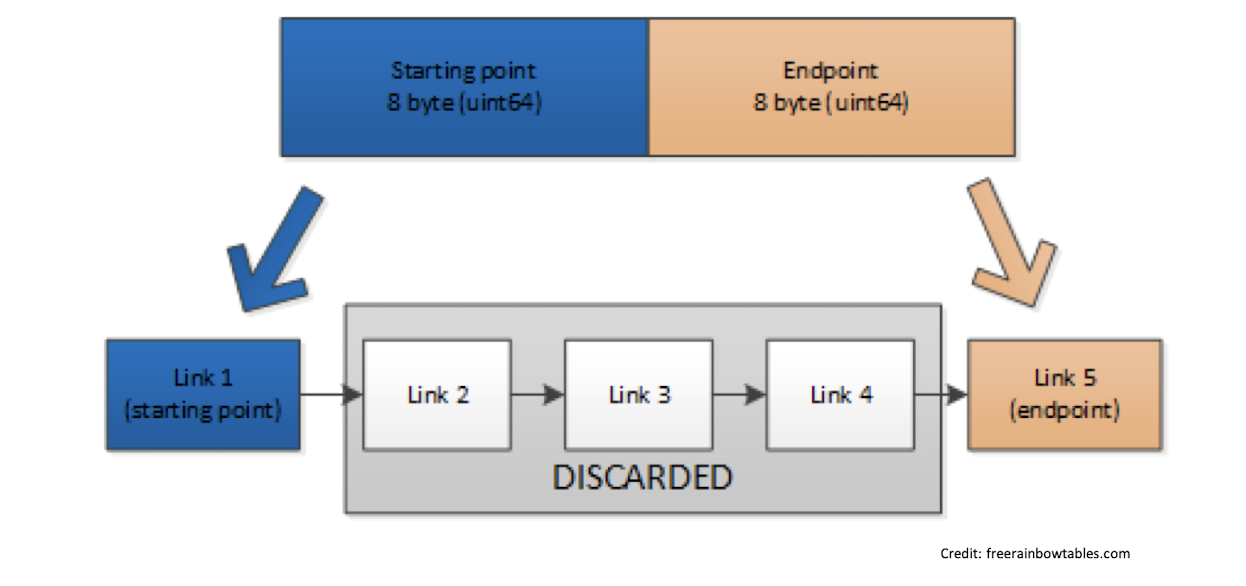

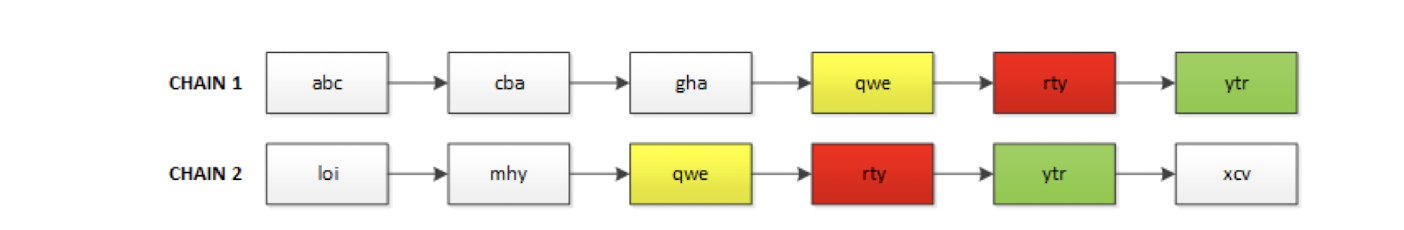

5.8 Rainbow tables (1/4)

- ==Tradeoff between space and time==

- Storing the daata + do some of the computing.

.rt (RainbowCrack) format uses 8 bytes

Merging (=> ==index-based== reduction)

- Due to the ==Collisions== when reducing the hash,

- reduce function is not collision-resistant

- To avoid collisions, we may use the different reduction functions base on the different index in the chain.

False alarms (inevitable)

- abcdef $\to$ 20DEE8 $\to$ baheyt $\to$ C076CB $\to$ pamvnc $\to$ ACCD5E $\to$ hellow

- abcdeg $\to$ B1DC42 $\to$ pamvnc $\to$ ACCD5E $\to$ pocade $\to$ 0AA22C $\to$ oiebsf

- Due to different hash reduce to the same value. lead to the wrong chain.

- I thought the image is found, but it is not.

Credit: freerainbowtables.com

5.9 Counter brute-force and rainbow tables

- Use salt

- $\text{hash} = h(\text{salt}||\text{password})$

- $\text{salt}$ is ==random and not a secret==

- Can be database-specific or user-specific

- Difference?

5.10 Best practices for storing passwords

- Passwords should never be stored in plaintext!

- Passwords should be hashed

- How? Which hash function? MD5?

- Hash functions designed for efficiency (e.g., MD5, SHA256)

- Not good

- The computationis ==too fast==, the attacker can compute the hash very fast

- Password-specific hash functions (e.g., ==bcrypt==, scrypt, PBKDF2, ==Argon2==)

- ==Slow, hard to compute==

5.11 Bcrypt

- BCrypt hashed passwords and secrets have a ==72 character limit==!

- If data is longer, it will be ==truncated==

- Better ==hash with, e.g., SHA-256 or SHA3-256, before bcrypt==

- PHP

1 | |

- Python:

1 | |

1 | |

5.12 Entropy (random passwords)

- For random passwords, information entropy is defined as:

Consider alpha-numerical ==random== passwords (lowercase/uppercase letters and digits, character set size of $N = 26 + 26 + 10 = 62$) of length $L = 8$.

There are $N^L = 62^8 = 2.2 \times 10^{15}$ possible passwords of this type.

- The information entropy of such a password is $H = \log_2(N^L) = 47.6$ bits.

e.g.

111111: low information, can be compresseda1b2c3d4: high information, can not be compressed easily

==But this is only suitable for the random passowrd, the the human is not good at generating the random password, but more meaningful.==

- we may use the ==guessability==.

5.13 More issues

In most cases, password protection is as strong as the password itself.

What can make a password weak?

- Users ignore the real risks, choose simple ones

- Users think “nobody can find this password”

- Too many passwords to memorize $\to$ password reuse

- Big companies do not always store passwords securely, and

- Databases leak: not using the bcrypt

- Cracking speed increases with time in offline attacks: just using the hash, not the online website

- Cracking algorithms are getting more efficient

- Botnets can be used for online attacks

- …

5.14 How to improve the strength of user-chosen passwords?

Password policies

- Force users to comply with stringent requirements that are supposed to prevent weak passwords, but annoy users.

Password-strength meters

- Show a meter to users that evaluates the strength of their password and encourages better score.

5.15 Are password meters effective?

- Consequence on passwords:

- Practical implementations:

- Very naïve algorithms

- Inconsistent among websites

- Often tell a password is strong when it is not

Good password meters: ZXCVBN, by Dropbox

5.16 Threats to password security

- Phishing attacks

- Shoulder surfing (observation)

- Poor implementation / deployment

- Online attack against live system

- Rate-limiting, e.g. CAPTCHA

- Attack against password-protected file

- Offline attack against stolen database

5.17 Password Authentication

Typical issues that need to be addressed:

- how to get the password to the user,

- Forgotten or reset passwords,

- password guessing,

- protection of the “password knowledge” on the system side

- Dangers

- User accounts without passwords.

- Unchanged default passwords.

- Badly chosen passwords – dictionary/brute force attacks.

- Passwords stored or transmitted in the clear.

- System used as “oracle” to provide correct password

- Users forget must reset passwords

- infrastructure for re-issuing passwords can be quite expensive if it has to be truly secure

- If many users must reset at once can be major failure

5.18 Password File Access Control

- can block offline guessing attacks by denying access to encrypted passwords

- make available only to privileged users

- often using a separate shadow password file

- still have vulnerabilities

- exploit O/S bug

- accident with permissions making it readable

- users with same password on other systems

- access from unprotected backup media

- sniff passwords in unprotected network traffic

5.19 Limit validity of password

- Limit usage of easy passwords

- ==Set default password==

- ==Change default password to unique, unguessable value==

- Limit password validity

- Expiry dates for passwords forces users to change passwords regularly

- Practice now deprecated!

- Limit attempts of testing password validity:

- Monitor unsuccessful login attempts and react by locking user account (completely or for a given time interval) to prevent or discourage further attempt

- Inform users

- display time of last login and number of failed login attempts since

5.20 Limitations impacts Usability

- Default passwords are “printed” in system manual

- Hard to make them different for every system!

- Users are best at memorizing passwords they use regularly but not when used only occasionally

- Do not change passwords before weekends or holidays

- Limits apply to all users simultaneously

- individual failures become massive failures

- Do not change all users passwords on the same day

5.21 Bootstrapping authentication

- Passwords are secrets shared between user and system

- “The” user is whoever knows the secret

- How do you bootstrap a system so that the password ends up in the right places, but nowhere else?

- In an enterprise, users can collect their password personally.

- In Web applications you want to deal with remote users.

5.22 Resetting Passwords

- When setting up a new user account some delay in getting the password may be tolerated.

- If you have forgotten your password but are in the middle of an important task you need instant help.

- The procedures for resetting a password are the same as mentioned previously, but now instant reaction is desirable.

- In global organisations a hot desk has to be available round the clock.

- Proper security training has to be given to personnel at the hot desk

- e.g. call back

5.23 Spoofing Attacks

- When the user cannot check who will receive the password, spoofing attacks are possible:

- Attacker starts a program that presents a fake login screen and leaves the computer.

- Next user coming to this machine enters username and password; these are stored by the attacker.

- Login is aborted with a (fake) error message and the spoofing program terminates.

- Control is returned to the operating system which now prompts the user with a genuine login request.

5.24 Countermeasures

- Mutual authentication

- The system has to authenticate itself to the user.

- Easier to do if the “user” is not a human but a program working on behalf of the user

- Trusted path

- guarantees that user communicates with system (e.g. the operating system and not with a spoofing program)

- E.g. secure attention key CTRL+ALT+DEL in Windows invokes the operating system logon screen.

- guarantees that user communicates with system (e.g. the operating system and not with a spoofing program)

- Log monitoring

- Displaying number of failed (or successful) logins may tell the user that something he didn’t intended has happened.

5.25 Key Observations

- A password does ==not authenticate a person==.

- Successful authentication only implies that the user knew a particular secret.

- There is no way of telling the difference between the legitimate user and an intruder who has obtained that user’s password.

- There is a case of computer misuse where somebody has logged in using your username and password.

- Can you prove your innocence?

- Can you prove that you have not divulged your password?

- You cannot log in for some reason but there is an important task to do that requires authentication

- Can your secretary can log in for you and do all boring tasks as if he was you?

- If you are wounded in combat can you pass the password to the second in command so he can take your place?

5.26 TPM

TPM: Trusted Platform Module

A hardware-based standardized security solution

Identification of devices: Prior to the release of the TPM specification, devices were mostly identified by MAC addresses or IP addresses—not security identifiers.

Secure generation of keys: Having a hardware random-number generator is a big advantage when creating keys. A number of security solutions have been broken due to poor key generation.

- True random number generator

Secure storage of keys: Keeping good keys secure, particularly from software attacks, is a big advantage that the TPM design brings to a device.

NVRAM storage: When an IT organization acquires a new device, it often wipes the hard disk and rewrites the disk with the organization’s standard load. Having NVRAM allows a TPM to maintain a certificate store.

Device health attestation: Prior to systems having TPMs, IT organizations used software to attest to system health. But if a system was compromised, it might report it was healthy, even when it wasn’t.

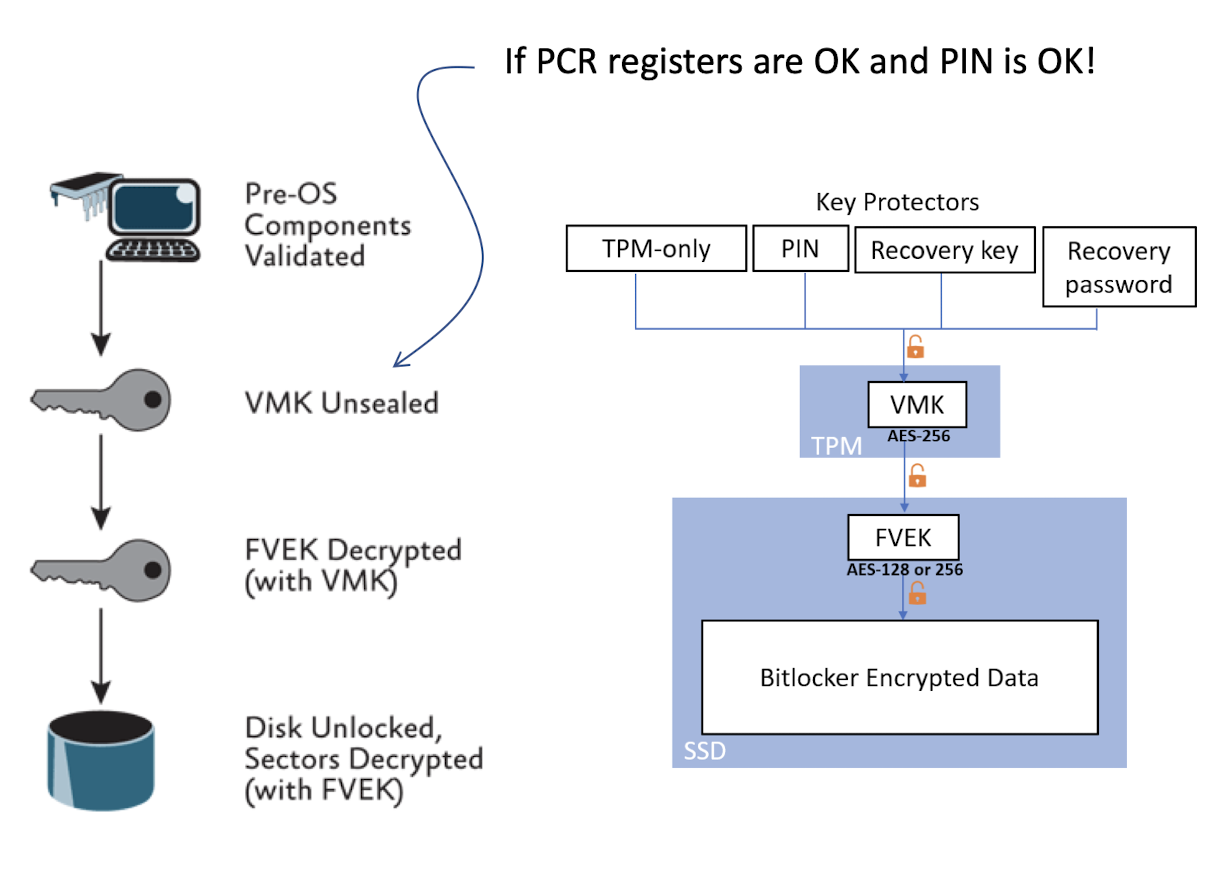

seal/unseal operations (based on the computer status + PIN) to encrypt/decrypt the data

5.27 BitLocker

BitLocker hashes the user-specified personal identification number (PIN) by using SHA-256, and the first 160 bits of the hash are used as authorization data sent to the TPM to seal the volume master key. The volume master key is now protected by both the TPM and the PIN. To unseal the volume master key, you are required to enter the PIN each time the computer restarts or resumes from hibernation.

If ==PCR registers== are OK and ==PIN== is OK!

- The PIN may be short, the TPM will lock the device after a few wrong attempts, so the PIN is still secure.

5.28 More about passwords

- Multi-factor authentication

- Biometrics

- Single sign on

- FIDO

- Next slide deck

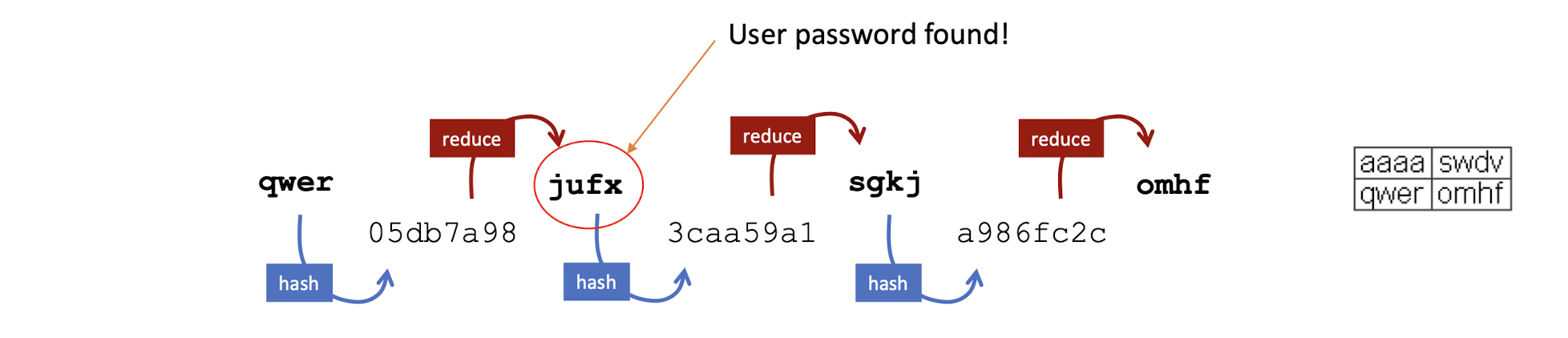

5.28 Find password in a rainbow table

Reduce function:

- Based on the input space $N$, like aaaa to zzzz

- $R(h) = Input \; List[h \; \text{mod} \; N]$

Given user password hash “3caa59a1”, reduce it

Is the reduced hash an endpoint in the table?

No, hash & reduce

Is the reduced hash an endpoint in the table?

Yes, ==recompute the whole chain== from the start point e.g. $qwer$

10k to 100k length of the chain.

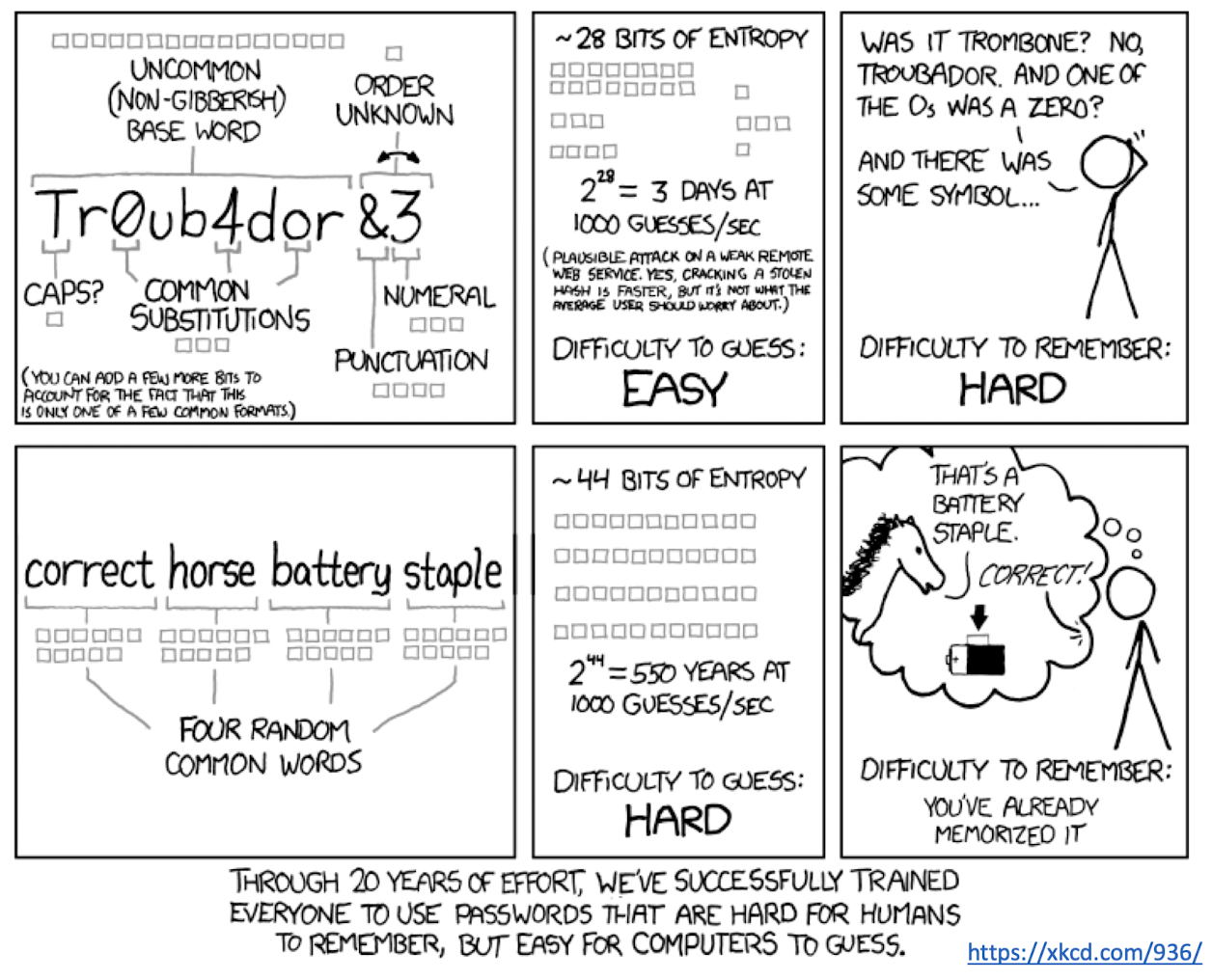

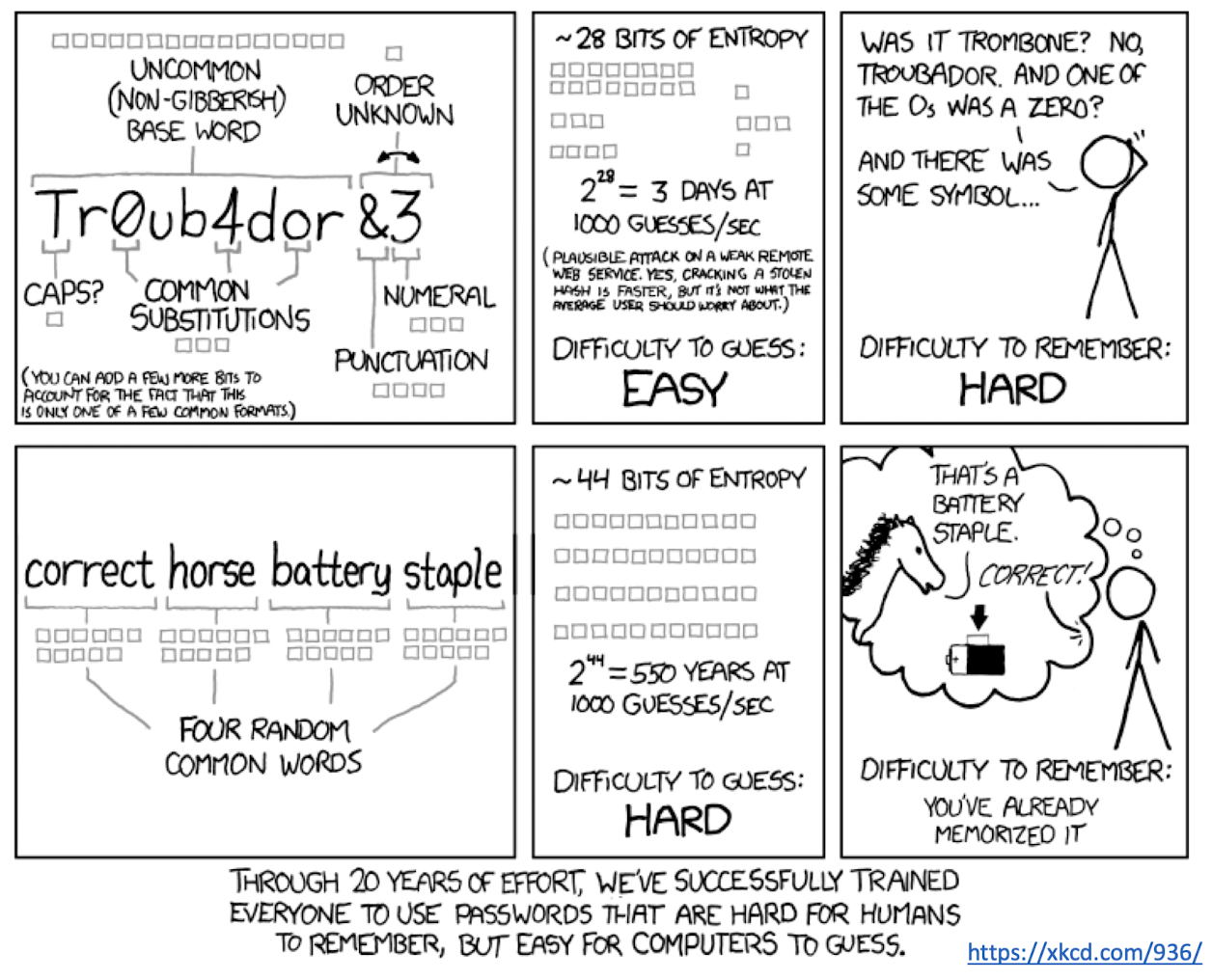

5.29 Strong passwords

- Does having complex composition requirements make passwords stronger?

- Lowercase, uppercase, digits, letter…

- Old (before 2017) NIST guidelines suggest yes

5.30 Passphrase

Passphrase are composed of several randomly selected words from a known dictionary

Overall complexity is $\text{wordlist size}^{\text{number of words}}$

$10^{13}$ combinations for a list of 2000 words and 4 chosen words, same as 7-character random password with digits and symbols

5.30 Passphrase

Passphrase are composed of several randomly selected words from a known dictionary

Overall complexity is $\text{wordlist size}^{\text{number of words}}$

$10^{13}$ combinations for a list of 2000 words and 4 chosen words, same as 7-character random password with digits and symbols

5.31 New NIST guidelines

- Newer NIST guidelines on Digital Identity – Authentication and Lifecycle Management (2017)

- Deprecates requirements on password complexity rules

- ==No more uppercase, digits, symbols counted towards strength!==

- Deprecates forced arbitrary password changes!

- ==No more changing passwords after 90days…==

- Considered ==harmful== to the quality of passwords

- Password strength ==only dependent on length==

- Min ==8 characters== for user-chosen passwords

- Comparison against simple password ==dictionaries of blacklisted passwords==

- Use of ==password-strength meter==

- ZXCVBN

5.32 HOTP/TOTP

One-time passwords ( $\neq$ one-time pad)

- $C$ is a counter, incremented each time

In TOTP, $C=\lfloor\frac{T}{T_X}\rfloor$

- Time-based OTP

- $T$: current timestamp

- $T_X$: length of one time duration (30sec)

- Setup: need to pre-share K between user and server

Disadvantages

- ==Plaintext code== is displayed and typed by user manually

- User needs to verify domain name (==phishing==?)

- Plaintext code response vulnerable to interception and MITM attack if user has been phished by malicious website

- ==Shared secret== may be stored in plaintext on server

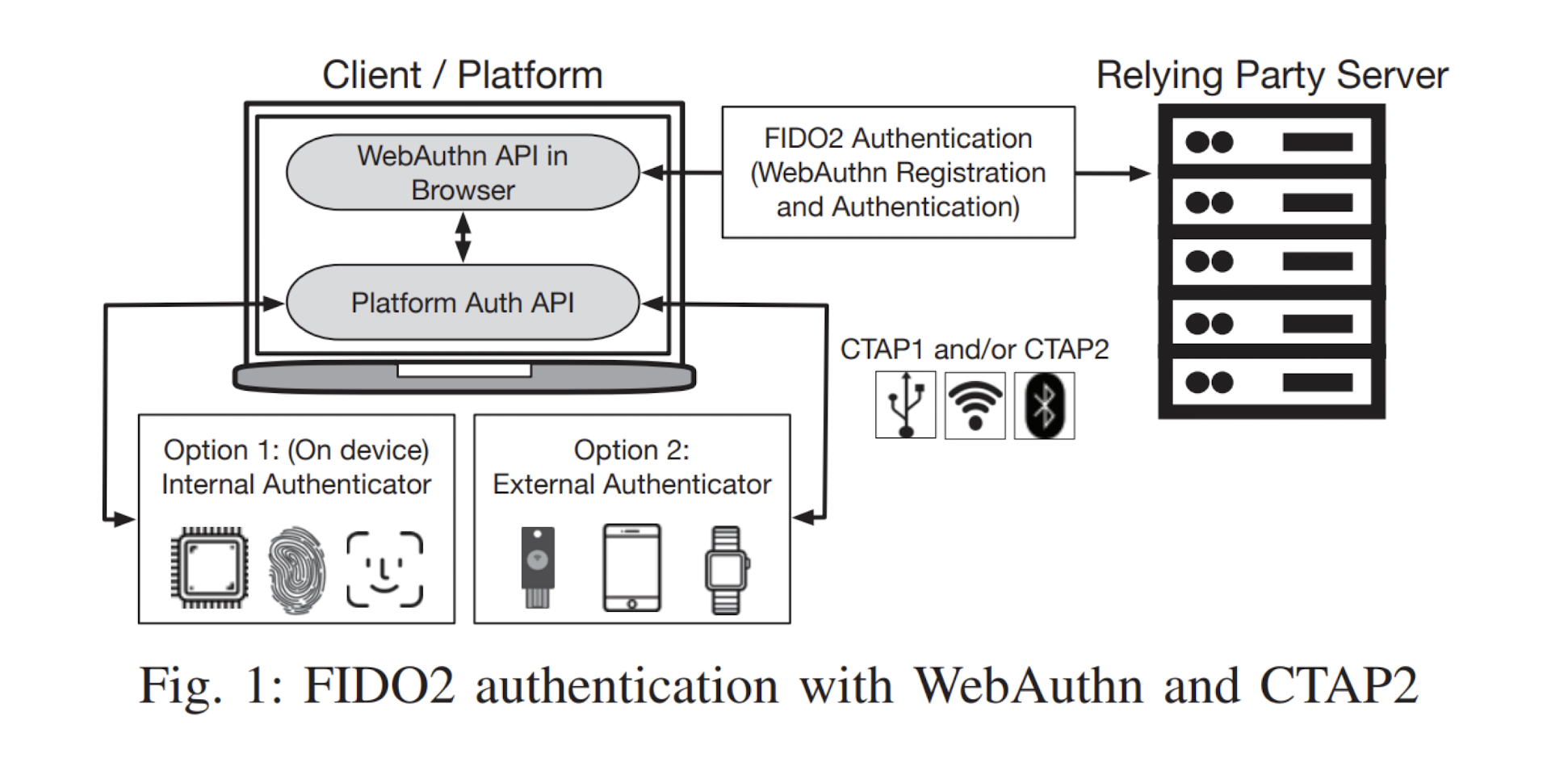

5.33 FIDO2 (formerly U2F)

- Protocol for strong authentication on the web

- FIDO2 = W3C Web Authentication (WebAuthn) standard + FIDO Client to Authenticator Protocol 2 (CTAP2)

- Open web authentication standard (WebAuthn)

- Supported by all modern browsers and native implementations, like on Android and Windows

- Relies on a cryptographic authenticator, e.g., smartphone or a hardware security key

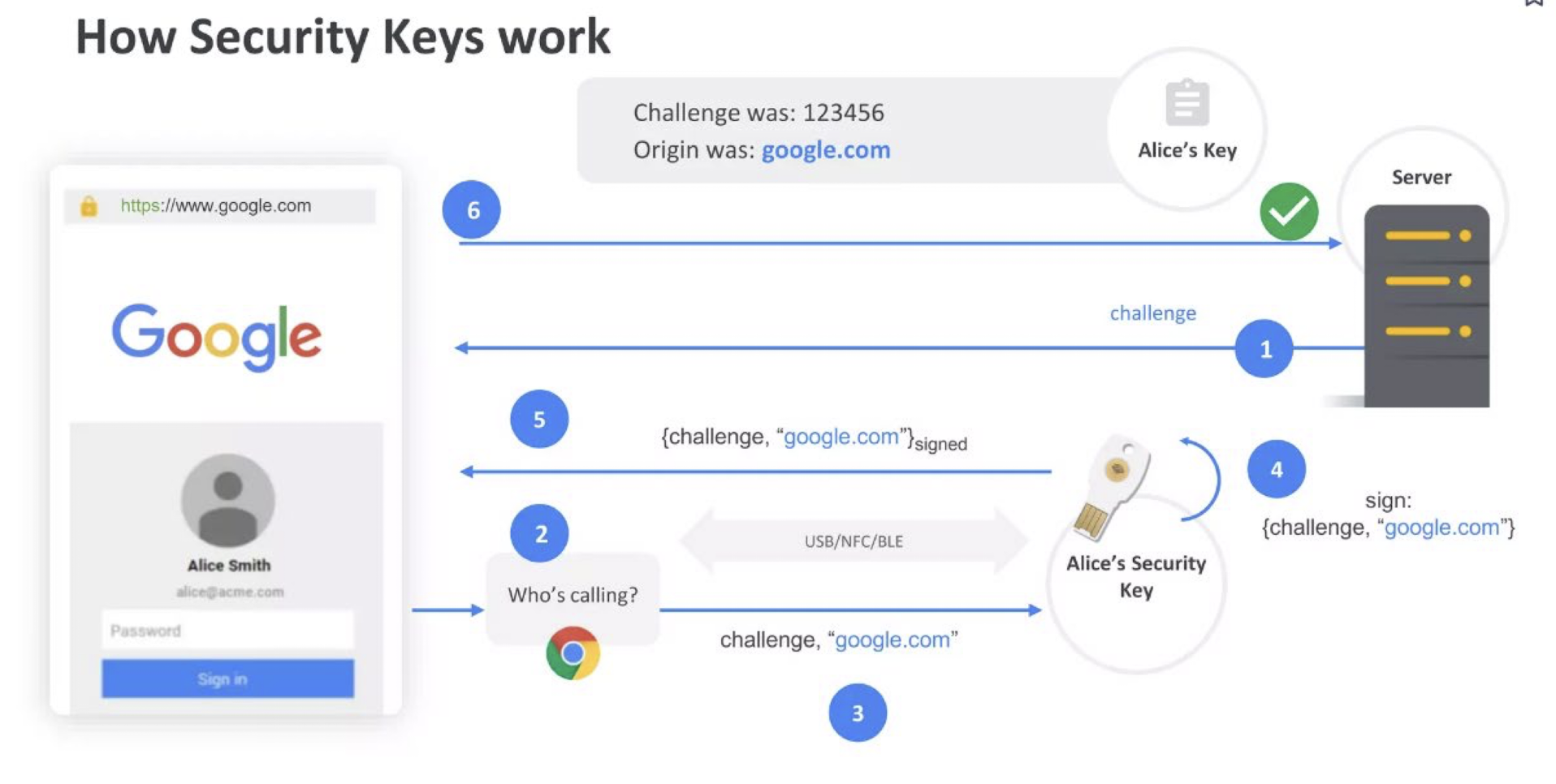

5.34 Authentication flow

- To avoid phishing, the domain name is not validated by the security key.

5.35 Registration/Authentication

- To register on a web service, the user’s authenticator (hardware) creates a public-private keypair unique to that website

- The authenticator can either be an external hardware key (roaming authenticator) connected to a device via USB, NFC, or Bluetooth, or a trusted module on the user’s existing computer or smartphone (platform authenticator)

- To sign into a web service, the authenticator signs a cryptographic challenge received from the server

- The server then verifies the signature using that user’s public key, received during account registration

- In contrast to password-based authentication, FIDO2 resists phishing, replay attacks, and breaches of the server

6. Authentication Protocols

6.1 Intro to public key cryptography

- Probably the most significant advance in the history of cryptography (~3000 years), with high impact on society

- Very recent in history (vs. symmetric encryption)

- 1970s

6.1.1 Problems with symmetric keys

- Pre-share key with intended recipient, not practical

Make sure the key is not intercepted, difficult

- Secure(?) channel

One symmetric key by pair of (sender, recipient), not scalable

- What if you could “split” this key into two pieces, one that you showed to everyone, one that you kept to yourself?

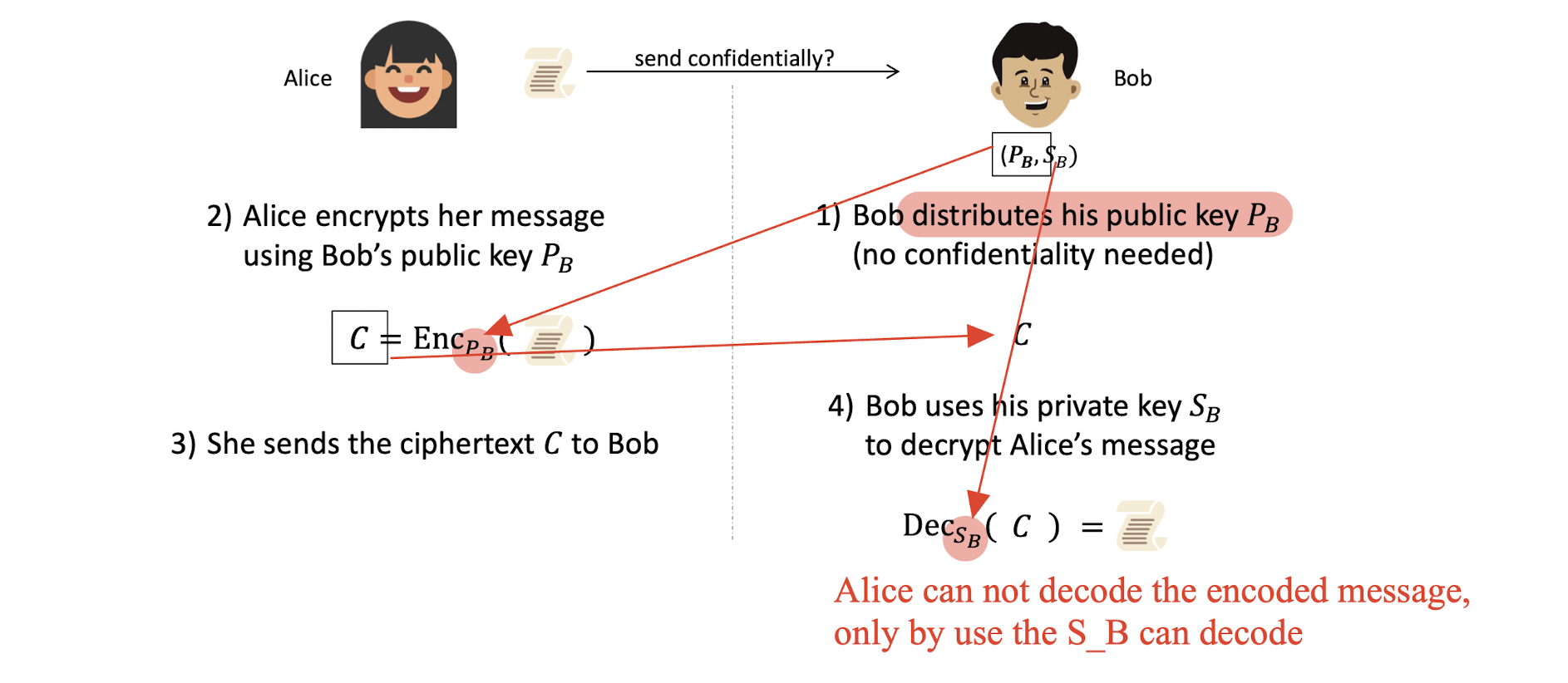

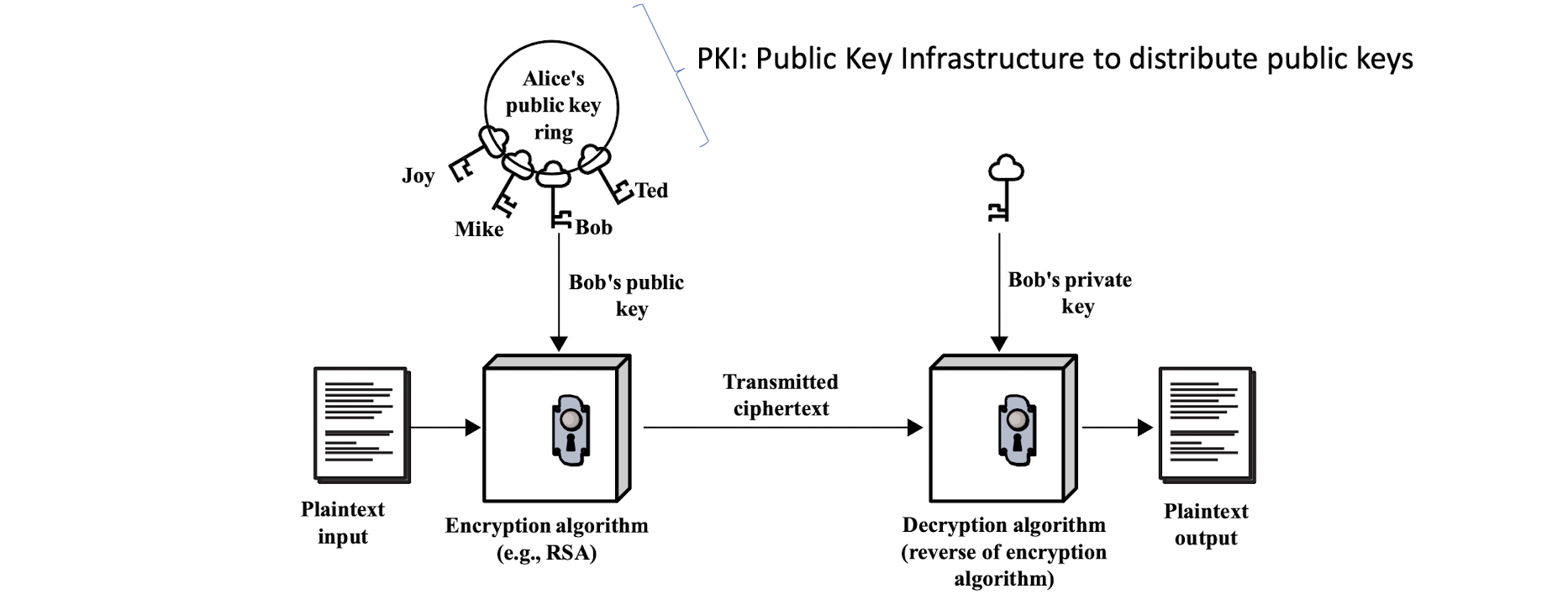

6.1.2 Public-key cryptography

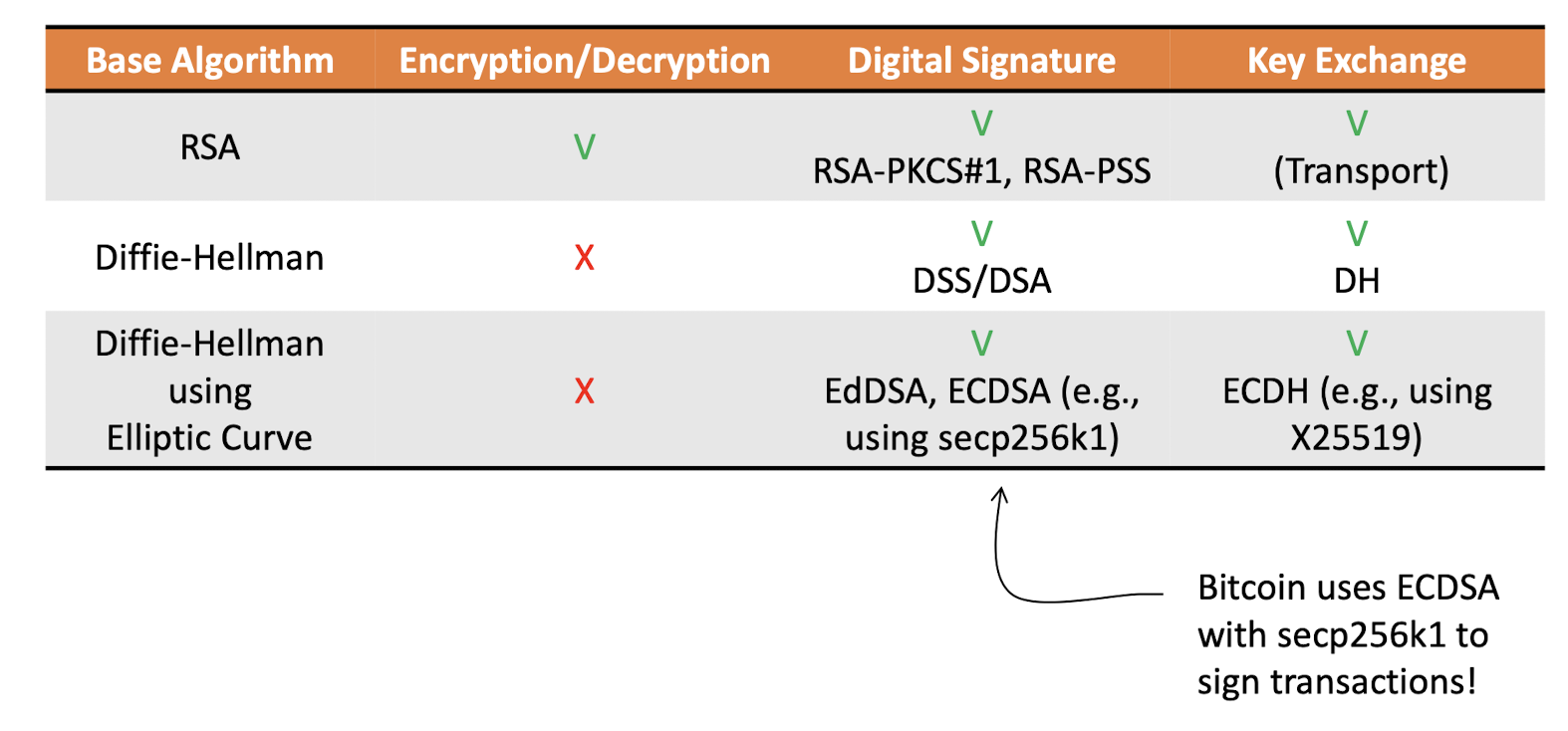

Public-key cryptosystems can be classified into three categories:

- Encryption/decryption

- The sender encrypts a message with the recipient’s public key

- Digital signature

- The sender “signs” a message with its private key

- Key exchange

- Two sides cooperate to exchange a session key

- Encryption/decryption

Some algorithms are suitable for all three applications, whereas others can be used only for one or two

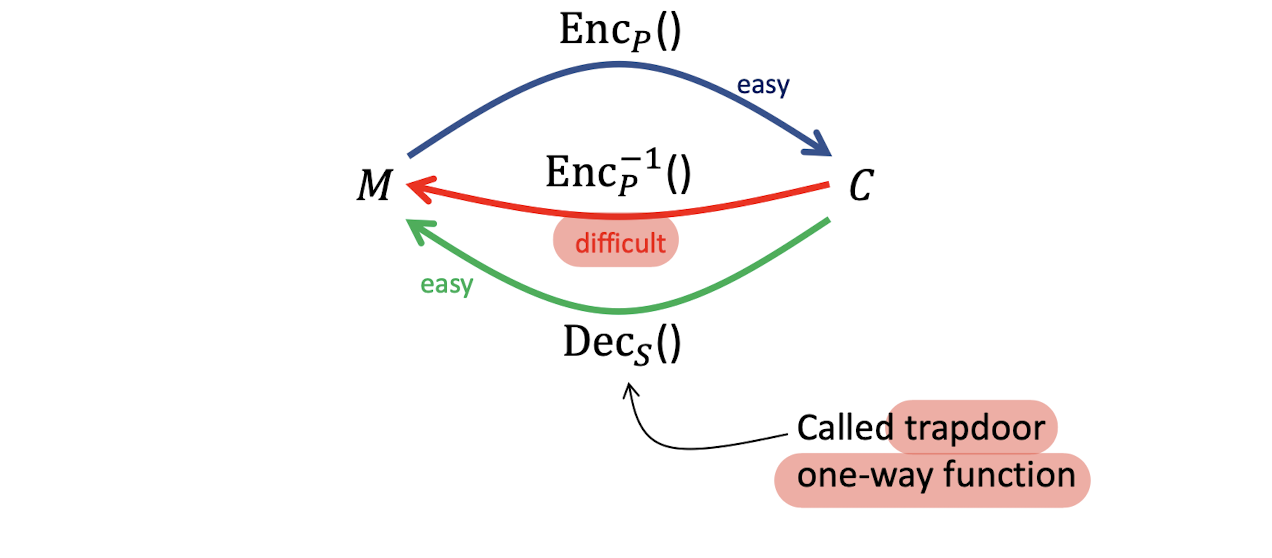

6.1.3 Public key (asymmetric) encryption

- Formally, each party has a pair of keys: a public key $P$, and a secret (or private) key $S$,

- $Dec_S(Enc_P(M))=M$

6.1.4 Key pair generation

- $sk$ must remain secret to the signer

- Secret ley, random number, chosen, e.g., ~256bits

- $pk$ is the public key, it is meant to be distributed

- Public key, random-looking number (or set of numbers), an identity

- $KeyGen$ easy to compute

- Public, deterministic, one-way function

6.1.5 Public key encryption example

6.1.6 Encryption/decryption, which key?

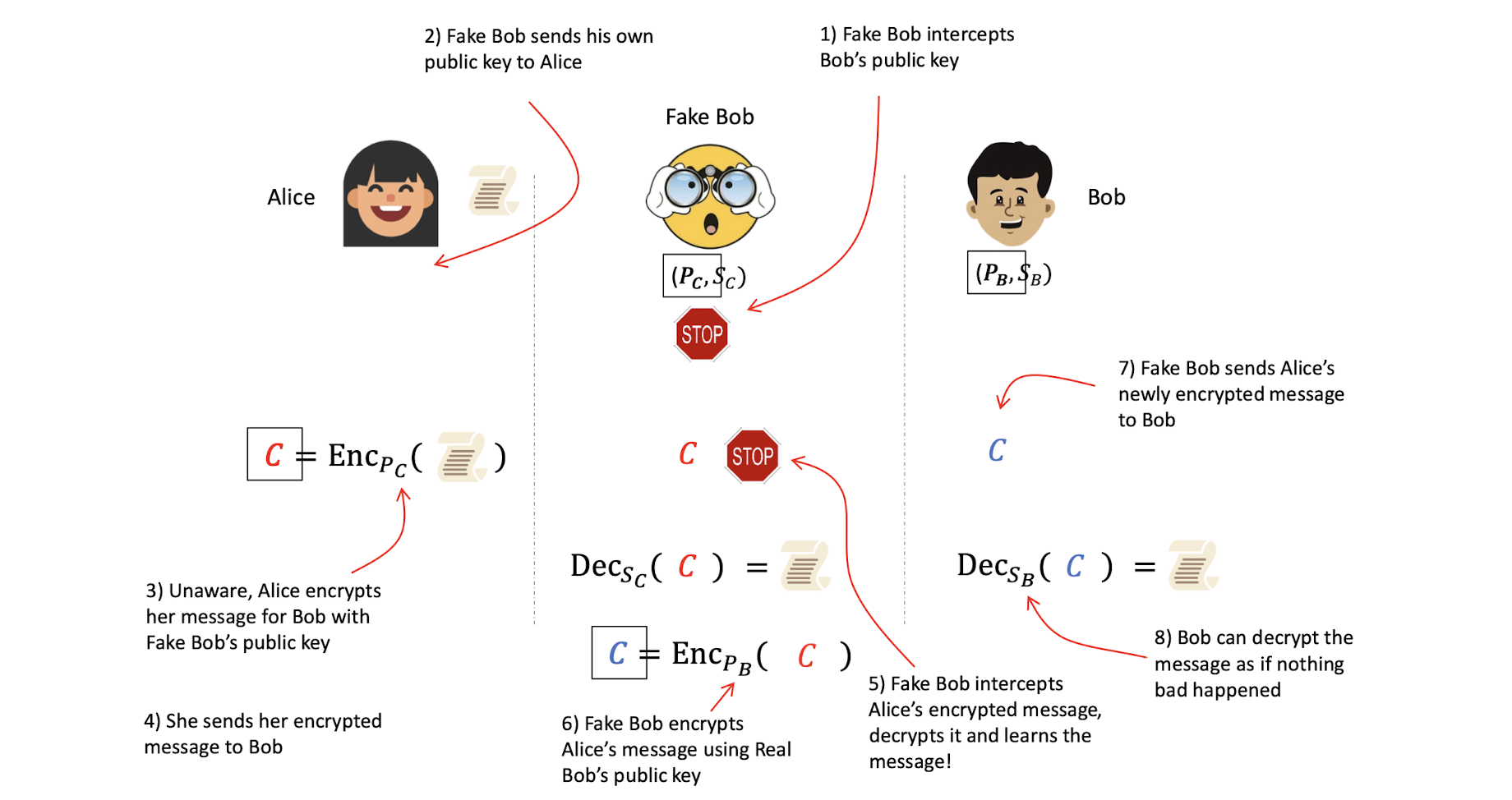

6.1.7 Man-in-the-middle attack

6.2 Signing

- $\sigma$: signature, random-looking number (or set of numbers)

- $m$: message to be signed, any size*, could be large

- $sk$: secret key, bound to who is signing

- $r$: randomness, makes the signature unique even on the same message

- $sign$: sign is deterministic

- Despite $r$: geiven the same {m, sk, r}, same output

6.2.1 Signature verification

- $m$: message that was signed

- $\sigma$: signature to verify, includes $r$ somehow

$pk$: public key from the person who signed

Unlike encryption that “hides” the message, signing does not hide anything, requires the original message to verify signature

- Message is public

- But the message can be a ciphertext

- ==Alice sign the message (or C), Bob verify the received message (or, C), to check whether the message is sent from Alice, not the Fake Bob. But it is not solve the problem of Fake Bob extract the message from the real bob.==

6.2.2 Different public-key cryptosystems

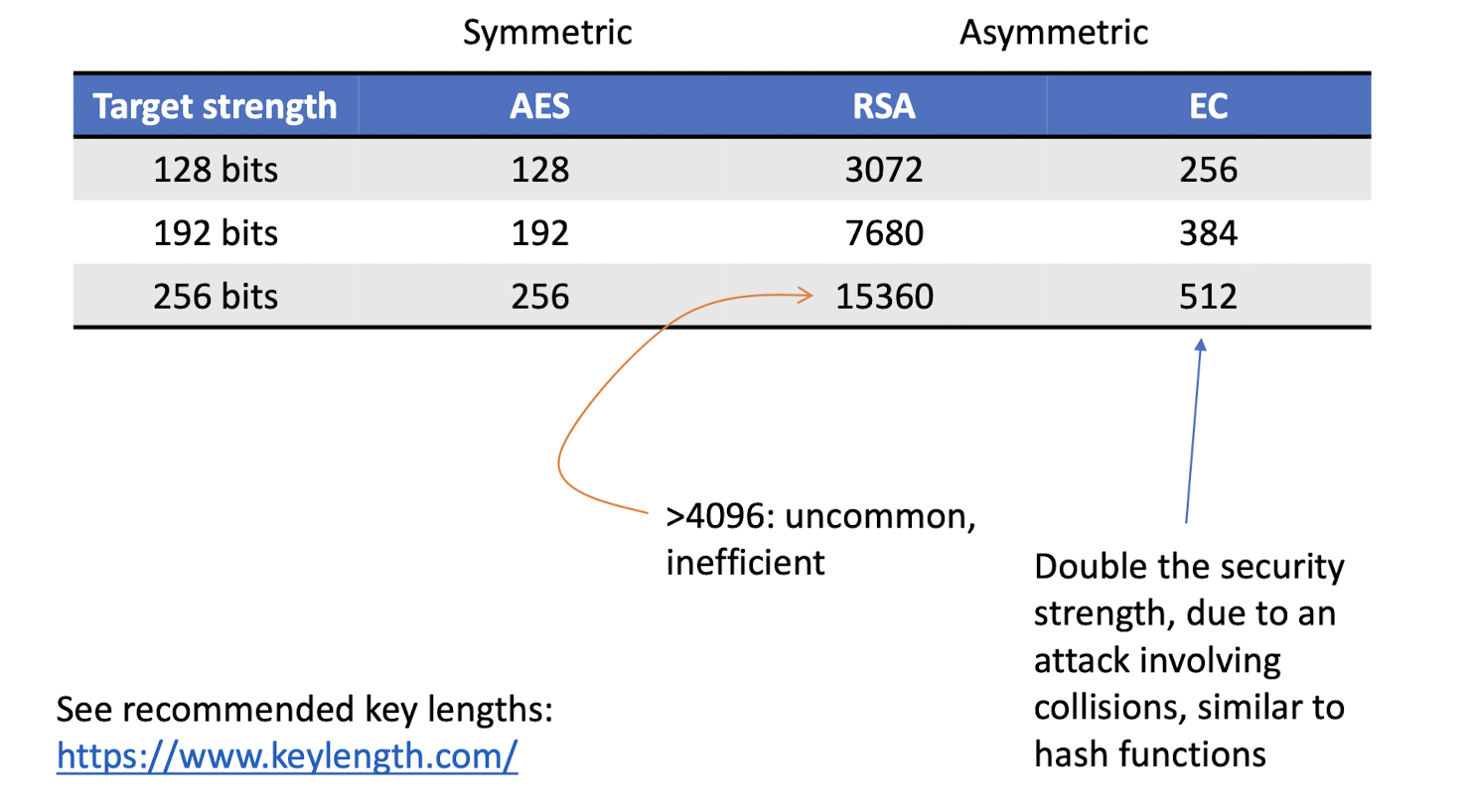

6.2.3 Security strength vs. key size

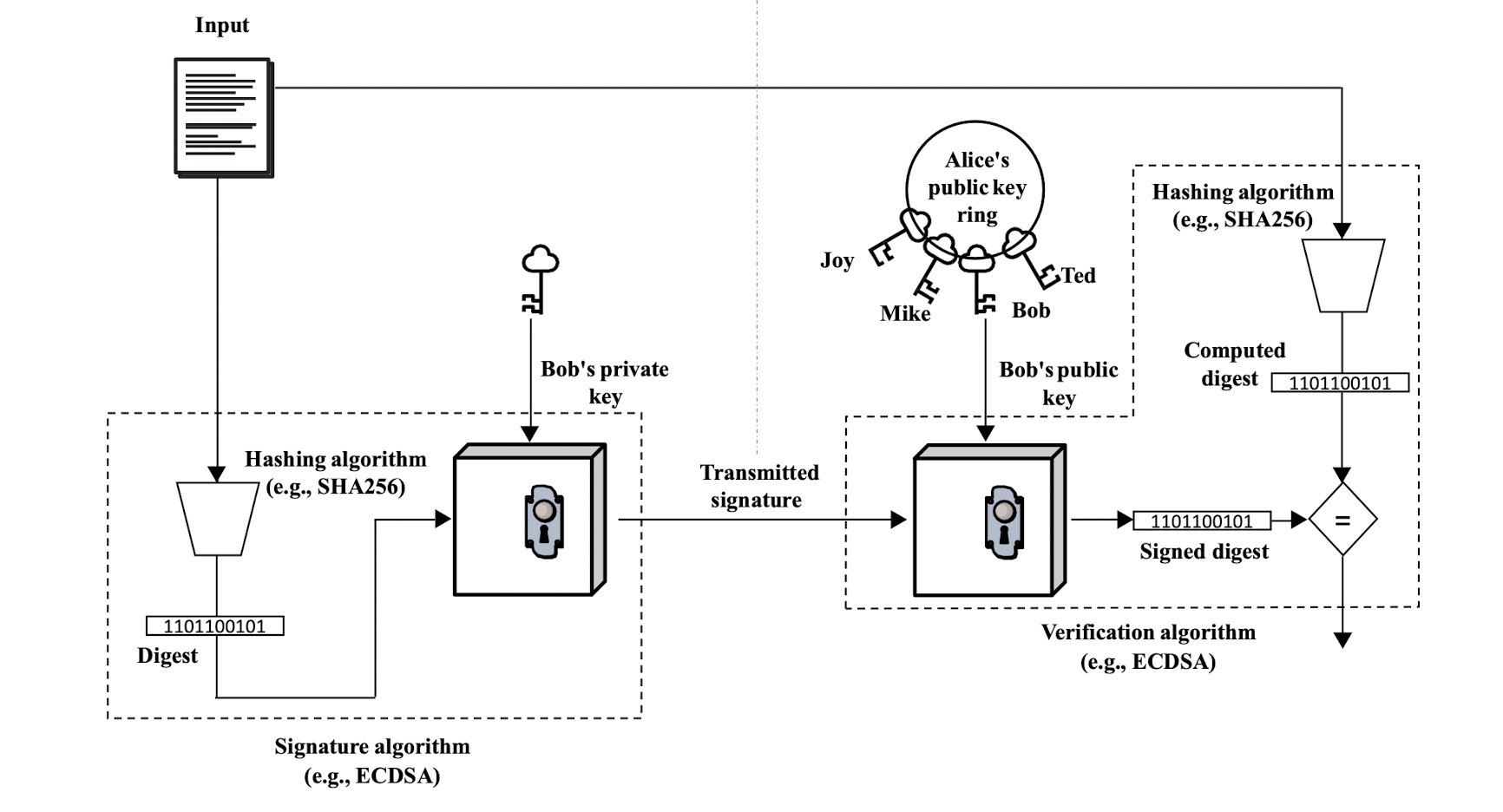

6.2.4 Hash & sign

message of any size because of one trick

- Signature algorithms work on a finite field, where input size is limited

- First, take a hash of the message, then sign it

- Works if hash function is collision resistant

- Equivalent to signing the entire message

- So in practice: $\sigma = sign(H(m), sk, r)$

- Could sign something you don’t know, except its hash…

- If you find a (weak) collision on H, you could obtain a signature on the first message, and automatically have the second one signed as well!

6.2.5 Signing/verifying

6.2.6 Digital signature functions

- To provide authentication of digital messages or documents

- To provide non‐repudiation

- meaning that the signer cannot successfully claim they did not sign a message, while also claiming their private key remains secret

- To provide integrity

- if a message is digitally signed, any change in the message after signature invalidates the signature

6.3 Diffie-Hellman Key Exchange

6.3.1 DH

- Referred as “D-H Key Exchange”

- W. Diffie and M. Hellman (1976)

- Enables two parties to ==jointly establish a shared secret key==

- ==no prior shared secret==!

- Weakness: no authentication

- Based on exponentiation in a finite field (modulo a prime), $Z^{*}_p$

- Easy for legitimate parties to compute

- Security relies on the difficulty of computing discrete logarithms (similar to factoring)

- Hard for adversaries to compute

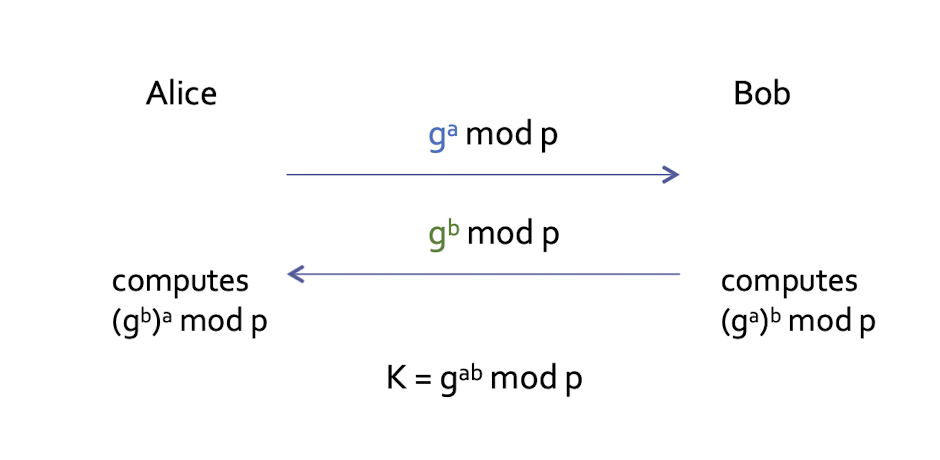

6.3.2 DH key exchange

Prior to the key exchange, Alice and Bob agree on a set of parameters:

$p$, a large ==prime integer==

$g$, a primitive root mod 𝑝𝑝 (also called a ==generator==)

- meaning a number whose powers successfully generate all elements mod $p$

- for every number $x$ between 1 and $p − 1$ , there is a power $k$ of $g$ such that $x=g^k \; \text{mod}\; p$

==$p,g$: both are public==, can be used by all system users

[Example]

==Session key== is then obtained by both parties calculating:

- $K_{AB} = g^{ab}\; \text{mod}\; p$

Alice can compute this by:

- $(g^b)^a \; \text{mod}\; p$

Bob can compute this by:

- $(g^a)^b \; \text{mod}\; p$

Eve needs either 𝑎𝑎 or 𝑏𝑏 to compute this key!

- $g^ag^b \; \text{mod}\; p = g^{a+b}\; \text{mod}\; p$

6.3.3 Is DH secure?

We don’t know for sure!

The Discrete Logarithm Problem (DLP) consists of finding the $y$ for which $g^y=x$ given a base number $g$ within some group $Z^{*}_p$, where $p$ is a prime number, and given a group element $x$

- We think it is pretty hard to solve

- Computational Diffie–Hellman (CDH) problem: compute $g^{ab}$ without some group $Z^{*}_p$, given only the public values $g^a$ and $g^b$, and not any of the secret values $a$ or $b$

- Equivalent to DLP?

- We think it is has hard as DLP

6.3.3 Man-in-the-middle on DH

- Previous DH key exchange is called anonymous because public parameters $g^a$ and $g^b$ are not authenticated

- In practice, DH should be authenticated

- See in TLS

6.3.4 Establishing authenticity

- Digital signatures provide integrity, authentication, and non-repudiation, but we still have a problem

- How do we obtain someone’s public key over a network, in a way that we can trust it belongs to the claimed entity?

6.4 TLS

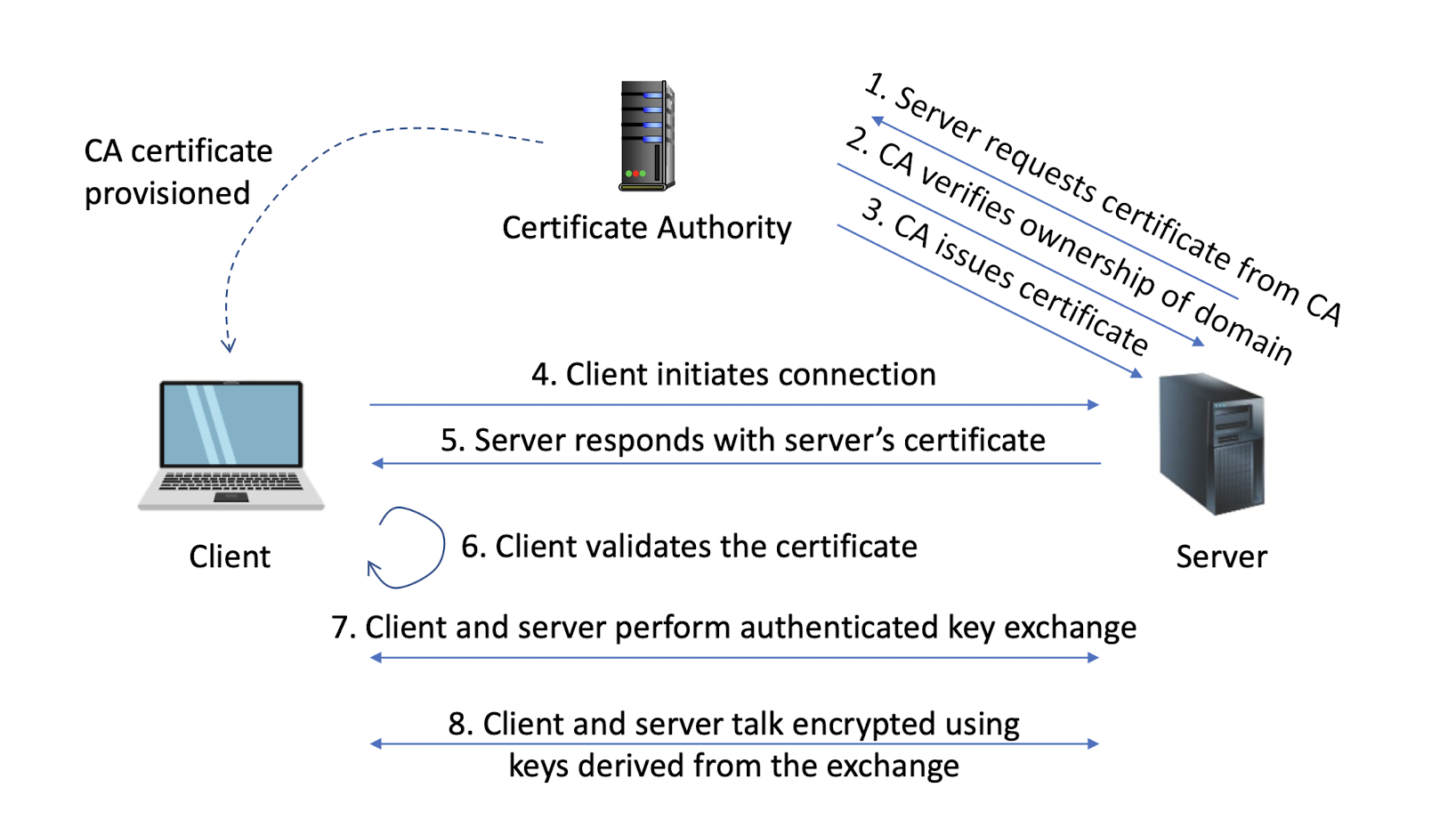

6.4.1 Introduction about TLS

When the Internet was originally designed, little thought was given to security. As a result, the core communication protocols are inherently insecure and rely on the honest behavior of all involved parties. That might have worked back in the day, when the Internet consisted of a small number of nodes—mostly universities—but falls apart completely today when everyone is online.

SSL and TLS are cryptographic protocols designed to provide secure communication over insecure infrastructure. What this means is that, if these protocols are properly deployed, you can open a communication channel to an arbitrary service on the Internet, be reasonably sure that you’re talking to the correct server, and exchange information safe in knowing that your data won’t fall into someone else’s hands and that it will be received intact.

6.4.2 Security properties ensured by TLS

- Confidentiality

- Message authenticity

- Authentication:

- ==Server authentication==

- ==Client authentication==

- SSL/TLS do not enforce:

- Non—repudiation

- Availability

6.4.3 Plaintext vs. ciphertext

6.4.4 Very simplified overview of TLS

6.4.5 History

- In its early years (1994), TLS was called SSL

- Secure Socket Layer

- Often, the name is still used, but this is incorrect

- Since 1999, the protocol is named TLS

- Transport Layer Security

- The latest standardized version is TLS 1.3

- TLS 1.2 is still widely used, lower versions are considered insecure

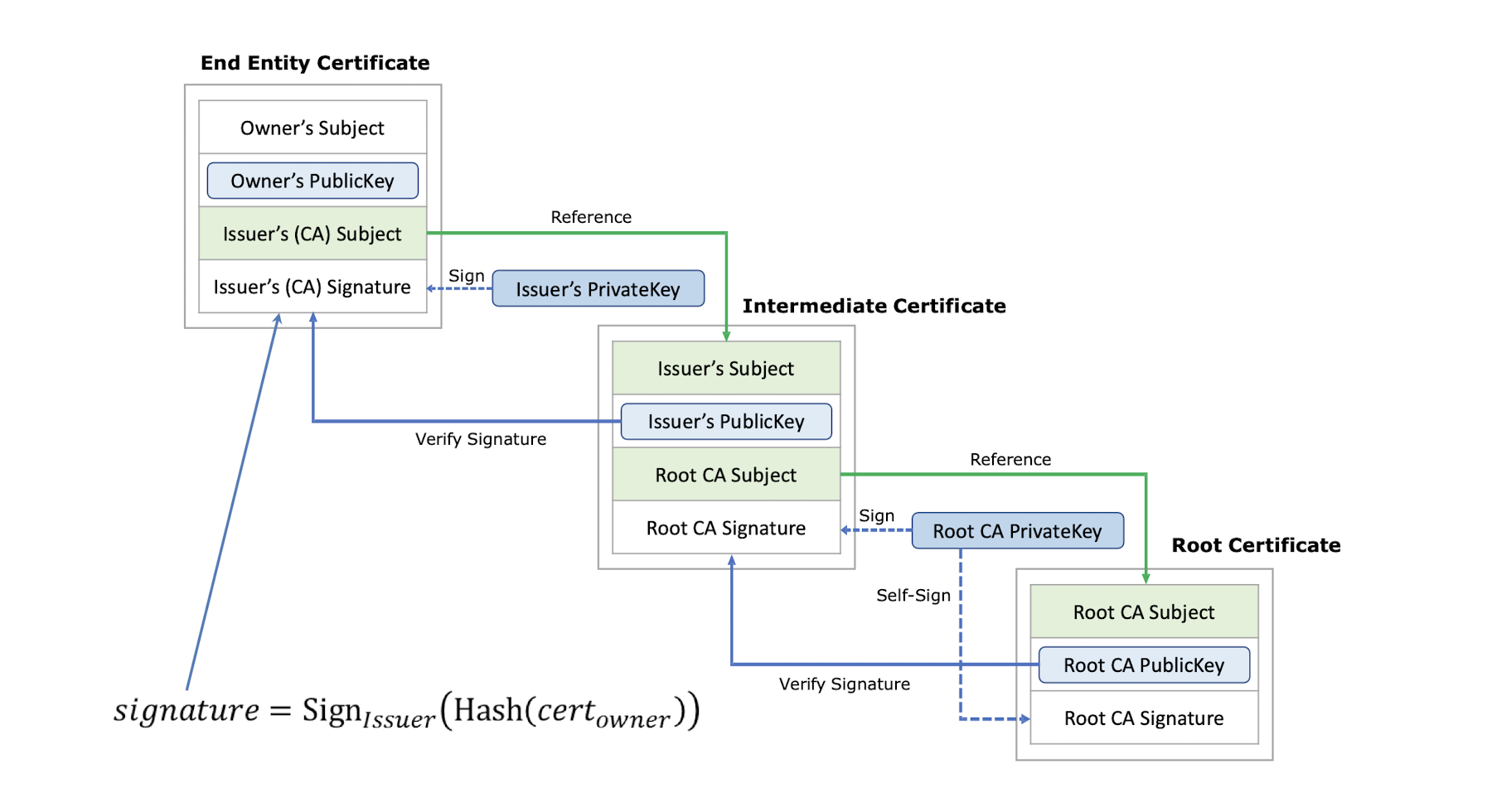

6.4.6 Certificates

- Certificate: set of public keys and identification information

- Typically signed by some other source, signatures included in certificate

- Signatures provide integrity and authenticity guarantees

- Source is normally a trusted third party (also called: certificate authority (CA))

- Root certificates are self-signed

- Example: X.509 certificates, PGP (“web of trust”, no trusted third parties)

6.4.7 Certificate Authorities

- A Certificate Authority is in charge of issuing public-key X.509 digital certificates that associate a public key with an identified owner

- Data structure that ==binds== a public key to a named Subject , by means of a digital signature generated by a trusted third party called a Certification Authority (CA)

- CA responsible to verify identity before issuing certificate

- Certificates on the web are binding a domain name

- TLS is used to secure communications (HTTPS or other protocols)

- Certificates often verify a domain name

6.4.8 Chain of Trust

6.4.9 Certificates vs. MITM

- Recall MITM attacks, where the recipient (Bob) has been fooled into thinking a message came from the claimed sender (Alice)

- If a trusted third party signs Alice’s certificate, Bob has some extra assurance that the certificate he received is actually Alice’s, and the signed messages verifiable with the certificate are truly from Alice

- Notice how we are moving the authentication problem of each certificate to a trusted third party

- Is this very different from what we do with e.g., passports?

6.4.10 Self-signed certificates

- Self-signed certificates: certificates issued by the entity it represents

- Who to verify whether it is legitimate?

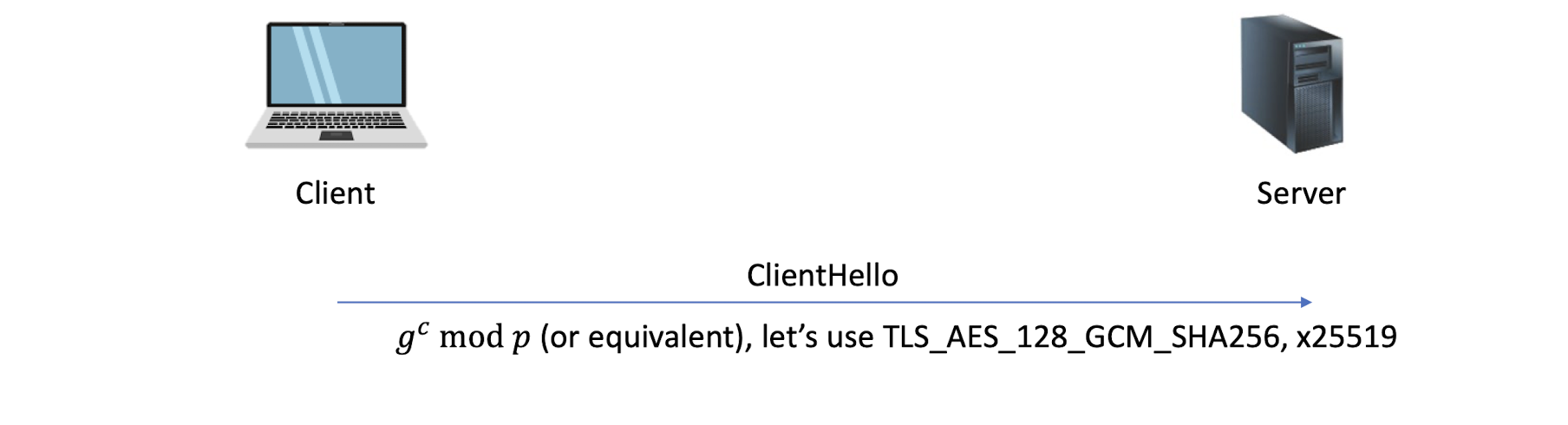

6.4.11 Handshake Protocol

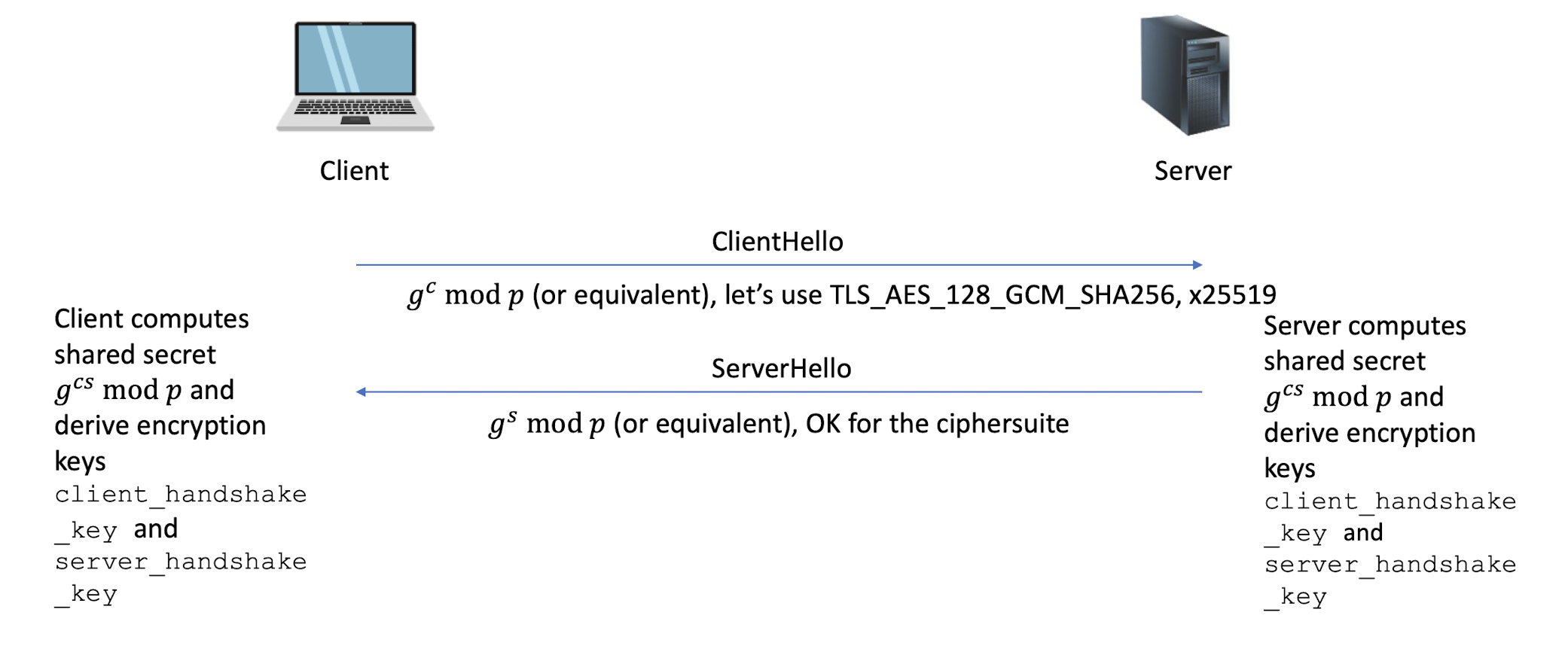

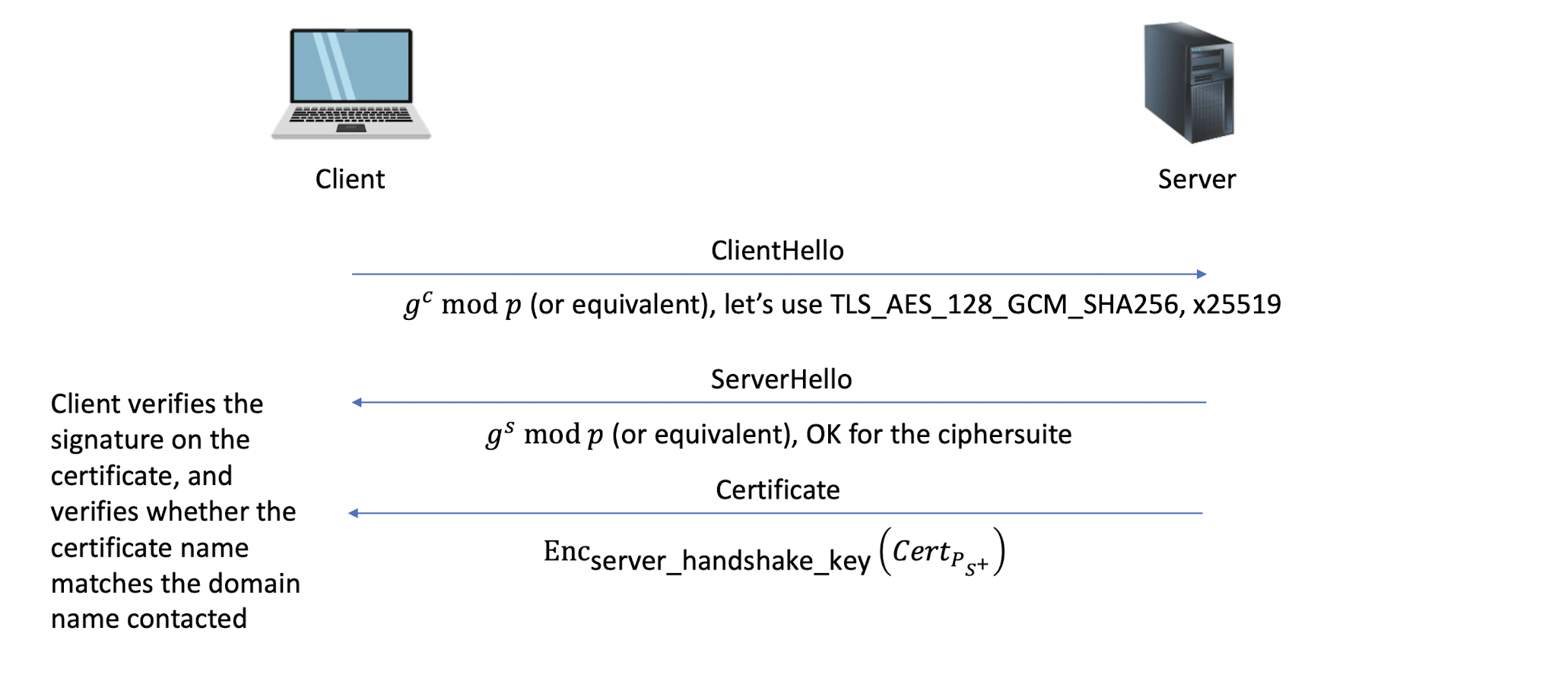

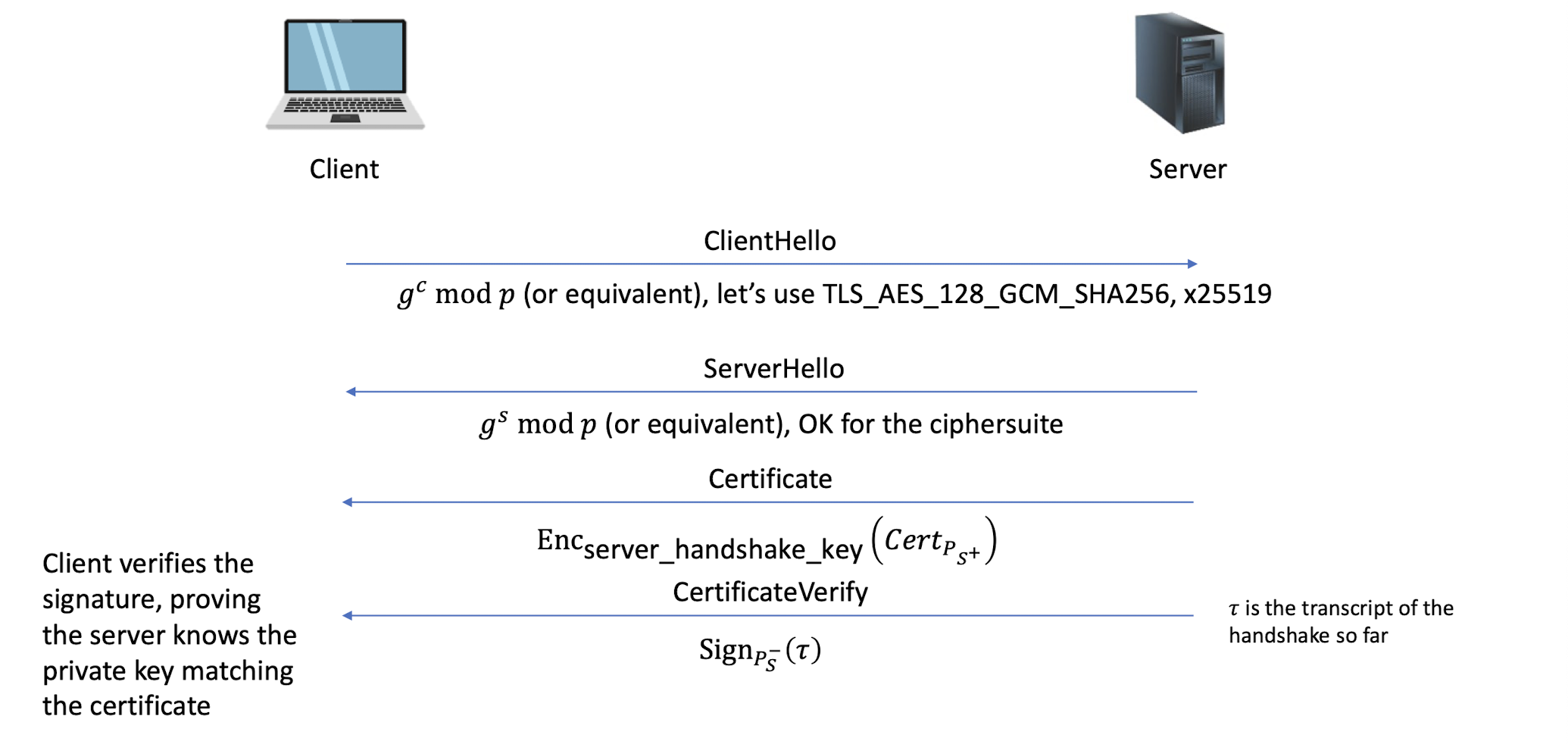

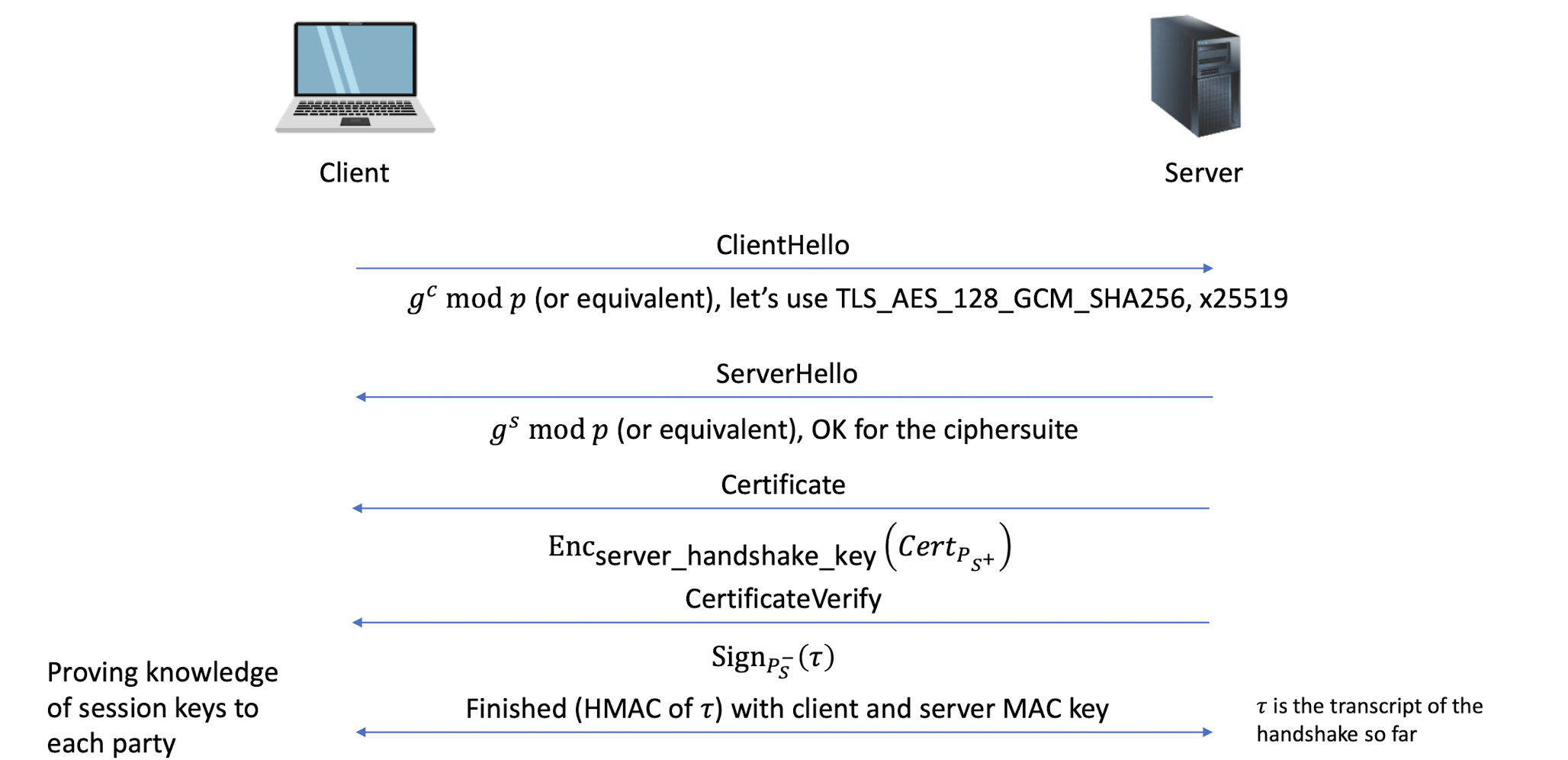

The handshake is the most elaborate part of the TLS protocol, during which the sides negotiate connection parameters and perform authentication

The result of which is a shared secret ( master secret ) that is derived into multiple keys for (authenticated) encryption

6.4.12 TLS 1.3 handshake simplified

7. Web Security

7.1 Web Vulnerabilities

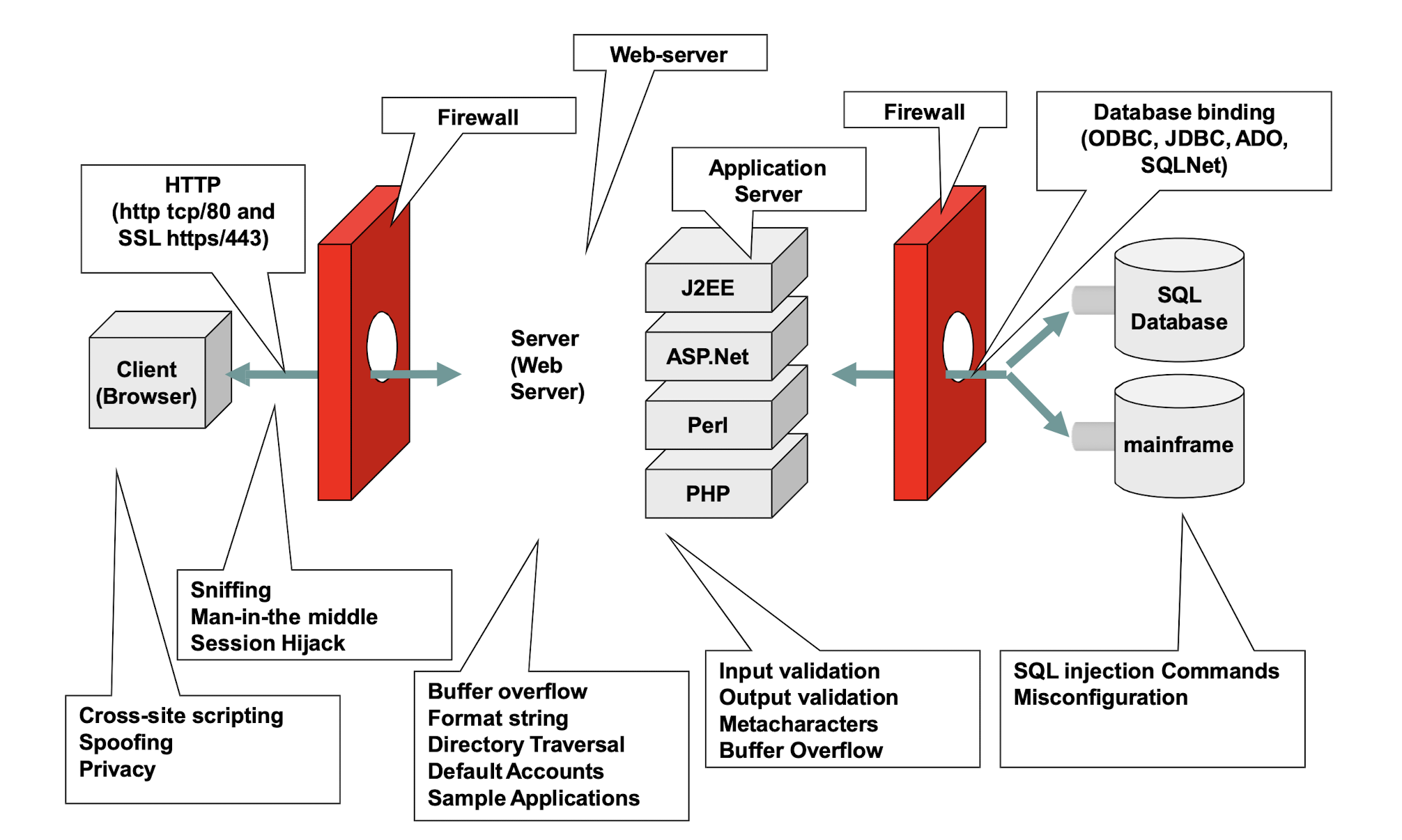

7.1.1 Developing a Secure Web Application

7.1.2 OWASP Top 10 – 2021

Broken Access Control

Cryptographic Failures

Injection (lack of input validation, sanitize)

Insecure Design

Security Misconfiguration

Vulnerable and Outdated Components (Use the defense-in-depth)

Identification and Authentication Failures

Software and Data Integrity Failures

Security Logging and Monitoring Failures

Server-Side Request Forgery

7.2 HTTP Authentication

7.2.1 HTTP 101

Visit http://example.com/

1 | |

Server response:

1 | |

7.2.2 Cookie 101

The server can ask the client (browser) to remember cookies

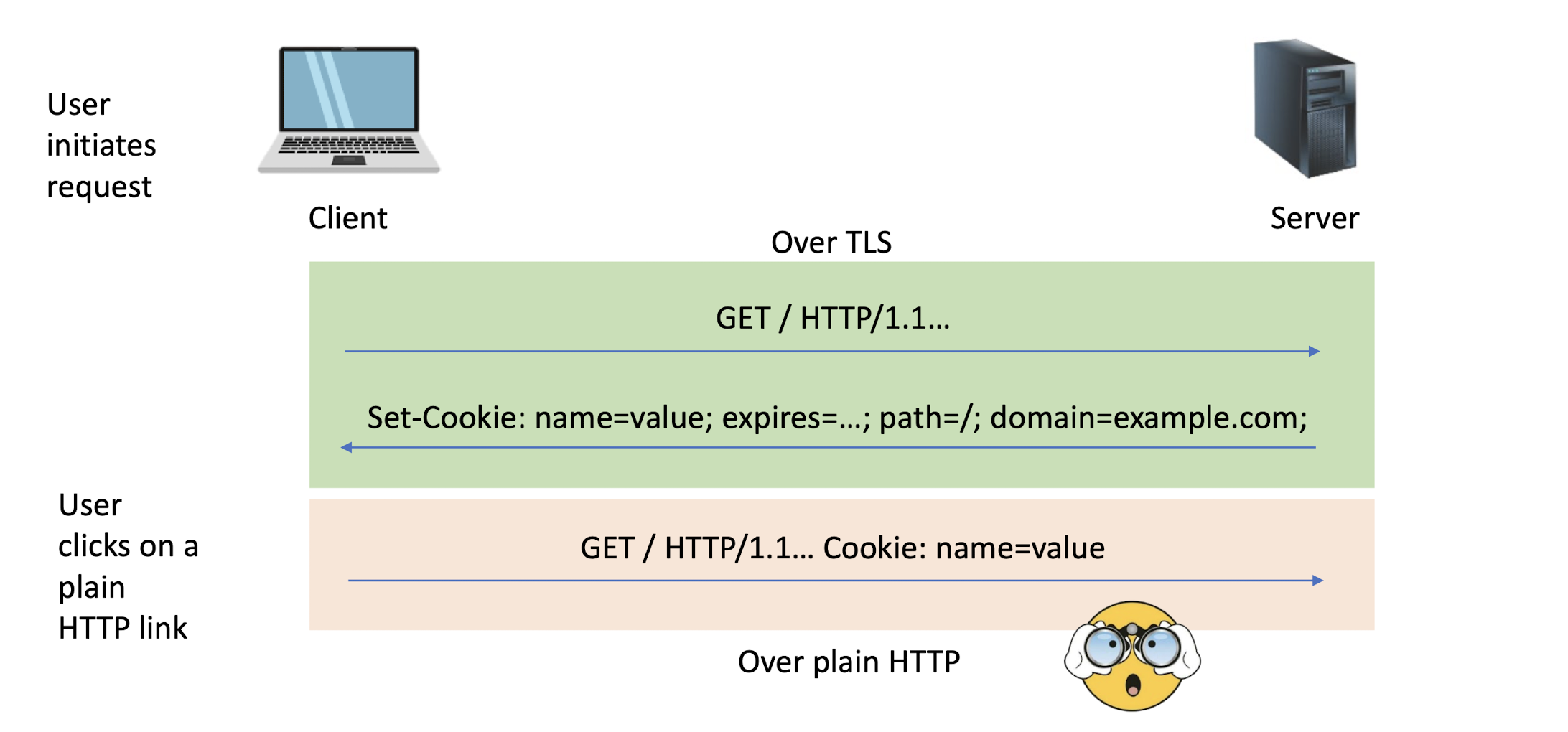

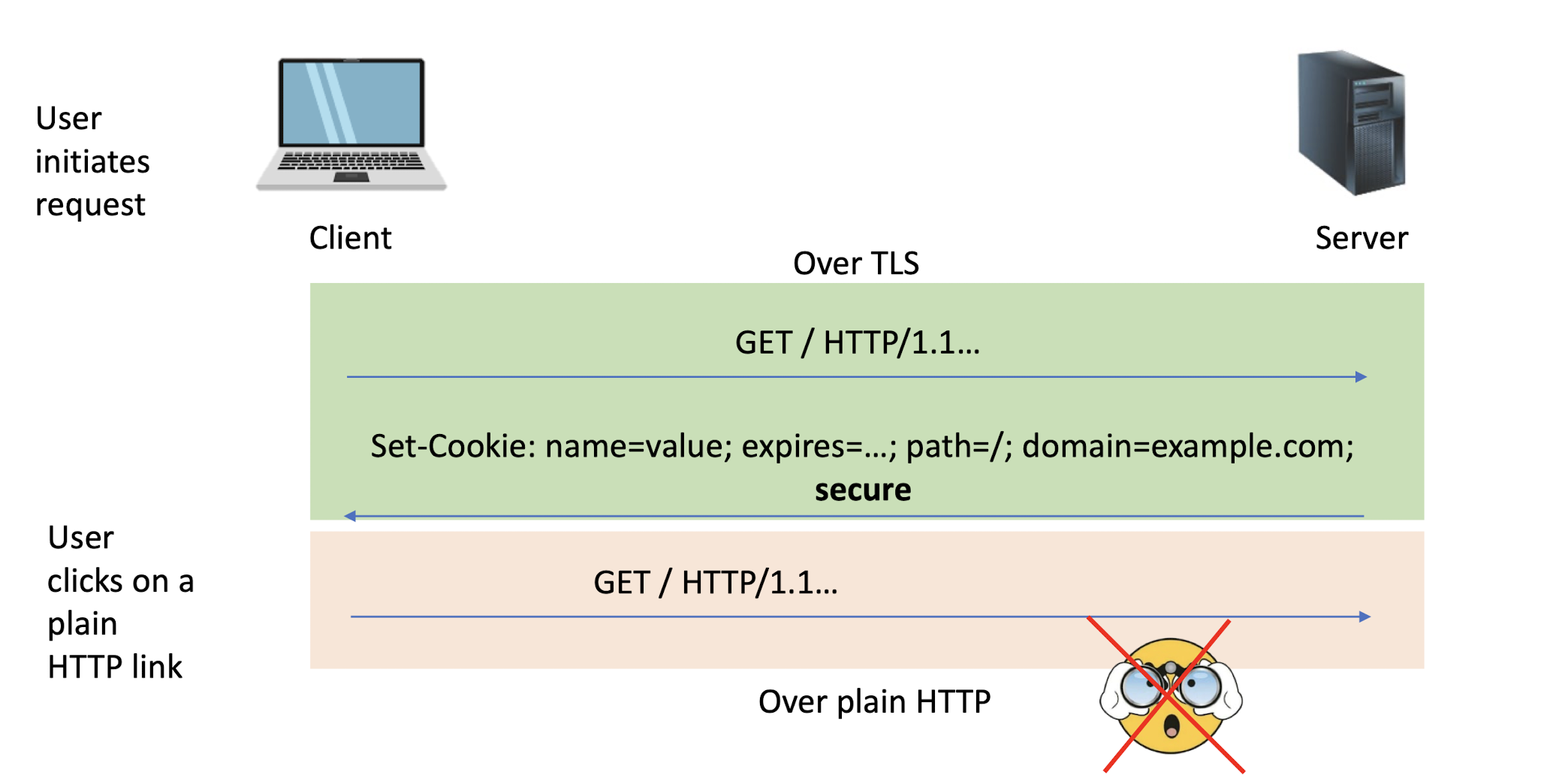

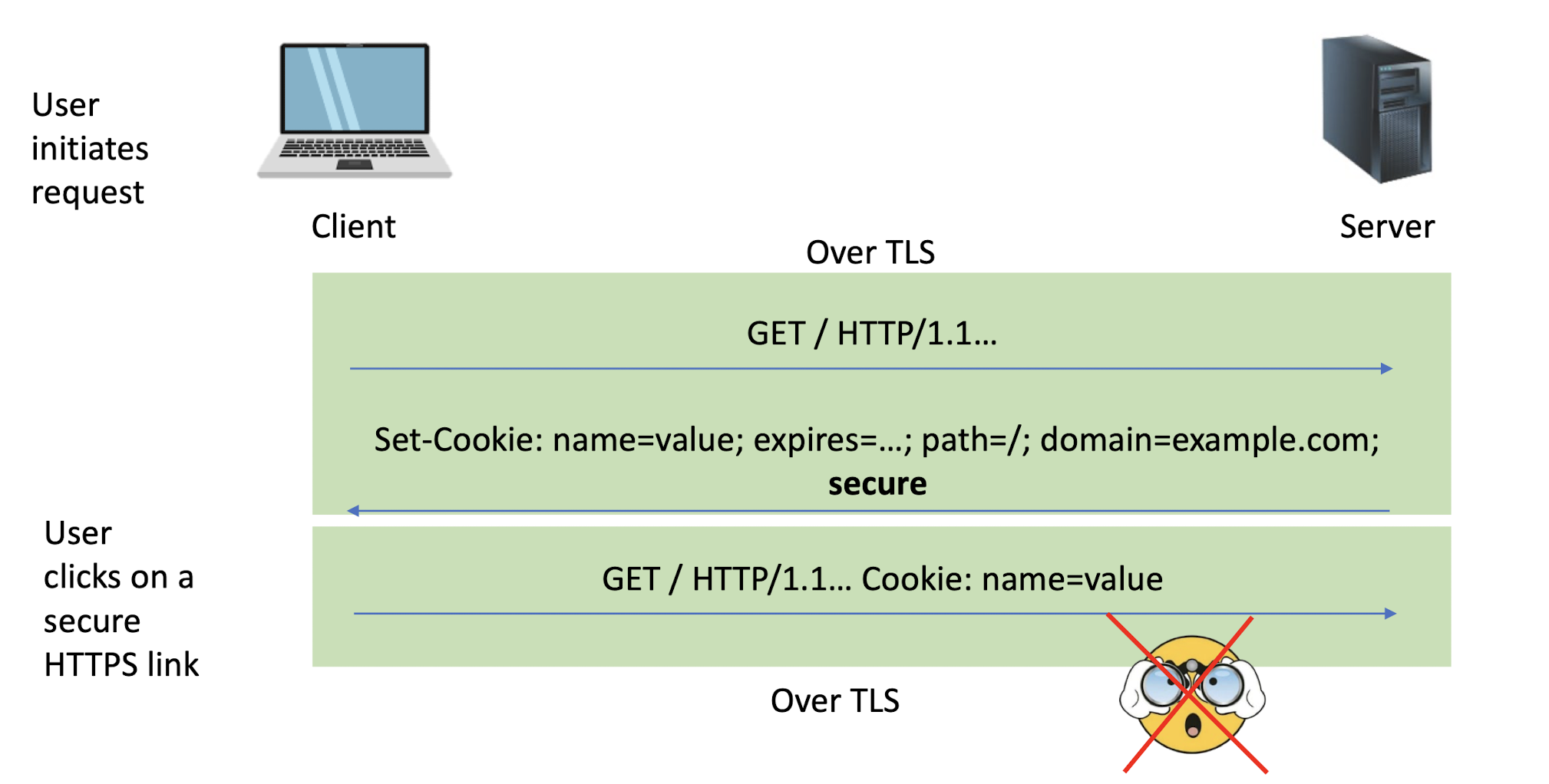

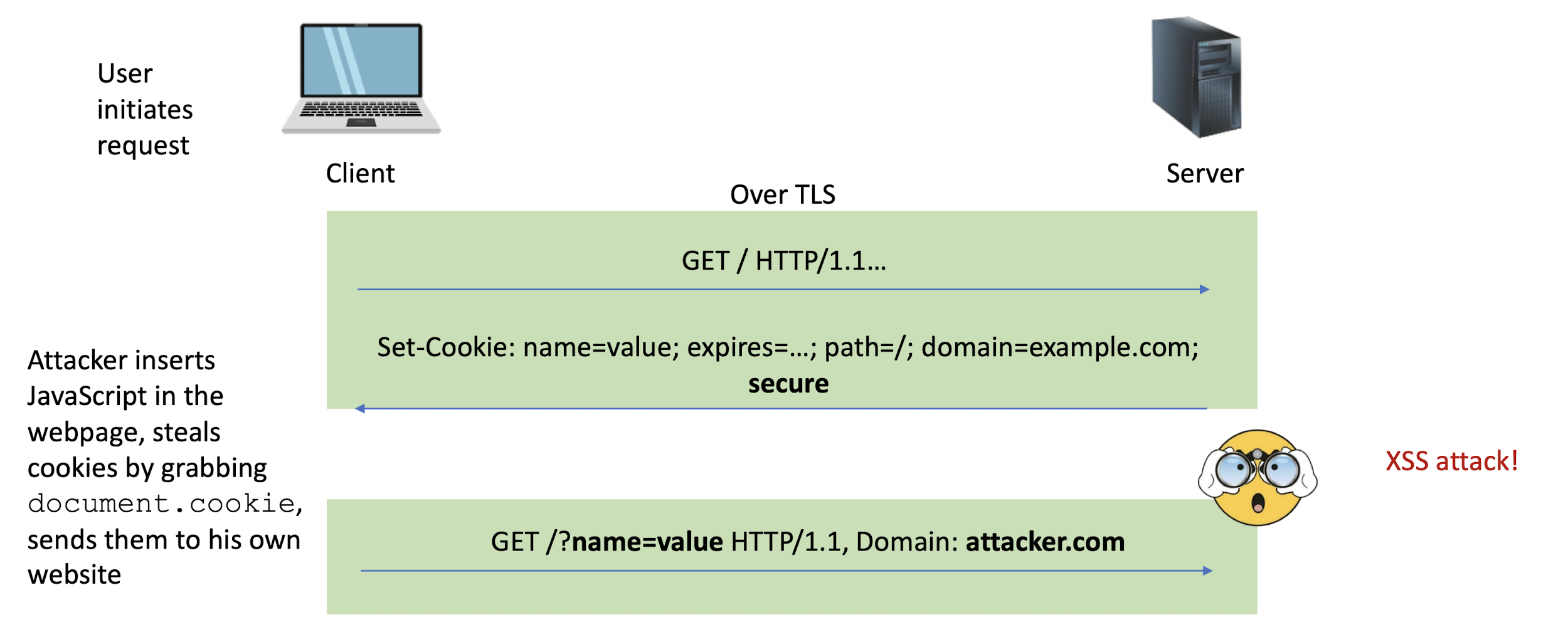

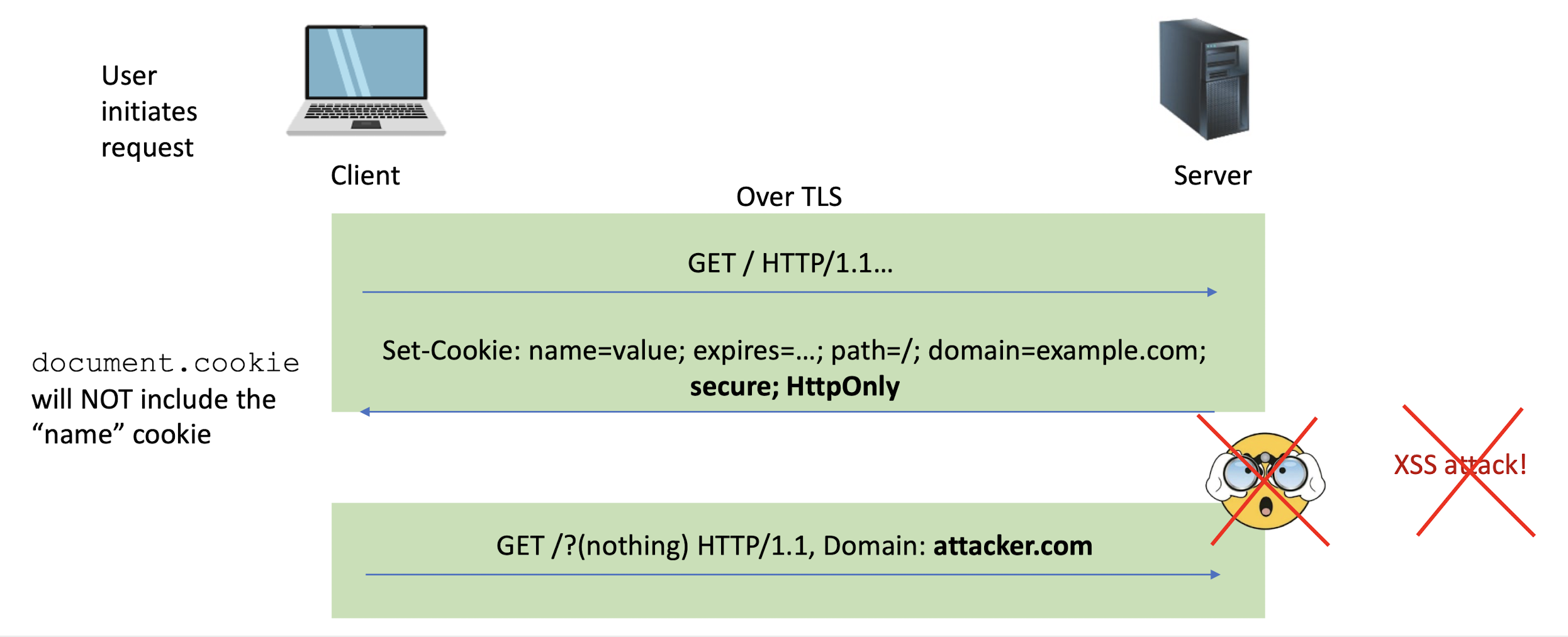

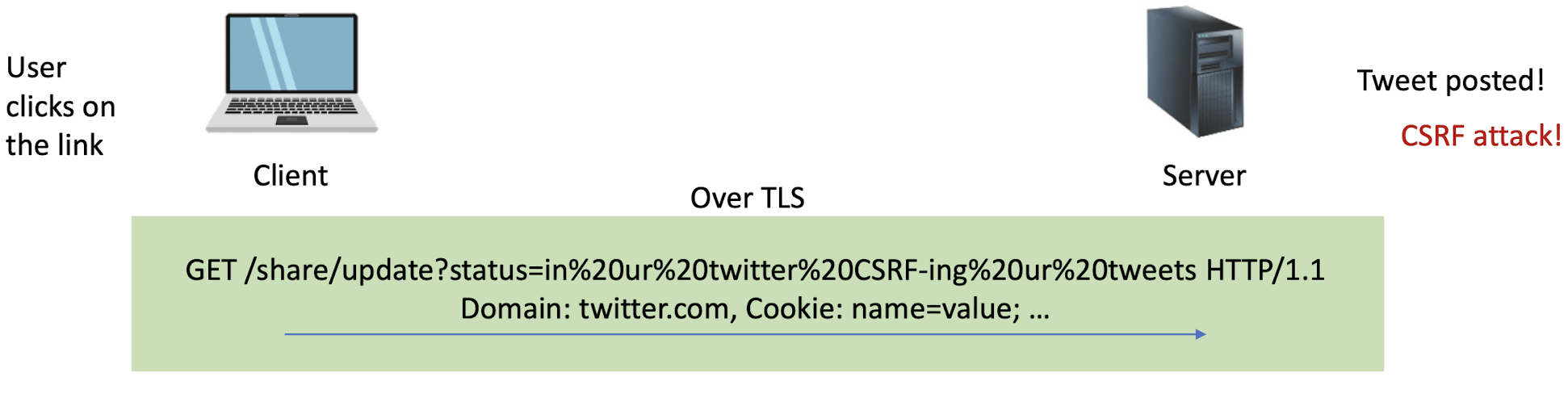

Set-Cookie: PHPSESSID=7618680...33ee; expires=Thu, 25-Apr-2024 03:02:17 GMT; path=/; domain=example.com; secure; HttpOnly

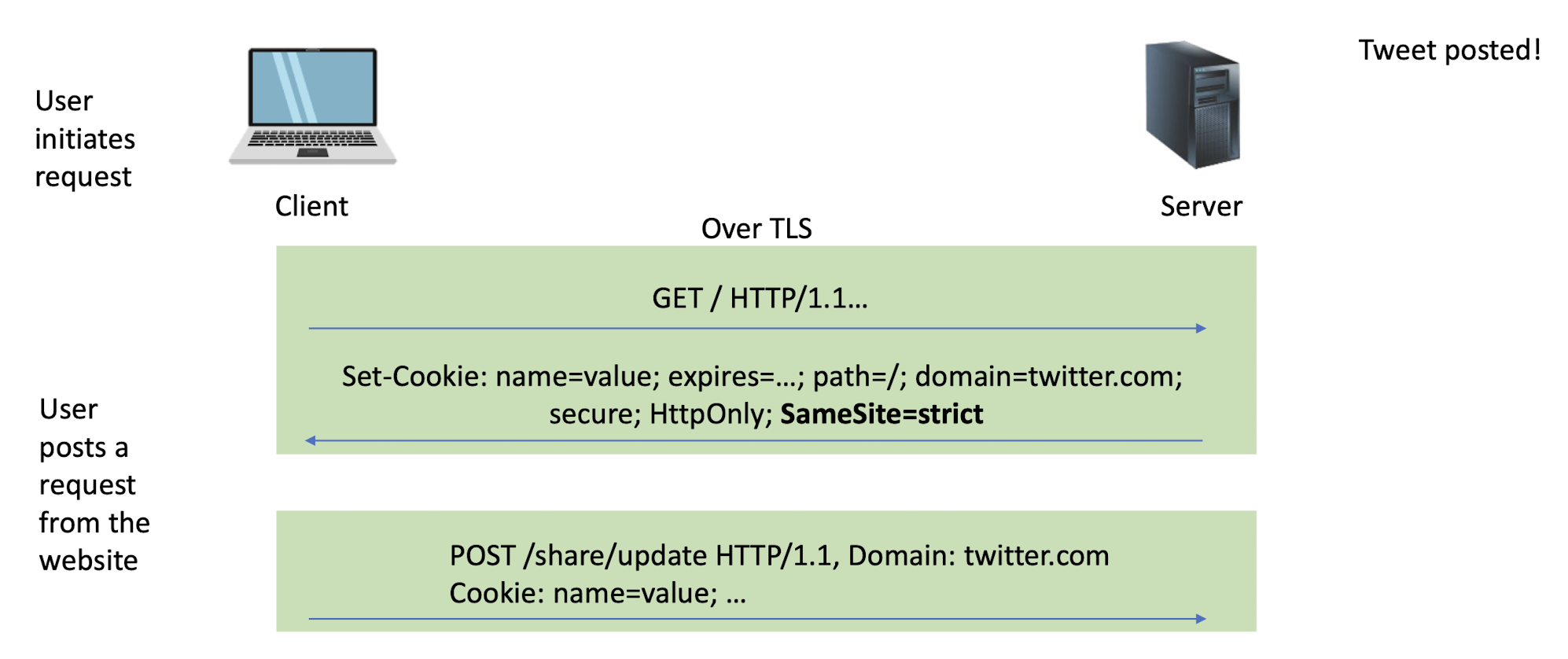

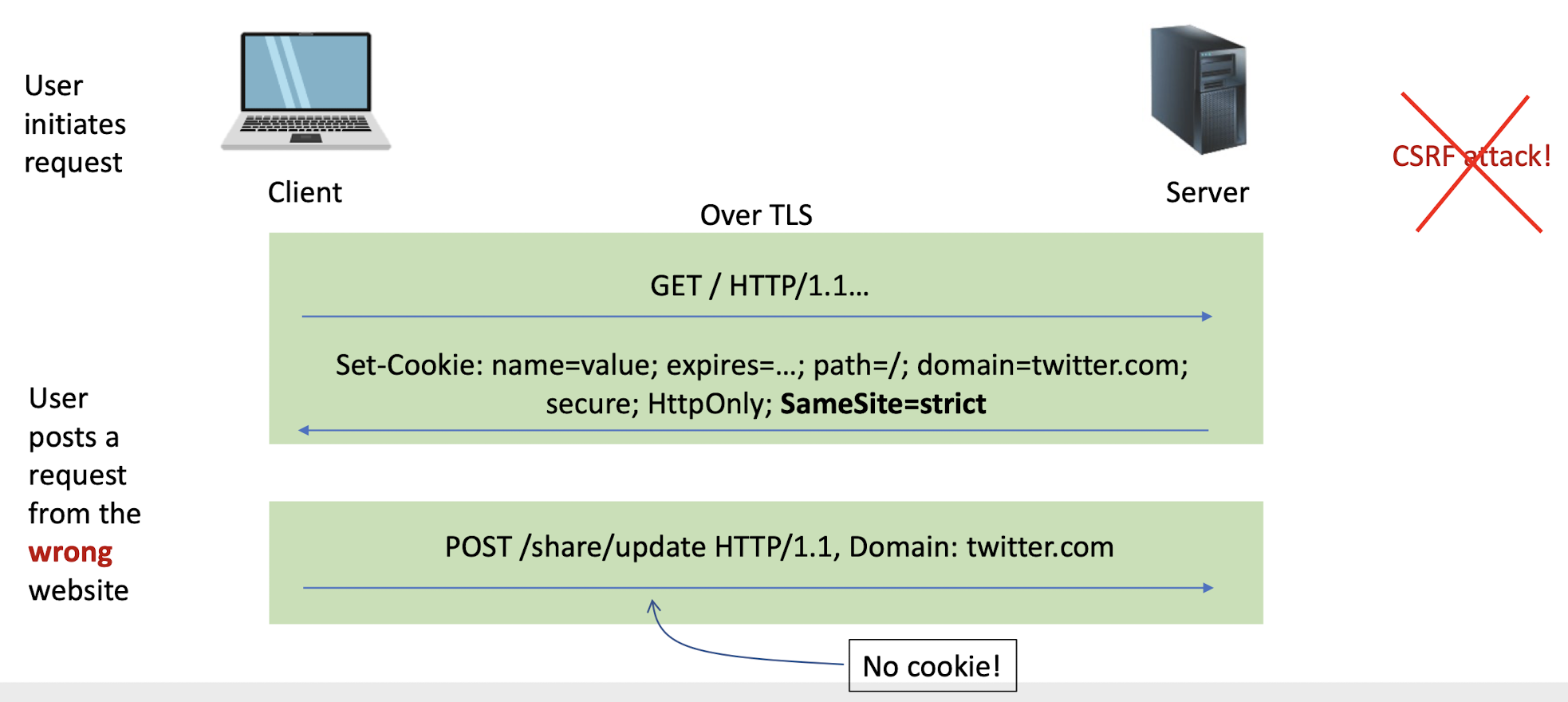

Every new web request to the server will include cookies (provided the request goes to the same path, cookie is not expired, etc.)

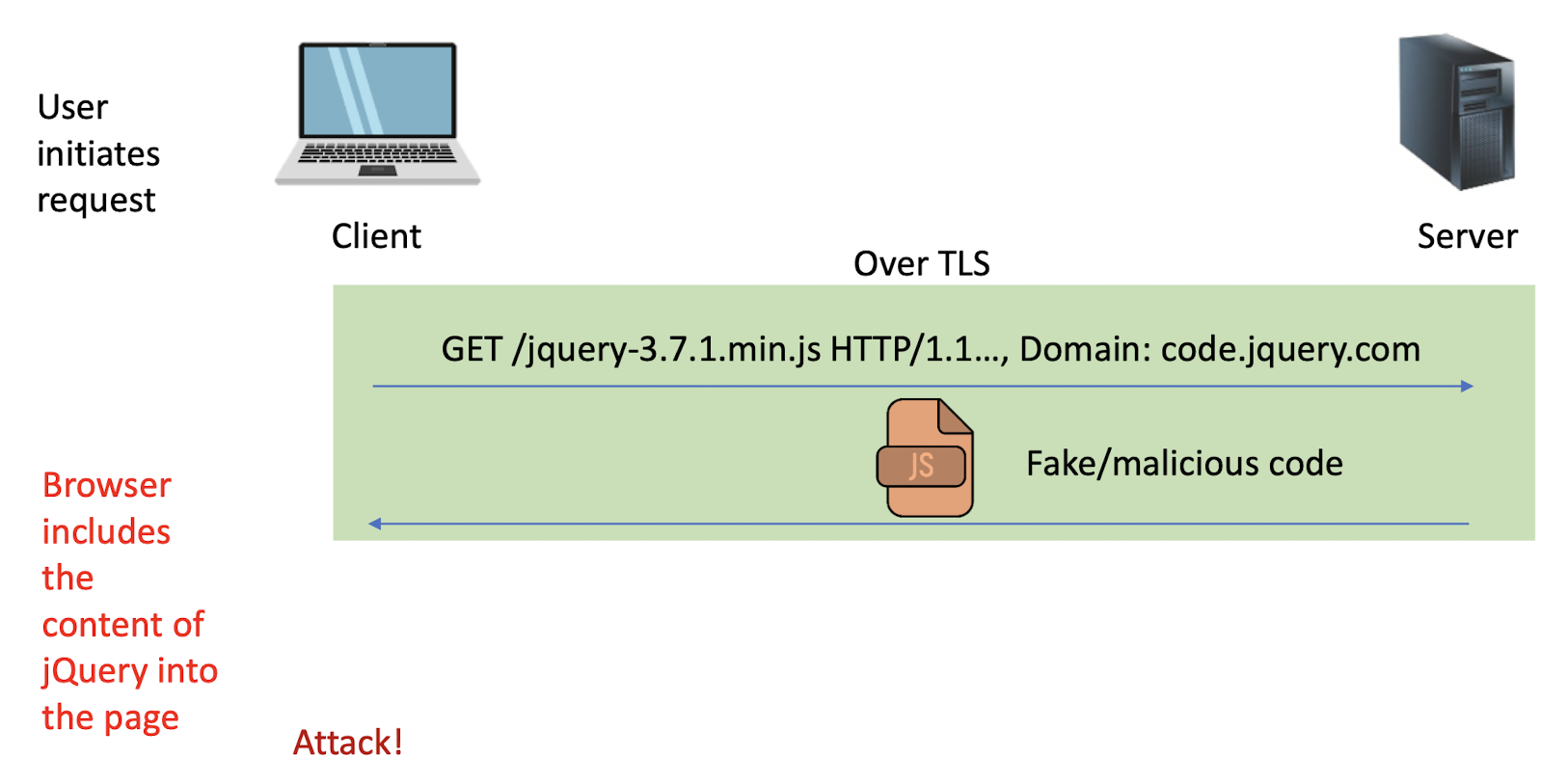

Cookie: PHPSESSID=7618680...33ee; cookie2=value2; ...